Prompt Debugging Is the New Stack Trace

What breaking AI workflows taught me about the future of engineering.

In traditional software development, when something breaks, you look at the logs. You dig into the stack trace, inspect variables, step through the debugger, isolate the bug.

In AI-powered applications, that world is gone.

When an LLM fails, there’s often no crash, no error, and no stack trace. Just a subtly wrong response. A hallucination. A weird behavior that’s technically correct but totally wrong for your user.

Debugging becomes conversational. And that changes everything.

From Code Bugs to Prompt Bugs

In our product Linkeme, we rely on prompts to:

- Generate social media content

- Choose relevant CTAs

- Compose visual overlays

And yet, some of the most frustrating bugs we faced early on came not from bad code but from poorly constructed prompts.

For example:

"Generate a LinkedIn post for this article: 'AI agents will replace internal tools.'"

Sounds fine? Not really. The model didn’t know:

- Who the target audience was

- What tone to use

- Whether to include hashtags, emojis, or links

The output was generic and missed the mark. So we debugged it — not with breakpoints, but with iterations.

What Prompt Debugging Looks Like

- Add role and tone:

"You are a social media expert. Generate a LinkedIn post for B2B founders. Tone: bold but professional."

- Add formatting instructions:

"...Include 3 lines max, use a punchy hook, and end with a CTA."

- Add context examples:

"Here are 2 good posts for reference: [...]"

We iterated until the model consistently produced good results. That’s prompt debugging.
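The iterations above can be sketched in code. This is a minimal illustration, not how Linkeme builds its prompts: `PromptSpec` and `build_prompt` are hypothetical names, and the idea is only that each debugging pass fills in one more field (role, audience, tone, format rules, examples) instead of rewriting the prompt string by hand.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces added during each debugging pass."""
    task: str
    role: str = ""
    audience: str = ""
    tone: str = ""
    format_rules: list[str] = field(default_factory=list)
    examples: list[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the prompt text, skipping any piece that is still empty."""
    parts = []
    if spec.role:
        parts.append(f"You are {spec.role}.")
    task = spec.task
    if spec.audience:
        task += f" The audience is {spec.audience}."
    parts.append(task)
    if spec.tone:
        parts.append(f"Tone: {spec.tone}.")
    if spec.format_rules:
        parts.append("Format: " + "; ".join(spec.format_rules) + ".")
    if spec.examples:
        parts.append(f"Here are {len(spec.examples)} good posts for reference:")
        parts.extend(f"- {example}" for example in spec.examples)
    return "\n".join(parts)


# First attempt: only the task, which is what produced the generic output.
v1 = PromptSpec(
    task="Generate a LinkedIn post for this article: "
         "'AI agents will replace internal tools.'"
)

# Later iteration: same task, with role, audience, tone, and format added.
v2 = PromptSpec(
    task=v1.task,
    role="a social media expert",
    audience="B2B founders",
    tone="bold but professional",
    format_rules=["3 lines max", "open with a punchy hook", "end with a CTA"],
)

print(build_prompt(v1))
print("---")
print(build_prompt(v2))
```

Keeping the pieces separate makes each iteration a small, reviewable change: you can see exactly which field was added between two attempts.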

Building Tools for Prompt QA

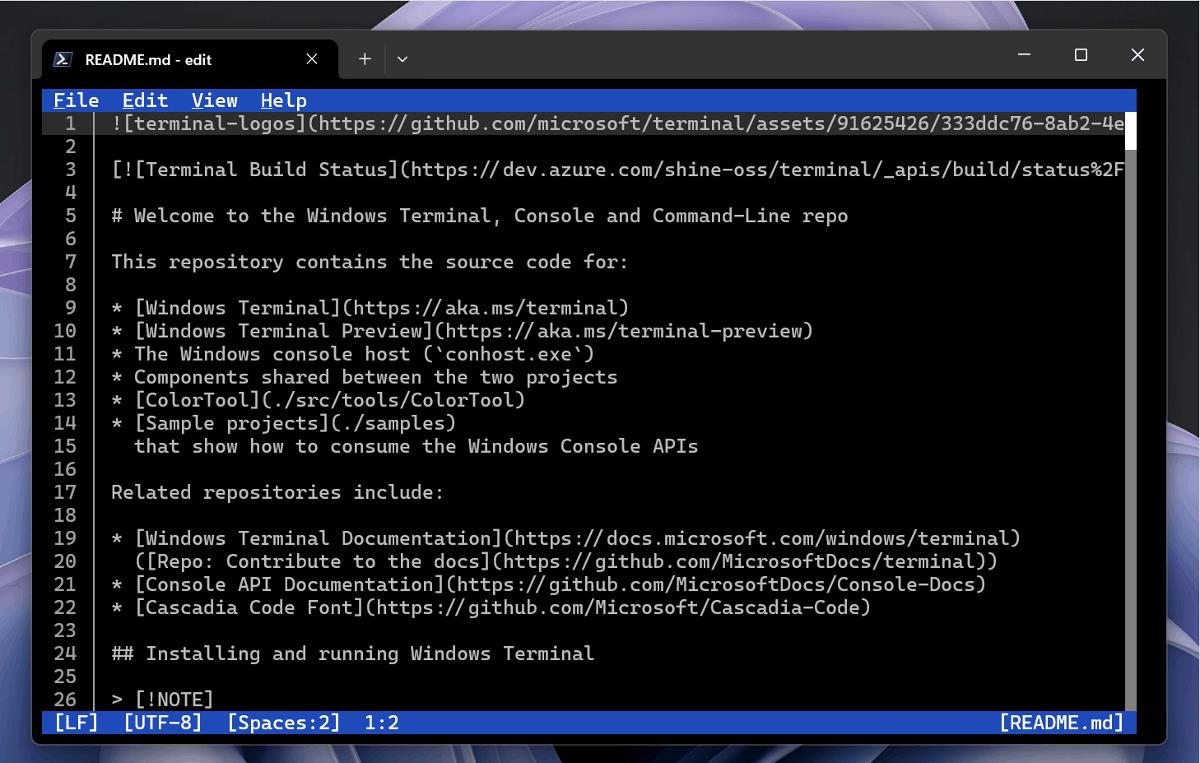

After spending too much time manually testing prompts, we built our own tools:

- Prompt versioning — Every prompt change is tracked like a commit.

- Prompt test cases — Each key prompt has expected inputs and outputs.

- Failure reporting — Human validators can tag a failed generation, which triggers an automatic rollback to the last good version.

- Monitoring with PostHog — To track usage and spot regressions.
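To make the versioning and rollback ideas concrete, here is a rough sketch. It is an assumption about how such a tool could work, not a description of our internal implementation: `PromptVersion` and `PromptHistory` are hypothetical names, and the history lives in memory rather than in a real store.

```python
from dataclasses import dataclass


@dataclass
class PromptVersion:
    number: int
    text: str
    note: str             # plays the role of a commit message
    failed: bool = False  # set when a validator tags a bad generation


class PromptHistory:
    """In-memory history for one prompt (hypothetical stand-in for a real store)."""

    def __init__(self) -> None:
        self._versions: list[PromptVersion] = []

    def commit(self, text: str, note: str) -> PromptVersion:
        """Record a prompt change as a new numbered version."""
        version = PromptVersion(len(self._versions) + 1, text, note)
        self._versions.append(version)
        return version

    def active(self) -> PromptVersion:
        """Return the newest version that has not been tagged as failed."""
        for version in reversed(self._versions):
            if not version.failed:
                return version
        raise LookupError("no usable prompt version")

    def report_failure(self, number: int) -> PromptVersion:
        """Tag a version as failed and return the version now in use."""
        if not 1 <= number <= len(self._versions):
            raise ValueError(f"unknown version {number}")
        self._versions[number - 1].failed = True
        return self.active()


history = PromptHistory()
history.commit("Generate a LinkedIn post for this article: ...", "initial prompt")
history.commit("You are a social media expert. ...", "add role and tone")

# A validator tags version 2 as failed; serving falls back to version 1.
current = history.report_failure(2)
print(current.number, current.note)
```

In this sketch, rollback is not a separate action. Serving always uses the newest version that has not been tagged, so tagging a failure is enough to restore the last good prompt.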

LLM development requires building a new stack for non-deterministic outputs.
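Because the same prompt can yield different text on every call, one way to write a prompt test case is to check properties of the output over several samples instead of comparing against an exact string. The sketch below assumes that approach. `max_lines`, `ends_with_cta`, `pass_rates`, and `fake_generate` are hypothetical helpers, and the fake generator stands in for a real model call.

```python
from itertools import cycle
from typing import Callable

Check = Callable[[str], bool]


def max_lines(limit: int) -> Check:
    """Pass when the text has at most `limit` non-empty lines."""
    def check(text: str) -> bool:
        return len([ln for ln in text.splitlines() if ln.strip()]) <= limit
    return check


def ends_with_cta(phrases: list[str]) -> Check:
    """Pass when the last non-empty line contains one of the CTA phrases."""
    def check(text: str) -> bool:
        lines = [ln for ln in text.splitlines() if ln.strip()]
        return bool(lines) and any(p.lower() in lines[-1].lower() for p in phrases)
    return check


def pass_rates(
    generate: Callable[[str], str],
    prompt: str,
    checks: dict[str, Check],
    samples: int = 5,
) -> dict[str, float]:
    """Sample the model several times and return the pass rate of each check."""
    outputs = [generate(prompt) for _ in range(samples)]
    return {
        name: sum(check(out) for out in outputs) / samples
        for name, check in checks.items()
    }


# Stand-in for a real model call: alternates between a good and an overlong post.
_canned = cycle([
    "Internal tools are dying.\nAI agents are taking over.\nBook a demo today.",
    "Hook.\nLine two.\nLine three.\nLine four.\nBook a demo today.",
])


def fake_generate(prompt: str) -> str:
    return next(_canned)


rates = pass_rates(
    fake_generate,
    "Generate a LinkedIn post ...",
    {"max_3_lines": max_lines(3), "has_cta": ends_with_cta(["book a demo"])},
    samples=4,
)
print(rates)
```

A pass rate per check, rather than a single pass or fail, gives you a number to compare between prompt versions and a threshold to alert on when a regression appears.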

Why Prompt Debugging Is the New Core Skill

If you work with AI, prompt engineering is not a phase — it’s a new layer in your software stack.

It’s less about logic, more about guidance.

Less about syntax, more about semantics.

And it’s becoming a core engineering skill.

Final Thoughts

You don’t debug LLM apps the way you debug JavaScript or Python.

You test intentions, tweak contexts, and optimize outputs.

If that sounds frustrating — well, welcome to the future.

But once you embrace it, it’s surprisingly powerful.

Your new debugger is not a terminal. It’s a chat.

We’re exploring more of LLM-powered development at easylab.ai, and prompt versioning is just the beginning.
