Ollama vs. LM Studio: Your First Guide to Running LLMs Locally

Alright, let's dive into the world of running Large Language Models (LLMs) locally! It's a super exciting space, moving fast, and putting incredible power right onto your own machine. Forget relying solely on cloud APIs – you can experiment, build, and chat with AI privately and often for free.

Two popular contenders making this accessible for beginners are Ollama and LM Studio. Both let you download and run powerful open-source models, but they go about it quite differently. Choosing the right one depends on your comfort level with technology and what you want to achieve.

Let's break them down to help you pick the best starting point for your local AI journey.

Ollama: The Developer's Darling

Think of Ollama as the streamlined, command-line-first approach. It's designed to get you up and running with LLMs quickly and efficiently, especially if you're comfortable typing commands into a terminal.

- Who's Behind It? Ollama, Inc. is the company driving its development.

- Open Source? Yes! It's available under the MIT license. This is a big plus for transparency, community contributions, and the assurance that you can see how it works under the hood.

- Strong Points:

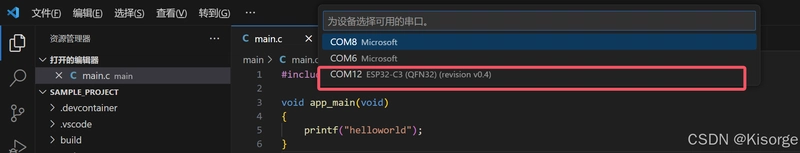

- Simplicity: Its core strength. Installing Ollama and running a model can be as simple as `ollama run llama3`. It handles downloading and setup seamlessly.

- Lightweight: Primarily runs as a background service, making it less resource-intensive than a full GUI application when idle.

- Integration: Fantastic for developers. It exposes a local REST API out of the box (plus OpenAI-compatible endpoints under `/v1`), making it trivial to integrate local models into your TypeScript/Node.js applications, scripts, or other tools.

- Modelfiles: Offers a `Modelfile` system (similar in spirit to a Dockerfile) to customize model behavior, system prompts, parameters, etc., which is great for reproducibility.

- Growing Ecosystem: A vibrant community and increasing support for various models.

- Weak Points:

- CLI-Focused: If you're allergic to the terminal, Ollama's primary interface might feel intimidating initially. While community GUIs exist (like Open WebUI, formerly Ollama Web UI), they aren't the core product.

- Less Visual Configuration: Adjusting model parameters often involves command-line flags, editing Modelfiles, or API calls rather than clicking buttons in a settings menu.

- Cost: Free.

- Consensus: Highly regarded in the developer community for its ease of use, clean design, and excellent integration capabilities. It's often the go-to for embedding local LLMs into projects.
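To make the Modelfile idea concrete, here is a small TypeScript sketch that renders one from a typed settings object. The `FROM`, `PARAMETER`, and `SYSTEM` directives and the `temperature` and `num_ctx` parameter names come from Ollama's Modelfile format; the `ModelfileSpec` shape and the `renderModelfile` helper are hypothetical, invented here for illustration.

```typescript
// Hypothetical settings shape for illustration; only the rendered
// directives (FROM, PARAMETER, SYSTEM) are real Modelfile syntax.
interface ModelfileSpec {
  base: string; // model to build on, e.g. "llama3"
  parameters?: Record<string, number | string>; // e.g. temperature, num_ctx
  system?: string; // system prompt baked into the custom model
}

// Render a Modelfile as text, one directive per line.
function renderModelfile(spec: ModelfileSpec): string {
  const lines: string[] = [`FROM ${spec.base}`];
  for (const [name, value] of Object.entries(spec.parameters ?? {})) {
    lines.push(`PARAMETER ${name} ${value}`);
  }
  if (spec.system !== undefined) {
    // Triple quotes allow the system prompt to span multiple lines.
    lines.push(`SYSTEM """${spec.system}"""`);
  }
  return lines.join("\n") + "\n";
}

const modelfile = renderModelfile({
  base: "llama3",
  parameters: { temperature: 0.7, num_ctx: 4096 },
  system: "You are a concise assistant that answers in plain English.",
});
console.log(modelfile);
```

Saved to a file named `Modelfile`, the output could be turned into a reusable custom model with `ollama create my-assistant -f Modelfile` and then started with `ollama run my-assistant` (`my-assistant` is just a placeholder name). Keeping the settings in code like this is one way to get the reproducibility mentioned above, since the same spec always yields the same file.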

LM Studio: The All-in-One GUI Powerhouse

LM Studio takes a different approach, offering a polished, comprehensive Graphical User Interface (GUI) that wraps everything you need into one application.

- Who's Behind It? Developed by the team behind LM Studio.

- Open Source? No. LM Studio is proprietary freeware. You can use it for free, but the source code isn't publicly available. This means less transparency, and you rely entirely on the LM Studio team for updates and fixes.

- Strong Points:

- User-Friendly GUI: Its biggest draw. Everything is point-and-click: discovering models, downloading them, chatting, and configuring settings.

- Model Discovery: Features an excellent built-in browser to search for models on Hugging Face, clearly showing the available versions and quantization levels (such as Q4_K_M or Q8_0 GGUF files).

- Visual Configuration: Easily tweak inference parameters (temperature, context length, GPU layers, etc.) through intuitive menus for each model.

- Built-in Chat: Includes a familiar chat interface right within the app to interact with your loaded models immediately.

- Hardware Acceleration: Clear options for offloading computation to your GPU (NVIDIA, Metal on Mac, potentially others) to speed things up.

- Weak Points:

- Closed Source: Lack of transparency and community contribution compared to open-source alternatives.

- Resource Usage: Being a full desktop application (built on Electron, I believe), it can consume more RAM and CPU than Ollama's background service, even when just sitting there.

- Less Scriptable: While it offers a local server mode (also OpenAI API compatible), its core design isn't geared towards CLI scripting and automation in the same way Ollama is.

- Cost: Free.

- Consensus: Extremely popular, especially among users who prefer a GUI or are less comfortable with the command line. Praised for making local LLMs accessible and easy to manage visually.
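Both tools can speak the OpenAI chat-completions format, so one small client can talk to either. The sketch below assumes LM Studio's local server is running on its usual port 1234 and that Ollama's OpenAI-compatible routes live under `/v1` on port 11434; check the port your own setup uses. `buildChatRequest`, `extractReply`, and `chat` are hypothetical helpers, and the code relies on the global `fetch` available in Node.js 18 and later.

```typescript
// Assumed base URLs: LM Studio's local server usually listens on port 1234,
// and Ollama exposes OpenAI-compatible routes under /v1 on port 11434.
const LM_STUDIO_BASE = "http://localhost:1234/v1";
const OLLAMA_OPENAI_BASE = "http://localhost:11434/v1";

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Build an OpenAI-style chat completion request for any compatible base URL.
function buildChatRequest(
  baseUrl: string,
  model: string,
  messages: ChatMessage[],
  temperature = 0.7,
): ChatRequest {
  return {
    url: `${baseUrl.replace(/\/+$/, "")}/chat/completions`, // tolerate a trailing slash
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ model, messages, temperature }),
  };
}

// Pull the assistant's text out of an OpenAI-style response body.
function extractReply(json: unknown): string {
  const choices = (json as { choices?: { message?: { content?: unknown } }[] } | null)?.choices;
  const content = choices?.[0]?.message?.content;
  if (typeof content !== "string") {
    throw new Error("Unexpected response shape: missing choices[0].message.content");
  }
  return content;
}

// Send a single-turn prompt to whichever local server baseUrl points at.
async function chat(baseUrl: string, model: string, prompt: string): Promise<string> {
  const req = buildChatRequest(baseUrl, model, [{ role: "user", content: prompt }]);
  const res = await fetch(req.url, { method: req.method, headers: req.headers, body: req.body });
  if (!res.ok) throw new Error(`Server returned HTTP ${res.status}`);
  return extractReply(await res.json());
}
```

Because only the base URL differs, switching between the two tools is a one-line change, for example `await chat(OLLAMA_OPENAI_BASE, "llama3", "Why is the sky blue?")`. For LM Studio, the `model` value should be the identifier of a model you have loaded; any name used here is a placeholder.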

Head-to-Head: Making the Choice

| Feature | Ollama | LM Studio | Who Wins? |
|---|---|---|---|
| Ease of Setup | Very simple install, CLI commands | Simple install, GUI interface | LM Studio (for GUI lovers) |
| Interface | Primarily CLI (GUIs available separately) | Integrated GUI | LM Studio (for integrated UI) |
| Model Discovery | CLI (`ollama pull`), relies on knowing names | Built-in Hugging Face browser | LM Studio |
| Configuration | Modelfiles, CLI flags, API | Visual GUI menus | LM Studio (for visual ease) |
| Integration | Excellent built-in API, CLI scripting | Local server mode (OpenAI compatible) | Ollama (for developers) |
| Open Source | Yes (MIT) | No | Ollama |
| Resource Usage | Generally lighter (background service) | Can be heavier (full GUI app) | Ollama |
| Beginner Friendliness | Simple commands, but CLI required | Very high via point-and-click GUI | LM Studio |
| Customization | Good via Modelfiles | Good via GUI settings | Tie (different approaches) |
| Cost | Free | Free | Tie |

Which Should You Choose?

- Choose Ollama if:

- You're comfortable using the command line.

- You plan to integrate local LLMs into your own applications or scripts (e.g., calling its API from your TypeScript code).

- You value open-source software.

- You prefer a more lightweight, focused tool.

- Choose LM Studio if:

- You strongly prefer a graphical user interface (GUI).

- You want an all-in-one solution for discovering, downloading, configuring, and chatting with models.

- You find the command line intimidating.

- You want easy visual access to various model quantizations and settings.
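Quantization level largely decides how much disk space and memory a model needs, which is what you are trading off when you pick among the variants LM Studio's browser lists. A common back-of-the-envelope estimate is parameters × bits per weight ÷ 8. The bits-per-weight figures below are rough assumptions (real GGUF files mix quantization types across tensors and add metadata), and `quantFromFilename` and `estimateSizeGB` are hypothetical helpers.

```typescript
// Rough average bits per weight for a few common GGUF quantization types.
// These are approximations for estimating only; real files mix types per tensor.
const BITS_PER_WEIGHT: Record<string, number> = {
  Q4_0: 4.5, // 4-bit weights plus one 16-bit scale per 32-weight block
  Q4_K_M: 4.85, // approximate average for this mixed "K-quant"
  Q8_0: 8.5, // 8-bit weights plus one 16-bit scale per 32-weight block
  F16: 16, // unquantized half precision
  F32: 32, // full single precision
};

// Pull a quantization tag such as "Q4_K_M" out of a GGUF file name, if present.
function quantFromFilename(filename: string): string | undefined {
  const match = /[.-](Q\d+_[A-Z0-9_]+|F16|F32)\.gguf$/i.exec(filename);
  return match?.[1]?.toUpperCase();
}

// Estimated size in GB (1 GB = 10^9 bytes): billions of params × bits per weight ÷ 8.
function estimateSizeGB(paramsBillions: number, quant: string): number {
  const bpw: number | undefined = BITS_PER_WEIGHT[quant];
  if (bpw === undefined) throw new Error(`No bits-per-weight estimate for ${quant}`);
  return (paramsBillions * bpw) / 8;
}

const file = "Meta-Llama-3-8B-Instruct.Q4_K_M.gguf";
const quant = quantFromFilename(file);
if (quant !== undefined) {
  console.log(`${file}: ~${estimateSizeGB(8, quant).toFixed(2)} GB`);
}
```

Treating Llama 3 8B as 8 billion parameters, the Q4_K_M file comes out at roughly 4.85 GB, versus 16 GB for an unquantized F16 version. Actual memory use while chatting is higher, because the context window (KV cache) and the rest of your system need room too.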

Getting Started & Exploring

Honestly, since both are free, the best advice is often to try both!

- Install LM Studio: Download it, browse for a popular model (like `Meta-Llama-3-8B-Instruct.Q4_K_M.gguf`), download it, load it up, and start chatting. Play with the settings.

- Install Ollama: Follow its simple install instructions. Then, in your terminal, run `ollama pull llama3` and `ollama run llama3`. Experience the CLI interaction. Maybe try hitting its API endpoint (`http://localhost:11434/api/generate`) with `curl` or a tool like Postman.
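When you try the `/api/generate` endpoint, note that Ollama streams its answer by default as newline-delimited JSON: one object per line, each carrying a `response` text fragment and a `done` flag. A network chunk can end mid-line, so a client has to buffer until it sees a newline. The TypeScript sketch below does that; `NdjsonSplitter` and `generate` are hypothetical names, and it assumes Node.js 18+ (for the global `fetch`) with Ollama on its default port and `llama3` already pulled.

```typescript
// Fields used from each streamed line of /api/generate; the real objects carry more.
interface GenerateChunk {
  response: string; // text fragment produced since the previous line
  done: boolean; // true on the final line of the stream
}

// Buffers raw text and returns only complete newline-terminated JSON lines.
class NdjsonSplitter {
  private buffer = "";

  push(text: string): GenerateChunk[] {
    this.buffer += text;
    const lines = this.buffer.split("\n");
    // The last piece may be an incomplete line; keep it for the next push.
    this.buffer = lines.pop() ?? "";
    return lines
      .filter((line) => line.trim() !== "")
      .map((line) => JSON.parse(line) as GenerateChunk);
  }

  // Parse anything left over once the stream has ended.
  flush(): GenerateChunk[] {
    const rest = this.buffer.trim();
    this.buffer = "";
    return rest === "" ? [] : [JSON.parse(rest) as GenerateChunk];
  }
}

// Stream a completion from Ollama's native API and return the joined text.
async function generate(model: string, prompt: string): Promise<string> {
  const res = await fetch("http://localhost:11434/api/generate", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ model, prompt, stream: true }),
  });
  if (!res.ok || !res.body) throw new Error(`Ollama returned HTTP ${res.status}`);

  const reader = res.body.getReader();
  const decoder = new TextDecoder();
  const splitter = new NdjsonSplitter();
  let full = "";
  while (true) {
    const { value, done } = await reader.read();
    if (done) break;
    // stream: true keeps multi-byte characters intact across chunk boundaries.
    for (const chunk of splitter.push(decoder.decode(value, { stream: true }))) {
      full += chunk.response;
    }
  }
  for (const chunk of [...splitter.push(decoder.decode()), ...splitter.flush()]) {
    full += chunk.response;
  }
  return full;
}
```

Calling `generate("llama3", "Why is the sky blue?").then(console.log)` should print the full answer once the final `done: true` line arrives. Splitting only on complete lines is the key design choice: parsing each raw chunk directly would fail whenever a JSON object straddles two chunks.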

Seeing them in action on your own machine is the best way to understand their workflow and decide which feels more natural to you.

Both Ollama and LM Studio are fantastic gateways into the fascinating world of local LLMs. They lower the barrier to entry significantly, letting you experiment with powerful AI without needing massive cloud budgets or deep ML expertise. Pick one, download a model, and start exploring – you'll likely be amazed at what you can run on your own hardware! Good luck!
