My Journey Into AI #1, How AI Embeddings Transform Human Language Into Universal Knowledge

In this series of articles, we will explore Artificial Intelligence (AI) and learn how to implement it in web applications. By the end of this journey, we will have built a web app and gained a high-level understanding of AI.

The Linguistic Puzzle

Have you ever thought about how things get their names across different languages? It's fascinating how the same object carries completely different labels depending on where you are in the world. As an Indonesian, I call a chair "kursi," while my German friend calls it "Stuhl": two words with no phonetic similarity, yet both refer to the same everyday object we sit on.

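My frontend instincts immediately suggest a brute-force approach: use a hash map to map each word to its translation. But it isn't that simple, right? A lookup table needs an entry for every word in every language pair, and it knows nothing about what the words actually mean.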
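To make the limitation concrete, here is a minimal sketch of the hash-map idea in TypeScript. The `dictionary` entries and the `translate` helper are made up for illustration; they are not part of any library.

```typescript
// Hypothetical Indonesian -> German lookup table (illustrative entries only).
const dictionary = new Map<string, string>([
  ["kursi", "Stuhl"],
  ["meja", "Tisch"],
]);

// Returns the stored translation, or undefined when the word is not listed.
function translate(word: string): string | undefined {
  return dictionary.get(word.toLowerCase());
}

console.log(translate("kursi")); // Stuhl
console.log(translate("bangku")); // undefined
```

The lookup only works on exact matches. "bangku" (bench) is closely related to "kursi", but the map has no way to express that two words are similar in meaning.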

AI's Language Challenge

I was curious how AI solves this problem. Linguistic diversity poses a fundamental challenge for AI: how can a machine understand that "kursi" and "Stuhl" both refer to a chair? This is where AI embeddings provide an elegant solution.

How Embeddings Work

Embeddings are the language that AI "understands": a mathematical way to translate input into a vector that represents natural language. Once text is turned into numbers, a model can compare and process it at scale, which is what lets it appear to reason about meaning the way a human does.

- In an embedding space, the words "kursi" and "Stuhl" are positioned close together despite their different sounds and letters, because their meaning is the same. (Embeddings are not limited to words; phrases, documents, and images can be embedded too, which will be explained later.)

- The semantic meaning (the concept of a seat with a back for one person) becomes more important than the specific word itself.

- These numerical representations are called vectors, and they capture the essence of what a chair is.

Cross-Lingual Understanding

AI solves this particular problem with cross-lingual embeddings, which map words from different languages into a shared vector space:

- A model trained on multiple languages learns that "kursi" and "Stuhl" should have similar vector representations.

- This enables translation, cross-language search, and multilingual understanding.

That is why transforming words into vectors is a proper solution: AI translates the input (natural language) into vectors so that similarity between pieces of data becomes easy to measure, and the benefits go beyond that.

This mirrors how humans learn languages: we don't memorize direct translations but build conceptual bridges between languages. When I learn that "Stuhl" means the same thing as "kursi," I'm creating a mental connection to a concept, not just memorizing a word pair.

Beyond Simple Word Mapping

The power of embeddings extends beyond simple word-for-word mapping:

- They can represent entire concepts, capturing cultural nuances about furniture.

- The embedding for "kursi" might encode not just the literal "chair" but also subtler associations in Indonesian culture, as in "Partai pemenang mendapatkan kursi terbanyak di DPR" ("The winning party gets the most seats in the DPR", Indonesia's House of Representatives). We are still talking about a chair, but with added context, in this case a political one.

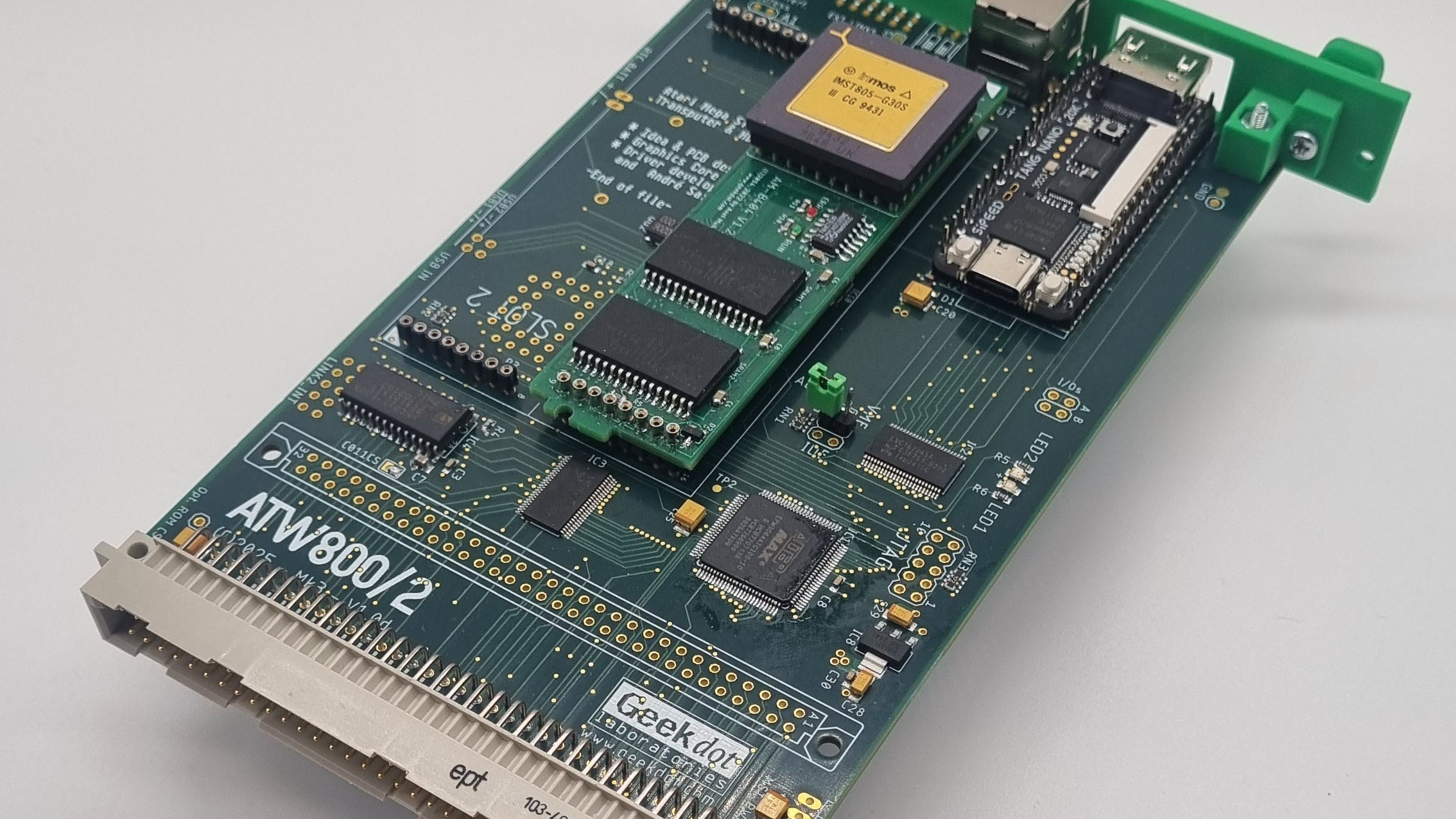

Assume your data is represented as vectors, and we plot the following points:

- Kursi (2, 3), Stuhl (2.5, 2.5), Meja (3, 4), Tisch (3.5, 3.5)

Don't worry about where these numbers come from; they are arbitrary values chosen to illustrate the concept. If you are curious how real values are produced, that is another topic (deep learning). For now, the point is that data can be represented as vectors.
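One way to read "close together" is as a distance between points. The sketch below uses Euclidean distance on the toy coordinates above; the `points` object and the `distance` and `nearest` helpers are names invented for this illustration, and real embedding models are usually compared with other measures such as cosine similarity.

```typescript
type Point = [number, number];

// Toy coordinates from the list above (arbitrary, for illustration only).
const points: Record<string, Point> = {
  Kursi: [2, 3],
  Stuhl: [2.5, 2.5],
  Meja: [3, 4],
  Tisch: [3.5, 3.5],
};

// Straight-line (Euclidean) distance between two 2D points.
function distance(a: Point, b: Point): number {
  return Math.hypot(a[0] - b[0], a[1] - b[1]);
}

// Finds the word whose point lies closest to the given word's point.
function nearest(word: string): string {
  let best = "";
  let bestDistance = Infinity;
  for (const [other, point] of Object.entries(points)) {
    if (other === word) continue; // skip the word itself
    const d = distance(points[word], point);
    if (d < bestDistance) {
      best = other;
      bestDistance = d;
    }
  }
  return best;
}

console.log(nearest("Kursi")); // Stuhl
console.log(nearest("Meja")); // Tisch
```

With these made-up coordinates, the nearest neighbor of each Indonesian word is its German counterpart, which is the behavior a shared vector space is meant to produce.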

Practice Makes Perfect

Enough theory; let's move on to the implementation. In this article I will use VoyageAI to handle the text embeddings, but you can choose other providers such as OpenAI or Mistral.

I chose VoyageAI because it offers free models to experiment with and because, as a frontend engineer working primarily with TypeScript, I find its official TypeScript SDK particularly valuable for my workflow. The SDK provides convenient access to the Voyage API directly from TypeScript applications and integrates smoothly with my existing codebase (see the Voyage TypeScript Library).

Set Up Your TypeScript Project

```bash
# Create directory
mkdir ts-voyage
cd ts-voyage

# Init Node project & install TypeScript
npm init -y
npm install typescript --save-dev

# Init TypeScript
npx tsc --init

# Create a file named index.ts
touch index.ts

# If you have Node >= v23.6.0
node --no-warnings index.ts
```

It's Time to Code

I know you can't wait to code :), but there is one more step before we open our favorite text editor. If you are following along with VoyageAI, first create an account and get a VOYAGE_API_KEY, which the SDK will need later.

```bash
# Install dotenv
npm i dotenv
npm i -D @types/node

# Install VoyageAI SDK
npm i voyageai
```

Don't forget to save your VOYAGE_API_KEY in .env.

```env
VOYAGE_API_KEY=your_api_key
```
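A missing key tends to surface later as a confusing authentication error, so it can help to fail fast. The sketch below is one possible check; `requireEnv` is a hypothetical helper written for this article, not part of dotenv or the Voyage SDK.

```typescript
// Hypothetical helper: returns the variable's value or throws a clear error.
// `env` is passed in explicitly so the function is easy to test;
// in the project you would call requireEnv(process.env, "VOYAGE_API_KEY").
function requireEnv(
  env: Record<string, string | undefined>,
  name: string
): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing environment variable: ${name}`);
  }
  return value;
}

// Example with an in-memory object standing in for process.env.
const fakeEnv = { VOYAGE_API_KEY: "your_api_key" };
console.log(requireEnv(fakeEnv, "VOYAGE_API_KEY")); // your_api_key
```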

Because this example uses import syntax, modify your package.json and add "type": "module".

```json
{
  "name": "ts-voyage",
  "version": "1.0.0",
  "main": "index.js",
  "type": "module",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "description": "",
  "devDependencies": {
    "@types/node": "^22.15.17",
    "typescript": "^5.8.3"
  },
  "dependencies": {
    "dotenv": "^16.5.0",
    "voyageai": "^0.0.4"
  }
}
```

The code itself is really simple for now: we just use the client from the Voyage SDK to convert "Hello, world!" into an embedding vector.

```typescript
import { VoyageAIClient } from "voyageai";
import dotenv from "dotenv";

// Load VOYAGE_API_KEY from .env into process.env
dotenv.config();

// Shape of one item in the response's data array
interface EmbeddingResponse {
  object: string;
  embedding: number[];
  index: number;
}

async function getEmbedText(input: string): Promise<EmbeddingResponse[]> {
  const client = new VoyageAIClient({ apiKey: process.env.VOYAGE_API_KEY });

  const embeddedText = await client.embed({
    input,
    model: "voyage-3.5",
    outputDimension: 1024, // 256, 512, 1024 (default), 2048
  });

  return embeddedText.data as EmbeddingResponse[];
}

(async () => {
  const result = await getEmbedText("Hello, world!");
  console.log(result);
})();
```

After that, run the code, and voilà: our "Hello, world!" text is transformed into a vector.

If you look at the embedding field, it is not a simple 2D point like "kursi (2, 3)" but an array of 1024 floating-point numbers. You can change the number of dimensions with outputDimension, up to 2048. Imagine how much nuance that many dimensions can capture.
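Vectors with this many dimensions are compared the same way as the 2D points earlier, just with more coordinates. A common measure is cosine similarity; the sketch below is a plain TypeScript version, and the function name `cosineSimilarity` and the short sample vectors are assumptions for illustration rather than anything provided by the Voyage SDK.

```typescript
// Cosine similarity: 1 means same direction, 0 means orthogonal,
// -1 means opposite direction.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length || a.length === 0) {
    throw new Error("Vectors must be non-empty and have the same length");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) {
    throw new Error("A zero vector has no direction");
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Short stand-ins for real embeddings, which would have 1024 entries.
console.log(cosineSimilarity([1, 0, 0], [1, 0, 0])); // 1
console.log(cosineSimilarity([1, 0, 0], [0, 1, 0])); // 0
```

In the project, the two arguments could be the embedding arrays returned by two `getEmbedText` calls, for example one for "kursi" and one for "Stuhl".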

It has been a long way to reach this message, so congratulations on learning something new. If you're a frontend engineer like me, you may still be wondering how to use this in your next FE project. Be patient, we will get there, but for now I think this is enough. I hope this article gives you a high-level picture of how embeddings work. See you in the next article.
