Boost 2-Bit LLM Accuracy with EoRA

A training-free solution for extreme LLM compression. The post Boost 2-Bit LLM Accuracy with EoRA appeared first on Towards Data Science.

Post-training quantization methods like GPTQ and AWQ can dramatically reduce the size of large models. A model like Llama 3 with 70 billion parameters can occupy around 140 GB in FP16, but this can be reduced to approximately 40 GB using 4-bit quantization, while still maintaining strong performance on downstream tasks.
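As a back-of-the-envelope check of these numbers, the sketch below computes the storage needed for the weights alone (parameters × bits / 8). It deliberately ignores quantization metadata (scales, zero points) and any layers kept in higher precision. The function name is illustrative.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Storage for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    params = 70e9  # Llama 3 70B, rounded to 70 billion parameters
    for bits in (16, 4, 2):
        # 16 = FP16 baseline; 4 and 2 = quantized weights, metadata excluded
        print(f"{bits:>2}-bit: {weight_memory_gb(params, bits):.1f} GB")
```

The gap between the raw 35 GB at 4-bit and the roughly 40 GB observed in practice mostly comes from quantization metadata and from layers, such as the embeddings and output head, that are often left unquantized. At 2 bits, the raw weights shrink to about 17.5 GB.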

However, despite this substantial reduction, such models still exceed the memory capacity of most consumer-grade GPUs, which typically offer 24 GB to 32 GB of VRAM. To make these models truly accessible, quantization to even lower bitwidths, such as 2-bit, is required. While recent advances in low-bit quantization are promising, achieving stable and accurate 2-bit quantization remains a significant challenge.

In this article, we review a technique called EoRA that helps compensate for quantization-induced errors. EoRA is a training-free method, meaning it can be applied quickly and efficiently to any model, even the largest ones. We’ll look at how EoRA works and show that it can significantly improve the performance of 2-bit quantized models, bringing them close to the accuracy of their full-precision counterparts while being up to 5.5x smaller.

We’ll analyze experimental results obtained using large models such as Qwen3-32B and Qwen2.5-72B, both quantized to 2-bit using state-of-the-art quantization techniques, to illustrate the effectiveness of EoRA.

Diving into the Eigenspace in Search of an Adapter

Post-training quantization or, more generally, compression aims to reduce model size or inference cost by minimizing the difference between the outputs produced by the original weights W_l and by the compressed weights Ŵ_l, using only a small calibration dataset.
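The layer-wise problem that methods such as GPTQ solve for each layer l can be written out as follows. Here X_l is the matrix of calibration inputs to that layer. This is a standard formulation, given here for reference:

\[\min_{\hat{W}_l} \lVert W_l X_l - \hat{W}_l X_l \rVert_F^2\]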

Most quantization methods are framed layer-wise, and the available compression formats are rigid, which limits flexibility across diverse deployment needs.

To bypass format constraints and improve accuracy, previous work such as QLoRA [1] and HQQ+ [2] directly fine-tuned a LoRA adapter on top of frozen quantized models.

It is also possible to reframe compression as a compensation problem: given a compressed model, introduce low-rank residual paths that specifically correct compression errors.

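A straightforward method uses SVD to decompose the compression error:

\[\Delta W_l = W_l - \hat{W}_l\]

into

\[\Delta W_l = U_l \Sigma_l V_l^T\]

It then keeps only the top r singular values and their singular vectors, forming a rank-r approximation via two matrices:

\[B_l = U_l \Sigma_l\]

\[A_l = V_l^T\]

Here U_l, Σ_l, and V_l denote the truncated factors, and A_l and B_l are the standard tensors of a LoRA adapter. The compensated layer then uses Ŵ_l + B_l A_l in place of Ŵ_l.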
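The plain SVD compensation just described can be illustrated with a minimal NumPy sketch on a single toy layer. It relies on several assumptions:

- The quantizer `quantize_rtn` is a hypothetical per-row round-to-nearest stand-in, not GPTQ or AutoRound.
- The weights are random.
- The function names are illustrative.

The sketch builds B_l = U_l Σ_l and A_l = V_l^T from the truncated SVD of ΔW_l and measures how much weight error remains.

```python
import numpy as np


def quantize_rtn(w: np.ndarray, bits: int = 2) -> np.ndarray:
    """Toy per-row min-max round-to-nearest quantizer.

    A hypothetical stand-in for GPTQ or AutoRound, used only to create an
    error to compensate. Returns the dequantized weights Ŵ.
    """
    levels = 2 ** bits - 1
    w_min = w.min(axis=1, keepdims=True)
    scale = (w.max(axis=1, keepdims=True) - w_min) / levels
    q = np.clip(np.round((w - w_min) / scale), 0, levels)
    return q * scale + w_min


def svd_lora(delta_w: np.ndarray, rank: int) -> tuple[np.ndarray, np.ndarray]:
    """Rank-r LoRA factors from a truncated SVD of the weight error."""
    u, s, vt = np.linalg.svd(delta_w, full_matrices=False)
    b = u[:, :rank] * s[:rank]  # B = U_r Σ_r (scales each column of U_r)
    a = vt[:rank, :]            # A = V_r^T
    return b, a


def demo(rank: int = 32, seed: int = 0) -> tuple[float, float]:
    rng = np.random.default_rng(seed)
    w = rng.standard_normal((256, 512))  # hypothetical layer: 512 in, 256 out
    delta_w = w - quantize_rtn(w, bits=2)
    b, a = svd_lora(delta_w, rank)
    # The compensated layer uses Ŵ + BA, so the remaining error is ΔW - BA
    before = float(np.linalg.norm(delta_w))
    after = float(np.linalg.norm(delta_w - b @ a))
    return before, after


if __name__ == "__main__":
    before, after = demo()
    print(f"||ΔW||_F = {before:.2f}, ||ΔW - BA||_F = {after:.2f}")
```

This minimizes the raw weight error only. The sketch gives no special weight to the input channels that matter most for the layer's output, which is the limitation discussed next.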

However, plain SVD has two limitations: it does not minimize the original layerwise compression loss directly, and it allocates capacity uniformly across all error components, ignoring the varying importance of different parts of the model.

To address this, NVIDIA proposes EoRA [3].

EoRA: Training-free Compensation for Compressed LLM with Eigenspace Low-Rank Approximation

EoRA first projects the compression error into the eigenspace defined by the input activation covariance:

\[\tilde{X} \tilde{X}^T\]

where X̃ is the average activation over the calibration set. Then, by performing eigendecomposition, we get:

\[\tilde{X} \tilde{X}^T = Q \Lambda Q^T\]

The compression error ΔW is projected as:

\[\Delta W' = \Delta W Q'\]

where

\[Q' = Q \Lambda^{1/2}\]

Then SVD is applied to ΔW′ and truncated to rank r, giving ΔW′ ≈ U′Σ′V′^T. The result is projected back to the original space by multiplying by Q′^{-1}, which adjusts the low-rank factors accordingly:

\[B = U' \Sigma', \quad A = V'^T Q'^{-1}\]
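To make these steps concrete, here is a minimal NumPy sketch of the eigenspace projection on a single toy layer. It is not GPTQModel's implementation. It relies on several assumptions:

- The quantizer is a hypothetical round-to-nearest stand-in.
- The weights and calibration activations are random.
- Names like `eora_lora` and `compare` are illustrative.

The sketch scales the eigenvectors by the square root of the eigenvalues, Q Λ^{1/2}, which makes the Frobenius norm in the projected space match the layer-output error. It then compares the output error ‖(ΔW − BA)X‖ left by plain SVD and by the projected SVD.

```python
import numpy as np


def quantize_rtn(w, bits=2):
    """Toy per-row min-max round-to-nearest quantizer (hypothetical stand-in)."""
    levels = 2 ** bits - 1
    w_min = w.min(axis=1, keepdims=True)
    scale = (w.max(axis=1, keepdims=True) - w_min) / levels
    return np.clip(np.round((w - w_min) / scale), 0, levels) * scale + w_min


def plain_svd_lora(delta_w, rank):
    """Baseline: truncated SVD of the raw weight error."""
    u, s, vt = np.linalg.svd(delta_w, full_matrices=False)
    return u[:, :rank] * s[:rank], vt[:rank, :]


def eora_lora(delta_w, x, rank, eps=1e-8):
    """Eigenspace-projected low-rank factors B (out x r) and A (r x in).

    x holds calibration inputs as columns (in x n), matching W X in the text.
    """
    cov = x @ x.T / x.shape[1]                     # X̃ X̃^T, averaged over samples
    eigvals, q = np.linalg.eigh(cov)                # X̃ X̃^T = Q Λ Q^T
    sqrt_l = np.sqrt(np.clip(eigvals, eps, None))   # guard tiny/negative eigenvalues
    q_prime = q * sqrt_l                            # Q' = Q Λ^{1/2}
    q_prime_inv = (q / sqrt_l).T                    # Q'^{-1} = Λ^{-1/2} Q^T
    u, s, vt = np.linalg.svd(delta_w @ q_prime, full_matrices=False)
    b = u[:, :rank] * s[:rank]                      # B = U'_r Σ'_r
    a = vt[:rank, :] @ q_prime_inv                  # A = V'_r^T Q'^{-1}
    return b, a


def compare(rank=32, seed=0):
    """Layer-output error ||(ΔW - BA) X||_F for both methods on a toy layer."""
    rng = np.random.default_rng(seed)
    w = rng.standard_normal((256, 512))
    # Anisotropic calibration inputs: channel scales range from 1 to 100,
    # so errors on the large channels dominate the layer output.
    x = rng.standard_normal((512, 2048)) * np.logspace(0, 2, 512)[:, None]
    delta_w = w - quantize_rtn(w)
    errors = {}
    for name, (b, a) in [("plain SVD", plain_svd_lora(delta_w, rank)),
                         ("EoRA", eora_lora(delta_w, x, rank))]:
        errors[name] = float(np.linalg.norm((delta_w - b @ a) @ x))
    return errors


if __name__ == "__main__":
    for name, err in compare().items():
        print(f"{name:>9}: output error {err:.1f}")
```

Because the toy activations are strongly anisotropic, the projection spends the rank budget on the input directions that dominate the output. With nearly isotropic activations, Q Λ^{1/2} is close to a scaled rotation and the two methods would give nearly the same result.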

This eigenspace projection changes the optimization objective: it weights the importance of different error components according to their contribution to the layerwise output (via eigenvalues), making the approximation more efficient. It can be computed quickly without any training, requires only calibration activations, and does not introduce extra inference latency. Moreover, the derivation shows that this approach leads to a direct minimization of the layerwise compression loss, not just the raw weight error.

Analytically, truncating the smallest singular values in the projected space is equivalent to minimizing the true layer-wise compression error, provided the activation covariance is invertible.
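The identity behind this claim fits in one line. Expanding the layer-wise loss of the error ΔW with the eigendecomposition above gives:

\[\lVert \Delta W \tilde{X} \rVert_F^2 = \operatorname{tr}\left(\Delta W \tilde{X} \tilde{X}^T \Delta W^T\right) = \operatorname{tr}\left(\Delta W Q \Lambda Q^T \Delta W^T\right) = \lVert \Delta W Q \Lambda^{1/2} \rVert_F^2\]

The Frobenius norm measured after multiplying by QΛ^{1/2} is therefore exactly the layer-wise output loss. By the Eckart-Young theorem, a truncated SVD in that space gives the best rank-r approximation for this loss rather than for the raw weight error.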

In their paper, NVIDIA presents a wide range of strong results showing that EoRA can significantly boost the accuracy of quantized models. However, their experiments focus mostly on older quantization methods like GPTQ and are limited to mid-sized LLMs, up to 13B parameters, at 3-bit and 4-bit precisions.

This leaves an open question: can EoRA still be effective for much larger models, using more modern quantization techniques, and even pushing down to 2-bit precision?

Let’s find out.

Calibrating an EoRA Adapter

Suppose we have quantized models that show significantly degraded performance compared to their full-precision counterparts on certain tasks. Our goal is to reduce this performance gap using EoRA.

For the experiments, I used Qwen2.5-72B Instruct and Qwen3-32B, both quantized to 2-bit using AutoRound (Apache 2.0 license), a state-of-the-art quantization algorithm developed by Intel. AutoRound uses signed gradient descent (SignSGD) to optimize how the weights are rounded, and is particularly effective in low-bit settings.

All the models I made are available here (Apache 2.0 license):

The 2-bit models were quantized with a group size of 32, except for one model, which used a group size of 128. A larger group size reduces model size by storing less quantization metadata, but it introduces greater quantization error.
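To see why group size matters for memory, the sketch below estimates the effective bits per weight. It assumes a GPTQ-style layout in which each group of `group_size` weights stores one FP16 scale and one zero point packed at the same bit width as the weights. This layout is an assumption for illustration; the real overhead depends on the checkpoint format.

```python
def effective_bits(bits: int, group_size: int, scale_bits: int = 16) -> float:
    """Bits per weight, including per-group quantization metadata.

    Assumed layout (GPTQ-style): each group of `group_size` weights stores one
    FP16 scale and one zero point packed at the same width as the weights.
    """
    return bits + (scale_bits + bits) / group_size


if __name__ == "__main__":
    for g in (32, 128):
        print(f"2-bit, group size {g:>3}: {effective_bits(2, g):.3f} bits/weight")
```

Under this assumed layout, going from a group size of 32 to 128 cuts the metadata overhead from about 0.56 to about 0.14 bits per weight. The price is that each scale has to cover four times as many weights.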

I evaluated the models on IFEval, a benchmark that measures instruction-following capabilities. Results showed a noticeable drop in performance for the quantized versions.

To compensate for this degradation, I applied an EoRA adapter using the implementation provided in the GPTQModel library (licensed under Apache 2.0). The integration is straightforward. If you’re curious about how it’s implemented in PyTorch, the codebase is compact, clean, and easy to follow:

- GPTQModel’s EoRA implementation: eora.py

EoRA requires a calibration dataset. Ideally, this dataset should reflect the model’s intended use case. However, since we don’t have a specific target task in this context and aim to preserve the model’s general capabilities, I used 1,024 examples taken from a C4 training shard (licensed under ODC-BY).

Another key parameter is the LoRA rank, which greatly influences the effectiveness of the EoRA adapter. Its optimal value depends on the model architecture, the target task, and the calibration data. A higher rank may yield better performance but risks overfitting to the calibration set. It also increases the size of the adapter, which is counterproductive when the overall goal of quantization is to reduce memory usage. Conversely, a lower rank keeps the adapter lightweight but might not capture enough information to effectively compensate for quantization errors.
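The memory side of this trade-off is easy to quantify. For a linear layer with `d_in` inputs and `d_out` outputs, a rank-`r` adapter stores `r * (d_in + d_out)` extra values: the A and B matrices. The sketch below compares that cost in FP16 with the same layer's 2-bit weights, ignoring quantization metadata. The 5120 → 17408 shape is chosen only for illustration; to our understanding it matches an MLP projection in Qwen3-14B.

```python
def adapter_bytes(d_in: int, d_out: int, rank: int, bytes_per_value: int = 2) -> int:
    """Size of LoRA factors A (rank x d_in) and B (d_out x rank), FP16 by default."""
    return rank * (d_in + d_out) * bytes_per_value


def quantized_layer_bytes(d_in: int, d_out: int, bits: int = 2) -> float:
    """Size of the quantized weight matrix alone (metadata ignored)."""
    return d_in * d_out * bits / 8


if __name__ == "__main__":
    d_in, d_out = 5120, 17408  # illustrative MLP projection shape
    base = quantized_layer_bytes(d_in, d_out)
    for rank in (32, 64, 256):
        ratio = adapter_bytes(d_in, d_out, rank) / base
        print(f"rank {rank:>3}: adapter adds {ratio:.1%} to this 2-bit layer")
```

For this layer shape, a rank-32 adapter adds about 6.5% to the 2-bit weights. A rank-256 adapter is about half their size.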

In my experiments, I tested LoRA ranks of 32, 64, and 256.

Below is the code used to create the EoRA adapter with GPTQModel:

```python
from datasets import load_dataset
from gptqmodel import GPTQModel
from gptqmodel.adapter.adapter import Lora

# Calibration data: the first 1,024 texts of one C4 training shard
calibration_dataset = load_dataset(
    "allenai/c4",
    data_files="en/c4-train.00001-of-01024.json.gz",
    split="train",
    download_mode="force_redownload",
).select(range(1024))["text"]

# Where the generated EoRA adapter will be saved
eora_adapter_path = "Qwen3-32B-autoround-2bit-gptq-r256"
# 2-bit AutoRound model (GPTQ format) whose quantization error is compensated
model_path = "kaitchup/Qwen3-32B-autoround-2bit-gptq"

eora = Lora(
    path=eora_adapter_path,
    rank=256,  # LoRA rank of the EoRA adapter
)

# Generate the adapter from the full-precision model, its quantized version,
# and the calibration data
GPTQModel.adapter.generate(
    adapter=eora,
    model_id_or_path="Qwen/Qwen3-32B",  # original full-precision model
    quantized_model_id_or_path=model_path,
    calibration_dataset=calibration_dataset,
    calibration_dataset_concat_size=0,
    auto_gc=False,
)
```

Using an NVIDIA A100 GPU on RunPod, it took approximately 4 hours to generate the EoRA adapter for the model Qwen3-32B-autoround-2bit-gptq.

All EoRA adapters created for these models are publicly available (Apache 2.0 license):

Evaluating EoRA Adapters for 2-bit LLMs

Let’s evaluate the effect of the EoRA adapters. Do they improve the accuracy of the 2-bit models?

It works!

The improvements are particularly notable for Qwen3-14B and Qwen3-32B. For instance, applying EoRA to Qwen3-32B, quantized to 2-bit with a group size of 128, resulted in an accuracy gain of nearly 7.5 points. Increasing the LoRA rank from 32 to 64 also led to improvements, highlighting the impact of rank on performance.

EoRA is also effective on larger models like Qwen2.5-72B, though the gains are more modest. Lower-rank adapters showed little to no benefit on this model; it wasn’t until I increased the rank to 256 that significant improvements began to appear.

Memory Consumption of EoRA

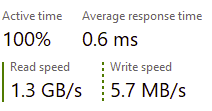

Using the EoRA adapter during inference results in the following increase in memory consumption:

The overhead is generally negligible. For instance, for 2-bit Qwen3-14B, rank-32 and rank-64 adapters add only 257 MB and 514 MB, respectively, to the total model size. With larger ranks, using an EoRA adapter becomes questionable, as the total memory consumption may exceed that of the same model quantized at a higher precision. For instance, 2-bit Qwen2.5-72B with a rank-256 EoRA adapter is larger than 3-bit Qwen2.5-72B.
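As a sanity check on these figures, the sketch below counts adapter parameters for every attention and MLP projection. It assumes three things:

- All seven projections of each decoder layer carry an adapter.
- The adapter weights are stored in FP16.
- The model follows Qwen3-14B's published configuration, used as the defaults: 40 layers, hidden size 5120, MLP size 17408, and 40 query heads and 8 key/value heads of dimension 128.

Under these assumptions, it lands on the 257 MB and 514 MB quoted for ranks 32 and 64.

```python
def lora_params_per_layer(hidden, intermediate, n_heads, n_kv_heads, head_dim, rank):
    """LoRA parameters for one decoder layer: rank * (d_in + d_out) per projection."""
    q_out = n_heads * head_dim
    kv_out = n_kv_heads * head_dim
    shapes = [  # (d_in, d_out) of each adapted linear layer
        (hidden, q_out),         # q_proj
        (hidden, kv_out),        # k_proj
        (hidden, kv_out),        # v_proj
        (q_out, hidden),         # o_proj
        (hidden, intermediate),  # gate_proj
        (hidden, intermediate),  # up_proj
        (intermediate, hidden),  # down_proj
    ]
    return sum(rank * (d_in + d_out) for d_in, d_out in shapes)


def adapter_size_mb(rank, n_layers=40, hidden=5120, intermediate=17408,
                    n_heads=40, n_kv_heads=8, head_dim=128, bytes_per_value=2):
    """Total adapter size in MB (1 MB = 1e6 bytes); defaults assume Qwen3-14B."""
    per_layer = lora_params_per_layer(hidden, intermediate, n_heads,
                                      n_kv_heads, head_dim, rank)
    return n_layers * per_layer * bytes_per_value / 1e6


if __name__ == "__main__":
    for rank in (32, 64, 256):
        print(f"rank {rank:>3}: {adapter_size_mb(rank):.0f} MB")
```

Because the adapter size grows linearly with the rank, a rank-256 adapter is eight times larger than a rank-32 one. This is why high ranks can erase the memory advantage of 2-bit quantization.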

Note: This estimate includes only the memory consumed by the adapter’s parameters. For completeness, we could also account for the memory used by adapter activations during inference. However, these are extremely small relative to other tensors (such as the model’s attention and MLP layers) and can safely be considered negligible.

Conclusion

EoRA works. We’ve confirmed that it’s a simple yet effective method for compensating for quantization errors, even at 2-bit precision. It’s intuitive, training-free, and delivers meaningful performance gains. That said, there are a few trade-offs to consider:

- Rank search: Finding the optimal LoRA rank requires experimentation. It’s difficult to predict in advance whether a rank of 32 will be sufficient or whether a higher rank, like 256, will cause overfitting. The optimal value depends on the model, calibration data, and target task.

- Increased memory consumption: The goal of quantization is to reduce memory usage, often for highly constrained environments. While EoRA adapters are relatively lightweight at lower ranks, they do slightly increase memory consumption, particularly at higher ranks, reducing the overall efficiency of 2-bit quantization.

Looking ahead, NVIDIA’s paper also demonstrates that EoRA adapters make excellent starting points for QLoRA fine-tuning. In other words, if you plan to fine-tune a 2-bit model using QLoRA, initializing from an EoRA-adapted model can lead to better results with less training effort. I wrote about fine-tuning adapters for GPTQ models last year in my newsletter:

QLoRA with AutoRound: Cheaper and Better LLM Fine-tuning on Your GPU

The main difference is that, instead of initializing the adapter from scratch, we would load the EoRA adapter and fine-tune it.

References

[1] Dettmers et al., QLoRA: Efficient Finetuning of Quantized LLMs (2023), arXiv

[2] Badri and Shaji, Towards 1-bit Machine Learning Models (2024), Mobius Labs’ Blog

[3] Liu et al., EoRA: Training-free Compensation for Compressed LLM with Eigenspace Low-Rank Approximation (2024), arXiv

