Liquid Clustering: Optimizing Databricks Workloads for Performance and Cost

We are currently in an era where data is a goldmine. The amount of data stored in data stores is increasing rapidly, and the patterns of queries for each dataset are changing swiftly. This is due to the rising number of data users, automated systems, and AI tools that are accessing these datasets. As a result, there are greater challenges in maintaining the organization of the data efficiently to ensure optimal query performance and support concurrent writing, all while minimizing the total cost of ownership (TCO). When working with big data ecosystems like Databricks, data is stored in Delta Lake. Traditionally, optimization methods such as partitioning and z-ordering have been used to improve organization and querying. However, these methods have their limitations. In response, Databricks introduced Liquid Clustering, a revolutionary and simplified approach to data layout and optimization. This innovative feature promises significant cost savings and performance enhancements. The Challenges of Traditional Data Layouts Traditional partitioning and z-ordering can be effective for specific query patterns, but they also lead to several challenges, including: Data Skew: This occurs when data is unevenly distributed across partitions, resulting in some partitions being significantly larger than others. Partition Explosion: The creation of an excessive number of small partitions can cause metadata overhead and slow down query performance. Rigid Structure: Determining partition columns is often complicated. The need to predefine partition keys makes it difficult to adapt to changing query patterns. Limited Optimization for Joins: While z-ordering can enhance performance for certain queries, it may not be optimal for complex join operations. Expensive Writes: Z-ordering can lead to prolonged and costly write operations. Writes are often not incremental, and concurrent writes within the same partition can be challenging. This extends the duration of clustering jobs and raises computational costs. Enter Liquid Clustering Enter Liquid Clustering Liquid Clustering addresses these challenges through several innovative approaches: Dynamic Clustering: Rather than relying on static hive-partitioning, data is dynamically clustered based on clustering keys. This enables a more flexible and adaptive data layout, with Databricks managing all data-related decisions. Automatic Liquid Clustering: Databricks maintains metadata to track the physical location of data blocks. Automatic Liquid Clustering uses Predictive Optimization algorithms to self-tune the physical layout of data based on query patterns, reducing data skew and optimizing query performance, cost, and storage efficiency. Reduced Metadata Overhead: By minimizing excessive partitioning, Liquid Clustering decreases metadata overhead, leading to faster query planning and execution. Optimized for Joins: Liquid Clustering can adjust the data layout to enhance the performance of join operations, significantly improving results for complex queries. Optimized Writes: Liquid Clustering allows for cost-effective incremental clustering with low write amplification. It optimizes the data layout during write operations and background maintenance processes, eliminating the need for a complete data rewrite when cluster keys change. Let's explore Liquid Clustering with an example table. Consider that we have impressions data (TB scale) from the advertising campaigns run by a company. When this data is written each day without a data layout configuration, it is incrementally written to the files, resulting in a following data layout. Assume the user's frequent query pattern for accessing data from the ‘dcm_impression’ table is based on the event ‘date’ and ‘campaign_name’. SELECT * FROM dcm_impression WHERE date = '04/29/2025' AND campaign_name= 'C' The query engine leverages Delta data skipping statistics(min/max values, null counts, and total records per file) to identify the relevant files to scan. In this scenario, the engine needs to read 5 files out of 10, yielding a pruning rate of 50%. The primary issue here is that the data for campaign C is not collocated in a single file. The query engine will determine campaign C might be lying somewhere between min and max within each file for ‘4/29/2025’. The extraction of all records for campaign C also requires reading a significant number of entries for other campaigns from each of the 5 files. To improve query performance, we can enable Liquid Clustering. There are two ways to enable Liquid Clustering: Manually Using ALTER and OPTIMIZE commands In this example, we use the following ALTER command to enable liquid clustering with the new clustering keys: 'date' and 'campaign_name'. The ALTER will register the cluster keys in the log and create a new data layout. The data will reorganize into the new layout only when the OPTIMIZE command is executed. For a valid reason, the data

We are currently in an era where data is a goldmine. The amount of data stored in data stores is increasing rapidly, and the patterns of queries for each dataset are changing swiftly. This is due to the rising number of data users, automated systems, and AI tools that are accessing these datasets. As a result, there are greater challenges in maintaining the organization of the data efficiently to ensure optimal query performance and support concurrent writing, all while minimizing the total cost of ownership (TCO).

When working with big data ecosystems like Databricks, data is stored in Delta Lake. Traditionally, optimization methods such as partitioning and z-ordering have been used to improve organization and querying. However, these methods have their limitations. In response, Databricks introduced Liquid Clustering, a revolutionary and simplified approach to data layout and optimization. This innovative feature promises significant cost savings and performance enhancements.

The Challenges of Traditional Data Layouts

Traditional partitioning and z-ordering can be effective for specific query patterns, but they also lead to several challenges, including:

Data Skew: This occurs when data is unevenly distributed across partitions, resulting in some partitions being significantly larger than others.

Partition Explosion: The creation of an excessive number of small partitions can cause metadata overhead and slow down query performance.

Rigid Structure: Determining partition columns is often complicated. The need to predefine partition keys makes it difficult to adapt to changing query patterns.

Limited Optimization for Joins: While z-ordering can enhance performance for certain queries, it may not be optimal for complex join operations.

Expensive Writes: Z-ordering can lead to prolonged and costly write operations. Writes are often not incremental, and concurrent writes within the same partition can be challenging. This extends the duration of clustering jobs and raises computational costs.

Enter Liquid Clustering

Enter Liquid Clustering

Liquid Clustering addresses these challenges through several innovative approaches:

Dynamic Clustering: Rather than relying on static hive-partitioning, data is dynamically clustered based on clustering keys. This enables a more flexible and adaptive data layout, with Databricks managing all data-related decisions.

Automatic Liquid Clustering: Databricks maintains metadata to track the physical location of data blocks. Automatic Liquid Clustering uses Predictive Optimization algorithms to self-tune the physical layout of data based on query patterns, reducing data skew and optimizing query performance, cost, and storage efficiency.

Reduced Metadata Overhead: By minimizing excessive partitioning, Liquid Clustering decreases metadata overhead, leading to faster query planning and execution.

Optimized for Joins: Liquid Clustering can adjust the data layout to enhance the performance of join operations, significantly improving results for complex queries.

Optimized Writes: Liquid Clustering allows for cost-effective incremental clustering with low write amplification. It optimizes the data layout during write operations and background maintenance processes, eliminating the need for a complete data rewrite when cluster keys change.

Let's explore Liquid Clustering with an example table. Consider that we have impressions data (TB scale) from the advertising campaigns run by a company. When this data is written each day without a data layout configuration, it is incrementally written to the files, resulting in a following data layout.

Assume the user's frequent query pattern for accessing data from the ‘dcm_impression’ table is based on the event ‘date’ and ‘campaign_name’.

SELECT * FROM dcm_impression WHERE date = '04/29/2025' AND campaign_name= 'C'

The query engine leverages Delta data skipping statistics(min/max values, null counts, and total records per file) to identify the relevant files to scan. In this scenario, the engine needs to read 5 files out of 10, yielding a pruning rate of 50%.

The primary issue here is that the data for campaign C is not collocated in a single file. The query engine will determine campaign C might be lying somewhere between min and max within each file for ‘4/29/2025’. The extraction of all records for campaign C also requires reading a significant number of entries for other campaigns from each of the 5 files.

To improve query performance, we can enable Liquid Clustering. There are two ways to enable Liquid Clustering:

Manually Using ALTER and OPTIMIZE commands

In this example, we use the following ALTER command to enable liquid clustering with the new clustering keys: 'date' and 'campaign_name'. The ALTER will register the cluster keys in the log and create a new data layout. The data will reorganize into the new layout only when the OPTIMIZE command is executed. For a valid reason, the data team may choose not to execute the OPTIMIZE command right away.

ALTER TABLE dcm_impression CLUSTER BY (date, campaign_name);

OPTIMIZE dcm_impression;

In the manual approach, it is necessary to regularly monitor the query patterns. If there is a change in frequent query patterns, data team must evaluate the overhead cost of reclustering against the potential performance gains before altering the clustering keys. Managing decisions related to data layout design and implementing changes can be time-consuming and complex for a data team. Let's explore what else can be done in the next section.

Evolve Clustering through Automatic Liquid Clustering

Note: At the time of writing this article, automatic Liquid clustering is in Public Preview.

Automatic Liquid Clustering simplifies data management by eliminating the need for manual tuning. Predictive Optimization harnesses the power of Unity Catalog to monitor and analyze data and query patterns.

For our example, the following command will enable the automatic liquid clustering for Unity Catalog managed table- dcm_impression.

ALTER TABLE dcm_impression CLUSTER BY AUTO;

Once enabled, Predictive Optimization will take care of harder data management problems i.e. cluster key selection and evolve data layout based on the query pattern by continuously performing the following:

Note: The image is taken from the Databricks website.

Telemetry: To determine if dcm_impression will benefit from the Liquid Clustering, Predictive optimization analyzes the query filters from the transaction logs (including metadata within logs). It determines event date and campaign_name are frequently queried.

Perform Model Evaluation: Predictive Optimization involves a comprehensive evaluation of query workloads to identify the most effective clustering keys for maximizing data-skipping efficiency.

This process leverages insights gained from historical query patterns to estimate the potential performance enhancements associated with various clustering schemes. By simulating past queries, it predicts how effectively each option can reduce the amount of data that needs to be scanned.

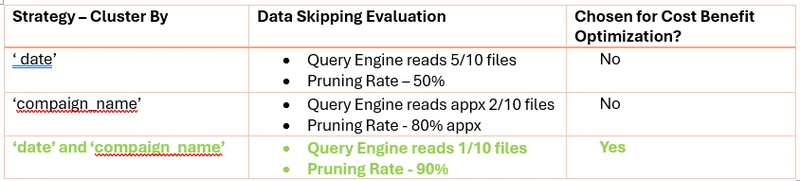

There are potentially 3 strategies Predictive Optimization discovers:

In the illustration below, with the liquid clustering on the ‘date’ and ‘compaign_name’, query engine will read the file that contains records for Campaign C and the rest of the files will be skipped. The min and max (data skipping) for this file is C.

Cost Benefit Optimization: Clustering or re-clustering always incurs some overhead. Therefore, the next step in predictive optimization is to perform a cost-benefit analysis for option number 3 mentioned earlier. Predictive optimization will assess whether the performance benefit of the query outweighs its associated costs. In our case, the pruning rate with the clustering keys "date" and "campaign_name" is 90%, and these columns are frequently queried. As a result, the benefits are substantial and justify clustering based on these columns. Predictive optimization will then proceed to update this Unity Catalog managed table, adding these columns as new cluster keys. The automatic decision-making of predictive optimization helps minimize the total cost of ownership (TCO).

Liquid Clustering Optimizes Writes

Liquid Clustering in Databricks achieves the ability to cluster data without rewriting the entire dataset in the traditional sense by leveraging the Delta Lake transaction log and a process of incremental clustering.

Here's a breakdown of the technical details:

1. Delta Lake Transaction Log as the Source of Truth:

- Delta Lake maintains a transaction log (stored in the delta_log directory of your table's storage). This log records every change made to the table as atomic commits.

- Each commit contains metadata about the changes, including which data files were added or removed.

- The transaction log provides a historical record of the table's state and is the single source of truth for the current and past versions of the data.

2. Incremental Clustering on Write:

When Liquid Clustering is enabled on a Delta table using ALTER TABLE ... CLUSTER BY (columns), Databricks doesn't immediately rewrite all existing data. Instead, it flags the table to use Liquid Clustering for future write operations.

During subsequent INSERT INTO, CTAS (CREATE TABLE AS SELECT), RTAS (REPLACE TABLE AS SELECT), COPY INTO, and append mode writes: Databricks' Spark engine intelligently organizes the newly written data based on the specified clustering keys.

This means that only the incoming data is clustered according to the defined columns. Existing data files that were written before Liquid Clustering was enabled, or written with different clustering keys, are not immediately rewritten.

The data that was clustered based on the old keys remains as is until it is rewritten by a future OPTIMIZE operation. This provides flexibility to adapt to evolving query patterns without a massive upfront cost.

3. OPTIMIZE Command for Reclustering (When Needed):

- While Liquid Clustering avoids rewriting all data on every write, the OPTIMIZE command plays a crucial role in further improving the data layout and consolidating files.

- When you run OPTIMIZE on a Liquid Clustered table: Databricks reads the existing data files and the transaction log. It then rewrites data as necessary to achieve a more efficient clustering based on the current clustering keys. This might involve merging smaller files and further organizing data based on the clustering columns. OPTIMIZE is the operation that actively rearranges the physical data on disk according to the clustering configuration.

- The key difference from traditional Z-ordering is that OPTIMIZE with Liquid Clustering is often more incremental and adaptive. It doesn't necessarily rewrite the entire table every time, especially if the existing data layout is already reasonably aligned with the clustering keys.

- **OPTIMIZE FULL: **In Delta Lake 3.3+, you can use OPTIMIZE FULL to force a reclustering of all records in the table, which is useful after changing clustering columns.

This incremental and metadata-driven approach makes Liquid Clustering more efficient and flexible than traditional data layout optimization techniques.

Data Volume Considerations

It's less about a strict "minimum volume" and more about the characteristics of your data such as cardinality, skew and query patterns. Liquid Clustering's advantages become increasingly apparent as the data volume grows. The optimizations it provides have a more significant impact on performance when dealing with terabytes or petabytes of data. Like any optimization technique, Liquid Clustering introduces some overhead. For very small datasets with extremely simple queries, the overhead might outweigh the benefits. However, in most real-world scenarios, the performance gains may far exceed the overhead.

Additionally, Databricks documentation provides thresholds relating to the number of clustering columns, and related data sizes. This is more of a guideline for how the system manages the data, and not a minimum data size for usage.

Clustering the Entire Data Lake

When you have several unrelated datasets in Delta tables serving distinct data products, using Liquid Clustering requires careful consideration of each dataset's unique characteristics and query patterns. If you are using Medallion Architecture start at the gold level. Here's a breakdown of how to approach this scenario:

- Isolate and Analyze Each Dataset: Each dataset, even if stored within the same Delta Lake, should be treated as a separate entity for Liquid Clustering purposes. For each dataset, thoroughly analyze the queries that are executed against it. Identify the most common filter columns, join keys, and aggregation columns. Evaluate the data's characteristics, including cardinality, skew, and data volume. As a next step you may choose to manually configure Liquid Clustering for each dataset or set to AUTO for all the datasets or take a hybrid approach. Follow the following steps for the manual approach.

- Define Clustering Keys for Each Dataset: Select clustering keys for each dataset based on its specific query patterns. Prioritize columns that are frequently used in filters, joins, and aggregations. Avoid clustering on columns with extremely high cardinality or significant skew, as this can negatively impact performance. Liquid Clustering supports multiple clustering keys, allowing you to optimize for a variety of query patterns.

- Implement Liquid Clustering Separately: Configure Liquid Clustering independently for each Delta table. This includes defining the clustering keys and setting any relevant configuration options. Schedule regular OPTIMIZE operations for each table to maintain an efficient data layout. The frequency of optimization should be based on the data's update frequency and query patterns.

- Monitoring and Adjustment: Continuously monitor the performance of queries against each dataset. Identify any performance bottlenecks and adjust the clustering keys or optimization schedule as needed. Be prepared to adapt the Liquid Clustering configuration as data and query patterns evolve.

Key Considerations:

- Liquid Clustering requires compute resources for optimization. Ensure that you have adequate resources to handle the optimization of multiple datasets.

- Ensure that the Liquid Clustering configuration meets the specific performance requirements of each data product.

- Maintain clear documentation of the Liquid Clustering configuration for each dataset to ensure data governance and consistency.

- Do not attempt to apply one liquid clustering strategy across multiple datasets, even if they reside in the same delta lake.

Guidance for Verifying Effectiveness

Once Automatic Liquid Clustering is enabled on your Delta table, verifying cost minimization and improved query efficiency requires a combination of monitoring query performance, analyzing cloud resource consumption, and understanding the characteristics of your data and workloads. Here's a breakdown of what to verify and how:

To verify Automatic Liquid Clustering's cost and efficiency benefits:

- **Monitor Query Performance: **Compare query execution times and data scanned (EXPLAIN) before and after enabling it. Look for faster queries and less data processed in Databricks query history and Spark UI. Check for reduced shuffle sizes in joins.

- Analyze Cloud Costs: Track your Databricks compute unit (DBU) consumption for analytical workloads, aiming for a decrease. Monitor storage I/O if your cloud provider offers such metrics, expecting lower reads. Importantly, ensure cost due to predictive optimization (AI) is not increasing over time and net benefit is well above the overall cost.

- Understand Clustering: Verify that the automatically chosen clustering columns align with your frequently used filter and join columns. Observe if data skew is reduced over time.

If you don't see improvements:

- Review the chosen clustering columns and your query patterns.

- Consider your data characteristics (e.g., high cardinality).

- Allow time for the automatic clustering to work with new data.

By systematically monitoring these aspects, you can gain confidence that Automatic Liquid Clustering is effectively minimizing costs and improving query efficiency for your Delta tables. Remember that the benefits might accrue over time as more data is ingested and the automatic clustering has more opportunities to optimize the layout.

Conclusion

Databricks' Liquid Clustering represents a significant advancement in data lake optimization. By dynamically adapting data layout to query patterns, Liquid Clustering delivers substantial cost savings and performance improvements. As data-lake grow and complexity increases, Liquid Clustering will become an increasingly essential tool for optimizing Databricks workloads. By embracing this technology, organizations can unlock the full potential of their data and drive data-driven innovation more efficiently. However, while opting for Automatic Liquid Clustering can be very beneficial, it's important to remain vigilant in making AI-related decisions and managing costs effectively.

![[The AI Show Episode 146]: Rise of “AI-First” Companies, AI Job Disruption, GPT-4o Update Gets Rolled Back, How Big Consulting Firms Use AI, and Meta AI App](https://www.marketingaiinstitute.com/hubfs/ep%20146%20cover.png)

![[DEALS] The Premium Python Programming PCEP Certification Prep Bundle (67% off) & Other Deals Up To 98% Off – Offers End Soon!](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

_Aleksey_Funtap_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

_Sergey_Tarasov_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![Apple Developing New Chips for Smart Glasses, Macs, AI Servers [Report]](https://www.iclarified.com/images/news/97269/97269/97269-640.jpg)

![Apple Shares New Mother's Day Ad: 'A Gift for Mom' [Video]](https://www.iclarified.com/images/news/97267/97267/97267-640.jpg)

![Apple Shares Official Trailer for 'Stick' Starring Owen Wilson [Video]](https://www.iclarified.com/images/news/97264/97264/97264-640.jpg)