Handling Transactions on Large Tables in High-Traffic C# Applications

Working with large database tables in high-traffic environments can be challenging, especially when performing updates. As a C# developer, you need strategies to optimize transactions, reduce timeouts, and maintain performance. Here’s how to approach this problem.

1. Optimize Transactions

Transactions are critical for data consistency but can become bottlenecks.

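- Keep Transactions Short: Minimize the time a transaction remains open. Split large operations into smaller batches (e.g., update 1,000 rows at a time) and commit each batch in its own transaction, so locks are released between batches. The trade-off is that the operation as a whole is no longer atomic, so it must be safe to resume after a partial run.

```csharp
// Execute is assumed to be Dapper's extension method (using Dapper;).
// Each batch gets its own short transaction.
for (int i = 0; i < totalRecords; i += batchSize)
{
    var batch = GetBatchData(i, batchSize);

    using (var transaction = connection.BeginTransaction())
    {
        // COLUMN is a reserved word in T-SQL, so the name is bracketed.
        connection.Execute(
            "UPDATE LargeTable SET [Column] = @Value WHERE Id = @Id",
            batch,
            transaction);
        transaction.Commit();
    }
}
```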

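- Use Read Committed Snapshot Isolation (RCSI): Enable RCSI in SQL Server (`ALTER DATABASE YourDatabase SET READ_COMMITTED_SNAPSHOT ON`, where `YourDatabase` is a placeholder) to reduce blocking between readers and writers. Under RCSI, each statement reads a consistent snapshot of committed data from the row version store instead of taking shared locks, so readers do not block writers and writers do not block readers. Writers still block other writers, and the version store adds load on tempdb.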
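Returning to the batching advice above, the splitting step can be sketched on its own. This is a minimal illustration, assuming .NET 6 or later (for `Enumerable.Chunk`) and that the rows to update are identified by a list of integer ids; `BatchRunner` and `RunInBatches` are hypothetical names, not part of any library.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class BatchRunner
{
    // Splits ids into batches of at most batchSize and calls applyBatch once
    // per batch. Returns the number of batches processed.
    public static int RunInBatches(
        IEnumerable<int> ids,
        int batchSize,
        Action<IReadOnlyList<int>> applyBatch)
    {
        if (batchSize <= 0)
            throw new ArgumentOutOfRangeException(nameof(batchSize));

        int batches = 0;
        foreach (int[] batch in ids.Chunk(batchSize)) // Enumerable.Chunk, .NET 6+
        {
            // The caller is expected to open, use, and commit one short
            // transaction inside applyBatch.
            applyBatch(batch);
            batches++;
        }
        return batches;
    }
}
```

In this sketch, `applyBatch` is where a short transaction would be opened, the `UPDATE` for those ids executed, and the transaction committed before the next batch starts.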

2. Tune Queries and Indexes

Slow queries are a common cause of timeouts.

- Optimize Indexes: Ensure indexes exist on columns used in `WHERE`, `JOIN`, or `ORDER BY` clauses. Avoid over-indexing, as it slows down writes.
  - Use SQL Server execution plans to identify missing indexes.

- Filter and Batch Updates: Update only necessary columns and rows. Use `WHERE` clauses to target specific data.

```sql
UPDATE LargeTable
SET Status = 'Processed'
WHERE Status = 'Pending' AND CreatedDate < DATEADD(DAY, -1, GETDATE());
```
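One way to combine the filter with batching is to cap each statement with `UPDATE TOP (@BatchSize)` and repeat until no rows are affected. The sketch below shows only the loop; `BatchedUpdate`, `RunUntilDone`, and the `executeBatch` delegate are hypothetical names introduced for illustration. The approach relies on updated rows no longer matching `Status = 'Pending'`, so each pass picks up fresh rows.

```csharp
using System;

public static class BatchedUpdate
{
    // Same filter as the statement above, limited to @BatchSize rows per run.
    public const string Sql =
        "UPDATE TOP (@BatchSize) LargeTable SET Status = 'Processed' " +
        "WHERE Status = 'Pending' AND CreatedDate < DATEADD(DAY, -1, GETDATE());";

    // executeBatch runs Sql once and returns the number of rows affected.
    // The loop stops when a run affects no rows, or after maxBatches runs
    // as a safety limit. Returns the total number of rows updated.
    public static long RunUntilDone(Func<int> executeBatch, int maxBatches = 10_000)
    {
        long total = 0;
        for (int i = 0; i < maxBatches; i++)
        {
            int affected = executeBatch();
            if (affected <= 0) break;
            total += affected;
        }
        return total;
    }
}
```

With Dapper, the delegate might be `() => connection.Execute(BatchedUpdate.Sql, new { BatchSize = 1000 })`, since `Execute` returns the affected row count.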

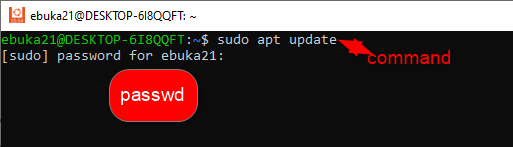

3. Handle Timeouts Gracefully

Timeouts often occur due to resource contention or long-running queries.

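- Increase Command Timeout: Adjust the timeout for specific operations in C#. `CommandTimeout` is measured in seconds and defaults to 30, so raise it only for commands that are known to run long:

```csharp
using (var command = new SqlCommand(query, connection))
{
    command.CommandTimeout = 120; // seconds; the default is 30
    command.ExecuteNonQuery();
}
```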

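- Implement Retry Logic: Use libraries like Polly to retry transient errors (e.g., deadlocks). SQL Server rolls back the transaction of the deadlock victim, so the retried delegate must rerun the whole transaction, not just the failed statement:

```csharp
var retryPolicy = Policy
    .Handle<SqlException>(ex => ex.Number == 1205) // 1205 = chosen as deadlock victim
    .WaitAndRetry(3, retryAttempt => TimeSpan.FromSeconds(2)); // up to 3 retries, 2 s apart

retryPolicy.Execute(() => UpdateLargeTable());
```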
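For readers who want to see what such a policy does under the hood, here is a dependency-free sketch of retry with exponential backoff. It is not Polly's implementation. `Retry.Execute`, `isTransient`, and the injectable `sleep` delegate are hypothetical names; the delay doubles on each retry rather than staying fixed at two seconds.

```csharp
using System;

public static class Retry
{
    // Runs action, retrying up to maxRetries times when isTransient(ex) is true.
    // Waits baseDelay, then 2x, then 4x, ... between attempts.
    // sleep is injected so callers can pass Thread.Sleep (or a fake in tests).
    public static T Execute<T>(
        Func<T> action,
        Func<Exception, bool> isTransient,
        int maxRetries,
        TimeSpan baseDelay,
        Action<TimeSpan> sleep)
    {
        for (int attempt = 0; ; attempt++)
        {
            try
            {
                return action();
            }
            catch (Exception ex) when (attempt < maxRetries && isTransient(ex))
            {
                // Exponential backoff: baseDelay * 2^attempt.
                sleep(TimeSpan.FromTicks(baseDelay.Ticks << attempt));
            }
        }
    }
}
```

For the deadlock case, `isTransient` could be `ex => ex is SqlException sql && sql.Number == 1205`, and `sleep` could be `Thread.Sleep`. Non-transient exceptions, and the last failure after `maxRetries` retries, propagate to the caller.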

4. Scale and Maintain Tables

- Partition Large Tables: Split tables into smaller partitions by date or category (e.g., monthly partitions). This can reduce lock contention and lets queries that filter on the partitioning column skip irrelevant partitions.

- Archive Old Data: Move historical data to an archive table to keep the main table lean.

- Update Statistics Regularly: Ensure the database optimizer has up-to-date statistics for efficient query plans (for example, with `UPDATE STATISTICS` or `sp_updatestats`).
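As an illustration of monthly partitioning, the sketch below computes first-of-month boundary dates and builds a `CREATE PARTITION FUNCTION` statement from them. `MonthlyPartitions`, its methods, and the function name `pfMonthly` are hypothetical, and the sketch assumes the partitioning column is of type `date`. The function name is interpolated as-is, so it must come from trusted code, never from user input.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

public static class MonthlyPartitions
{
    // Returns `count` consecutive first-of-month dates, starting with the
    // month that contains firstMonth.
    public static IReadOnlyList<DateTime> Boundaries(DateTime firstMonth, int count)
    {
        var start = new DateTime(firstMonth.Year, firstMonth.Month, 1);
        return Enumerable.Range(0, count).Select(i => start.AddMonths(i)).ToList();
    }

    // Builds a RANGE RIGHT partition function, so each boundary date is the
    // first day belonging to its partition.
    public static string CreateFunctionSql(string name, IEnumerable<DateTime> boundaries)
    {
        var values = string.Join(", ", boundaries.Select(
            d => "'" + d.ToString("yyyy-MM-dd", CultureInfo.InvariantCulture) + "'"));
        return $"CREATE PARTITION FUNCTION {name} (date) AS RANGE RIGHT FOR VALUES ({values});";
    }
}
```

A partition scheme and a table created on that scheme would still be needed. This only shows how the boundaries might be generated.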

5. Use Asynchronous Programming

For high-traffic apps, use async/await to avoid blocking threads:

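```csharp
public async Task UpdateRecordAsync(int id)
{
    using (var connection = new SqlConnection(connectionString))
    {
        // ExecuteAsync is assumed to be Dapper's extension method;
        // it opens the connection if it is not already open.
        // COLUMN is a reserved word in T-SQL, so the name is bracketed.
        await connection.ExecuteAsync(
            "UPDATE LargeTable SET [Column] = @Value WHERE Id = @Id",
            new { Value = "NewData", Id = id }
        );
    }
}
```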
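When many such async updates are started at once, it can help to cap how many run concurrently so the connection pool is not exhausted. The following is a minimal sketch using `SemaphoreSlim`; `Throttled.ForEachAsync` is a hypothetical helper, and the right limit depends on the pool size and workload.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class Throttled
{
    // Runs work for every item, with at most maxConcurrency calls in flight.
    public static async Task ForEachAsync<T>(
        IEnumerable<T> items,
        int maxConcurrency,
        Func<T, Task> work)
    {
        if (maxConcurrency <= 0)
            throw new ArgumentOutOfRangeException(nameof(maxConcurrency));

        using var gate = new SemaphoreSlim(maxConcurrency);

        var tasks = items.Select(async item =>
        {
            await gate.WaitAsync();   // wait for a free slot
            try { await work(item); }
            finally { gate.Release(); }
        }).ToList();                  // ToList starts all tasks

        await Task.WhenAll(tasks);
    }
}
```

A call might look like `await Throttled.ForEachAsync(ids, 8, id => UpdateRecordAsync(id));`. On .NET 6 and later, the built-in `Parallel.ForEachAsync` covers a similar need.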

Final Tips

- Monitor Performance: Use tools like SQL Server Profiler or Application Insights to identify slow queries.

- Test Under Load: Simulate high traffic to uncover bottlenecks before deployment.

- Avoid Cursors: Use set-based operations instead of row-by-row processing.

By combining optimized code, smart database design, and proper error handling, you can maintain performance even when working with large tables in high-traffic C# applications.
