Google I/O 2025

Google I/O is Google’s annual developer extravaganza (this year held May 20–21, 2025), where the company showcases upcoming tech and software. In 2025 the theme was unmistakably AI-first: CEO Sundar Pichai opened by talking about “the progress we’re making in AI and applying it across our products.” I watched the two-hour keynote with my morning coffee, excited to see what Google had up its sleeve. It did not disappoint: dozens of announcements spanned Search, the Gemini AI assistant, generative creativity tools, and even futuristic gadgets. Here are the highlights I loved most.

Top announcements: Google’s keynote was packed, but the biggest highlights were:

- AI Mode in Search: Google launched AI Mode, a new AI-powered tab in Search that delivers conversational, in-depth answers. Features like Deep Search can handle longer research, and an AI shopping experience built on Gemini and Google’s Shopping Graph can even let you virtually try on clothes. (There’s also an agent that can help you buy event tickets.) Google says AI Mode is rolling out to everyone in the U.S., with newer features like Deep Search arriving first in Labs for people who opt in.

- Gemini AI upgrades: The Gemini assistant got a big boost. According to Google, the new Gemini 2.5 models (in Pro and Flash versions) lead several key AI benchmarks. In the app you can create quizzes, have Gemini work across other Google apps (like adding events to Calendar mid-chat), and soon an experimental Agent Mode will complete tasks on your behalf. Best of all, Gemini Live, the voice-and-vision assistant, is now free for everyone on Android and iOS, with full screen sharing coming to iPhones soon. In short, Gemini just got a lot more powerful (and accessible).

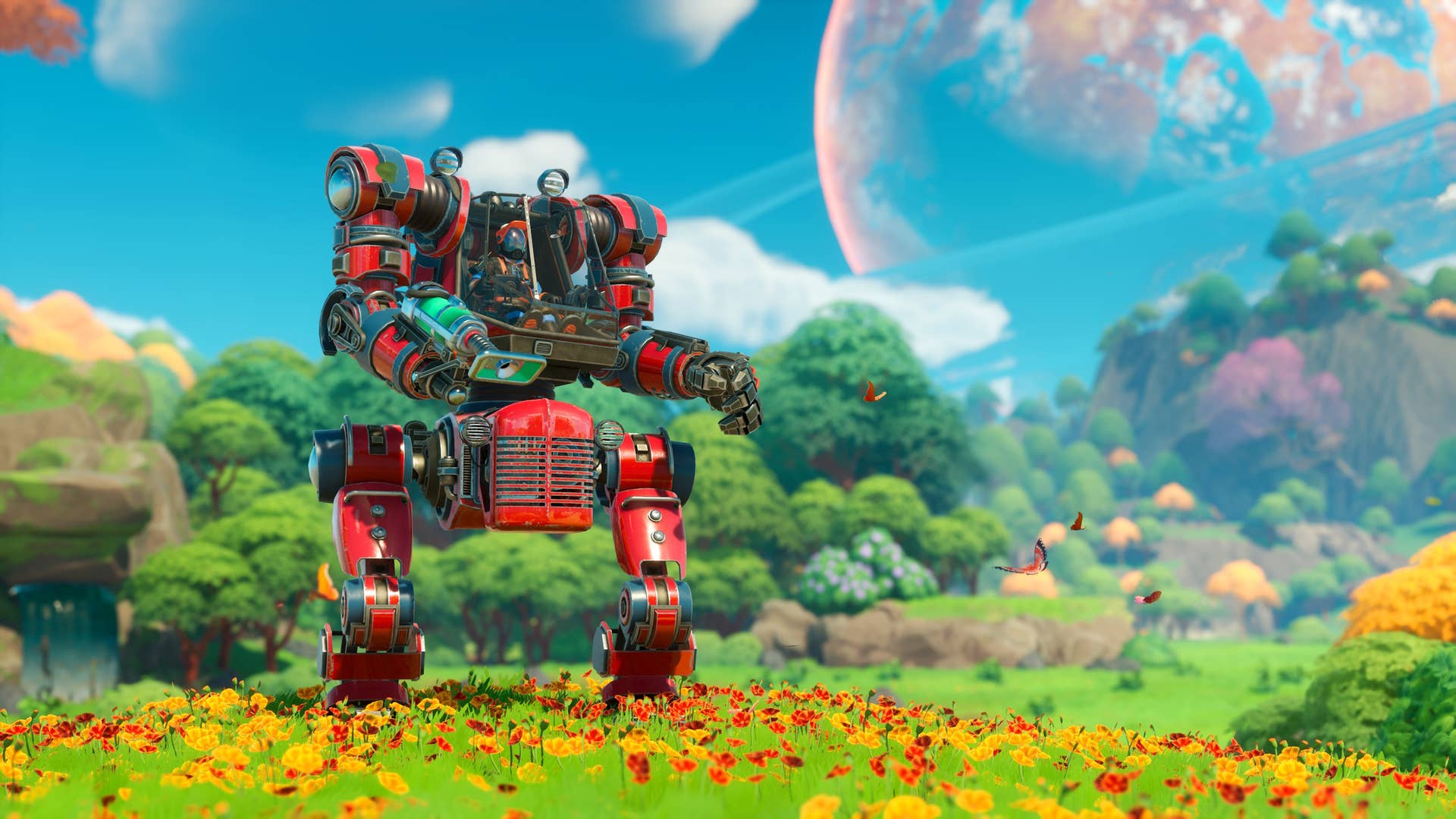

- Generative media tools: Google showed off next-gen creative AI. Imagen 4, its image generator, produces high-resolution images with fine textures and noticeably more accurate text. Veo 3 is a brand-new video model that generates video with audio, including synced dialogue, which Google framed as the end of AI video’s “silent era.” Google even showed short films made with Veo. And the new Flow tool lets you craft cinematic clips from a script or scene description. Availability varies by tool, but Google AI Pro and Ultra subscribers get the broadest access right now.

- New AI-powered apps: We got some practical releases too. NotebookLM (the app that turns your documents into audio overviews) is now available on Android and iOS; imagine listening to a summary of your lecture notes or PDFs! Google also announced SynthID Detector, a tool that helps spot AI-generated content carrying Google’s SynthID watermark. (With so much AI art around, it’s telling that even Google wants ways to verify authenticity.) On the developer side, Colab notebooks will soon become “agentic” (you simply tell Colab what you want done), and new GenAI APIs in ML Kit and Vertex AI will let programmers build Gemini-powered features into their apps.

- Android & hardware: On the device front, Google focused on future tech. We finally saw working Android XR smart glasses with Gemini built in. Google is developing them with Samsung (whose Project Moohan XR headset runs the same platform) and eyewear brands like Gentle Monster and Warby Parker. In a fun demo, NBA star Giannis Antetokounmpo wore the glasses for a real-time translation call, no bulky VR headset needed! (It was pretty “Black Mirror” in action.) Aside from that, the keynote skipped new Pixel phones and Android versions: no new Pixel or watch unveil here. Google is clearly saving hardware for later.

Search Gets Smarter (AI Mode)

Google Search is no longer just links and snippets; it’s basically a mini Gemini chatbot now. The new AI Mode tab (visible right in mobile Search) uses Google’s Gemini models to answer questions in depth. Need to plan a trip or learn a complex topic? You can ask follow-up questions conversationally. There’s a “Deep Search” option for multi-step research, and even an AI shopping assistant that can show you outfits and let you virtually try them on from a photo of yourself. Project Astra’s live capabilities are coming to Search too: later this summer you’ll be able to point your camera at something and talk to Google about what you see (a feature called “Search Live”). In short, googling in 2025 feels more like chatting with a knowledgeable friend, and that’s exactly what Google is aiming for.

Gemini Upgrades (Free Live, 2.5 Pro & Flash)

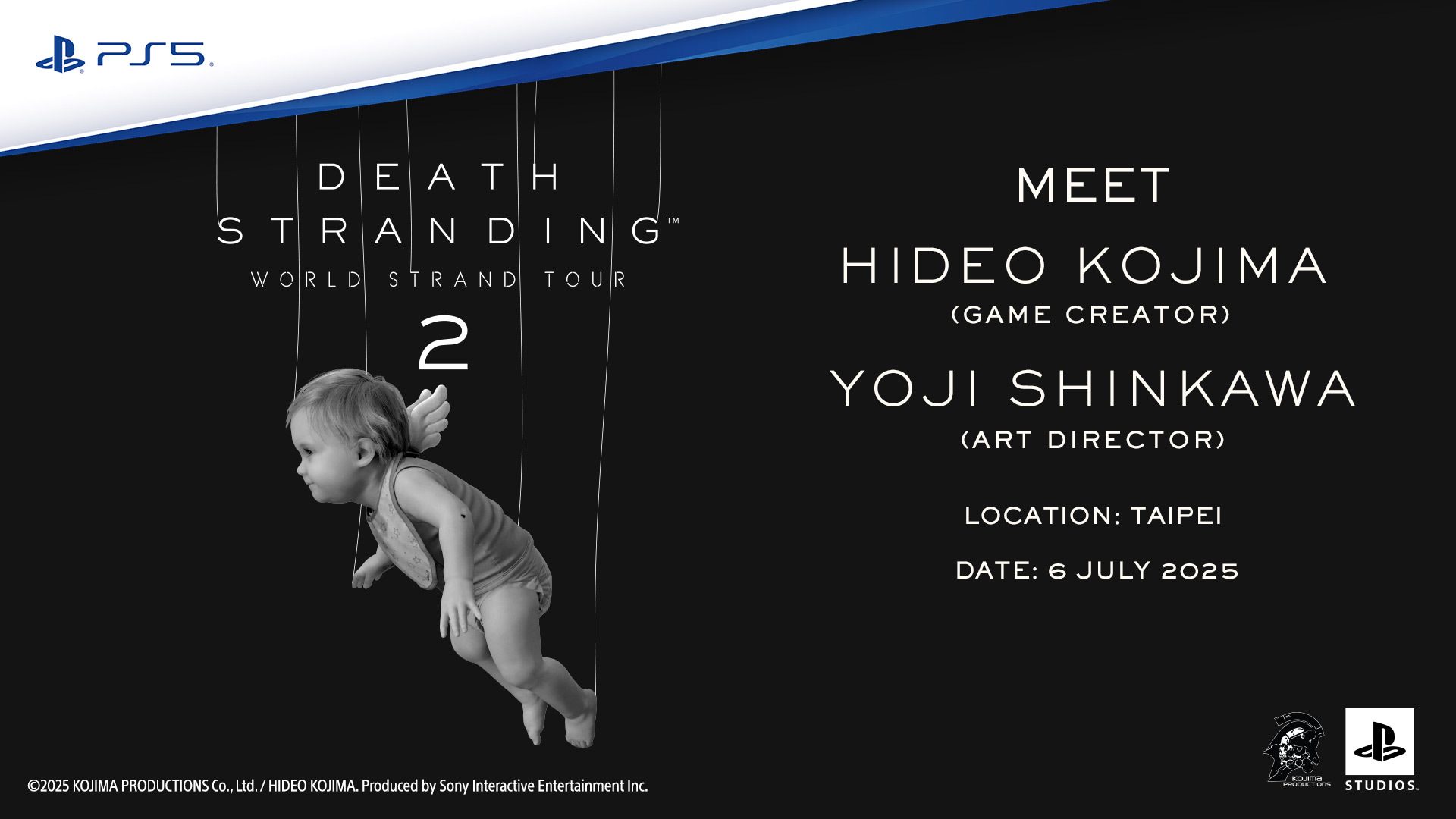

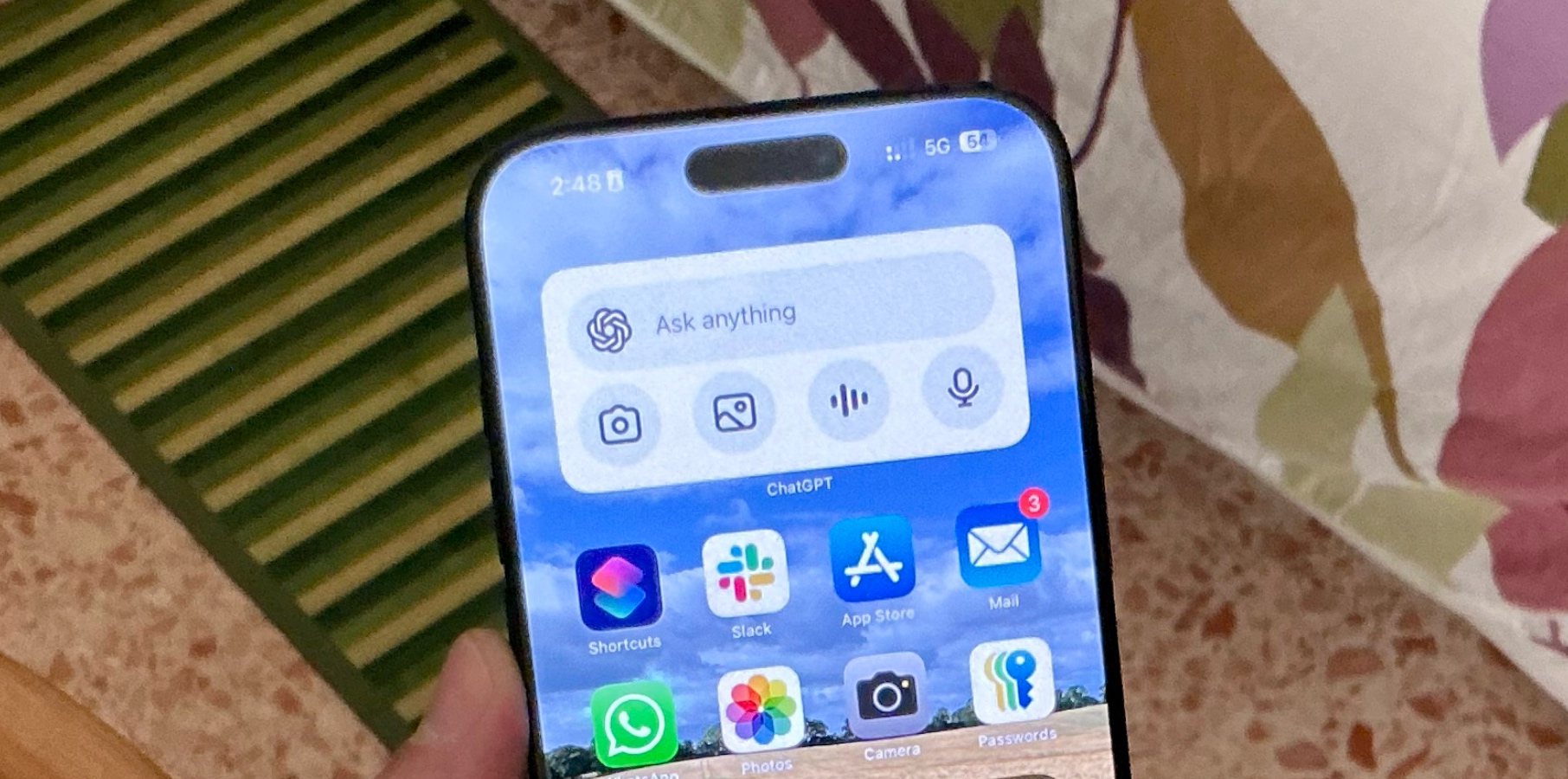

Screenshot of Google’s Gemini Live in action on a phone (it can talk, listen, and see through your camera).

One of the stars of the show was Gemini, Google’s AI assistant, and the keynote revealed plenty of new tricks. The latest Gemini 2.5 models (including a speed-optimized “Flash” edition) are now available, and Google says they lead major benchmarks for knowledge and reasoning. You can now ask Gemini to generate a quiz on any topic, or have it plan a weekend trip using Gmail, Calendar and Maps together.

Perhaps the biggest consumer-friendly news: Gemini Live, the conversational voice-and-camera assistant, is now free on both Android and iOS. That means you can chat with Gemini and show it what you’re looking at right from your phone, at no extra cost. On phones like the Pixel 9, for example, Gemini can literally “see” through your camera and answer questions about objects or scenes. (Google’s own demo showed Gemini Live walking a user through sharing their camera and controlling their device.) I can’t wait to try asking Gemini to help with everyday tasks just by talking; it really feels like having Jarvis from Iron Man in your pocket! Google also announced that the Gemini app now has over 400 million monthly active users, so it’s already everywhere. Personally, I’m thrilled that iPhone and Android users alike can now use Gemini Live without paying; that’s going to help make AI assistants mainstream.

Generative Creativity: Images, Video, Music

Google also went big on AI creativity. The new Imagen 4 model blew me away in the demos: it generates photorealistic images (even complex text and textures!) faster than before. Whether you want a detailed painting or a sci-fi scene, Imagen 4 delivers very high-quality results. Even cooler, Veo 3 is now out, and it can generate video with audio from a text prompt. Imagine typing “a cat plays piano in a jazz club” and getting a mini-movie with synchronized music and even meows! Google DeepMind CEO Demis Hassabis quipped that we’re “emerging from the silent era of video generation,” which felt fitting when Google showed a demo clip with talking characters.

Beyond images and video, Google introduced Flow, a new AI filmmaking tool. Flow lets anyone weave together short cinematic films by controlling characters, scenes, and styles with AI. (You could say, “Make a rainy urban scene in noir style,” and Flow generates it.) Flow is available now to Google AI Pro and Ultra subscribers in the U.S. Google also partnered with filmmaker Darren Aronofsky’s studio on short films made with these tools, one of which (“ANCESTRA”) will premiere at Tribeca this year. And for music lovers, Lyria 2 can generate vocals and harmonies, while the new Lyria RealTime model lets you make music interactively in real time. Taken together, these announcements signal that Google wants to put Hollywood-grade creative AI in everyone’s hands. I’m personally excited to try making a short film from just a text script!

Handy AI Apps and Tools

Aside from the big generative models, Google highlighted some fun new apps. The NotebookLM app (short for Notebook Language Model) is now available on Android and iPhone. NotebookLM ingests your documents or notes and turns them into audio “podcasts” or summaries. For instance, I could upload a research article and have the app create an audio overview to listen to on the go. Google also said NotebookLM will soon add Video Overviews (essentially turning slides or PDFs into narrated clips). The core app is free to use.

On the flip side, Google is also helping us tell AI content apart from real content. It announced SynthID Detector, a portal that identifies images, audio, video and text generated with Google’s AI tools and carrying its SynthID watermark. As AI art booms, tools like this will only grow in importance.

Finally, Google reminded us that many of these AI features are rolling out across its apps. Later this year Gmail will get Gemini-powered personalized smart replies and writing assistance, Google Calendar will help you plan tasks with AI, and Gemini will be built into Chrome on Windows and Mac. In short, whether you’re a student, a content creator or just a curious user, Google’s evolving toolkit means there’s a new AI feature around every corner.

Android, Devices, and (Not Much) Hardware

Despite all the AI talk, there were still a few hardware tidbits. First, no new Pixel phones or Android versions were announced at I/O; Google deliberately focused the keynote on AI, not gadgets. Android 16 had already been shown off ahead of I/O, and the next flagship Pixel (widely expected to be the Pixel 10) should arrive later in 2025. (Google’s store already sells the Pixel 9 Pro Fold with Gemini AI built in, but that was a separate, earlier launch.)

What did we see? A glimpse of the near future in the form of Android XR smart glasses. These lightweight glasses (built in partnership with Samsung and eyewear brands) can show information on an in-lens display, right in your field of view. In Google’s demo, NBA star Giannis Antetokounmpo wore the glasses and spoke through them to get a near-instant translation of the conversation. The glasses also feature Gemini AI: imagine walking down the street and asking your glasses to “identify that mountain,” or to “schedule this meeting” just by looking at a calendar on your wall. It felt like a scene from Minority Report. The glasses won’t be on shelves immediately, but Google is serious about bringing them to market with partners like Gentle Monster and Warby Parker.

(Image: Google’s latest Pixel 9 smartphone in “Coral,” shown next to Samsung’s Galaxy S25. Note: new Pixel devices weren’t announced at I/O, but Gemini features on Pixel phones are.)

Among consumer gadgets, the Pixel 9 pictured above is already in stores and shows how Google is pushing AI on mobile; for example, it can run Gemini Live so you can ask questions about what its camera sees. But at I/O itself, the takeaway was software over hardware. The keynote made no mention of new Pixel phones; instead, Google was all about AI: cloud, apps, Search, and yes, even future glasses.

Looking Forward – What’s Next?

Whew, that was a whirlwind! I/O 2025 made one thing clear: Google is doubling down on AI. Search will feel more like chatting with your smartest friend, Gemini is becoming incredibly powerful (and Gemini Live is now free!), and the tools for creating art, video and music are out of this world. As a tech enthusiast, I’m thrilled (and a bit overwhelmed) by how fast AI is moving into everyday tools.

Now I’m curious: what are you most excited to try? Will you play with Gemini Live on your phone, use AI to create a birthday card, or maybe beta-test those futuristic glasses? Drop a comment below and let me know your thoughts! And if you enjoyed this I/O recap, be sure to share it and follow along for more tech news; plenty of fun AI surprises are surely coming later this year.
