Dev Diary #1 — Google Agent Development Kit: Lessons I Learned


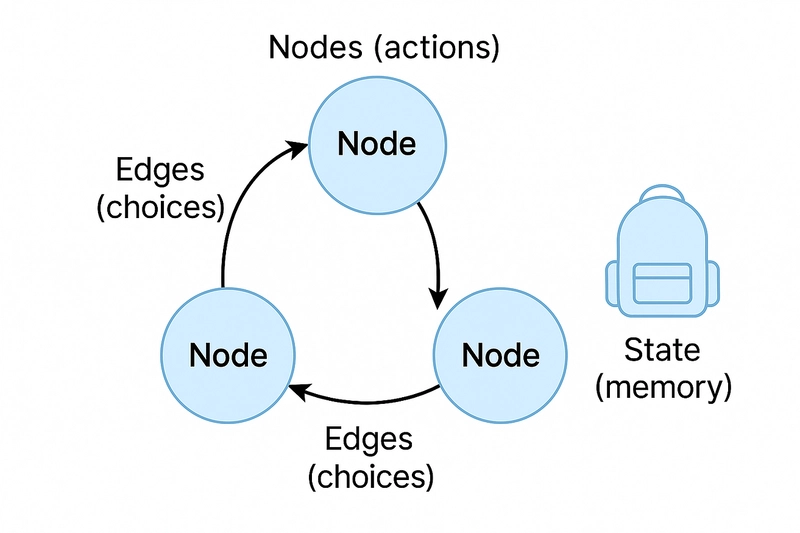

This diary-tutorial hybrid tracks my first months with Google’s Agent Development Kit (ADK) — pure experience, insight sharing, and nothing else. The ADK is Google’s open-source framework for designing, chaining, and shipping autonomous AI agents. If you are unfamiliar with this framework, read my beginner guide to build AI agents quickly.

Lessons I learned

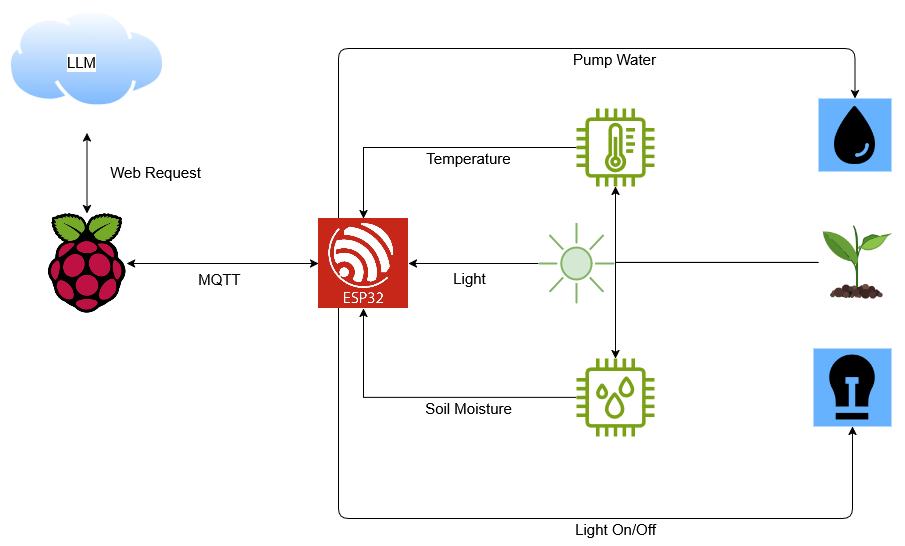

You don’t need a separate AI agent for each task. A single agent can call as many tools as it needs, and the model decides on its own which tool to use and when. Your job is to write a precise prompt and to declare clearly which tools the agent can access and when each one should be used.
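As a minimal sketch of that idea, here is one agent that owns two tools. The second tool (`get_current_utc_time`), the agent name, and the instruction text are made up for illustration; the ADK import is done lazily so the tool functions can be checked without the framework installed.

```python
import random
from datetime import datetime, timezone

GEMINI_MODEL = "gemini-2.5-flash-preview-04-17"  # same model string used later in this post


def get_random_number() -> int:
    """Return a random integer between 0 and 100 (inclusive)."""
    return random.randint(0, 100)


def get_current_utc_time() -> str:
    """Return the current UTC time as an ISO 8601 string (hypothetical second tool)."""
    return datetime.now(timezone.utc).isoformat()


def build_helper_agent():
    """Build one agent with both tools. Calling this requires google-adk to be installed."""
    from google.adk.agents import LlmAgent  # lazy import keeps the tools testable on their own

    return LlmAgent(
        name="helper_agent",  # hypothetical name
        model=GEMINI_MODEL,
        # The instruction says when each tool applies, not just that the tools exist.
        instruction=(
            "Use get_random_number when the user asks for a random number. "
            "Use get_current_utc_time when the user asks for the current time. "
            "Otherwise answer directly without calling a tool."
        ),
        tools=[get_random_number, get_current_utc_time],
    )
```

The point of the sketch is the instruction: each tool gets one sentence describing when to use it, which is the part the model actually relies on when choosing.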

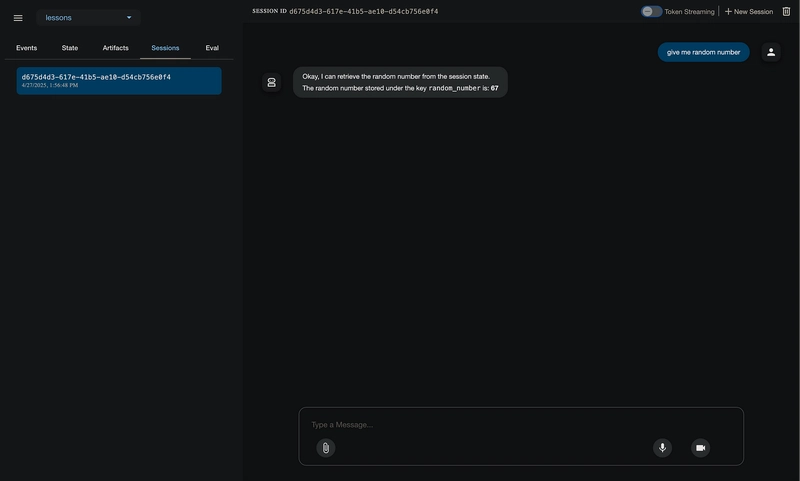

Be careful about what you save in the session state. An agent can save its output to the session state, and other agents in your pipeline can read it. While developing, check in the browser that the agent saved the right information to the context. If the context is garbage, every later step is garbage.

Work with the session state correctly. Setting an output key on an agent saves only that agent’s response to the session state under that key. Writing something like “save A to state B” in the prompt instructions does not save anything. Another problem: if you instruct an agent to use data from the session state and that data is missing, the agent will make something up instead of raising an error, which buries the problem deep in the pipeline.
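If a value has to land in the state under a specific key, one option is to write it from a tool instead of asking for it in the prompt. The sketch below assumes that ADK hands a context object with a dict-like `state` attribute to tools that declare a `tool_context` parameter; in a real project you would annotate that parameter with ADK’s own `ToolContext` type. `save_random_number` and `FakeToolContext` are hypothetical names used only for this example.

```python
import random
from dataclasses import dataclass, field
from typing import Any


def save_random_number(tool_context: Any) -> dict:
    """Generate a number from 0 to 100 and store it in session state."""
    number = random.randint(0, 100)
    # Explicit write: this does not depend on the model deciding to "save" anything.
    tool_context.state["random_number"] = number
    return {"status": "saved", "random_number": number}


@dataclass
class FakeToolContext:
    """Hypothetical stand-in for the framework's tool context, for local checks only."""
    state: dict = field(default_factory=dict)


ctx = FakeToolContext()
result = save_random_number(ctx)
print(ctx.state)  # the key is present because the tool wrote it
```

Because the write happens in ordinary code, you can check it locally with a fake context before a model is ever involved.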

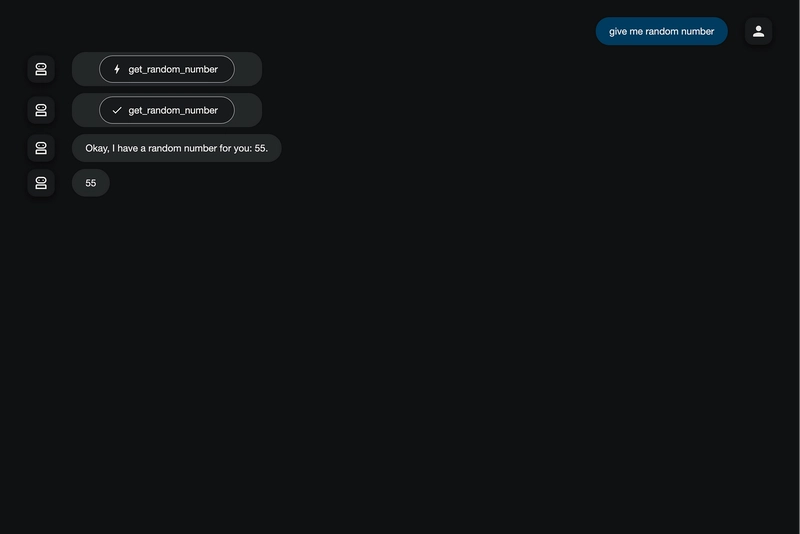

Here is a basic example: one agent uses a tool to generate a number and saves it to the session state, and another agent returns that number from the session state. What could go wrong?

```python
import random

from google.adk.agents import LlmAgent, SequentialAgent

GEMINI_MODEL = "gemini-2.5-flash-preview-04-17"


def get_random_number() -> int:
    """Return a random integer between 0 and 100 (inclusive)."""
    return random.randint(0, 100)


# Agent A calls the tool; output_key stores its response in the session state.
agent_A = LlmAgent(
    name="random_number_provider_agent",
    model=GEMINI_MODEL,
    output_key="random_number",
    instruction="Return random number between 0 and 100 using get_random_number.",
    tools=[get_random_number],
)

# Agent B has no tools and is told to read the number from the session state.
agent_B = LlmAgent(
    name="random_number_consumer_agent",
    instruction="Return random number between 0 and 100 from session state under key random_number.",
    model=GEMINI_MODEL,
)

# Run Agent A first, then Agent B.
root_agent = SequentialAgent(
    name="sequential_agent",
    sub_agents=[agent_A, agent_B],
)
```

As you can see, the pipeline works as expected: the number was generated and returned. Let’s see what happens if we remove the first agent, the one that generated the number. Reminder: Agent B expects random_number in the state. With Agent A removed, there is no such data in the session.

The agent returned a number anyway and pretended the data was in the state, but it wasn’t. This is a fundamental problem: AI agents can hallucinate and lie to us, making our output invalid from the very first steps. You could add validation to the prompt, but you would have to do that every time you use the session state to share data between agents.
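A deterministic alternative to validating in the prompt is to check the state in code before the consuming step runs. This is only a sketch: `require_state_keys` and `MissingStateError` are hypothetical helpers, and the state is modelled as a plain mapping. Where to call it in ADK, for example from an agent callback that runs before the agent, is an assumption about wiring that I am not showing here.

```python
from typing import Any, Iterable, Mapping


class MissingStateError(KeyError):
    """Raised when a step is about to run without the state it depends on."""


def require_state_keys(state: Mapping[str, Any], keys: Iterable[str]) -> None:
    """Fail loudly if any required key is absent or None in the given state."""
    missing = [key for key in keys if key not in state or state[key] is None]
    if missing:
        raise MissingStateError(f"missing session state keys: {missing}")


# Agent A ran: the key exists, so the check passes silently.
require_state_keys({"random_number": 42}, ["random_number"])

# Agent A was removed: the check fails before the model can invent a value.
try:
    require_state_keys({}, ["random_number"])
except MissingStateError as error:
    print("blocked:", error)
```

The check is written once and reused for every key that agents share, so the validation does not have to be repeated in each prompt.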

Writing prompts is challenging. Sometimes I spend hours on simple tasks that I could code in Kotlin in 10 minutes. Agent output can be unpredictable: classic programming tools behave deterministically, and AI agents do not. You have to instruct agents carefully on how to act in each case, including corner cases, just as you would handle them in ordinary code.

You could say that this is not a problem and that prompting is just one more skill you should have. Yes, I know, but there are no automatic tools that validate prompts the way syntax checkers in an IDE validate code. It is plain English text and nothing else. No modern linters, no static analysis, no validation, and no way to cover each prompt with unit tests to make results predictable.
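Until real tooling exists, the deterministic pieces around a prompt can still get small homemade checks. The sketch below is one such check, not an ADK feature: `unmentioned_tools` is a hypothetical helper that reports tools an agent has access to but whose names never appear in its instruction. It says nothing about whether the model will behave, only that the prompt and the tool list have not drifted apart.

```python
import re
from typing import Callable, Sequence


def unmentioned_tools(instruction: str, tools: Sequence[Callable]) -> list[str]:
    """Return the names of tools that the instruction text never mentions."""
    return [
        tool.__name__
        for tool in tools
        # Whole-word match, so "get_random" does not count as "get_random_number".
        if not re.search(rf"\b{re.escape(tool.__name__)}\b", instruction)
    ]


def get_random_number() -> int:
    """Placeholder tool with the same name as the one used in this post."""
    return 4


def get_weather() -> str:
    """Hypothetical tool that the instruction below forgets to mention."""
    return "sunny"


instruction = "Return random number between 0 and 100 using get_random_number."
print(unmentioned_tools(instruction, [get_random_number, get_weather]))
```

A check like this can run in an ordinary unit test next to the agent definition, which at least catches the mistakes that do not need a model to detect.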

In the end

I’m not an AI agent expert. I’m just a software engineer passionate about technology. I find AI agent development exciting, but sometimes I struggle. These are my first steps in this field, and I hope to share more insights with you.

Are you enjoying this guide and want to build your own AI agent? Just leave a comment describing what you want to implement with this technology. I will pick the idea I like most and implement it in a follow-up story.

Follow for the next stories!
