Anthropic Tried to Defend Itself With AI and It Backfired Horribly

The advent of AI has already made a splash in the legal world, to say the least.

In the past few months, we've watched as a tech entrepreneur gave testimony through an AI avatar, trial lawyers filed a massive brief riddled with AI hallucinations, and the MyPillow guy tried to exonerate himself in front of a federal judge with ChatGPT.

By now, it ought to be a well-known fact that AI is an unreliable source of info for just about anything, let alone for something as intricate as a legal filing. One Stanford University study found that AI tools make up information on 58 to 82 percent of legal queries — an astonishing amount, in other words.

That's evidently something AI company Anthropic wasn't aware of, because it was just caught using AI as part of its defense against allegations that the company trained its software on copyrighted music.

Earlier this week, a federal judge in California raged that Anthropic had filed a brief containing a major "hallucination," the term describing AI's knack for making up information that doesn't actually exist.

Per Reuters, the music publishers suing the AI company argued that Anthropic cited a "nonexistent academic article" in a filing in order to lend credibility to its case. The judge demanded answers, and Anthropic's was mind-numbing.

Rather than deny that the AI produced a hallucination, defense attorneys doubled down. They admitted to using Anthropic's own AI chatbot Claude to write their legal filing. Anthropic defense attorney Ivana Dukanovic claims that, while the source Claude cited started off as genuine, its formatting became lost in translation, which is why the article's title and authors led to an article that didn't exist.

As far as Anthropic is concerned, according to The Verge, Claude simply made an "honest citation mistake, and not a fabrication of authority."

"I asked Claude.ai to provide a properly formatted legal citation for that source using the link to the correct article," Dukanovic confessed. "Unfortunately, although providing the correct publication title, publication year, and link to the provided source, the returned citation included an inaccurate title and incorrect authors. Our manual citation check did not catch that error."

Anthropic apologized for the flagrant error, saying it was "an embarrassing and unintentional mistake."

Whatever someone wants to call it, one thing it clearly is not: a great sales pitch for Claude.

It'd be fair to assume that Anthropic, of all companies, would have a better internal process in place for scrutinizing the work of its in-house AI system — especially before it's in the hands of a judge overseeing a landmark copyright case.

As it stands, Claude is joining the ranks of infamous courtroom gaffes committed by the likes of OpenAI's ChatGPT and Google's Gemini, further evidence that no existing AI model has what it takes to go up in front of a judge.

More on AI: Judge Blasts Law Firm for Using ChatGPT to Estimate Legal Costs

The post Anthropic Tried to Defend Itself With AI and It Backfired Horribly appeared first on Futurism.

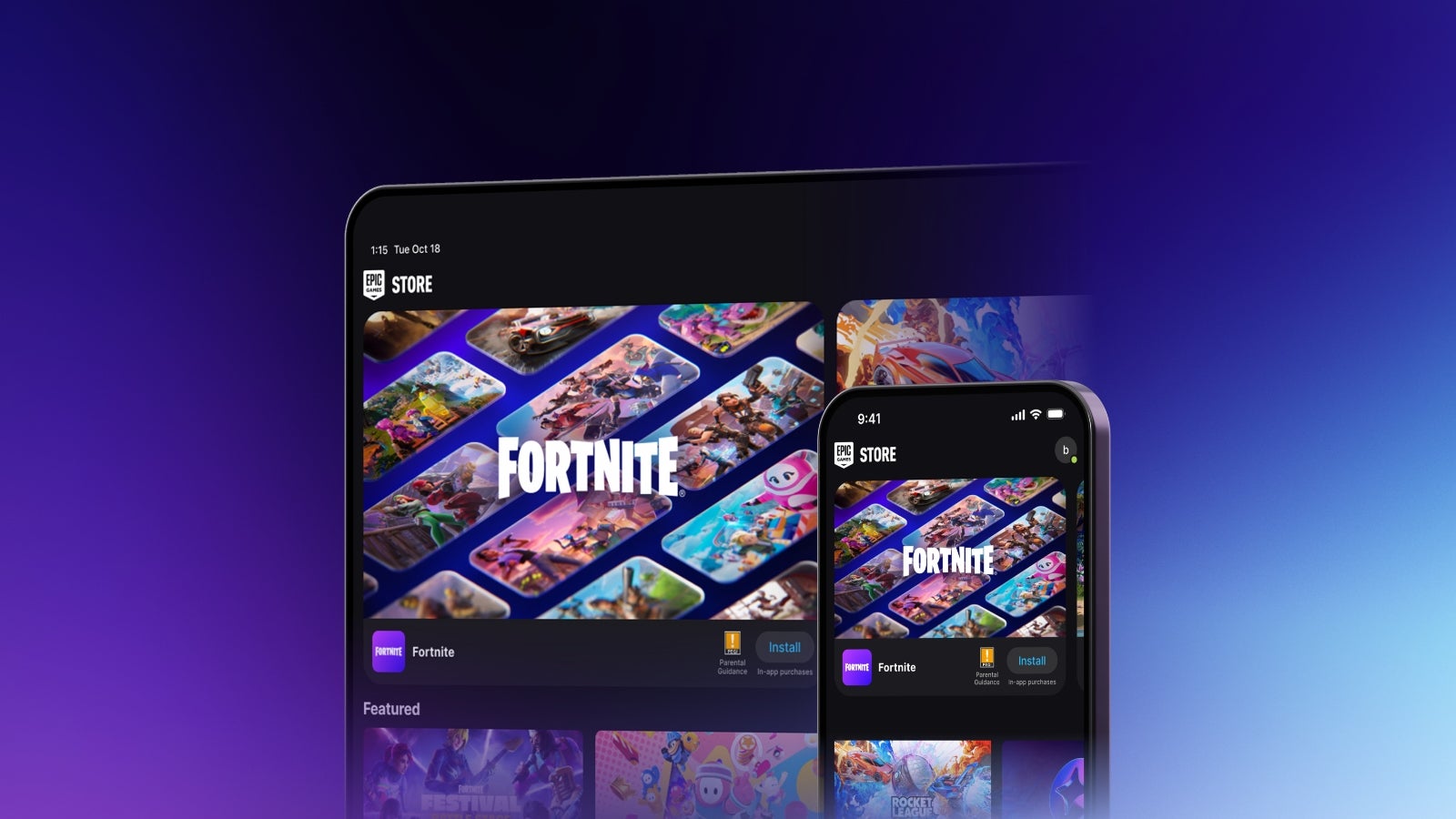

![Epic Games: Fortnite is offline for Apple devices worldwide after app store rejection [updated]](https://helios-i.mashable.com/imagery/articles/00T6DmFkLaAeJiMZlCJ7eUs/hero-image.fill.size_1200x675.v1747407583.jpg)

![[The AI Show Episode 146]: Rise of “AI-First” Companies, AI Job Disruption, GPT-4o Update Gets Rolled Back, How Big Consulting Firms Use AI, and Meta AI App](https://www.marketingaiinstitute.com/hubfs/ep%20146%20cover.png)

.png?width=1920&height=1920&fit=bounds&quality=70&format=jpg&auto=webp#)

![[Virtual Event] Strategic Security for the Modern Enterprise](https://eu-images.contentstack.com/v3/assets/blt6d90778a997de1cd/blt55e4e7e277520090/653a745a0e92cc040a3e9d7e/Dark_Reading_Logo_VirtualEvent_4C.png?width=1280&auto=webp&quality=80&disable=upscale#)

-xl-(1)-xl-xl.jpg)

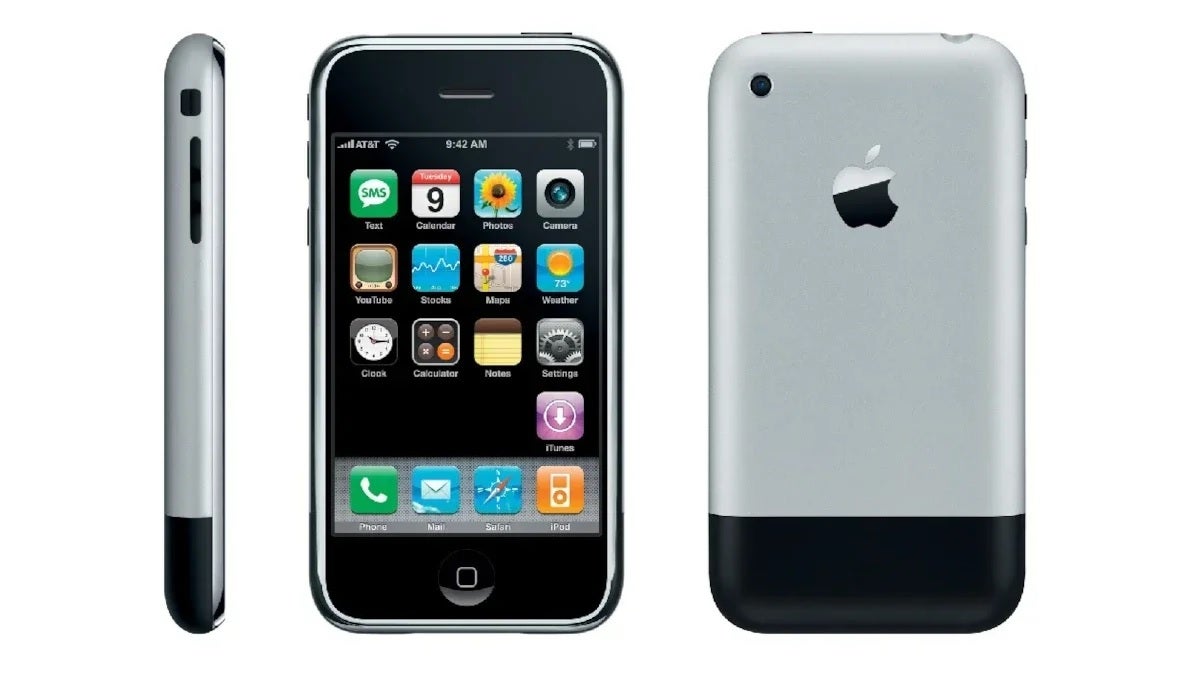

![‘Apple in China’ book argues that the iPhone could be killed overnight [Updated]](https://i0.wp.com/9to5mac.com/wp-content/uploads/sites/6/2025/05/Apple-in-China-review.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![What’s new in Android’s May 2025 Google System Updates [U: 5/16]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2025/01/google-play-services-1.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![iPhone 17 Air Could Get a Boost From TDK's New Silicon Battery Tech [Report]](https://www.iclarified.com/images/news/97344/97344/97344-640.jpg)

![Vision Pro Owners Say They Regret $3,500 Purchase [WSJ]](https://www.iclarified.com/images/news/97347/97347/97347-640.jpg)

![Apple Showcases 'Magnifier on Mac' and 'Music Haptics' Accessibility Features [Video]](https://www.iclarified.com/images/news/97343/97343/97343-640.jpg)

![Sony WH-1000XM6 Unveiled With Smarter Noise Canceling and Studio-Tuned Sound [Video]](https://www.iclarified.com/images/news/97341/97341/97341-640.jpg)

![Apple Stops Signing iPadOS 17.7.7 After Reports of App Login Issues [Updated]](https://images.macrumors.com/t/DoYicdwGvOHw-VKkuNvoxYs3pfo=/1920x/article-new/2023/06/ipados-17.jpg)

![Apple Pay, Apple Card, Wallet and Apple Cash Currently Experiencing Service Issues [Update: Fixed]](https://images.macrumors.com/t/RQPLZ_3_iMyj3evjsWnMLVwPdyA=/1600x/article-new/2023/11/apple-pay-feature-dynamic-island.jpg)