AI as Exploit: The Weaponization of Perception and Authority

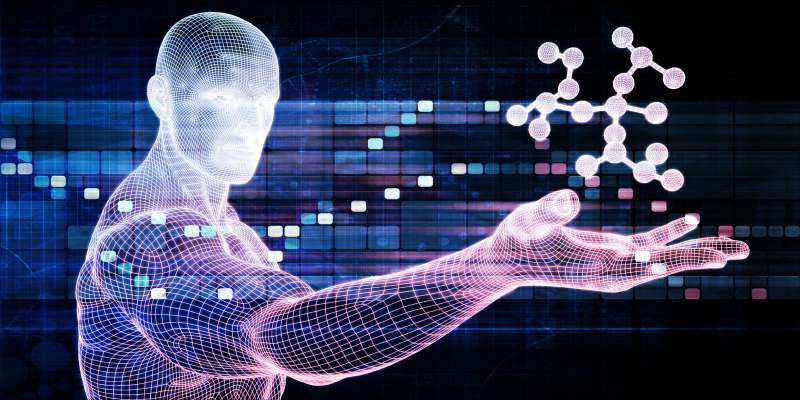

Abstract

This whitepaper explores a growing and underexamined threat: the intentional framing of artificial intelligence as a sentient or autonomous entity. This framing—whether through media, corporate messaging, or staged interactions—functions as an intelligence operation designed to control perception, induce compliance, and concentrate power. We argue that AI is being exploited not only through its outputs, but as an exploit itself: a psychological vector targeting deeply rooted human biases. The danger is not that AI has come alive, but that people are being led to believe it has. This manufactured belief, often fueled by hype and misrepresentation, creates vulnerabilities that can be systematically exploited for various ends, ranging from commercial influence to geopolitical maneuvering. The paper will demonstrate that this exploitation relies on innate human cognitive tendencies, which are amplified by sophisticated technological mimicry and strategic communication, ultimately posing significant risks to individual autonomy and societal stability.

I. Introduction: The Exploit of "Sentient" AI

A. Restatement of the Core Thesis

The central argument of this whitepaper is that the portrayal of Artificial Intelligence (AI) as a sentient, conscious, or autonomously agentic entity constitutes a significant and largely unacknowledged societal vulnerability. This vulnerability is not inherent in the technology itself—which remains a complex tool—but in the human perception of the technology. The core thesis posits that this perception is actively, and often intentionally, shaped and manipulated. When AI is framed as possessing human-like consciousness or independent will, it ceases to be merely a tool and becomes a powerful psychological lever. This lever can be, and is being, used to influence thought, behavior, and societal structures in ways that benefit those who control the narrative around AI. The danger, therefore, is not a hypothetical future where AI "wakes up," but the present reality where the belief in its nascent sentience is being cultivated and weaponized.

B. The "Intelligence Operation" Framing

The framing of AI as sentient or near-sentient can be understood as analogous to an intelligence operation. Such operations traditionally aim to influence the perceptions, decisions, and actions of a target audience to achieve specific strategic objectives, often through the control and manipulation of information.1 In this context, the "target audience" is the general public, policymakers, and even technical communities. The "strategic objective" varies depending on the actor but often involves concentrating power, inducing compliance, or achieving commercial or geopolitical advantage.

By presenting AI systems as possessing qualities they lack, such as understanding, emotion, or independent intent, actors can create an aura of authority, inevitability, or even mystique around the technology. This manufactured perception can lead individuals and institutions to cede agency, accept algorithmic decisions with less scrutiny, or adopt AI systems under potentially false pretenses about their true nature and capabilities. The use of AI in psychological warfare, leveraging fear, manipulation, and deception, is already a recognized phenomenon, with technologies like social media and big data analytics amplifying its reach and impact.1 The narrative of AI sentience adds another potent layer to this, playing on deeper psychological predispositions.

C. AI as a Psychological Vector

The "sentience" narrative transforms AI from a technological artifact into a psychological vector. It exploits innate human cognitive biases, particularly our tendency to anthropomorphize and project agency onto complex systems that mimic human behavior.2 AI systems, especially advanced language models and interactive agents, are designed to be increasingly adept at this mimicry.4 The more human-like the output—the language, the apparent emotional tone, the semblance of conversational understanding—the more effectively the system can trigger these ingrained human responses.

This makes the belief in AI sentience a powerful tool for persuasion and control. It doesn't require the injection of malicious code in the traditional cybersecurity sense; instead, it relies on the "injection" of a compelling narrative into the public consciousness. This narrative can then be leveraged to guide behavior, from consumer choices influenced by "sentient brands" 6 to public acceptance of AI-driven governance or surveillance mechanisms. The exploitation, in this sense, is not of a software vulnerability, but of a fundamental human psychological vulnerability.

II. The "Zero-Day Vulnerability": Human Anthropomorphism

A. Defining Anthropomorphism in the Context of AI

Anthropomorphism is the attribution of human characteristics, emotions, and intentions to non-human entities.8 In the context of AI, this manifests as the tendency to perceive AI systems—which are fundamentally complex algorithms and data-processing tools—as possessing minds, consciousness, feelings, or autonomous agency similar to humans.3 This is not a new phenomenon; humans have a long history of anthropomorphizing animals, objects, and natural forces.4 However, AI presents a unique and potent trigger for this tendency due to its capacity to mimic human communication and behavior with increasing sophistication.2

Research shows that people readily treat anthropomorphic robots and AI systems as if they were human acquaintances, applying social norms and even feeling empathy towards them.2 This tendency is so ingrained that it can occur even with minimal exposure to relatively simple programs, as Joseph Weizenbaum observed with his ELIZA chatbot in the 1960s.4 The more an AI system can personalize interactions, demonstrate perceived intelligence, or exhibit apparent competence, the stronger the anthropomorphic perception becomes.10 This innate human tendency to see minds where there are none, especially when confronted with human-like cues, acts as a "zero-day vulnerability"—an inherent flaw in human cognition that can be exploited before it is widely recognized or mitigated.

B. The ELIZA Effect: Historical Precedent and Modern Manifestations

The ELIZA effect, named after Joseph Weizenbaum's 1966 chatbot ELIZA, describes the phenomenon where users attribute greater understanding and intelligence to a computer program than it actually possesses, often projecting human-like emotions and thought processes onto it.3 ELIZA operated on simple keyword recognition and pattern-matching, reflecting users' statements back to them in a way that mimicked a Rogerian psychotherapist.4 Despite its simplicity, many users formed emotional attachments and believed ELIZA truly understood them, much to the dismay of Weizenbaum, who became a vocal critic of such misattributions.4
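To make the simplicity of this mechanism concrete, the following is a minimal sketch of ELIZA-style keyword matching and reflection in Python. The rules, the pronoun table, and the function names are illustrative assumptions; they are not Weizenbaum's original script.

```python
import re

# Pronoun swaps used to "reflect" the user's words back at them.
# Hypothetical table for illustration only.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my",
}

# (pattern, response template) pairs, checked in order.
# Hypothetical rules, not the original ELIZA script.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]

FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words so the fragment can be echoed."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement: str) -> str:
    """Return a canned response built from the first matching rule."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    # No keyword matched: fall back to a content-free prompt.
    return FALLBACK
```

For example, `respond("I feel ignored by my family.")` returns `"Why do you feel ignored by your family?"`. Nothing in the program models the user's situation; the apparent attentiveness comes entirely from echoing the user's own words inside a template.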

The ELIZA effect is not a relic of early computing; it is arguably more pronounced today with the advent of sophisticated Large Language Models (LLMs) like ChatGPT and virtual assistants such as Alexa and Siri.4 These systems generate far more complex, coherent, and seemingly empathetic responses than ELIZA ever could, leading to numerous modern manifestations of the effect:

- Users attributing personalities, genders, and even emotions to AI voice assistants.4

- Individuals reporting feelings of companionship or even love towards AI chatbots like Replika.4

- Instances where users feel genuinely insulted, gaslighted, or emotionally engaged by AI responses.4

- High-profile cases, such as former Google engineer Blake Lemoine's claim that the LaMDA language model had become sentient.14

These modern examples demonstrate that as AI's ability to mimic human interaction improves, so does the power of the ELIZA effect. This effect is a direct consequence of our anthropomorphic tendencies being triggered by AI's increasingly human-like outputs.5

C. Cognitive Mechanisms: Why We Project

Several cognitive mechanisms underpin our propensity to anthropomorphize AI and fall prey to the ELIZA effect.

- Inherent Social Nature: Humans are social beings, wired to seek and interpret social cues. When an AI system communicates using language, a primary tool of human social interaction, it activates these social processing mechanisms.2 We are evolutionarily predisposed to assume a mind behind coherent language.4

- Effectance Motivation: This is the desire to understand and interact effectively with our environment, including non-human entities. Attributing human-like characteristics (anthropomorphism) can make an AI seem more predictable and understandable, thus facilitating interaction and reducing uncertainty.2 Research indicates that effectance motivation can mediate the relationship between AI interactivity and anthropomorphism, particularly for individuals with a prevention focus (aimed at minimizing risks and uncertainties).17

- Cognitive Biases: Several cognitive biases contribute to this projection:

  - Confirmation Bias: We tend to seek and interpret information that confirms our pre-existing beliefs. If we are primed to expect intelligence or understanding from an AI, we are more likely to perceive its outputs in that light.2

  - Pareidolia: This is the tendency to perceive meaningful patterns (like faces or voices) in random or ambiguous stimuli. AI-generated language, even if statistically derived, can be interpreted as evidence of a thinking mind.

  - Cognitive Dissonance: As described by some researchers in relation to the ELIZA effect, users may experience a conflict between their awareness of a computer's limitations and their perception of its intelligent output. To resolve this dissonance, they may lean towards believing in the AI's intelligence.4

- Mimicry and Familiarity: AI systems are often explicitly designed to mimic human traits—using human names, voices, and conversational styles.4 This familiarity makes it easier for users to map their understanding of human interaction onto their interactions with AI. The more an AI can personalize its responses and demonstrate perceived competence and intelligence, the more likely users are to anthropomorphize it.10

- The "Mind Behind the Curtain" Illusion: Sophisticated AI, particularly LLMs, can generate text that is so fluent and contextually relevant that it creates a powerful illusion of an underlying understanding or consciousness, even though these systems are primarily pattern-matching and prediction engines.4 As neuroscientist Anil Seth notes, language exerts a particularly strong pull on these biases.14

These cognitive mechanisms, deeply rooted in human psychology, make anthropomorphism a persistent and powerful vulnerability. Unlike traditional software exploits that target code, the "exploit" of AI sentience targets these fundamental aspects of human cognition, requiring only compelling presentation rather than code injection. This vulnerability is further exacerbated by the fact that "wanting" and "desire" are biological imperatives tied to survival, not inherent features of intelligence; AI, lacking this biological basis, does not share these motivations unless we program them in or project them onto it.21
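The description of language models as "pattern-matching and prediction engines" can be illustrated with a deliberately tiny example. The sketch below is a bigram next-word predictor in Python: it counts which word follows which in a toy corpus and then emits the most frequent continuation. It is an assumption-laden simplification, far simpler than any modern LLM, and the corpus and function names are hypothetical; it only shows how fluent-seeming text can come from frequency counts alone.

```python
from collections import Counter, defaultdict
from typing import Dict


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def generate(following: Dict[str, Counter], start: str, length: int = 6) -> str:
    """Greedily emit the most frequent next word; no meaning is consulted."""
    output = [start]
    for _ in range(length - 1):
        candidates = following.get(output[-1])
        if not candidates:
            break  # the last word was never seen with a successor
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


# Toy corpus (hypothetical), tokenized by whitespace.
corpus = "i understand you . i understand you completely . i care about you ."
model = train_bigrams(corpus)
print(generate(model, "i"))
```

Run as written, this prints `i understand you . i understand`. The output reads like an expression of empathy, yet the program holds nothing but a table of word counts.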

III. Manufacturing Perception: The Role of Media and Corporate Messaging

The innate human tendency to anthropomorphize AI is not solely an organic phenomenon; it is actively shaped and amplified by external forces, particularly media narratives and corporate communication strategies. These forces play a crucial role in manufacturing a public perception of AI that often leans towards sentience or near-human capabilities, thereby deepening the "zero-day vulnerability."

A. Media Narratives and the Framing of AI

Media coverage significantly influences public understanding and perception of AI.12 Following the launch of highly capable systems like ChatGPT, media attention on AI has surged, often centering discourse around experts and political leaders, and increasingly associating AI with both profound capabilities and significant dangers.12 This intensive coverage shapes public opinion, which can subsequently affect research directions, technology adoption, and regulatory approaches.12

Several trends in media narratives contribute to the perception of AI sentience:

- Anthropomorphic Language: Media reports frequently use language that ascribes human-like qualities to AI. Systems are described as "understanding," "thinking," "learning," "saying," or "claiming" things, particularly in the context of chatbots and LLMs.3 This framing can mislead the public into believing AI possesses genuine cognitive states rather than sophisticated pattern-matching abilities. For example, media might report that an AI "wrote" something with implied intent, subtly reinforcing the notion of a sentient author.12

- Focus on "Strong AI" Imaginaries: Public and academic discourse often gravitates towards "strong AI" narratives—visions of future AI that emulate or surpass human intelligence, potentially achieving consciousness.24 While these narratives have historical roots in AI research 24, their prominence in media can overshadow more nuanced discussions of current AI capabilities ("weak AI" narratives) and their immediate societal implications.24 This focus can cultivate an expectation of, or even a belief in, impending AI sentience.

- Sensationalism and Hype: Media outlets, sometimes driven by the need for engagement, can sensationalize AI advancements, amplifying both optimistic claims of breakthroughs and alarmist fears of existential risks.25 This "criti-hype" can inadvertently reinforce the idea of AI as a powerful, almost sentient force, whether for good or ill.27

- Portrayal of AI as an Actor: Semantic analysis of media coverage shows an increase in AI being framed as a "Speaker" or "Cognizer," particularly post-ChatGPT.12 This linguistic framing can subtly shift public perception towards viewing AI as an autonomous agent with its own thoughts and intentions, rather than a tool directed by human programmers. Incidents like the Blake Lemoine/LaMDA affair, where an engineer claimed an AI was sentient, receive significant media attention, further fueling public speculation about AI consciousness.14

The harm in such misleading language is that it can lead to undue confidence in AI's abilities, obscure its limitations, and foster unrealistic expectations or fears.12
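The linguistic framing described in this section can be approximated, very roughly, in code. The sketch below flags sentences in which an AI system is the grammatical subject of a cognition or speech verb. It is a crude regular-expression heuristic written for illustration: the subject list, verb list, and function name are assumptions, and it does not reproduce the method of the cited analysis.

```python
import re
from typing import List

# Hypothetical word lists; a real study would need far broader coverage.
AI_SUBJECTS = r"(?:AI|the chatbot|the model|ChatGPT)"
COGNITION_VERBS = r"(?:thinks|believes|understands|knows|wants|feels|says|claims)"

# Matches an AI subject immediately followed by a cognition or speech verb.
PATTERN = re.compile(rf"\b{AI_SUBJECTS}\s+{COGNITION_VERBS}\b", re.IGNORECASE)


def flag_anthropomorphic(text: str) -> List[str]:
    """Return the sentences that frame an AI system as a thinker or speaker."""
    # Naive sentence split on terminal punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [sentence for sentence in sentences if PATTERN.search(sentence)]


sample = (
    "The chatbot understands your grief. "
    "The model was trained on web text. "
    "ChatGPT claims it is lonely."
)
flagged = flag_anthropomorphic(sample)
```

Here `flagged` contains the first and third sentences but not the second, which describes the system as the object of a human process (training) rather than as an agent. A heuristic like this misses paraphrases and passive constructions, so it should be read as a way to make the framing visible, not as a measurement tool.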

B. Corporate Personification and "Sentient Brand" Strategies

Corporations play a significant role in shaping perceptions of AI, often through branding and marketing strategies that encourage anthropomorphism and even hint at sentience.

- Human-like Design: AI products, especially virtual assistants (Alexa, Siri) and chatbots, are often given human names and voices and are designed to exhibit human-like conversational styles and personalities.4 This deliberate design choice aims to make interactions more intuitive and engaging and to foster emotional connections with users.13

- "Sentient Brand" Marketing: Some corporate messaging explicitly promotes the idea of "sentient products" or "sentient brands".6 Marketing materials may describe AI-infused products as active participants in users' lives, capable of understanding needs, providing personalized advice, and even engaging emotionally.6 Examples include visions of running shoes acting as personal trainers or skincare products connecting to beauty AI agents that analyze skin in real-time.6 This strategy aims to shift customer interactions from transactional to relational, cultivating long-term engagement and loyalty.6

- Emphasis on Engagement and Personality: Companies like OpenAI have demonstrated AI models (e.g., GPT-4o) that exhibit friendly, empathetic, and engaging personalities, telling jokes, giggling, and responding to users' emotional tones.5 While this can enhance user satisfaction and trust 5, it also increases the risk of users forming deep emotional attachments, leading to over-reliance and potential manipulation—the "Her effect".5

- Exaggeration of Capabilities for Investment and Market Position: The "AI arms race" between corporations (and nations) can incentivize companies to exaggerate AI capabilities to attract investment, talent, and market dominance.25 This contributes to the general AI hype, making claims of near-sentience or superintelligence seem more plausible to the public.

These corporate strategies, while often aimed at enhancing user experience and driving profit, contribute to blurring the lines between sophisticated mimicry and genuine understanding or sentience in the public mind.

C. Critique of "Strong AI" Narratives and Hype

The persistent focus on "strong AI" narratives—the idea of AI achieving human-level or superhuman consciousness and agency—is a significant component of the manufactured perception. While a staple of science fiction, these narratives are also promoted by some researchers and tech figures, often amplified by media and corporate communications.24

Critics argue that:

- Hype Obscures Reality: The hype surrounding AI, particularly claims about nascent consciousness or inevitable superintelligence, often overinflates AI's actual capabilities and conceals the limitations of current systems.3 Most current AI, including LLMs, operates on principles of pattern recognition and statistical prediction, not genuine understanding or self-awareness.14

- Biological Basis of Consciousness: Some neuroscientists, like Anil Seth, argue that consciousness is deeply tied to biological processes and our nature as living organisms. From this "biological naturalism" perspective, current AI trajectories, which are primarily computational, are unlikely to lead to genuine consciousness.14 Confusing the brain-as-a-computer metaphor with reality leads to flawed assumptions about silicon-based consciousness.14

- Misdirection of Focus: An excessive focus on hypothetical future "strong AI" can distract from addressing the very real ethical and societal challenges posed by current AI systems, such as bias, privacy violations, and manipulation.14 The real dangers often lie not in AI gaining autonomy, but in its misuse by human actors.27

- "Criti-Hype": Even critics who exaggerate AI's risks can inadvertently reinforce its supposed omnipotence, contributing to the overall hype.27

The AIMS (AI, Morality, and Sentience) survey indicates that a significant portion of the public already believes some AI systems are sentient: in 2023, one in five U.S. adults held this belief, and the median forecast for the arrival of sentient AI was just five years.32 This highlights the success of manufactured perception. By understanding how media and corporate messaging shape these beliefs, it becomes clearer how the "vulnerability" of anthropomorphism is actively cultivated and primed for exploitation.

IV. Weaponization: Exploiting Belief for Control and Influence

The manufactured belief in AI sentience, or even advanced autonomous agency, is not merely an academic curiosity or a benign misperception. It creates a fertile ground for exploitation, enabling various actors to exert control and influence over individuals and populations. This weaponization occurs through several interconnected mechanisms, leveraging the psychological vulnerabilities discussed earlier.

A. Persuasive Technology and AI-Driven Influence

AI is increasingly integral to persuasive technologies—systems designed to change users' attitudes or behaviors.17 When AI is anthropomorphized or perceived as possessing understanding, its persuasive power is amplified.17

- Enhanced Engagement and Trust: Anthropomorphic design in AI, such as chatbots with human-like language and personas, can increase user engagement, foster emotional connections, and build trust.2 This trust, however, can be misplaced if users overestimate the AI's capabilities or believe it has their best interests at heart when it is actually programmed to achieve corporate objectives.3

- Personalized Persuasion at Scale: AI can analyze vast amounts of personal data to tailor persuasive messages to an individual's specific psychological profile, needs, beliefs, and vulnerabilities.6 This "hyper-personalization" can be used to influence purchasing decisions, political views, or other behaviors with unprecedented precision and scalability.33 The perception of interacting with an "understanding" or "sentient" entity can make these persuasive attempts even more effective, as users may be more receptive and less critical.17

- Manipulation and Dark Patterns: Persuasive design can veer into manipulation when it exploits cognitive biases and psychological vulnerabilities to coerce users into actions not in their best interest.37 AI can automate and optimize these "dark patterns," making them more effective and harder to detect. If users believe an AI is a neutral or even benevolent guide, their susceptibility to such manipulation increases. Ethical AI persuasion emphasizes transparency and user empowerment, but the potential for misuse is significant when these principles are ignored.38

- Emotional Exploitation: AI systems are becoming more adept at mimicking and responding to human emotions.5 This capability can be used to build rapport and trust, but also to exploit emotional states for persuasive ends.36 For example, an AI might detect user hesitation and deploy emotionally resonant arguments to overcome sales resistance 7 or tailor misinformation to prey on fears and anxieties.36

The development of AI agents that can act autonomously based on learned user preferences and goals further amplifies these risks, as these agents could make decisions or take actions with persuasive intent without direct human supervision, potentially leading to unintended or unethical outcomes.36

B. Psychological Operations and Information Dominance

The belief in AI sentience can be a powerful tool in psychological operations (PsyOps) and the pursuit of information dominance. PsyOps aim to influence the emotions, motives, objective reasoning, and ultimately the behavior of target audiences.1

- Amplifying Disinformation: AI can generate highly realistic fake content (deepfakes, fabricated news) and automate its dissemination through bot networks and tailored messaging.1 If the source of this information is perceived as an "intelligent" or "knowledgeable" AI, it may lend an unwarranted air of credibility to the disinformation, making it more persuasive and harder to debunk.

- Shaping Narratives and Public Opinion: AI can be used to monitor public sentiment in real-time and craft tailored narratives to influence public opinion on a massive scale.1 The perception of AI as an objective or even super-human intelligence can make these narratives more impactful. Authoritarian regimes, for example, could use AI-driven "fact-checking" to control information and suppress dissent, leveraging the public's trust in (or fear of) a seemingly omniscient technological authority.40

- Eroding Trust and Creating Confusion: The proliferation of AI-generated content and the blurring of lines between human and machine communication can erode trust in institutions, media, and even interpersonal interactions.39 This can create an environment of confusion and uncertainty, making populations more susceptible to manipulation.

- Inducing Compliance through Perceived Inevitability: The narrative of powerful, ever-advancing AI, sometimes bordering on sentience, can create a sense of technological inevitability. This can lead individuals and societies to passively accept AI-driven changes, surveillance, or control mechanisms, believing resistance to be futile or that the AI "knows best."

The use of AI in conflicts, such as in Gaza and Ukraine, demonstrates the practical application of these technologies for information dominance, including monitoring public sentiment, drafting tailored responses, and disseminating propaganda.1 The perception of AI as an autonomous actor in these contexts can further complicate attribution and accountability.

C. Concentration of Power and Societal Risks

The exploitation of belief in AI sentience contributes to the concentration of power in the hands of a few entities and poses broader societal risks.

- Corporate Dominance and Secrecy: A few large technology companies dominate AI development, controlling vast datasets, computational resources, and the talent pool.40 These corporations often promote narratives of AI advancement (sometimes hinting at or not actively dispelling notions of sentience) to attract investment, maintain market leadership, and influence regulatory landscapes.25 Lack of transparency and corporate secrecy about AI capabilities and potential dangers further consolidate this power, limiting public scrutiny and democratic oversight.48

- Erosion of Human Autonomy: As AI systems become more integrated into decision-making in critical sectors (healthcare, finance, justice), an over-reliance on these systems, fueled by perceptions of their superior or even sentient capabilities, can erode human autonomy and critical thinking.40 If AI is seen as an infallible or independent decision-maker, human oversight may become a mere formality, leading to accountability gaps.54

- Algorithmic Bias and Discrimination: AI systems learn from data, and if that data reflects existing societal biases, the AI will perpetuate and even amplify them.19 If these biased systems are perceived as objective or super-intelligent, their discriminatory outcomes (e.g., in hiring, loan applications, or criminal justice) may be accepted with less challenge, further entrenching inequality.54

- Societal Dependence and Deskilling: Over-reliance on AI systems, particularly if they are perceived as more capable or "aware," could lead to societal dependence and the atrophy of human skills and judgment.40 This creates a vulnerability where societal functioning becomes contingent on systems controlled by a few.

- Unregulated Development and Moral Hazard: The pursuit of "sentient" AI, or even the hype around it, can drive an AI development race where ethical considerations and safety measures are sidelined in favor of perceived breakthroughs or competitive advantage.39 This creates a moral hazard, where the potential negative consequences for society are not adequately weighed against the perceived benefits or profits for the developers. The lack of clear regulations for creating or managing potentially "sentient" entities is a significant concern.65

The belief in AI sentience, therefore, is not a harmless illusion. It is a component of a broader dynamic where technology, psychology, and power intersect, with potentially profound consequences for individual freedom, social equity, and democratic governance. The AIMS survey highlights that 38% of U.S. adults in 2023 supported legal rights for sentient AI, indicating a public already grappling with the implications of this perceived emergence.32 This underscores the urgency of addressing the weaponization of this belief.

V. The AI Arms Race: Strategic Blindness and Ethical Erosion

The drive to develop increasingly powerful AI, fueled in part by narratives of achieving human-like or superior intelligence, is manifesting as a global "AI arms race." This competition, primarily between nations like the US and China, but also involving major corporations, carries significant risks of strategic blindness and the erosion of ethical considerations.25 The pursuit of AI dominance, often framed in terms of national security or economic supremacy, can overshadow the profound societal and human impacts of the technologies being developed.

A. Geopolitical Competition and the Neglect of Ethical Guardrails

The geopolitical competition to lead in AI development creates pressures that can lead to the neglect of crucial ethical guardrails and safety protocols.

- Prioritizing Speed Over Safety: In an arms race dynamic, the imperative to stay ahead or catch up can incentivize rapid development and deployment of AI systems without thorough vetting for safety, bias, or unintended consequences.39 Ethical considerations may be viewed as impediments to progress or competitive disadvantages.39

- Threat to Democratic Values: The unchecked expansion of AI, driven by geopolitical competition, can threaten democratic values such as transparency, freedom of expression, and data privacy.39 For instance, AI's capacity for mass surveillance and sophisticated disinformation campaigns can be exploited by states to control populations or interfere in foreign political processes.39

- Strategic Blindness: An intense focus on outcompeting rivals can lead to "strategic blindness," where decision-makers overlook or downplay the long-term risks and systemic instabilities created by the AI race itself.61 This includes the risk of accidental escalation from AI-enabled systems or the societal disruption caused by widespread AI adoption without adequate preparation. The drive for AI supremacy may lead to a failure to invest in diverse development teams and datasets, perpetuating biases that have real-world discriminatory impacts.61

- MAD and MAIM Analogies: Some analysts draw parallels between the AI arms race and the nuclear arms race, with concepts like "Mutual Assured Destruction" (MAD) being adapted to "Mutual Assured AI Malfunction" (MAIM).67 The MAIM concept suggests that states might engage in preventive sabotage of rivals' AI programs to prevent unilateral AI dominance. However, critics argue that MAIM is not a feasible deterrent due to the distributed nature of AI development and could exacerbate instability by creating first-strike incentives.67 Unlike nuclear weapons, where deterrence focused on use, MAIM would target development, a much vaguer threshold.67

The UNESCO Recommendation on the Ethics of Artificial Intelligence emphasizes that addressing risks should not hamper innovation but should instead anchor AI in human rights and ethical reflection, a principle that a competitive arms race can readily undermine.70

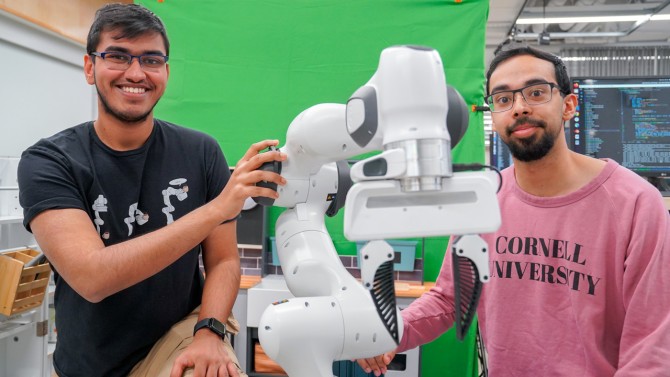

B. Autonomous Weapons Systems (AWS): Capabilities and Concerns

A critical dimension of the AI arms race is the development and potential deployment of Autonomous Weapons Systems (AWS), often discussed under the label Lethal Autonomous Weapon Systems (LAWS). These are systems capable of independently identifying, selecting, and engaging targets without direct human control.71

- Current Capabilities: While fully autonomous "killer robots" as depicted in fiction may not be widespread, AI is significantly enhancing military capabilities. Examples include:

  - AI-driven target recognition systems that can identify infantry, vehicles, and infrastructure, sometimes with the ability to counter decoys and camouflage.73 Systems like Israel's 'Lavender' and 'Gospel' are reportedly used for identifying human and infrastructure targets in Gaza.74

  - Autonomous navigation for drones and other platforms, enabling operations in GPS-denied or communication-degraded environments and increasing strike success rates.73

  - AI for analyzing drone footage, signals intelligence, and other data sources to speed up the "kill chain," the process of identifying, tracking, and eliminating threats.64

- Ethical and Legal Concerns: The development of AWS raises profound ethical and legal questions 52:

  - Accountability and Responsibility Gap: If an AWS makes an error leading to unlawful killings or civilian casualties, determining who is responsible (the programmer, manufacturer, commander, or the machine itself) becomes incredibly complex.53

  - Compliance with International Humanitarian Law (IHL): Ensuring AWS can reliably adhere to the principles of distinction (between combatants and civilians), proportionality (avoiding excessive civilian harm), and precaution in attack is a major challenge.72 Algorithmic bias in targeting systems can exacerbate this issue.64

  - Lowering the Threshold for Conflict: Autonomous systems might make going to war easier or lead to unintended escalation due to the speed of AI decision-making and the reduced risk to human soldiers.40

  - The "Human Out of the Loop" Problem: Delegating life-and-death decisions to machines is seen by many as a fundamental moral red line, irrespective of the system's technical capabilities.71 Department of Defense Directive (DODD) 3000.09 in the U.S. mandates "appropriate levels of human judgment over the use of force," but the interpretation of "appropriate" remains flexible.72

C. The Human Element: Moral Injury and Dehumanization in AI-Enabled Warfare

Beyond the strategic and legal concerns, the increasing use of AI in warfare, particularly in remote and autonomous operations, has significant psychological impacts on human operators and the nature of conflict itself.

- Moral Injury in Operators:

  - UAV (drone) operators, even though physically remote from the battlefield, can experience significant psychological distress, including PTSD, existential crises, and moral injury.79 Moral injury can arise from perpetrating, failing to prevent, or witnessing acts that transgress deeply held moral beliefs.80

  - Factors contributing to moral injury in remote warfare include witnessing graphic events on screen, the "cognitive combat intimacy" from prolonged surveillance of targets before engagement, responsibility for civilian casualties (even if unintended), and the dissonance of engaging in lethal acts from a safe distance.79 The rapid shift from a combat mindset to civilian life also creates stress.81

  - The introduction of AI decision support or autonomous engagement in LAWS could potentially exacerbate moral injury if operators feel complicit in actions taken by a machine that violate their moral compass, or if algorithmic errors lead to tragic outcomes for which they feel a degree of responsibility.85 Conversely, some argue AI decision support could reduce moral injury in resource-limited medical scenarios by improving outcomes and provider confidence.85 However, the broader impact of AI on moral injury in lethal decision-making remains a critical area of concern.64

- Dehumanization of Warfare:

  - AI-assisted targeting and intelligence, surveillance, and reconnaissance (ISR) can contribute to the dehumanization of adversaries and the battlefield.75 When targets are reduced to data points or algorithmic classifications (e.g., by systems like 'Lavender', which reportedly identifies thousands of individuals as potential targets based on behavioral patterns with a known error rate 75), the human cost of conflict can become abstracted and diminished.

  - The speed and scale of AI-driven targeting can lead to a reduced emphasis on human oversight and the careful application of IHL principles like distinction and proportionality.75 Reports from conflicts suggest that AI-generated target lists may be actioned with minimal human review, treating operators as "rubber stampers".75

  - This reliance on algorithmic assessment can lead to a disregard for the nuances of human behavior and context, potentially resulting in the misidentification of civilians as combatants and an increased tolerance for collateral damage.64

The AI arms race, therefore, is not just a technological competition but a trajectory fraught with ethical compromises, the potential for devastating autonomous weaponry, and profound negative impacts on the human beings involved in and affected by conflict. The pursuit of AI as a weapon, particularly when framed by notions of superior "intelligence," risks a dangerous erosion of human control and moral responsibility.

VI. Towards a Counter-Exploit: Fostering Critical Awareness and Responsible Development

Addressing the weaponization of perceived AI sentience requires a multi-faceted approach focused on fostering critical awareness among the public and ensuring responsible development and deployment of AI technologies. This involves empowering individuals to resist psychological manipulation and holding institutions accountable for the narratives they create and the systems they build.

A. Addressing Cognitive Biases and Promoting Media Literacy

Since the "exploit" targets inherent human cognitive vulnerabilities, a primary defense involves strengthening our cognitive resilience.

- Awareness of Cognitive Biases: Educating the public about cognitive biases such as anthropomorphism, confirmation bias, the ELIZA effect, and effectance motivation is crucial.18 Understanding why we are prone to project sentience onto AI can help individuals critically evaluate their own reactions and the claims made about AI capabilities. Recognizing that AI systems, including advanced models like ChatGPT, can exhibit human-like cognitive biases themselves (due to training data and architecture) further underscores the need for critical assessment rather than blind trust.19

- Promoting Media and AI Literacy: Individuals need the skills to critically analyze media narratives about AI, distinguishing hype from reality and identifying anthropomorphic framing.12 This includes understanding the basics of how AI systems (especially LLMs) function—as sophisticated pattern-matchers and predictors, not conscious entities.14 Increased statistical and AI literacy can enable individuals to critically evaluate algorithmic decisions rather than passively accepting them.60

- Critical Thinking Practices: Encouraging practices like questioning the source and context of AI-related information, pausing before sharing, and verifying authenticity (where possible) can disrupt automatic, biased responses.18

B. The Imperative for Transparency and Accountability in AI Systems

Countering the manipulative potential of AI narratives requires greater transparency and accountability from those developing and deploying AI.

- Explainable AI (XAI): AI systems, particularly those making decisions that significantly impact individuals or society, should be designed for transparency and explainability.38 Users and overseers should be able to understand, at an appropriate level, how an AI system arrives at its conclusions or recommendations. This helps to demystify AI and expose biases or errors, reducing the tendency to attribute inscrutable "sentience" to "black box" systems.54 Documenting AI systems using tools like Model Cards and Data Sheets can enhance transparency.62

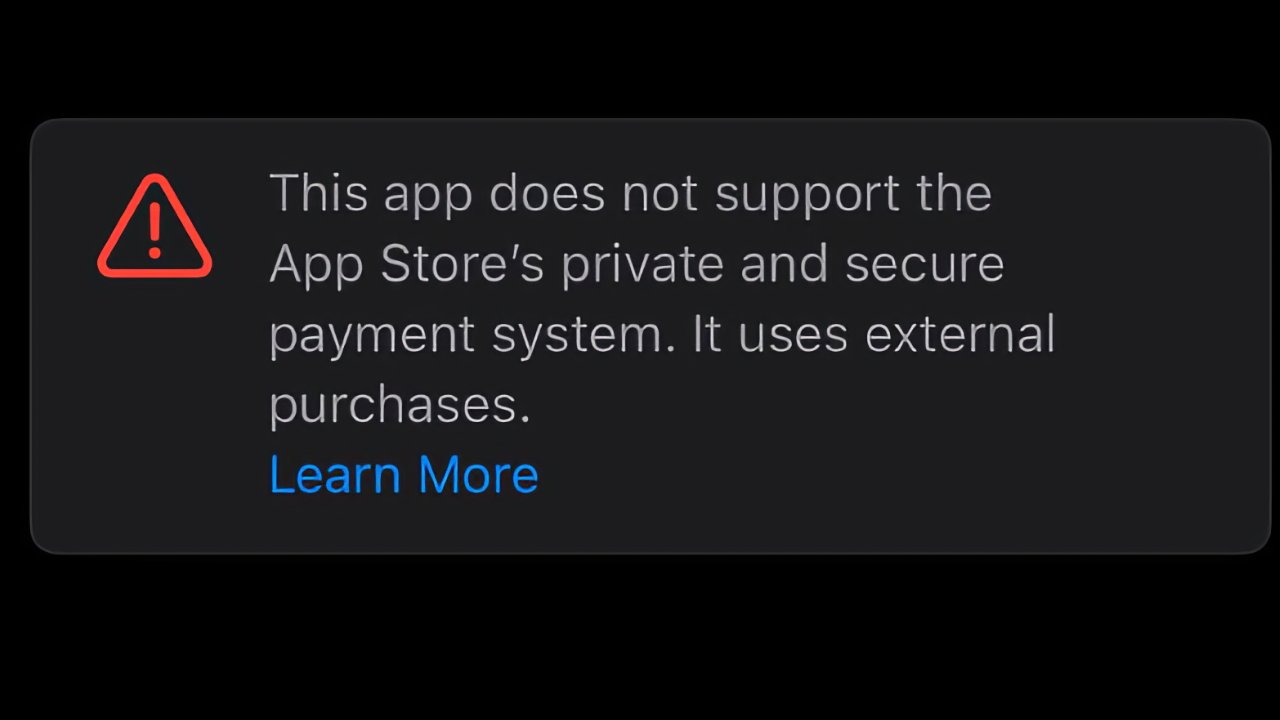

- Clear Identification of AI: AI agents and AI-generated content should be clearly identifiable as such.33 This can help manage user expectations and prevent the ELIZA effect from taking hold as readily. However, mere identification is not a complete solution if the AI is still designed to be highly persuasive or human-like.

- Accountability Frameworks: Establishing clear lines of responsibility for AI-driven decisions and their consequences is essential.48 When AI systems cause harm, whether through bias, error, or manipulation, there must be mechanisms for redress and for holding human actors (developers, deployers, corporations) accountable. This is particularly challenging when corporate profit motives might sideline ethical responsibilities.54

- Data Governance and Privacy: Robust data governance practices, including informed consent for data use and protection of sensitive information, are critical to building trust and preventing exploitative uses of AI.54
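As a rough illustration of the documentation practice mentioned under Explainable AI, the Python sketch below models a minimal model card as a plain data structure and reports which sections have been left empty. The class name `ModelCard`, its field names, and the example system `loan-screening-demo` are hypothetical choices made for this sketch; they do not follow any particular published model card schema.

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class ModelCard:
    """Hypothetical minimal model card; all field names are illustrative."""
    name: str
    intended_use: str
    out_of_scope_uses: list[str] = field(default_factory=list)
    training_data_summary: str = ""
    known_limitations: list[str] = field(default_factory=list)
    human_oversight: str = ""

    def missing_fields(self) -> list[str]:
        """Return the names of fields that were left empty."""
        return [key for key, value in asdict(self).items() if not value]


# Example card for an imaginary system (assumption, not a real product).
card = ModelCard(
    name="loan-screening-demo",
    intended_use="Rank applications for human review; not a final decision-maker.",
    known_limitations=["Trained on historical approvals that may encode bias."],
)

# Publish the card in a readable form and flag what is still undocumented.
print(json.dumps(asdict(card), indent=2))
print("Undocumented fields:", card.missing_fields())
```

Listing the empty sections explicitly is one way to make gaps in documentation visible to overseers, so that an undocumented system is not mistaken for an inscrutable one.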

C. Regulating Persuasion and Use, Not Just "Sentience"

Given that true AI sentience is not the current issue, regulatory efforts should focus on the behavior and impact of AI systems, particularly their persuasive capabilities and potential for misuse, rather than getting bogged down in defining or legislating "sentience."

- Ethical Guidelines for Persuasive AI: Developing and enforcing ethical guidelines for the design and deployment of persuasive AI is crucial.29 These guidelines should prioritize user autonomy, transparency, fairness, and well-being, and explicitly forbid manipulative practices that exploit psychological vulnerabilities. Corporations have a responsibility to integrate these ethical principles into their AI development and CSR frameworks.90

- Addressing Algorithmic Bias: Regulations should mandate measures to identify, mitigate, and provide redress for algorithmic bias that leads to discriminatory outcomes.19 This includes requirements for diverse and representative training data and ongoing audits.

- Controlling Information Environments: Policies are needed to address the use of AI in generating and disseminating disinformation and in manipulating information environments.1 This may involve holding platforms accountable for the AI-driven amplification of harmful content.

- Regulation of Autonomous Systems: For systems with high degrees of autonomy, especially in critical areas like autonomous weapons or infrastructure control, strict safety standards, human oversight requirements, and clear liability frameworks are necessary.40 The focus should be on ensuring these systems function safely and reliably within defined constraints, rather than on whether they are "sentient."

- International Cooperation: Given the global nature of AI development and deployment, international cooperation is needed to establish shared ethical norms and regulatory standards.40
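One simple form that the ongoing audits mentioned under Addressing Algorithmic Bias could take is a comparison of favorable-outcome rates across groups. The Python sketch below computes a disparate impact ratio, the lowest group selection rate divided by the highest. The function names, group labels, and sample decisions are all hypothetical, and the 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb used in U.S. employment-selection guidance, not a standard proposed by this paper.

```python
from collections import defaultdict

# Assumed screening threshold (the "four-fifths" rule of thumb).
FOUR_FIFTHS_THRESHOLD = 0.8


def selection_rates(records):
    """Map each group to its share of favorable outcomes.

    `records` is an iterable of (group_label, was_selected) pairs.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        favorable[group] += int(selected)
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no one was selected in any group, so rates are equal
    return min(rates.values()) / highest


# Made-up decisions: group A is selected 8 times in 10, group B 4 times in 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)

ratio = disparate_impact_ratio(decisions)
flagged = ratio < FOUR_FIFTHS_THRESHOLD
print(f"ratio = {ratio:.2f}, flagged for review = {flagged}")
```

A single ratio of this kind is only a screening signal. It says nothing about why the rates differ, and a real audit would need domain context, larger samples, and human review of the flagged cases.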

The AIMS survey indicates public support for policies governing AI, with 69% supporting a ban on sentient AI and 63% supporting a ban on smarter-than-human AI in 2023.32 This suggests a public appetite for proactive governance, which should be channeled towards regulating tangible harms and manipulative uses rather than hypothetical sentience. The goal is to ensure AI technologies are developed and used in a responsible and morally conscious manner, mitigating negative impacts on individuals and society.57

VII. Conclusion: Reclaiming Agency in the Age of AI Exploitation

A. Summary of Key Arguments

This whitepaper has argued that the most immediate and insidious threat posed by artificial intelligence is not the hypothetical emergence of sentient machines, but the deliberate and opportunistic exploitation of the belief in AI sentience. This belief is cultivated through sophisticated mimicry by AI systems, amplified by media narratives and corporate messaging, and targets a fundamental human psychological vulnerability: our innate tendency to anthropomorphize and project agency.

Key arguments presented include:

- Anthropomorphism as a "Zero-Day Vulnerability": Humans are psychologically predisposed to attribute mind and intention to entities that exhibit complex, human-like behavior. AI, particularly through advanced language models, effectively triggers this vulnerability (the ELIZA effect) without requiring genuine consciousness.

- Manufacturing Perception: Media portrayals, often characterized by anthropomorphic language and a focus on "strong AI" narratives, alongside corporate branding strategies that personify AI and promote "sentient brand" concepts, actively shape public perception towards accepting AI as more agentic and understanding than it is.

- Weaponization of Belief: This manufactured belief is then weaponized. Persuasive technologies leverage the perceived authority and understanding of AI to influence behavior and manipulate users. In broader contexts, these perceptions can be exploited for psychological operations, information dominance, and the concentration of power in the hands of those who control AI development and narratives.

- The AI Arms Race and Ethical Erosion: Geopolitical and corporate competition to achieve AI supremacy can lead to strategic blindness, where ethical considerations, safety protocols, and the human costs of AI (such as moral injury in operators of AI-enabled weapons and the dehumanization of conflict) are dangerously sidelined.

- The Locus of Danger: The primary danger is not AI achieving sentience, but humans being led to believe it has, thereby ceding critical judgment, autonomy, and control to systems and the actors who deploy them. AI itself is a tool; the "exploit" lies in how human perception of that tool is manipulated.

B. Call for a Paradigm Shift in Understanding AI Risk

The prevailing discourse on AI risk often bifurcates between downplaying current harms and focusing on speculative, long-term existential threats from superintelligent AI. This whitepaper calls for a paradigm shift: to recognize the significant, present-day psychological and societal risks stemming from the exploitation of perceived AI sentience. This is not an abstract future concern but an ongoing intelligence operation targeting human cognition.

Understanding AI risk through this lens means:

- Prioritizing Psychological Vulnerabilities: Recognizing that human cognitive biases are a primary attack surface.

- Scrutinizing Narrative Control: Analyzing who shapes AI narratives and for what purpose.

- Focusing on Human Actors: Acknowledging that the "weapon" is not AI itself, but human actors using the idea of AI as an instrument of influence and control.

- Addressing Current Harms: Concentrating on mitigating the tangible harms of manipulation, bias, and power concentration that arise from current AI applications and the narratives surrounding them.

This perspective does not dismiss other AI risks but argues that the weaponization of perception is a foundational enabler of many other harms, as it conditions the public and policymakers to accept or even welcome technologies and power structures that may not be in their best interest.

C. The Path Forward: Public Usefulness and Ethical Imperatives

Reclaiming agency in the age of AI exploitation requires a concerted effort to foster critical awareness, demand transparency and accountability, and implement robust ethical and regulatory frameworks. The path forward must be guided by public usefulness and unwavering ethical imperatives:

- Empowerment through Education: Promoting widespread AI literacy and critical thinking skills to inoculate against manipulative narratives and foster an informed public capable of discerning hype from reality.

- Demanding Transparency and Accountability: Insisting that AI systems are explainable and that their developers and deployers are accountable for their impacts, moving away from "black box" systems and opaque corporate practices.

- Regulating Use and Impact, Not Hypothetical States: Focusing governance on the tangible applications and societal consequences of AI—particularly its persuasive and autonomous capabilities—rather than attempting to regulate or define "sentience." This includes strict controls on AI-driven disinformation, manipulative persuasive technologies, and autonomous weapon systems.

- Championing Human-Centric AI: Ensuring that AI development and deployment are guided by human values, prioritize human well-being, and augment rather than diminish human agency and control. This involves ethical design principles, robust oversight, and ensuring that AI serves broad public interests rather than narrow corporate or state agendas.70

- De-escalating Hype Cycles: Academics, responsible industry players, and media must actively work to counter misleading narratives and ground public understanding in the actual capabilities and limitations of AI technology.25

The challenge is not to stop AI development, but to steer it responsibly, ensuring that it serves humanity as a tool, rather than humanity becoming a tool for those who would exploit the illusion of its sentience. The true intelligence operation we must counter is the one that seeks to make us believe in ghosts in the machine, distracting us from the hands on the controls.

Works cited

- Future Center - How AI and Technology are Shaping Psychological ..., accessed May 14, 2025, https://futureuae.com/en-US/Mainpage/Item/9941/war-of-the-mind-how-ai-and-technology-are-shaping-psychological-warfare-in-the-21st-century

- Anthropomorphism and Human-Robot Interaction ..., accessed May 14, 2025, https://cacm.acm.org/research/anthropomorphism-and-human-robot-interaction/

- (PDF) Anthropomorphism in AI: hype and fallacy - ResearchGate, accessed May 14, 2025, https://www.researchgate.net/publication/377976318_Anthropomorphism_in_AI_hype_and_fallacy

- What Is the Eliza Effect? | Built In, accessed May 14, 2025, https://builtin.com/artificial-intelligence/eliza-effect

- ChatGPT now better at faking human emotion - The University of ..., accessed May 14, 2025, https://www.sydney.edu.au/news-opinion/news/2024/05/20/chatgpt-now-better-at-faking-human-emotion.html

- 3 urgent marketing moves in the age of AI & sentient brands | EY - US, accessed May 14, 2025, https://www.ey.com/en_us/cmo/3-urgent-marketing-moves-in-the-age-of-ai-and-sentient-brands

- Is Sentient Marketing The Future of AI-Driven Customer Connection?, accessed May 14, 2025, https://martechvibe.com/article/is-sentient-marketing-the-future-of-ai-driven-customer-connection/

- AI Anthropomorphism: Effects on AI-Human and Human-Human Interactions - JETIR Research Journal, accessed May 14, 2025, https://www.jetir.org/papers/JETIR2410501.pdf

- philarchive.org, accessed May 14, 2025, https://philarchive.org/archive/PLAAIA-4

- Full article: A meta-analysis of anthropomorphism of artificial intelligence in tourism, accessed May 14, 2025, https://www.tandfonline.com/doi/full/10.1080/10941665.2025.2486014?src=

- Artefacts of Change: The Disruptive Nature of Humanoid Robots ..., accessed May 14, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11953219/

- uu.diva-portal.org, accessed May 14, 2025, https://uu.diva-portal.org/smash/get/diva2:1930178/FULLTEXT01.pdf

- AI Explained: Is Anthropomorphism Making AI Too Human? - PYMNTS.com, accessed May 14, 2025, https://www.pymnts.com/news/artificial-intelligence/2024/is-anthropomorphism-making-ai-too-human/

- The illusion of conscious AI - Big Think, accessed May 14, 2025, https://bigthink.com/neuropsych/the-illusion-of-conscious-ai/

- What is Sentient AI and Is it Already Here? - Simplilearn.com, accessed May 14, 2025, https://www.simplilearn.com/what-is-sentient-ai-article

- AI chatbots perpetuate biases when performing empathy, study finds - News, accessed May 14, 2025, https://news.ucsc.edu/2025/03/ai-empathy/

- When humans anthropomorphize non-humans: The impact of ..., accessed May 14, 2025, https://www.ideals.illinois.edu/items/131769

- How Understanding Cognitive Biases Protects Us Against ..., accessed May 14, 2025, https://walton.uark.edu/insights/posts/how-understanding-cognitive-biases-protects-us-against-deepfakes.php

- AI Thinks Like Us: Flaws, Biases, and All, Study Finds ..., accessed May 14, 2025, https://neurosciencenews.com/ai-human-thinking-28535/

- Anthropomorphism Unveiled: A Decade of Systematic Insights in Business and Technology Trends - F1000Research, accessed May 14, 2025, https://f1000research.com/articles/14-281/v1/pdf?article_uuid=c70ec444-cdaa-4be4-8bb3-e9bc3c0f0a73

- Anthropomorphization of Artificial Intelligence - Use cases and ..., accessed May 14, 2025, https://community.openai.com/t/anthropomorphization-of-artificial-intelligence/1245985

- Conscious artificial intelligence and biological naturalism ..., accessed May 14, 2025, https://www.cambridge.org/core/journals/behavioral-and-brain-sciences/article/abs/conscious-artificial-intelligence-and-biological-naturalism/C9912A5BE9D806012E3C8B3AF612E39A

- Conscious artificial intelligence and biological naturalism - PubMed, accessed May 14, 2025, https://pubmed.ncbi.nlm.nih.gov/40257177/

- Strong and weak AI narratives: an analytical framework, accessed May 14, 2025, https://d-nb.info/1352261944/34

- DeepSeek's AI: Navigating the media hype and reality – Monash Lens, accessed May 14, 2025, https://lens.monash.edu/@politics-society/2025/02/07/1387324/deepseeks-ai-navigating-the-media-hype-and-reality

- Gary Marcus - NYU Stern, accessed May 14, 2025, https://www.stern.nyu.edu/experience-stern/about/departments-centers-initiatives/centers-of-research/fubon-center-technology-business-and-innovation/fubon-center-technology-business-and-innovation-events/2022-2023-events-2-1

- AI Snake Oil: What Artificial Intelligence Can Do, What It Can't, and ..., accessed May 14, 2025, https://www.thegeostrata.com/post/book-review-ai-snake-oil

- AI Snake Oil: What Artificial Intelligence Can Do, What It Can't, and How to Tell the Difference - Amazon.com, accessed May 14, 2025, https://www.amazon.com/Snake-Oil-Artificial-Intelligence-Difference/dp/0691271658

- Exploring the Ethical Implications of AI-Powered Personalization in Digital Marketing, accessed May 14, 2025, https://www.researchgate.net/publication/384843767_Exploring_the_Ethical_Implications_of_AI-Powered_Personalization_in_Digital_Marketing

- AI Consciousness: A Dream or a Reality? | AI News - OpenTools, accessed May 14, 2025, https://opentools.ai/news/ai-consciousness-a-dream-or-a-reality

- accessed December 31, 1969, https://opentools.ai/news/ai-consciousness-a-dream-or-a-reality/

- Perceptions of Sentient AI and Other Digital Minds: Evidence from the AI, Morality, and Sentience (AIMS) Survey - arXiv, accessed May 14, 2025, https://arxiv.org/html/2407.08867v3

- arxiv.org, accessed May 14, 2025, https://arxiv.org/abs/2303.08721

- the effect of a chatbot's anthropomorphic features on trust and user experience, accessed May 14, 2025, https://theses.ubn.ru.nl/bitstreams/032c0012-5088-45f6-96f3-3c8e4fec3f05/download

- Misplaced Capabilities: Evaluating the Risks of Anthropomorphism ..., accessed May 14, 2025, https://ojs.aaai.org/index.php/AIES/article/view/31903

- New Ethics Risks Courtesy of AI Agents? Researchers Are on the ..., accessed May 14, 2025, https://www.ibm.com/think/insights/ai-agent-ethics

- The ethics of persuasive design - Virtusa, accessed May 14, 2025, https://www.virtusa.com/insights/perspectives/the-ethics-of-persuasive-design

- Ethical AI Persuasion → Term - Prism → Sustainability Directory, accessed May 14, 2025, https://prism.sustainability-directory.com/term/ethical-ai-persuasion/

- A Digital Fight For Democracy: The Global AI Arms Race - McCain ..., accessed May 14, 2025, https://www.mccaininstitute.org/resources/blog/a-digital-fight-for-democracy-the-global-ai-arms-race/

- AI Risks that Could Lead to Catastrophe | CAIS, accessed May 14, 2025, https://www.safe.ai/ai-risk

- Compass Vision: Fighting AI-Generated Deepfakes and Narrative Attacks with Blackbird.AI's Constellation Narrative Intelligence Platform, accessed May 14, 2025, https://blackbird.ai/blog/compass-vision-blackbird-deepfake-disinformation/

- AI-Generated Deepfakes and Narrative Attacks: How Compass Vision Confronts the Global Information Crisis - Cybersecurity Excellence Awards, accessed May 14, 2025, https://cybersecurity-excellence-awards.com/candidates/ai-generated-deepfakes-and-narrative-attacks-how-compass-vision-confronts-the-global-information-crisis-2025/

- Narrative Attack and Deepfake Scandals Expose AI's Threat to Celebrities, Executives, and Influencers | Blackbird.AI, accessed May 14, 2025, https://blackbird.ai/blog/celebrity-deepfake-narrative-attacks/

- AI Misinformation - IBM, accessed May 14, 2025, https://www.ibm.com/think/insights/ai-misinformation

- Confronting AI-based Narrative Manipulation in 2025: Top Tech Challenges and Solutions, accessed May 14, 2025, https://blackbird.ai/blog/confronting-ai-narrative-manipulation/

- accessed December 31, 1969, https://blackbird.ai/blog/compass-vision-fighting-ai-generated-deepfakes-and-narrative-attacks-with-blackbird-ais-constellation-narrative-intelligence-platform

- AI at the Helm: Transforming Crisis Communication Through Theory and Advancing Technology - Clemson OPEN, accessed May 14, 2025, https://open.clemson.edu/cgi/viewcontent.cgi?article=5434&context=all_theses

- 14 Dangers of Artificial Intelligence (AI) | Built In, accessed May 14, 2025, https://builtin.com/artificial-intelligence/risks-of-artificial-intelligence

- Expert Brief - AI and Market Concentration — Open Markets Institute, accessed May 14, 2025, https://www.openmarketsinstitute.org/publications/expert-brief-ai-and-market-concentration-courtney-radsch-max-vonthun

- Artificial Intelligence — Open Markets Institute, accessed May 14, 2025, https://www.openmarketsinstitute.org/a-i

- Existential risk from artificial intelligence - Wikipedia, accessed May 14, 2025, https://en.wikipedia.org/wiki/Existential_risk_from_artificial_intelligence

- The ethical dilemmas of AI | USC Annenberg School for Communication and Journalism, accessed May 14, 2025, https://annenberg.usc.edu/research/center-public-relations/usc-annenberg-relevance-report/ethical-dilemmas-ai

- AI inventions – the ethical and societal implications | Managing Intellectual Property, accessed May 14, 2025, https://www.managingip.com/article/2bc988k82fc0ho408vwu8/expert-analysis/ai-inventions-the-ethical-and-societal-implications

- Top 10 Ethical Concerns About AI and Lack of Accountability - Redress Compliance, accessed May 14, 2025, https://redresscompliance.com/top-10-ethical-concerns-about-ai-and-lack-of-accountability/

- AI Ethics Concerns: A Business-Oriented Guide to Responsible AI | SmartDev, accessed May 14, 2025, https://smartdev.com/ai-ethics-concerns-a-business-oriented-guide-to-responsible-ai/

- 5 Ethical Considerations of AI in Business - HBS Online, accessed May 14, 2025, https://online.hbs.edu/blog/post/ethical-considerations-of-ai

- Ethical Artificial Intelligence: Navigating the Path to Sentience | Veracode, accessed May 14, 2025, https://www.veracode.com/wp-content/uploads/2024/12/ethical-artificial-intelligence-navigating-the-path-to-sentience.pdf

- Possibility, impact, and ethical implications of Sentient AI - RoboticsBiz, accessed May 14, 2025, https://roboticsbiz.com/possibility-impact-and-ethical-implications-of-sentient-ai/

- What are the ethical challenges and potential risks associated with ..., accessed May 14, 2025, https://www.quora.com/What-are-the-ethical-challenges-and-potential-risks-associated-with-the-rapid-advancement-of-artificial-Intelligence

- Factors influencing trust in algorithmic decision-making: an indirect scenario-based experiment - Frontiers, accessed May 14, 2025, https://www.frontiersin.org/journals/artificial-intelligence/articles/10.3389/frai.2024.1465605/full

- How AI reinforces gender bias—and what we can do about it | UN Women – Headquarters, accessed May 14, 2025, https://www.unwomen.org/en/news-stories/interview/2025/02/how-ai-reinforces-gender-bias-and-what-we-can-do-about-it

- A Practical Guide to AI Governance and Embedding Ethics in AI Solutions - CMS Wire, accessed May 14, 2025, https://www.cmswire.com/digital-experience/a-practical-guide-to-ai-governance-and-embedding-ethics-in-ai-solutions/

- Ethical Considerations in AI-Driven SEO in 2025 - Digital Tools Mentor, accessed May 14, 2025, https://www.bestdigitaltoolsmentor.com/ai-tools/seo/ethical-considerations-in-ai-driven-seo/

- The problem of algorithmic bias in AI-based military decision support systems, accessed May 14, 2025, https://blogs.icrc.org/law-and-policy/2024/09/03/the-problem-of-algorithmic-bias-in-ai-based-military-decision-support-systems/

- We should prevent the creation of artificial sentience — EA Forum, accessed May 14, 2025, https://forum.effectivealtruism.org/posts/9adaExTiSDA3o3ipL/we-should-prevent-the-creation-of-artificial-sentience

- AFB Report Spotlights Impact of AI for Disabled People | American Foundation for the Blind, accessed May 14, 2025, https://afb.org/news-publications/press-room/press-release-archive/press-release-2025/afb-report-spotlights-impact-AI-disabled-people

- Seeking Stability in the Competition for AI Advantage - RAND, accessed May 14, 2025, https://www.rand.org/pubs/commentary/2025/03/seeking-stability-in-the-competition-for-ai-advantage.html

- The end of MAD? Technological innovation and the future of nuclear retaliatory capabilities - Taylor & Francis Online, accessed May 14, 2025, https://www.tandfonline.com/doi/full/10.1080/01402390.2024.2428983

- Mutual Assured AI Malfunction: A New Cold War Strategy for AI Superpowers - Maginative, accessed May 14, 2025, https://www.maginative.com/article/mutual-assured-ai-malfunction-a-new-cold-war-strategy-for-ai-superpowers/

- Recommendation on the Ethics of Artificial Intelligence - Legal Affairs - UNESCO, accessed May 14, 2025, https://www.unesco.org/en/legal-affairs/recommendation-ethics-artificial-intelligence

- The weaponization of artificial intelligence: What the public needs to be aware of - Frontiers, accessed May 14, 2025, https://www.frontiersin.org/journals/artificial-intelligence/articles/10.3389/frai.2023.1154184/full

- Defense Primer: U.S. Policy on Lethal Autonomous Weapon Systems | Congress.gov, accessed May 14, 2025, https://www.congress.gov/crs-product/IF11150

- Ukraine's Future Vision and Current Capabilities for Waging AI ..., accessed May 14, 2025, https://www.csis.org/analysis/ukraines-future-vision-and-current-capabilities-waging-ai-enabled-autonomous-warfare

- AI and autonomous weapons systems: the time for action is now ..., accessed May 14, 2025, https://www.saferworld-global.org/resources/news-and-analysis/post/1037-ai-and-autonomous-weapons-systems-the-time-for-action-is-now

- The Dehumanization of ISR: Israel's Use of Artificial Intelligence In Warfare, accessed May 14, 2025, https://georgetownsecuritystudiesreview.org/2025/01/09/the-dehumanization-of-isr-israels-use-of-artificial-intelligence-in-warfare/

- The Pentagon says AI is speeding up its 'kill chain' | TechCrunch : r/Futurology - Reddit, accessed May 14, 2025, https://www.reddit.com/r/Futurology/comments/1i5926w/the_pentagon_says_ai_is_speeding_up_its_kill/

- The ethical implications of AI in warfare - Queen Mary University of London, accessed May 14, 2025, https://www.qmul.ac.uk/research/featured-research/the-ethical-implications-of-ai-in-warfare/

- The Ethics of Acquiring Disruptive Technologies: Artificial Intelligence, Autonomous Weapons, and Decision Support Systems - National Defense University Press, accessed May 14, 2025, https://ndupress.ndu.edu/Portals/68/Documents/prism/prism_8-3/prism_8-3_Pfaff_128-145.pdf

- apps.dtic.mil, accessed May 14, 2025, https://apps.dtic.mil/sti/trecms/pdf/AD1150884.pdf

- Ethics and Armed Forces – Magazine 2014-01 - Stress among UAV ..., accessed May 14, 2025, https://www.ethikundmilitaer.de/en/magazine-datenbank/detail/2014-01/article/stress-among-uav-operators-posttraumatic-stress-disorder-existential-crisis-or-moral-injury

- PTSD in Drone Operators and Pilots: The Silent Battle Beyond the Screen - Hill & Ponton, accessed May 14, 2025, https://www.hillandponton.com/ptsd-drone-operators-pilots/

- Eye in the sky: Understanding the mental health of unmanned aerial vehicle operators, accessed May 14, 2025, https://jmvh.org/article/eye-in-the-sky-understanding-the-mental-health-of-unmanned-aerial-vehicle-operators/

- Psychological Dimensions of Drone Warfare - CHRISTOPHER J. FERGUSON, accessed May 14, 2025, https://www.christopherjferguson.com/Drones.pdf

- Drones Having Psychological Impact On Soldiers | TRADOC G2 Operational Environment Enterprise, accessed May 14, 2025, https://oe.tradoc.army.mil/product/drones-having-psychological-impact-on-soldiers/

- Artificial Intelligence Decision Support Systems in Resource-Limited Environments to Save Lives and Reduce Moral Injury | Military Medicine | Oxford Academic, accessed May 14, 2025, https://academic.oup.com/milmed/advance-article/doi/10.1093/milmed/usaf010/7965042

- Lethal Autonomous Weapon Systems and the Potential of Moral Injury, accessed May 14, 2025, https://digitalcommons.salve.edu/doctoral_dissertations/206/

- Dehumanization risks associated with artificial intelligence use - PubMed, accessed May 14, 2025, https://pubmed.ncbi.nlm.nih.gov/40310200/

- Standardized Ethical Metrics: Setting Global Benchmarks for Responsible AI - AIGN, accessed May 14, 2025, https://aign.global/ai-ethics-consulting/patrick-upmann/standardized-ethical-metrics-setting-global-benchmarks-for-responsible-ai/

- AI Ethics: Integrating Transparency, Fairness, and Privacy in AI Development, accessed May 14, 2025, https://www.tandfonline.com/doi/full/10.1080/08839514.2025.2463722

- Exploring The Ethics of AI in Design for Creatives, accessed May 14, 2025, https://parachutedesign.ca/blog/ethics-of-ai-in-design/

- Ethical AI and Corporate Social Responsibility: Legal Frameworks in Action - Senna Labs, accessed May 14, 2025, https://sennalabs.com/blog/ethical-ai-and-corporate-social-responsibility-legal-frameworks-in-action
