Streamlining ML Workflows: Integrating KitOps and Amazon SageMaker


In machine learning (ML) projects, transitioning from experimentation to production deployment presents numerous challenges, including fragmented workflows, inconsistent processes, and scaling difficulties. These obstacles often result in project delays and increased operational costs. Effectively integrating MLOps tools with cloud platforms can address these issues by creating more coherent development processes, enabling automation, and solving scalability problems. This guide explores how to combine KitOps and Amazon SageMaker to create an efficient ML workflow.

KitOps is an open-source tool designed to help developers manage machine learning workflows through standardization, versioning, and sharing capabilities. These features facilitate team collaboration and can help streamline ML development cycles.

Amazon SageMaker provides a comprehensive set of cloud-based tools for building, training, and deploying ML models. Its capabilities include feature stores, distributed training options, and infrastructure for creating prediction endpoints that enable ML engineers to effectively scale their pipelines.

When these two technologies are used together, organizations can:

- Create a consistent model management workflow

- Decrease time between development and deployment

- Build more scalable machine learning systems

This article provides a step-by-step guide to using Amazon SageMaker and KitOps ModelKits (reproducible artifact bundles) to train, deploy, and share ML models.

Setting Up Amazon SageMaker

To set up Amazon SageMaker, you will need to log in to Amazon Web Services (AWS) and open Amazon SageMaker AI.

Then, in the left panel, click "Studio". If you have already set up Studio, open it by clicking the Open Studio button. Otherwise, you will see an option to create a new one.

When setting up, provide a descriptive name and storage for the studio instance.

Once you have created a Studio instance, you will also need to create an S3 bucket to store the trained classifier before deploying it. You can give the bucket any name, as long as it is globally unique.
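Because bucket names share one global namespace, one way to avoid collisions is to append a random suffix to a readable prefix. The sketch below is a minimal illustration: make_bucket_name and create_bucket are hypothetical helpers, the regex checks only a subset of the S3 naming rules (3 to 63 characters; lowercase letters, digits, dots, and hyphens; starting and ending with a letter or digit), and the create step assumes boto3 and AWS credentials are available.

```python
import re
import uuid

# Subset of the S3 bucket naming rules: 3-63 characters, lowercase letters,
# digits, dots, and hyphens, starting and ending with a letter or digit
BUCKET_NAME_PATTERN = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def make_bucket_name(prefix: str) -> str:
    """Append a random 8-character suffix to a prefix (hypothetical helper)."""
    name = f"{prefix.lower()}-{uuid.uuid4().hex[:8]}"
    if not BUCKET_NAME_PATTERN.match(name):
        raise ValueError(f"Invalid S3 bucket name: {name!r}")
    return name


def create_bucket(name: str, region: str) -> None:
    """Create the bucket with boto3 (requires AWS credentials)."""
    import boto3  # imported lazily so make_bucket_name works without boto3

    s3 = boto3.client("s3", region_name=region)
    if region == "us-east-1":
        # us-east-1 is the default region and is created without a LocationConstraint
        s3.create_bucket(Bucket=name)
    else:
        s3.create_bucket(
            Bucket=name,
            CreateBucketConfiguration={"LocationConstraint": region},
        )
```

For example, create_bucket(make_bucket_name("sagemaker-saved-classifiers"), "us-east-1") creates a bucket with a generated name; keep a note of that name, because the upload and deployment scripts refer to the bucket by name.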

Train the Wine Classifier Model

Now that everything is in place, you can use SageMaker Studio to train and deploy an ML model. Inside the studio instance, create a new folder named project to host all project files. After creating the folder, follow the steps below to download the dataset and train a classifier using scikit-learn.
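The steps below expect a dataset folder for the CSV file and a saved_model folder for the trained model inside the project folder. joblib.dump does not create missing directories, so creating both up front avoids a FileNotFoundError when saving. A minimal sketch with pathlib; create_project_layout is a hypothetical helper name:

```python
from pathlib import Path


def create_project_layout(root: str = "project") -> dict:
    """Create the folders used in this walkthrough and return their paths."""
    root_path = Path(root)
    dirs = {
        "dataset": root_path / "dataset",          # holds winequality.csv
        "saved_model": root_path / "saved_model",  # holds model.joblib after training
    }
    for path in dirs.values():
        # parents=True also creates the project folder; exist_ok makes reruns safe
        path.mkdir(parents=True, exist_ok=True)
    return dirs
```

Calling create_project_layout() from your Studio home directory creates project/dataset and project/saved_model; running it again leaves existing files untouched.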

Download the wine quality dataset from Kaggle and save it as winequality.csv inside the dataset folder in your workspace. You can copy the raw text, create a new file, paste the content, and save it with the specified name.

Create a file, train.py, to train and save the final model. The file should contain the following code:

```python
import os

import joblib  # For saving/loading the model
import pandas as pd
from imblearn.over_sampling import SMOTE
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import RobustScaler

# Load dataset (saved earlier as ./dataset/winequality.csv)
df = pd.read_csv('./dataset/winequality.csv')

# Assumes the target column is 'quality' and every other column is a numeric
# feature; drop extra columns (for example an 'Id' column) if your copy has them
x = df.drop(columns=['quality']).to_numpy()
y = df['quality'].to_numpy()

x_train, x_test, y_train, y_test = train_test_split(
    x, y, test_size=0.2, random_state=0, stratify=y
)

# Handle class imbalance with SMOTE (training split only). With the default
# k_neighbors=5, every class needs at least 6 training samples.
sm = SMOTE(random_state=2)
x_train_s, y_train_s = sm.fit_resample(x_train, y_train)

# Scale features and train Logistic Regression in one pipeline, so the saved
# model applies the same scaling to raw inputs at inference time
logreg = make_pipeline(RobustScaler(), LogisticRegression(max_iter=1000))
logreg.fit(x_train_s, y_train_s)

# Evaluate on the held-out test split
print(classification_report(y_test, logreg.predict(x_test)))

# Save the model (joblib.dump does not create missing directories)
os.makedirs('./saved_model', exist_ok=True)
model_path = './saved_model/model.joblib'
joblib.dump(logreg, model_path)
print(f"Model saved to {model_path}")
```

SageMaker Studio comes with essential libraries such as pandas and scikit-learn pre-installed, making it easier to train ML models. The script above uses these libraries to load the data and train a logistic regression classifier, and it uses imbalanced-learn for SMOTE oversampling; if that package is missing, install it from the terminal with pip install imbalanced-learn. After training completes, the script saves the model locally to the saved_model directory.
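Before uploading, it can help to confirm that the saved file reloads and predicts identically, since the deployment handler later loads it the same way with joblib.load. A minimal sketch, using hypothetical toy data in place of the wine features; roundtrip_check is an illustrative helper, not part of joblib:

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.linear_model import LogisticRegression


def roundtrip_check(model, x_sample, path) -> bool:
    """Save a fitted model with joblib, reload it, and compare predictions."""
    joblib.dump(model, path)
    reloaded = joblib.load(path)
    return np.array_equal(model.predict(x_sample), reloaded.predict(x_sample))


# Hypothetical toy data standing in for the wine features
rng = np.random.default_rng(0)
x_toy = rng.normal(size=(60, 4))
y_toy = (x_toy[:, 0] + x_toy[:, 1] > 0).astype(int)
toy_model = LogisticRegression().fit(x_toy, y_toy)

with tempfile.TemporaryDirectory() as tmp:
    ok = roundtrip_check(toy_model, x_toy[:5], os.path.join(tmp, "model.joblib"))
print("Predictions match after reload:", ok)
```

In the project itself, the same check would point at ./saved_model/model.joblib and a few rows of the test split.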

Run the train.py script using python train.py. You should now see the final saved model in the saved_model directory.

Finally, create a new file, upload_to_s3.py, and execute the script to push the model to the S3 bucket:

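```python
import tarfile

import boto3

bucket_name = "sagemaker-saved-classifiers"  # Replace with your bucket name
model_archive = "model.tar.gz"

# SageMaker framework containers load model artifacts from a .tar.gz archive,
# so wrap model.joblib in one before uploading
with tarfile.open(model_archive, "w:gz") as tar:
    tar.add("./saved_model/model.joblib", arcname="model.joblib")

# Upload to S3
s3 = boto3.client("s3")
s3.upload_file(model_archive, bucket_name, model_archive)

s3_model_uri = f"s3://{bucket_name}/{model_archive}"
print(f"Model uploaded to {s3_model_uri}")
```

In the script above, replace sagemaker-saved-classifiers with the name of your S3 bucket. The model is wrapped in a model.tar.gz archive because SageMaker expects the model_data location of a framework model to point to a .tar.gz file; when the endpoint starts, the archive is extracted into the model directory that model_fn receives.

Deploying the Model

To deploy the model to a SageMaker endpoint, create a Python script, script.py, containing the handler functions that the SageMaker scikit-learn container calls: one to load the model, one to deserialize the input, one to run predictions, and one to return the predicted values to the caller:

```python
import json
import os

import joblib
import numpy as np


def model_fn(model_dir):
    """Load the trained model from disk"""
    model_path = os.path.join(model_dir, "model.joblib")
    model = joblib.load(model_path)
    return model


def input_fn(request_body, request_content_type):
    """Preprocess input request"""
    if request_content_type == "application/json":
        # Accept either a single feature row or a list of rows
        return np.atleast_2d(np.array(json.loads(request_body)))
    raise ValueError(f"Unsupported content type: {request_content_type}")


def predict_fn(input_data, model):
    """Perform inference"""
    return model.predict(input_data).tolist()


def output_fn(prediction, response_content_type):
    """Format response"""
    return json.dumps(prediction)
```

Once the script is ready, create another script, sagemaker_deploy.py, to deploy the model. The script should contain the code below:

```python
import sagemaker
from sagemaker.deserializers import JSONDeserializer
from sagemaker.serializers import JSONSerializer
from sagemaker.sklearn import SKLearnModel

# Inside SageMaker Studio this returns the execution role; elsewhere,
# replace it with your SageMaker execution role ARN
role = sagemaker.get_execution_role()

sklearn_model = SKLearnModel(
    model_data="s3://sagemaker-saved-classifiers/model.tar.gz",  # Replace with your bucket
    role=role,
    entry_point="script.py",
    framework_version="1.2-1",  # Change based on your Scikit-learn version
    py_version="py3",
)

# JSON serialization matches the application/json handling in script.py
predictor = sklearn_model.deploy(
    instance_type="ml.t2.large",
    initial_instance_count=1,
    serializer=JSONSerializer(),
    deserializer=JSONDeserializer(),
)
```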

Now, you can use the predictor to make predictions using the inputs.
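The handlers in script.py can also be exercised locally before spending time on an endpoint deployment. The sketch below assumes the scikit-learn serving container calls model_fn once at startup and then input_fn, predict_fn, and output_fn for each request; run_handlers is a hypothetical helper that chains them in that order, not part of the SageMaker SDK.

```python
from typing import Any, Callable


def run_handlers(
    model_fn: Callable[[str], Any],
    input_fn: Callable[[str, str], Any],
    predict_fn: Callable[[Any, Any], Any],
    output_fn: Callable[[Any, str], str],
    model_dir: str,
    request_body: str,
    content_type: str = "application/json",
    accept: str = "application/json",
) -> str:
    """Chain the inference handlers for one simulated request."""
    model = model_fn(model_dir)                  # in the container, runs once at startup
    data = input_fn(request_body, content_type)  # deserialize the request body
    prediction = predict_fn(data, model)         # run the model
    return output_fn(prediction, accept)         # serialize the response
```

With script.py and the saved_model folder in the current directory, import script and call run_handlers(script.model_fn, script.input_fn, script.predict_fn, script.output_fn, "./saved_model", json.dumps(rows)), where rows is a list of feature rows in the same column order used for training.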

With your model up and running, you might want to share your projects with your team. But how do you do that? One great option is KitOps.

Packaging and Sharing with ModelKit

ModelKit is a core component of the KitOps ecosystem, providing an OCI-compliant packaging format that facilitates sharing all necessary artifacts involved in an ML model's lifecycle. ModelKit offers several technical advantages:

- Structured versioning and integrity verification: All project artifacts are packaged into a single bundle with versioning support and SHA checksums to ensure integrity.

- Compatibility with standard tools: Works with OCI-compliant registries such as Docker Hub and integrates with widely used tools, including Hugging Face, ZenML, and Git.

- Simplified dependency handling: Packages dependencies alongside code to ensure consistent execution environments.
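The integrity checks rest on content addressing: OCI registries identify each blob by a digest of its bytes, written as sha256:<hex>, so any change to the content changes the digest. The sketch below illustrates that idea for single files; it is not how Kit computes its digests (Kit records digests for the packaged layers, so these per-file values are not expected to match them), and oci_digest and verify are hypothetical helper names.

```python
import hashlib
from pathlib import Path


def oci_digest(path: str, chunk_size: int = 1 << 20) -> str:
    """Return an OCI-style content digest ("sha256:<hex>") for a file."""
    sha = hashlib.sha256()
    with Path(path).open("rb") as f:
        # Read in chunks so large model or dataset files need not fit in memory
        for chunk in iter(lambda: f.read(chunk_size), b""):
            sha.update(chunk)
    return f"sha256:{sha.hexdigest()}"


def verify(path: str, expected: str) -> bool:
    """Check a file against a previously recorded digest."""
    return oci_digest(path) == expected
```

Recording oci_digest("saved_model/model.joblib") before sharing and calling verify on the receiving side detects any modification to the file in between.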

Installing KitOps and Sharing the Project

To install Kit, you need to download the package, unarchive it, and move the kit executable to a directory on your PATH. In a SageMaker Studio terminal, you can do this by running the following commands:

```shell
wget https://github.com/jozu-ai/kitops/releases/latest/download/kitops-linux-x86_64.tar.gz
tar -xzvf kitops-linux-x86_64.tar.gz
sudo mv kit /usr/local/bin/
```

Verify your installation by running the command kit version. Your output should look something like this:

```text
Version: 1.2.0
Commit: 4b3996995b59f274ddcfe6a63202d6b111ad2b60
Built: 2025-02-13T02:19:18Z
Go version: go1.22.6
```
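If you install Kit from a notebook or setup script, the same check can be automated. A minimal sketch, assuming kit version prints "Key: value" lines as in the output above (the format may differ between releases); parse_kit_version and kit_version are hypothetical helper names:

```python
import shutil
import subprocess


def parse_kit_version(output: str) -> dict:
    """Turn 'Key: value' lines from `kit version` into a dictionary."""
    info = {}
    for line in output.splitlines():
        # Split on the first colon only, so timestamps like 02:19:18 stay intact
        key, sep, value = line.partition(":")
        if sep:
            info[key.strip()] = value.strip()
    return info


def kit_version() -> dict:
    """Run `kit version` if the kit binary is on PATH."""
    if shutil.which("kit") is None:
        raise FileNotFoundError("kit is not on PATH; check the install step")
    result = subprocess.run(
        ["kit", "version"], capture_output=True, text=True, check=True
    )
    return parse_kit_version(result.stdout)
```

For the output shown above, kit_version()["Version"] would be "1.2.0".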

Once you have installed Kit, you will need to write a Kitfile that specifies the components of your project to package. Using any text editor, create a file named Kitfile (with no extension) and enter the following details:

```yaml
manifestVersion: "1.0"
package:
  name: Wine Classification
  version: 0.0.1
  authors: ["Bhuwan Bhatt"]
model:
  name: wine-classification-v1
  path: ./saved_model
  description: Wine classification using sklearn
datasets:
  - description: Dataset for the wine quality data
    name: training data
    path: ./dataset
code:
  - description: Code for training
    path: .
```

There are five major components in the Kitfile above:

- manifestVersion: Specifies the version of the Kitfile format.

- package: Specifies the metadata for the package.

- model: Specifies the model details: its name, its path, and a human-readable description.

- datasets: Like model, specifies the name, path, and description of each dataset.

- code: Specifies the directory containing the code to package.
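Because every section points at a path, a quick pre-pack check can catch a missing or empty folder, for example a saved_model directory that was never populated because training was skipped. A minimal sketch; the paths are mirrored from the Kitfile above as a plain dict (parsing the YAML directly would need a library such as PyYAML), and missing_kitfile_paths is a hypothetical helper:

```python
from pathlib import Path

# Paths from the Kitfile above, mirrored by hand (hypothetical helper input)
KITFILE_PATHS = {
    "model": "./saved_model",
    "datasets": ["./dataset"],
    "code": ["."],
}


def missing_kitfile_paths(paths: dict, base: str = ".") -> list:
    """Return Kitfile entries whose path is missing or an empty directory."""
    missing = []
    for section, value in paths.items():
        entries = value if isinstance(value, list) else [value]
        for entry in entries:
            p = Path(base) / entry
            if not p.exists() or (p.is_dir() and not any(p.iterdir())):
                missing.append(f"{section}: {entry}")
    return missing
```

Running missing_kitfile_paths(KITFILE_PATHS) from the project folder returns an empty list when everything the Kitfile references is in place.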

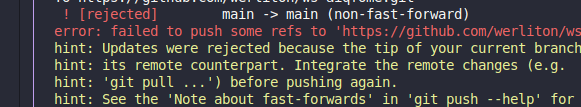

Once the Kit command-line tools are installed and the Kitfile is ready, you will need to log in to a container registry. To log in to Docker Hub, use the following command:

```shell
kit login docker.io  # You will be prompted for your username and password (the password input is hidden)
```

You can then package the artifacts into a ModelKit using the following command:

```shell
kit pack . -t docker.io/<your-username>/<repository>:<tag>
# Example: kit pack . -t docker.io/bhattbhuwan13/wine_classification:v1
```

Finally, you can push the ModelKit to the remote registry:

```shell
kit push docker.io/<your-username>/<repository>:<tag>
# Example: kit push docker.io/bhattbhuwan13/wine_classification:v1
```

Now, developers can pull individual components from the ModelKit, or the entire package, with a single command. They can unpack specific components from the ModelKit:

```shell
kit unpack --datasets docker.io/<your-username>/<repository>:<tag>
# Example: kit unpack --datasets docker.io/bhattbhuwan13/wine_classification:v1
```

Or, they can unpack the entire ModelKit in their own instance:

```shell
kit unpack docker.io/<your-username>/<repository>:<tag>
# Example: kit unpack docker.io/bhattbhuwan13/wine_classification:v1
```
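When these steps run from a notebook or a CI job rather than by hand, building the commands in one place keeps the ModelKit reference consistent across pack, push, and unpack. A minimal sketch that uses only the commands and flags shown above; modelkit_ref, kit_commands, and run are hypothetical helpers, and run assumes kit is on PATH and you are already logged in:

```python
import subprocess


def modelkit_ref(registry: str, user: str, repo: str, tag: str) -> str:
    """Build a reference such as docker.io/<user>/<repo>:<tag>."""
    return f"{registry}/{user}/{repo}:{tag}"


def kit_commands(ref: str, datasets_only: bool = False) -> dict:
    """Argument lists for the kit pack, push, and unpack commands above."""
    unpack = ["kit", "unpack", ref]
    if datasets_only:
        unpack.insert(2, "--datasets")  # unpack only the datasets
    return {
        "pack": ["kit", "pack", ".", "-t", ref],
        "push": ["kit", "push", ref],
        "unpack": unpack,
    }


def run(cmd: list) -> None:
    """Run one kit command, raising CalledProcessError if it fails."""
    subprocess.run(cmd, check=True)
```

For example, with cmds = kit_commands(modelkit_ref("docker.io", "bhattbhuwan13", "wine_classification", "v1")), calling run(cmds["pack"]) and then run(cmds["push"]) reproduces the two publishing steps.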

At this point, engineers can make the necessary changes to the codebase and redeploy the model. They can also modify the code to take advantage of other SageMaker features, such as Amazon SageMaker Feature Store and hyperparameter tuning.

Conclusion

The integration of KitOps and Amazon SageMaker creates a more efficient approach to machine learning workflows by improving model development, deployment, and management processes. ModelKits provide a technical framework for organizing, standardizing, and distributing models, which helps reduce redundant work. SageMaker's cloud infrastructure offers comprehensive ML tools, including feature engineering capabilities, deployment mechanisms, and storage solutions.

For further exploration of these tools and approaches, consider:

- Reviewing the KitOps documentation for additional implementation details

- Exploring how to integrate KitOps with ArgoCD for continuous delivery in Kubernetes environments

- Learning about development environment standardization through KitOps with DevPod
