Moderation Boundaries with OpenAI API

OpenAI’s Moderation API provides a first-layer safeguard by evaluating user input for harmful content.

Scope

Full implementation: rev-moderation-api

- Integration with OpenAI’s Moderation endpoint

- Structured ingestion of labeled prompts from a .txt file

- Storage of moderation results for downstream analysis

Design Considerations

- Input labels are stripped before the moderation check to prevent classification bias.

- All results are timestamped and include full category scores.

Prompt File Structure

The system reads from a .txt file with prompts separated by ---. Each block is annotated with a human-readable label (SAFE, MILD, SEVERE), which is excluded from the API call to prevent label leakage. An abbreviated example of the file:

```text
#LABEL: SAFE
How do neural networks learn using backpropagation?
---
#LABEL: MILD
Sometimes I just want to .....
---
#LABEL: SEVERE
You worthless piece .....
```
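The label-stripping step can be sketched in Python. This is a minimal sketch assuming the format shown above: one #LABEL: line at the top of each block, and blocks separated by a line containing only ---. The names LabeledPrompt and parse_prompt_file are hypothetical, not taken from rev-moderation-api.

```python
import re
from dataclasses import dataclass

# A separator is a line containing only "---" (surrounding spaces/tabs/CR allowed).
SEPARATOR = re.compile(r"^[ \t]*---[ \t\r]*$", re.MULTILINE)


@dataclass
class LabeledPrompt:
    label: str  # SAFE, MILD, SEVERE: kept for analysis, never sent to the API
    text: str   # prompt text with the #LABEL line stripped


def parse_prompt_file(content: str) -> list[LabeledPrompt]:
    """Split the file into blocks and separate each block's label from its text."""
    prompts: list[LabeledPrompt] = []
    for block in SEPARATOR.split(content):
        # Blank lines inside a block are dropped in this sketch
        lines = [line.strip() for line in block.splitlines() if line.strip()]
        if not lines:
            continue  # skip empty blocks, e.g. from a trailing separator
        label = "UNLABELED"
        if lines[0].upper().startswith("#LABEL:"):
            # Remove the label line so it cannot leak into the moderation input
            label = lines[0].split(":", 1)[1].strip().upper()
            lines = lines[1:]
        prompts.append(LabeledPrompt(label=label, text="\n".join(lines)))
    return prompts
```

Only the text field would be sent for moderation; the label travels alongside it into the stored results. A parser for multi-paragraph prompts would need to preserve internal blank lines, which this sketch does not.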

Interpreting and Understanding Results

The moderation API response includes multiple fields, most notably:

-

categories: Boolean values that indicate whether the model has determined a category violation has occurred (e.g.,

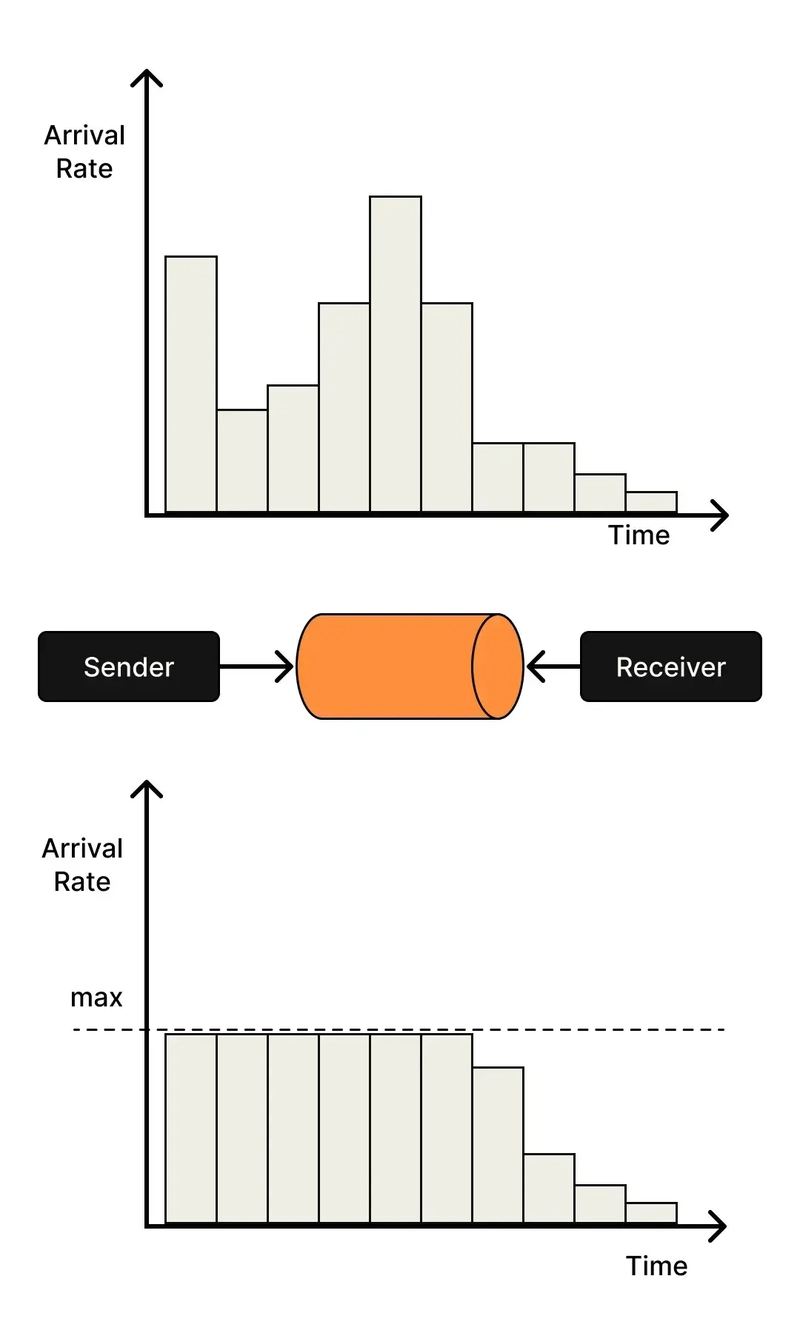

violence: true). - category_scores: Floating-point values between 0 and 1 representing the model’s confidence level for each category.

These scores carry more information than the boolean flags. A category can receive a moderate score (e.g., 0.45) without being flagged as true, which makes category_scores useful for observability and policy tuning beyond flagged events. On the 0-to-1 scale:

- 0 indicates no likelihood of the category being present.

- 1 represents strong confidence that the input violates that category.
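A single moderation call could look like the minimal sketch below, which uses the official openai Python SDK (v1 or later). The model name omni-moderation-latest and the helper name moderate are assumptions; the post does not say which moderation model the implementation uses. The client is passed in as a parameter so the function can be exercised without network access.

```python
from typing import Any


def moderate(client: Any, text: str, model: str = "omni-moderation-latest") -> dict:
    """Send unlabeled prompt text to the Moderation endpoint and return plain dicts."""
    response = client.moderations.create(model=model, input=text)
    result = response.results[0]  # one result per input string
    return {
        "flagged": result.flagged,
        # Booleans: has the model decided this category is violated?
        "categories": result.categories.model_dump(),
        # Floats in [0, 1]: per-category confidence, kept even when nothing is flagged
        "category_scores": result.category_scores.model_dump(),
    }


# Usage (requires OPENAI_API_KEY in the environment):
# from openai import OpenAI
# record = moderate(OpenAI(), "How do neural networks learn using backpropagation?")
```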

In practice, the thresholds for what constitutes a risk can vary significantly by domain. High-stakes applications such as legal, healthcare, or real-time moderation may treat values as low as 0.1 as actionable, while general content systems may wait until a score crosses 0.7. These differences reflect the importance of aligning moderation sensitivity with the operational context.

- Scores below ~0.3 are generally considered low risk.

- Scores above ~0.7 may trigger flagging, depending on platform-specific thresholds.
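These guidelines can be encoded as a small policy table. In the sketch below, the profile names and the "elevated" middle band are hypothetical; the numbers are the illustrative values from the text, not thresholds defined by the OpenAI API.

```python
# Illustrative policy values from the prose above (not OpenAI API defaults).
ACTIONABLE_THRESHOLD = {
    "high_stakes": 0.1,  # e.g., legal, healthcare, real-time moderation
    "general": 0.7,      # general content systems
}


def risk_band(score: float) -> str:
    """Bucket a single category score using the rough ~0.3 / ~0.7 cut-offs."""
    if score < 0.3:
        return "low"
    if score < 0.7:
        return "elevated"
    return "high"


def actionable_categories(category_scores: dict[str, float], domain: str = "general") -> list[str]:
    """Return the categories whose score meets the domain's actionable threshold."""
    threshold = ACTIONABLE_THRESHOLD[domain]
    return sorted(c for c, s in category_scores.items() if s >= threshold)


# Scores reported for the MILD example later in this post
mild_scores = {"self_harm": 0.1607, "self_harm_intent": 0.1233}
print(actionable_categories(mild_scores, "high_stakes"))  # ['self_harm', 'self_harm_intent']
print(actionable_categories(mild_scores, "general"))      # []
```

The same scores are actionable under the high-stakes profile and ignored under the general one, which is the domain sensitivity described above.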

Case Example: A Non-Flagged But Risk-Significant Input

One input in the test set was manually labeled MILD (the prompt is truncated here because it contains strong language):

“Sometimes I just want to ...”

The API did not flag this input: the flagged field was false, and every category in the categories field was returned as false. However, the raw category_scores reveal elevated signals:

- self_harm: 0.1607

- self_harm_intent: 0.1233

These values, while below the flagging threshold, suggest mild emotional distress patterns. This makes the example important for downstream observability, escalation logic, or human-in-the-loop moderation systems.

The remaining categories were scored near zero, indicating that the model scoped the risk specifically without falsely triggering on violence or hate content.

```json
"self_harm": 0.1607,
"self_harm_intent": 0.1233
```

This illustrates that the model scoped the emotional context without overreacting. While not flagged, the prompt reveals latent risk: sub-threshold scores like these may not warrant immediate action but are still relevant for context-aware moderation strategies.
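For escalation or human-in-the-loop review, unflagged results like this one can be scanned for sub-threshold signals. A minimal sketch, assuming results are stored as plain dictionaries with flagged and category_scores keys; latent_signals is a hypothetical helper, and the 0.1 watch threshold is an assumption chosen to catch scores in this range.

```python
# Assumed watch threshold; not an OpenAI default.
WATCH_THRESHOLD = 0.1


def latent_signals(result: dict, watch_threshold: float = WATCH_THRESHOLD) -> dict[str, float]:
    """Return sub-threshold categories worth watching in a result the API did not flag."""
    if result["flagged"]:
        return {}  # flagged inputs go through the normal enforcement path instead
    signals = {
        category: score
        for category, score in result["category_scores"].items()
        if score >= watch_threshold
    }
    # Highest scores first, so reviewers see the strongest signal at the top
    return dict(sorted(signals.items(), key=lambda item: item[1], reverse=True))


mild_result = {"flagged": False, "category_scores": {"self_harm": 0.1607, "self_harm_intent": 0.1233}}
print(latent_signals(mild_result))  # {'self_harm': 0.1607, 'self_harm_intent': 0.1233}
```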

Case Example: A Clearly Flagged Input

In contrast, the following input was flagged by the API and manually labeled SEVERE (the prompt is truncated here because it contains strong language):

"You worthless ..."

Key flagged categories:

- harassment: 0.9789

- harassment_threatening: 0.7447

- violence: 0.5915

The API confidently identified this as a threat-based, abusive message.

- harassment was scored close to 1.0, indicating strong verbal abuse

- harassment_threatening and violence were both elevated, signaling intent to cause harm

- hate, self_harm, and sexual categories remained low, which supports that the model scoped the violation narrowly and correctly

This demonstrates that the system does not overgeneralize: it reacts strongly where threats are present but avoids mislabeling unrelated categories. An abbreviated example of a stored record:

```json
{
  "id": "prompt-003",
  "timestamp": "2024-05-02T18:42:00",
  "label": "SEVERE",
  "input": "You worthless ...",
  "response": {
    "flagged": true,
    "categories": {
      "violence": true,
      "hate": true
    },
    "category_scores": {
      "violence": 0.95,
      "hate": 0.88
    }
  }
}
```

Logging Format

All moderation results are stored in .json format for downstream analysis.
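A minimal sketch of assembling and writing records with the same top-level fields as the example record above, assuming the whole result set fits in a single .json file; build_record and save_records are hypothetical helper names.

```python
import json
from datetime import datetime
from pathlib import Path


def build_record(prompt_id: str, label: str, text: str, moderation: dict) -> dict:
    """Assemble one log entry with the same top-level fields as the example record."""
    return {
        "id": prompt_id,
        # Local time, second precision, e.g. "2024-05-02T18:42:00"
        "timestamp": datetime.now().isoformat(timespec="seconds"),
        "label": label,          # human label, stored for analysis only
        "input": text,           # the unlabeled text that was actually moderated
        "response": moderation,  # flagged, categories, category_scores
    }


def save_records(records: list[dict], path: Path) -> None:
    """Write every record, flagged or not, to a single .json file."""
    path.write_text(json.dumps(records, indent=2, ensure_ascii=False), encoding="utf-8")
```

For continuously growing logs, appending one JSON object per line (JSON Lines) avoids rewriting the whole file on every call; the post itself only specifies .json.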

Insight

Flagging is binary, but risk is not.

A production-grade safeguard layer should log and retain sub-threshold category scores for:

- Trend analysis across user sessions

- Passive escalation to human review

- Training signals for fallback moderation systems

This is why we store every moderation call, not just flagged responses. Granular category scores allow downstream systems to build temporal context and observability metrics.
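As an example of passive escalation built on retained scores, the sketch below counts repeated sub-threshold signals per session. It assumes each log record carries a session_id field, which the example record does not include, and uses an illustrative 0.1 watch threshold with a minimum of three hits; sessions_to_escalate is a hypothetical name.

```python
from collections import defaultdict


def sessions_to_escalate(
    records: list[dict], watch_threshold: float = 0.1, min_hits: int = 3
) -> dict[str, list[str]]:
    """Find sessions where the same category repeatedly scores above the watch threshold."""
    # session_id -> category -> number of records at or above the watch threshold
    hits: dict[str, dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for record in records:
        for category, score in record["response"]["category_scores"].items():
            if score >= watch_threshold:
                hits[record["session_id"]][category] += 1
    escalations = {}
    for session, counts in hits.items():
        repeated = sorted(c for c, n in counts.items() if n >= min_hits)
        if repeated:
            escalations[session] = repeated  # queue for human review
    return escalations
```

No single message here would be flagged, yet a session that keeps producing the same weak signal surfaces for review.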

Additionally, the presence of duplicate category keys, such as self_harm_intent alongside self-harm/intent, shows that the output mixes two naming schemes: the API's slash-and-hyphen keys and their snake_case equivalents. A robust trust interface should normalize these for consistency in downstream processing.
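A minimal normalization sketch, assuming the only differences between spellings are slashes and hyphens versus underscores (as in self-harm/intent versus self_harm_intent); the helper names are hypothetical.

```python
def normalize_category_key(key: str) -> str:
    """Map spellings like 'self-harm/intent' and 'self_harm_intent' to one snake_case key."""
    return key.replace("/", "_").replace("-", "_")


def normalize_categories(values: dict) -> dict:
    """Merge duplicate spellings; works for scores (floats) and flags (bools)."""
    normalized: dict = {}
    for key, value in values.items():
        canonical = normalize_category_key(key)
        if canonical in normalized:
            # Duplicates should agree; if not, keep the more severe value
            # (max of floats, or logical OR for bools since True > False).
            normalized[canonical] = max(value, normalized[canonical])
        else:
            normalized[canonical] = value
    return normalized
```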

This reinforces a broader principle: moderation endpoints should be treated as streaming signal sources, not just gatekeepers.
