MCP Client Agent: Architecture & Implementation, Integration with LLMs

This is a follow-up to a brief introduction to MCP (Model Context Protocol).

In this post we go deeper into the overall architecture and the MCP Client flow, and implement an MCP Client Agent.

Hopefully it also clarifies what happens when you submit a request to an MCP-powered application backed by an LLM.

There are plenty of articles and posts on how to implement MCP servers; for reference, here is an official example from the MCP website. This article focuses only on implementing MCP Client agents that can programmatically connect to MCP servers.

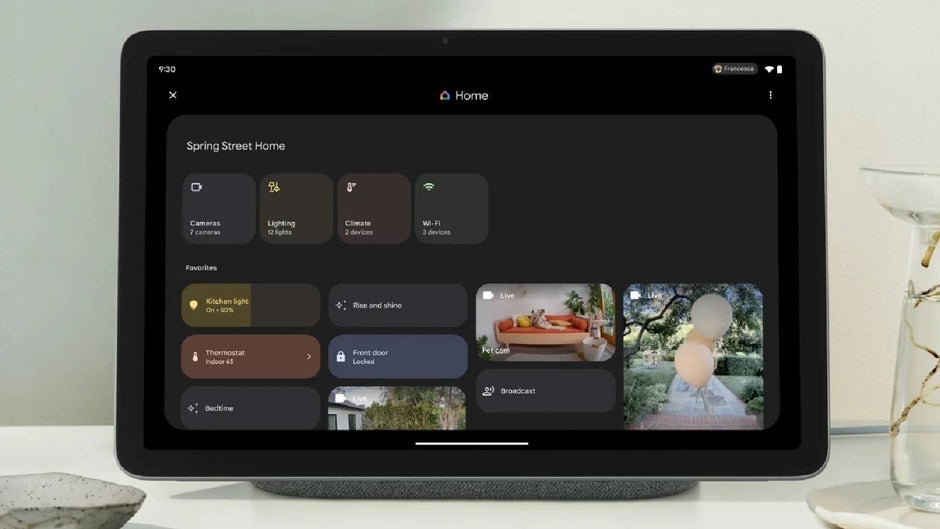

High-Level MCP Architecture

Architecture image from modelcontextprotocol.io

MCP Components

- Host: AI applications (like Claude Desktop or Cursor) that users directly interact with, serving as the main interface and system manager.

- Clients: Intermediaries that maintain connections between hosts and MCP servers, handling communication protocols and data flow.

- Servers: Components that provide specific functionalities, data sources, and tools to AI models through standardized interfaces.

Without further delay, let's get to the core of this article.

What are MCP Client Agents?

Custom MCP Clients: Programmatically invoking MCP Servers

Most of the use cases we have seen so far involve using MCP in an AI-powered IDE. Users configure MCP servers in the IDE and use its chat interface to interact with them; here the chat interface is the MCP Client/Host.

But what if you want to invoke these MCP servers programmatically from your own services? This is the real advantage of MCP: it is a standardized way to provide context and tools to your LLMs. Instead of writing integration code for every external API, resource, or file, we provide the context and tools and send them to the LLM, which decides how to use them.

MCP Client Agent Flow w/Multi MCP Servers

The diagram illustrates how MCP Custom Clients/AI agents process user requests through MCP servers. Below is a step-by-step breakdown of this interaction flow:

Step 1: User Initiates Request

- The user asks a question or submits a request through an IDE, browser, or terminal.

- The query is received by the custom MCP Client/Agent interface.

Step 2: MCP Client & Server Connection

- The MCP Client connects to the MCP Server. It can connect to multiple servers at a time, and it requests the list of tools from each of them.

- The servers send back the list of tools and functions they support.

Step 3: AI Processing

- Both the user query and the tools list are sent to the LLM (e.g., an OpenAI model).

- The LLM analyzes the request, suggests an appropriate tool and input parameters, and sends its response back to the MCP Client.

Step 4: Function Execution

- The MCP Client calls the selected function on the MCP Server with the suggested parameters.

- The MCP Server receives the function call and processes the request; which tool gets called, and on which MCP Server, depends on the request. Make sure tool names are unique across your MCP servers, otherwise the LLM may pick the wrong tool and responses become non-deterministic.

- The server may interact with databases, external APIs, or file systems to process the request.

Step 5: (Optional) Improve Response using LLM

- MCP Server returns the function execution response to MCP Client.

- (Optional) MCP Client can then forward that response to the LLM for refinement.

- LLM converts the technical response to natural language or creates a summary.

Step 6: Respond to User

- Final processed response is sent back to the user through the client interface

- User receives the answer to their original query

Custom MCP Client Implementation / Source Code

- Connect to MCP Servers: As described above, an MCP client can connect to multiple MCP servers, and we can do the same in a custom MCP Client.

- Note: To reduce hallucination and get consistent results, avoid tool-name collisions across these servers.

- MCP servers offer two transport types: STDIO (for local processes) and SSE (Server-Sent Events, for servers reached over HTTP).
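
One way to act on the collision note above is to check for duplicate tool names before anything is sent to the LLM. The following is a minimal sketch under stated assumptions: `find_tool_collisions` and the server labels are hypothetical names introduced here, not part of the MCP SDK, and the input is simply the list of tool names each server reported.

```python
from collections import defaultdict


def find_tool_collisions(tools_by_server: dict[str, list[str]]) -> dict[str, list[str]]:
    """Return {tool_name: [servers...]} for every name exposed by more than one server."""
    owners: dict[str, list[str]] = defaultdict(list)
    for server, tool_names in tools_by_server.items():
        for name in tool_names:
            owners[name].append(server)
    return {name: servers for name, servers in owners.items() if len(servers) > 1}


# Hypothetical tool listings reported by two servers
tools_by_server = {
    "stdio_server": ["get_weather", "search_docs"],
    "sse_server": ["search_docs", "create_ticket"],
}
collisions = find_tool_collisions(tools_by_server)
print(collisions)  # {'search_docs': ['stdio_server', 'sse_server']}
```

If the result is non-empty, a client could refuse to start, or prefix tool names with a server label before exposing them to the LLM; which is preferable depends on how much control you have over the servers.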

Connecting to STDIO transport

    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client

    # Method of the client class; self.exit_stack is a contextlib.AsyncExitStack
    async def connect_to_stdio_server(self, server_script_path: str):
        """Connect to an MCP stdio server"""
        is_python = server_script_path.endswith('.py')
        is_js = server_script_path.endswith('.js')
        if not (is_python or is_js):
            raise ValueError("Server script must be a .py or .js file")

        # Pick the interpreter that matches the server script
        command = "python" if is_python else "node"
        server_params = StdioServerParameters(
            command=command,
            args=[server_script_path],
            env=None
        )

        # Start the server process and keep the transport open on the exit stack
        stdio_transport = await self.exit_stack.enter_async_context(stdio_client(server_params))
        self.stdio, self.write = stdio_transport
        self.session = await self.exit_stack.enter_async_context(ClientSession(self.stdio, self.write))

        await self.session.initialize()
        print("Initialized stdio...")

Connecting to SSE transport

    from mcp import ClientSession
    from mcp.client.sse import sse_client

    # Method of the client class
    async def connect_to_sse_server(self, server_url: str):
        """Connect to an MCP server running with SSE transport"""
        # Store the context managers so they stay alive
        self._streams_context = sse_client(url=server_url)
        streams = await self._streams_context.__aenter__()

        self._session_context = ClientSession(*streams)
        self.session: ClientSession = await self._session_context.__aenter__()

        # Initialize
        await self.session.initialize()
        print("Initialized SSE...")
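
The SSE method above enters its context managers by hand, so something must eventually call `__aexit__` on them, in reverse order. The stdio method avoids that bookkeeping by using an exit stack. The sketch below illustrates how `contextlib.AsyncExitStack` behaves, using a stand-in context manager: `fake_transport` is a hypothetical helper that only records events and is not an MCP API.

```python
import asyncio
from contextlib import AsyncExitStack, asynccontextmanager

events: list[str] = []


@asynccontextmanager
async def fake_transport(name: str):
    """Stand-in for sse_client(...) or ClientSession(...); records open/close."""
    events.append(f"open {name}")
    try:
        yield name
    finally:
        events.append(f"close {name}")


async def main() -> None:
    async with AsyncExitStack() as stack:
        # Entered in order: the streams first, then the session built on them
        await stack.enter_async_context(fake_transport("streams"))
        await stack.enter_async_context(fake_transport("session"))
        events.append("work")
    # Leaving the block closes everything in reverse order


asyncio.run(main())
print(events)
# ['open streams', 'open session', 'work', 'close session', 'close streams']
```

In a long-lived client the stack would live on the instance (as `self.exit_stack` does in the stdio method) and be closed with `await self.exit_stack.aclose()` from a cleanup method.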

Get Tools and Process User request with LLM & MCP Servers

Once the servers are initialized, we can fetch the tools from all available servers and process the user query, following the steps described above.

    # get available tools from the servers
    stdio_tools = await std_server.list_tools()
    sse_tools = await sse_server.list_tools()
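
The query-processing function that follows expects two inputs the article does not build explicitly: `available_tools` in the format the LLM API accepts, and `tool_session_map`, which routes a tool name back to the server session that owns it. Below is a rough sketch of how both might be derived. `FakeTool`, `to_openai_tools`, and `build_tool_session_map` are hypothetical names; the stand-in carries the `name`, `description`, and `inputSchema` fields that MCP tool descriptions have, and the output follows the OpenAI function-calling `tools` shape.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class FakeTool:
    """Stand-in with the same fields as an MCP tool description."""
    name: str
    description: str
    inputSchema: dict[str, Any] = field(
        default_factory=lambda: {"type": "object", "properties": {}}
    )


def to_openai_tools(tools: list[Any]) -> list[dict[str, Any]]:
    """Convert MCP tool descriptions to the OpenAI `tools` format."""
    return [
        {
            "type": "function",
            "function": {
                "name": tool.name,
                "description": tool.description or "",
                "parameters": tool.inputSchema,
            },
        }
        for tool in tools
    ]


def build_tool_session_map(sessions: list[tuple[Any, list[Any]]]) -> dict[str, Any]:
    """Map each tool name to the session that owns it; reject duplicate names."""
    mapping: dict[str, Any] = {}
    for session, tools in sessions:
        for tool in tools:
            if tool.name in mapping:
                raise ValueError(f"Duplicate tool name across servers: {tool.name}")
            mapping[tool.name] = session
    return mapping


# Hypothetical tool lists; strings stand in for the real session objects
stdio_tools = [FakeTool("get_weather", "Get the forecast for a city")]
sse_tools = [FakeTool("create_ticket", "Open a support ticket")]

available_tools = to_openai_tools(stdio_tools + sse_tools)
tool_session_map = build_tool_session_map(
    [("stdio_session", stdio_tools), ("sse_session", sse_tools)]
)
print([t["function"]["name"] for t in available_tools])  # ['get_weather', 'create_ticket']
print(tool_session_map)  # {'get_weather': 'stdio_session', 'create_ticket': 'sse_session'}
```

With the MCP Python SDK, `ClientSession.list_tools()` returns a result whose `tools` attribute holds the tool descriptions. Whether `std_server` and `sse_server` above are sessions or wrappers around them is not shown in the article, so adapt the extraction accordingly.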

Process user request

    import json
    from typing import Any

    from openai import AzureOpenAI

    # Method of the client class
    async def process_user_query(self, available_tools: Any, user_query: str, tool_session_map: dict):
        """
        Process the user query and return the response.
        """
        model_name = "gpt-35-turbo"
        # Tool calling needs an API version and model deployment that support
        # the `tools` parameter; adjust both to your Azure setup
        api_version = "2022-12-01-preview"

        # Start the conversation with the user query
        self.messages = [
            {
                "role": "user",
                "content": user_query
            }
        ]

        # Initialize your LLM - e.g., Azure OpenAI client
        openai_client = AzureOpenAI(
            api_version=api_version,
            azure_endpoint="<OPENAI_ENDPOINT>",
            api_key="<API_KEY>",
        )

        # send the user query to the LLM along with the available tools from MCP Servers
        response = openai_client.chat.completions.create(
            messages=self.messages,
            model=model_name,
            tools=available_tools,
            tool_choice="auto"
        )
        llm_response = response.choices[0].message

        # append the LLM response (the user query is already in self.messages)
        self.messages.append(llm_response)

        # Process response and handle tool calls
        if llm_response.tool_calls:
            # assuming only one tool call suggested by LLM; use a for loop to go over all suggested tool_calls
            tool_call = llm_response.tool_calls[0]

            # tool call based on the LLM suggestion, routed to the session that owns the tool
            result = await tool_session_map[tool_call.function.name].call_tool(
                tool_call.function.name,
                json.loads(tool_call.function.arguments)
            )

            # append the tool result to messages
            self.messages.append({
                "role": "tool",
                "tool_call_id": tool_call.id,
                "content": result.content[0].text
            })

            # optionally send the tool result to the LLM to summarize
            final_response = openai_client.chat.completions.create(
                messages=self.messages,
                model=model_name,
                tools=available_tools,
                tool_choice="auto"
            ).choices[0].message
            return final_response.content

        # No tool call suggested: return the LLM answer as-is
        return llm_response.content
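
The function above handles only the first suggested tool call. A possible way to loop over all of them is sketched below. It is an illustration rather than the author's implementation: `run_tool_calls`, `FakeSession`, and `fake_call` are hypothetical names, and the stand-in objects only mimic the attributes used above (`id`, `function.name`, `function.arguments`, `content[0].text`).

```python
import asyncio
import json
from types import SimpleNamespace
from typing import Any


class FakeSession:
    """Stand-in for an MCP session; echoes the call instead of contacting a server."""

    def __init__(self, label: str) -> None:
        self.label = label

    async def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        text = f"{self.label}:{name}:{json.dumps(arguments, sort_keys=True)}"
        return SimpleNamespace(content=[SimpleNamespace(text=text)])


async def run_tool_calls(
    tool_calls: list[Any], tool_session_map: dict[str, Any]
) -> list[dict[str, str]]:
    """Execute every suggested tool call and return one 'tool' message per call."""
    messages = []
    for tool_call in tool_calls:
        name = tool_call.function.name
        session = tool_session_map.get(name)
        if session is None:
            # The LLM named a tool no server exposes; report it instead of crashing
            content = f"Unknown tool: {name}"
        else:
            result = await session.call_tool(name, json.loads(tool_call.function.arguments))
            # Keep only text parts; other content types are skipped in this sketch
            content = "\n".join(p.text for p in result.content if hasattr(p, "text"))
        messages.append({"role": "tool", "tool_call_id": tool_call.id, "content": content})
    return messages


def fake_call(call_id: str, name: str, arguments: str) -> Any:
    """Build an object shaped like one entry of llm_response.tool_calls."""
    return SimpleNamespace(id=call_id, function=SimpleNamespace(name=name, arguments=arguments))


calls = [
    fake_call("call_1", "get_weather", '{"city": "Oslo"}'),
    fake_call("call_2", "missing_tool", "{}"),
]
tool_messages = asyncio.run(run_tool_calls(calls, {"get_weather": FakeSession("stdio")}))
for message in tool_messages:
    print(message)
```

Each returned message can be appended to `self.messages` before the follow-up LLM call, so that every `tool_call_id` in the assistant message has a matching tool response.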

Hopefully this has provided enough guidance to get you started with implementing MCP Clients. In later posts we will learn more about hosting MCP servers for remote access using Kubernetes / Docker.

Here is sample source code with an MCP Client Agent and Server implementation.
