Small Language Models (SLMs)

Introduction

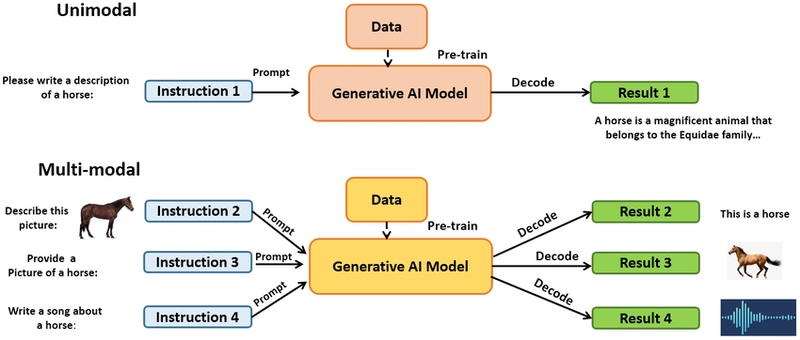

As we navigate through 2025, a significant paradigm shift is occurring in the AI landscape. While much attention has been focused on increasingly massive language models with hundreds of billions of parameters, a countercurrent has emerged: Small Language Models (SLMs). These lightweight yet powerful models are gaining tremendous traction among developers, enterprises, and researchers for their efficiency, practicality, and accessibility.

SLMs, typically defined as models with fewer than 10 billion parameters (and often less than 1 billion), are proving that bigger isn't always better when it comes to solving real-world problems. Their rise represents a maturing of the AI field, moving from raw capability demonstrations toward optimized, targeted solutions.

The Rise of SLMs vs. Large Language Models

Efficiency Advantages

Small Language Models offer compelling efficiency benefits across several dimensions:

- Memory footprint: SLMs require significantly less RAM, often running on consumer hardware with 8-16GB of memory compared to LLMs requiring 48GB+ of VRAM

- Computational requirements: Training and inference require fewer computational resources, reducing GPU/TPU demand

- Energy consumption: SLMs can reduce energy usage by 90-99% compared to frontier models, addressing growing concerns about AI's environmental impact

- Latency: Smaller models typically deliver faster response times, crucial for real-time applications

A recent benchmark by MLCommons found that leading SLMs achieved inference speeds 5-10x faster than their larger counterparts on equivalent hardware.
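The memory figures above come from simple arithmetic: weight memory is roughly the parameter count multiplied by the bytes stored per parameter. The Python sketch below makes that estimate explicit. The function name `weight_memory_gb` is our own. The estimate counts weights only and ignores activations, the KV cache, and runtime overhead, so treat it as a lower bound.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory (in GB, 1 GB = 1e9 bytes) needed to store model weights.

    Counts weights only: activations, the KV cache, and framework overhead
    add to this in practice.
    """
    bytes_total = num_params * bits_per_param / 8  # 8 bits per byte
    return bytes_total / 1e9


if __name__ == "__main__":
    # Parameter counts for models discussed in this article,
    # plus a generic 70B-parameter LLM for comparison.
    models = {
        "TinyLlama (1.1B)": 1.1e9,
        "Phi-3 Mini (3.8B)": 3.8e9,
        "70B-parameter LLM": 70e9,
    }
    for name, params in models.items():
        for bits in (16, 8, 4):
            print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

By this estimate, a 3.8B-parameter model needs about 7.6 GB for its weights at 16-bit precision and about 1.9 GB at 4-bit. A 70B-parameter model needs about 140 GB at 16-bit. This gap is why quantized SLMs fit comfortably on consumer hardware.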

Cost Considerations and ROI Analysis

The economic advantages of SLMs are compelling:

- Training costs: SLMs can be trained for thousands rather than millions of dollars

- Deployment expenses: Lower compute requirements translate to reduced cloud or on-premises infrastructure costs

- Maintenance overhead: Smaller models require less extensive monitoring and optimization

- Scalability economics: Lower per-request compute means serving costs grow more slowly as usage increases, avoiding the steep infrastructure demands of larger models

In a 2025 industry report, enterprises deploying SLMs reported an average 73% cost reduction compared to equivalent LLM implementations while maintaining 90%+ of functionality for targeted use cases.

Development Timeline and Key Breakthroughs

The evolution of SLMs has accelerated dramatically:

- 2022: Initial research showing knowledge distillation could preserve LLM capabilities in smaller models

- 2023: Introduction of Microsoft Phi-1 (1.3B parameters) demonstrating reasoning capabilities previously seen only in much larger models

- 2024: Improved training data and techniques enabling models with only a few billion parameters, such as Phi-3 Mini, to approach GPT-3.5 on many benchmarks

- 2025: Specialized domain SLMs outperforming general-purpose LLMs in specific verticals

Leading SLM Technologies in 2025

Microsoft's Phi Models

Microsoft Research has pioneered some of the most impressive SLMs:

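- Phi-3 Mini (3.8B parameters): Microsoft reports reasoning benchmark scores comparable to models roughly 10x larger, and when quantized to 4 bits it is small enough to run on a modern smartphone

- Phi-2 (2.7B parameters): An earlier model showing that curated training data can let a sub-3B model compete with much larger ones

- Phi-1.5 (1.3B parameters): Extended Phi-1's textbook-style data approach to common-sense reasoning and general language tasks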

The Phi family has been particularly notable for its textbook-quality training approach, showing that carefully curated data can be more important than model size.

Top Open-Source Alternatives

The open-source ecosystem has flourished with competitive SLMs:

- TinyLlama (1.1B parameters): Trained on 3 trillion tokens with performance rivaling models 5x larger

- Mistral 7B (7.3B parameters): A highly optimized model that, according to Mistral AI, outperforms Llama 2 13B on all evaluated benchmarks

- OLMo-1B (1B parameters): A fully open model from AI2 (the Allen Institute for AI), with weights, training data, and training code all released, and competitive performance for its size

- Gemma-2B (2B parameters): Google's contribution to open SLMs with strong performance-to-size ratio

Specialized Domain-Specific SLMs

Perhaps the most exciting development has been highly specialized SLMs:

- MedSLM (1.2B parameters): Reported to outperform GPT-4 on medical diagnostic tasks despite being a small fraction of its size

- CodeMini (800M parameters): Specialized for Python programming with performance exceeding larger general-purpose models

- LegalBert-Small (680M parameters): Fine-tuned for legal document analysis with state-of-the-art performance

- FinSLM (1.5B parameters): Optimized for financial analysis with enhanced numerical reasoning

Technical Architecture and Innovations

Several technical innovations have enabled SLMs to achieve their remarkable efficiency:

Parameter-Efficient Training Techniques

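- Mixture-of-Experts (MoE): Routing each input to a small subset of specialized sub-networks ("experts"), so only a fraction of the parameters is active for any given token

- Low-Rank Adaptation (LoRA): Freezing the pretrained weights and training small low-rank matrices whose product is added to selected weight matrices during fine-tuning

- QLoRA: Training LoRA adapters on top of a 4-bit quantized, frozen base model, enabling fine-tuning on consumer hardware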

- Sparse Attention Mechanisms: Focusing computational resources on the most relevant tokens
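Here is a minimal NumPy sketch of a LoRA-style linear layer that makes the LoRA idea concrete. It implements the forward pass only. The class name, the initialization choices, and the `r`/`alpha` defaults are illustrative assumptions, not any particular library's API. Real fine-tuning would use a framework with automatic differentiation, such as Hugging Face PEFT.

```python
import numpy as np


class LoRALinear:
    """LoRA-style linear layer (forward pass only, illustrative sketch).

    The pretrained weight W (shape d_out x d_in) stays frozen. Only the
    low-rank factors A (r x d_in) and B (d_out x r) would be trained, so the
    effective weight is W + (alpha / r) * B @ A.
    """

    def __init__(self, W: np.ndarray, r: int = 8, alpha: float = 16.0, seed: int = 0):
        rng = np.random.default_rng(seed)
        d_out, d_in = W.shape
        self.W = W                                       # frozen pretrained weights
        self.A = rng.normal(0.0, 0.01, size=(r, d_in))   # small random init
        self.B = np.zeros((d_out, r))                    # zero init: adapter starts as a no-op
        self.scale = alpha / r

    def forward(self, x: np.ndarray) -> np.ndarray:
        # x: (batch, d_in) -> (batch, d_out). The low-rank path never
        # materializes the full d_out x d_in update matrix.
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self) -> int:
        return self.A.size + self.B.size


if __name__ == "__main__":
    d = 1024
    W = np.random.default_rng(1).normal(size=(d, d))  # stand-in for pretrained weights
    layer = LoRALinear(W, r=8)
    x = np.ones((2, d))
    print("output shape:", layer.forward(x).shape)
    print("full matrix params:", W.size)
    print("LoRA trainable params:", layer.trainable_params())
```

With `d = 1024` and `r = 8`, the full matrix has 1,048,576 parameters, while the adapter trains 16,384 (about 1.6%). Initializing `B` to zero means the adapted layer starts out identical to the pretrained one, so fine-tuning begins from the base model's behavior.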

Knowledge Distillation Approaches

Knowledge distillation has been key to transferring capabilities from larger to smaller models:

- Teacher-student training: Large models guide the training of smaller ones

- Selective distillation: Focusing on transferring specific capabilities rather than general knowledge

- Progressive distillation: Multi-stage approach transferring knowledge through intermediately sized models

- Data-free distillation: Techniques requiring minimal additional data for effective knowledge transfer
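Teacher-student training is commonly implemented with the soft-target loss from Hinton et al.'s work on knowledge distillation. The student learns to match the teacher's temperature-softened output distribution while still learning from the true labels. The NumPy sketch below is minimal, and its `temperature` and `alpha` values are illustrative hyperparameters rather than recommended settings.

```python
import numpy as np


def log_softmax(logits: np.ndarray, temperature: float = 1.0) -> np.ndarray:
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # shift for numerical stability
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def distillation_loss(student_logits: np.ndarray, teacher_logits: np.ndarray,
                      labels: np.ndarray, temperature: float = 2.0,
                      alpha: float = 0.5) -> float:
    """Soft-target distillation loss averaged over a batch.

    alpha weights the KL term between temperature-softened teacher and student
    distributions; (1 - alpha) weights ordinary cross-entropy on hard labels.
    """
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student_soft = log_softmax(student_logits, temperature)
    p_teacher = np.exp(log_p_teacher)
    # KL(teacher || student), summed over classes, averaged over the batch
    kl = np.sum(p_teacher * (log_p_teacher - log_p_student_soft), axis=-1).mean()

    # Standard cross-entropy of the (unsoftened) student against the true labels
    log_p_student = log_softmax(student_logits)
    ce = -log_p_student[np.arange(len(labels)), labels].mean()

    # Scale the soft term by T^2 so its gradients stay comparable across temperatures
    return float(alpha * temperature ** 2 * kl + (1 - alpha) * ce)
```

The `temperature ** 2` factor follows the original formulation. Softening both distributions by `T` shrinks the soft-target gradients by roughly `1/T^2`, and this factor compensates for that shrinkage.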

Optimization Strategies for Small Models

Novel optimization approaches include:

- Architectural efficiency innovations: Modified transformer blocks requiring fewer computations

- Quantization advances: Models operating effectively at 4-bit precision, with 2-bit quantization an active area of research

- Pruning techniques: Removing redundant connections with minimal loss in performance

- Hardware-specific optimizations: Models designed for specific accelerators (GPU, TPU, etc.)
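The NumPy sketch below illustrates the basic ideas behind quantization and pruning. It uses symmetric per-tensor quantization to a small integer range and simple magnitude pruning. These are simplified stand-ins chosen for clarity. Production schemes such as per-channel or group-wise quantization and structured pruning are more involved, and the function names here are our own.

```python
import numpy as np


def quantize_symmetric(w: np.ndarray, bits: int = 4):
    """Symmetric per-tensor quantization of float weights to signed integer codes.

    Returns (codes, scale); codes lie in [-qmax, qmax] with qmax = 2**(bits-1) - 1.
    """
    if not 2 <= bits <= 8:
        raise ValueError("this sketch stores codes in int8, so bits must be 2..8")
    qmax = 2 ** (bits - 1) - 1                      # 7 for 4-bit, 127 for 8-bit
    max_abs = float(np.abs(w).max())
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = np.clip(np.round(w / scale), -qmax, qmax).astype(np.int8)
    return codes, scale


def dequantize(codes: np.ndarray, scale: float) -> np.ndarray:
    return codes.astype(np.float32) * scale


def magnitude_prune(w: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero out roughly the `sparsity` fraction of weights with the smallest magnitude.

    Weights tied with the threshold are also pruned, so slightly more than the
    target fraction may be removed.
    """
    k = int(sparsity * w.size)
    if k == 0:
        return w.copy()
    threshold = np.partition(np.abs(w).ravel(), k - 1)[k - 1]
    return np.where(np.abs(w) <= threshold, 0.0, w)


if __name__ == "__main__":
    w = np.random.default_rng(0).normal(size=1000)
    codes, scale = quantize_symmetric(w, bits=4)
    err = np.abs(dequantize(codes, scale) - w).max()
    print(f"4-bit max abs error: {err:.4f} (scale {scale:.4f})")
    print("fraction zero after 50% pruning:", np.mean(magnitude_prune(w, 0.5) == 0))
```

With rounding to the nearest code, the reconstruction error for each weight is at most half the scale. Fewer bits give a coarser scale and therefore a larger error. This is why aggressive low-bit formats usually need more careful schemes than this per-tensor sketch.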

Real-World Applications and Use Cases

SLMs are finding application across numerous domains:

Edge and Mobile Device Implementation

- On-device assistants: Fully local AI assistants running without cloud connectivity

- Augmented reality applications: Real-time text processing and generation for AR experiences

- Mobile productivity tools: Document summarization, email composition, and meeting notes

- Camera-based translation: Real-time translation running entirely on device

IoT and Embedded Systems Integration

- Smart home devices: Enhanced natural language understanding in resource-constrained environments

- Industrial sensors: Adding contextual intelligence to industrial IoT systems

- Autonomous vehicles: Local language processing for command interpretation and status reporting

- Wearable technology: Health insights and recommendations from local data processing

Enterprise-Specific Customized Models

- Customer service automation: Company-specific models trained on proprietary knowledge bases

- Internal documentation analysis: Extracting insights from corporate document repositories

- Compliance monitoring: Specialized models for regulatory compliance in specific industries

- Sales enablement tools: Contextual product information and customized communication

Offline Capabilities and Privacy Advantages

The ability to run models completely offline offers significant benefits:

- Sensitive data processing: Handling confidential information without external transmission

- Disconnected operations: Functioning in environments with limited or no connectivity

- Regulatory compliance: Meeting strict data localization and privacy requirements

- Reduced latency: Eliminating network delays for time-sensitive applications

Implementation Guide for Developers

Choosing the Right SLM for Your Use Case

Selection criteria should include:

- Task alignment: Matching model capabilities to specific requirements

- Performance thresholds: Identifying minimum acceptable performance metrics

- Resource constraints: Considering available computational resources

- Fine-tuning potential: Assessing adaptability to domain-specific data

- Community support: Evaluating documentation and community resources

Fine-Tuning and Customization Approaches

Effective customization strategies include:

- Parameter-efficient fine-tuning: Using LoRA, QLoRA, or adapter techniques

- Few-shot learning optimization: Crafting effective prompts and examples

- Synthetic data generation: Creating targeted training examples

- Continuous learning pipelines: Implementing feedback loops for ongoing improvement

- Ensemble approaches: Combining multiple specialized SLMs for broader capabilities

Deployment Best Practices

For successful deployment, consider:

- Model quantization: Reducing numerical precision for efficiency with minimal loss of quality

- Caching strategies: Optimizing for repeated or similar queries

- Request batching: Processing multiple inputs simultaneously

- Monitoring and observability: Tracking performance and drift over time

- A/B testing frameworks: Comparing model versions in production

- Graceful degradation: Handling edge cases and unexpected inputs
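The sketch below shows how caching and request batching can work together in front of a model. Cached prompts are answered directly. Only unique, uncached prompts are sent to the model, in fixed-size batches. The `model_fn` callable is a hypothetical stand-in for whatever batched SLM inference call you use. The cache is exact-match and unbounded for simplicity, whereas a real deployment would bound it (for example with LRU eviction) and might add semantic matching for "similar" queries.

```python
from typing import Callable, Dict, List


class CachedBatcher:
    """Exact-match response cache in front of a batched model call (sketch).

    `model_fn` is a hypothetical callable that takes a list of prompts and
    returns one output per prompt, in the same order.
    """

    def __init__(self, model_fn: Callable[[List[str]], List[str]], batch_size: int = 8):
        self.model_fn = model_fn
        self.batch_size = batch_size
        self.cache: Dict[str, str] = {}
        self.model_calls = 0  # number of batched calls actually sent to the model

    def generate(self, prompts: List[str]) -> List[str]:
        # Deduplicate (preserving order) and keep only prompts not yet cached.
        misses = [p for p in dict.fromkeys(prompts) if p not in self.cache]
        for start in range(0, len(misses), self.batch_size):
            chunk = misses[start:start + self.batch_size]
            for prompt, output in zip(chunk, self.model_fn(chunk)):
                self.cache[prompt] = output
            self.model_calls += 1
        # Every prompt is now cached; answer in the caller's original order.
        return [self.cache[p] for p in prompts]


if __name__ == "__main__":
    def fake_model(batch: List[str]) -> List[str]:
        # Hypothetical stand-in for real SLM inference.
        return [f"summary of: {p}" for p in batch]

    batcher = CachedBatcher(fake_model, batch_size=2)
    print(batcher.generate(["doc A", "doc B", "doc A", "doc C"]))
    print("model calls:", batcher.model_calls)
```

In the usage example, four requests produce only two model calls. The duplicate "doc A" is served from the cache, and the three unique prompts fit into two batches of size two.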

Future Outlook

The Evolving Balance Between LLMs and SLMs

The future AI landscape will likely feature:

- Tiered model deployment: Organizations maintaining both large and small models for different needs

- Specialized middleware: Systems routing queries to appropriate model sizes

- Hybrid architectures: Small models handling routine tasks with escalation to larger models when needed

- Distributed processing: Networks of SLMs collaborating on complex tasks
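A hybrid architecture with escalation can be as simple as a confidence-gated router. The small model answers first, and the request is forwarded to a larger model only when the small model's confidence falls below a threshold. In the sketch below, both model callables are hypothetical, and so is the confidence signal, which in practice might come from token probabilities or a separate classifier. The `threshold` value is an assumed tuning knob.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Each model callable returns (answer, confidence in [0, 1]); both are hypothetical.
ModelFn = Callable[[str], Tuple[str, float]]


@dataclass
class Route:
    answer: str
    model: str  # "small" or "large"


def route_with_escalation(prompt: str, small: ModelFn, large: ModelFn,
                          threshold: float = 0.7) -> Route:
    """Try the small model first; escalate to the large model on low confidence."""
    answer, confidence = small(prompt)
    if confidence >= threshold:
        return Route(answer, "small")
    answer, _ = large(prompt)
    return Route(answer, "large")


if __name__ == "__main__":
    # Toy stand-ins: the "small model" is only confident on short prompts.
    def small_model(p: str) -> Tuple[str, float]:
        return ("short answer", 0.9 if len(p) < 20 else 0.3)

    def large_model(p: str) -> Tuple[str, float]:
        return ("detailed answer", 0.95)

    for prompt in ["What is 2+2?", "Explain the tax implications of this merger"]:
        print(prompt, "->", route_with_escalation(prompt, small_model, large_model))
```

The threshold controls the tradeoff. Raising it sends more traffic to the large model for quality, and lowering it keeps more requests on the cheaper small model.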

Research Directions and Upcoming Innovations

Promising research areas include:

- Compositional models: Combining multiple specialized small models for broader capabilities

- Dynamic architecture switching: Models adapting their structure based on input complexity

- Hardware co-design: Models explicitly designed for new accelerator architectures

- Multimodal small models: Integrating text, image, and audio in resource-efficient frameworks

- Neural-symbolic integration: Combining neural approaches with symbolic reasoning for enhanced efficiency

Conclusion

Small Language Models represent not just a trend but a fundamental rethinking of how AI can be deployed efficiently at scale. Their rise reflects a maturing industry moving beyond raw capability demonstrations toward practical, sustainable, and accessible implementations.

For developers, SLMs offer an opportunity to integrate sophisticated language capabilities into applications that previously couldn't support them due to resource constraints. They enable new paradigms of privacy-preserving, edge-based AI that can function independently of cloud services.

As the ecosystem continues to evolve, we can expect further innovations that push the boundaries of what's possible with limited parameters, ultimately making advanced AI capabilities more ubiquitous and democratized.