K-Means Clustering Made Simple: Grouping Data the Smart Way

K-Means is an easy yet powerful algorithm that organizes data into groups based on similarity. It's widely used in customer segmentation, image compression, and more—perfect for making sense of messy data. Before we dive into how K-Means works, let’s first understand what clustering actually means. Clustering referes to the task of identifying similar instances in a dataset and assigning them to unlabelled groups called clusters. and to let you know it is an unsupervised machine learning task It is widely applicable in areas like -Customer segmentation : group customers based on their purchase and activity in an e-commerce site -Data analysis: when analyzing a large dataset. Think of performing inferential statistics. You can perform a clustering algorithm to see variety groups in the data set and that will play an important role in your sampling algorithm. -As a dimensionality reduction technique : This can be achieved when you take a dataset with alot of features and you group datapoints into clusters such that the number of clusters is less than the number of features in the dataset. and then work with these datapoints based on the cluster they belong to and not by their features again. for example detecting a non-plant object from a picture . you can group all the different plants to one cluster and all the non plant object to another cluster thus leaving you with just 2 features which are plant and non-plant For anomaly detection : when an instance has low affinity to all the clusters( does not belong to any of the clusters ). That is to say when an instance(a data point) exhibit characteristic that is not common in any cluster Image segmentation: cluster pixels according to their colors the replace each pixel with the mean color of it’s cluster .that will reduce the number of colors in the image (used for object detection ) Search Engines : first apply clustering algorithm to all the images in the database. When a user searches an image send it with its cluster members What is kmeans clustering This algorithm was first proposed by Stuart Lloyd at Bell Lab in 1957 as a technique for pulse code modulation. It Was later published virtually by Edward W. Forgy . that is why it is also called Lloyd-Forgy How does this algorithm works for a given dataset to perform kmeans clustering on Select randomly the number of clusters k Randomly assign k points as centroids Assign datapoints to these centroids to form clusters Keep updating the centroids till they stop moving ( converge…) WRONG CENTROID INITIALIZATION WILL CAUSE YOUR ALGORITHM TO CONVERGE TO A NON-OPTIMAL SOLUTION and that is why you must learn how to correctly initialise centroids centroid of a cluster is that** datapoint(instance)** that can be used to represent all the datapoint(instances) of that cluster How to effectively initialize centroids Assuming you ran the algorithm earlier and happens to know approximately where the centroids should be. Then you can set the init hyper parameter to a Numpy array containing the list of centroids and set n_init = 1 import numpy as np #still need to import KMeans from sklearn good_init_array = np.array([[],[],[],[],[]]) kmeans = KMeans(n_clusters=5, init = good_init_array, n_init=1) #n_init = 1 means the algorithm will run only once Another solution is to run the algorithm multiple times with different randomized solutions and take the best solution The number of randomized inititalization is controlled by the n_init hyper parameter . By default n_init=10. This means that the whole algorithm runs 10 times when you call the .fit() function and then scikit-learn will keep the best solution. But how does scikit-learn know the best solution :) ? it uses a performance metric called model's inertia model's inertia It is the mean squared distance between each instance and its closest centroid The kMeans class runs the algorithm n_init times and keeps the model with the lowest inertia *how to get the model's inertia * Kmeans.inertia_ Kmeans.score() # kmeans++ In 2006 David Arthur and Sergei Vassilvitskii. In their paper proposed a smarter initialization step that tend to select centroids that are distant from one another and this improvement makes the K-Means algorithm much less likely to converge to a suboptimal solution. They showed that even though this method requires an additional steps for smarter initialization. It is worth it because it makes it possible to drastically reduce the number of the algorithm needs to run to find the optimal solution. kmeans ++ initialization algorithm Take one centroid c(1)\text{c}^\text{(1)}c(1) , chosen uniformly at random from the dataset. Take a new centroid c(i)\text{c}^\text{(i)}c(i) , choosing an instance x(i)\text{x}^\text{(i)}x(i) with probability D(x(i))2∑j=1mD(x(j))2 \frac{D(x^\text{(i)})^2}{\sum_{j=1}^{m} D(x^\text{(j)})^2} ∑j=1mD(x(j))2D(

K-Means is an easy yet powerful algorithm that organizes data into groups based on similarity. It's widely used in customer segmentation, image compression, and more—perfect for making sense of messy data.

Before we dive into how K-Means works, let’s first understand what clustering actually means.

Clustering referes to the task of identifying similar instances in a dataset and assigning them to unlabelled groups called clusters.

and to let you know it is an unsupervised machine learning task

It is widely applicable in areas like

-Customer segmentation : group customers based on their purchase and activity in an e-commerce site

-Data analysis: when analyzing a large dataset. Think of performing inferential statistics. You can perform a clustering algorithm to see variety groups in the data set and that will play an important role in your sampling algorithm.

-As a dimensionality reduction technique : This can be achieved when you take a dataset with alot of features and you group datapoints into clusters such that the number of clusters is less than the number of features in the dataset. and then work with these datapoints based on the cluster they belong to and not by their features again. for example detecting a non-plant object from a picture . you can group all the different plants to one cluster and all the non plant object to another cluster thus leaving you with just 2 features which are plant and non-plant

For anomaly detection : when an instance has low affinity to all the clusters( does not belong to any of the clusters ). That is to say when an instance(a data point) exhibit characteristic that is not common in any cluster

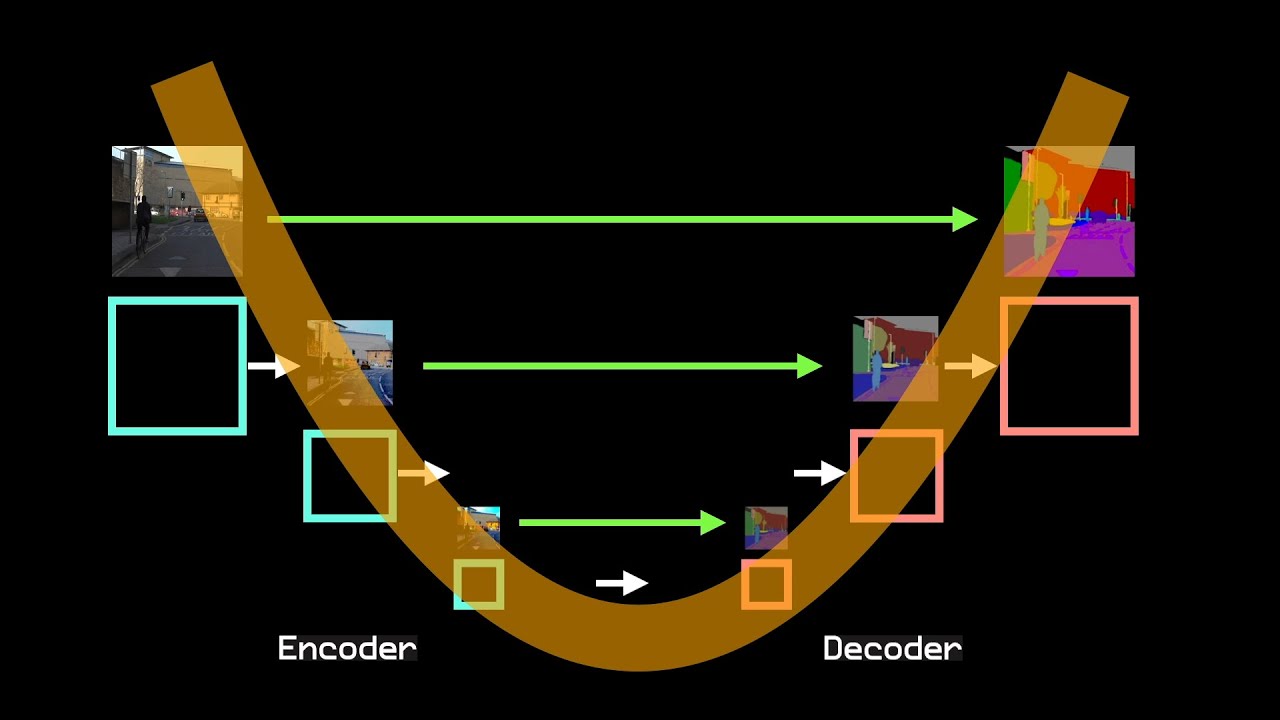

Image segmentation: cluster pixels according to their colors the replace each pixel with the mean color of it’s cluster .that will reduce the number of colors in the image (used for object detection )

Search Engines : first apply clustering algorithm to all the images in the database. When a user searches an image send it with its cluster members

What is kmeans clustering

This algorithm was first proposed by Stuart Lloyd at Bell Lab in 1957 as a technique for pulse code modulation. It Was later published virtually by Edward W. Forgy . that is why it is also called Lloyd-Forgy

How does this algorithm works

for a given dataset to perform kmeans clustering on

Select randomly the number of clusters k

Randomly assign k points as centroids

Assign datapoints to these centroids to form clusters

Keep updating the centroids till they stop moving ( converge…)

WRONG CENTROID INITIALIZATION WILL CAUSE YOUR ALGORITHM TO CONVERGE TO A NON-OPTIMAL SOLUTION

and that is why you must learn how to correctly initialise centroidscentroid of a cluster is that** datapoint(instance)** that can be used to represent all the datapoint(instances) of that cluster

How to effectively initialize centroids

Assuming you ran the algorithm earlier and happens to know approximately where the centroids should be. Then you can set the init hyper parameter to a Numpy array containing the list of centroids and set n_init = 1

import numpy as np

#still need to import KMeans from sklearn

good_init_array = np.array([[],[],[],[],[]])

kmeans = KMeans(n_clusters=5, init = good_init_array, n_init=1)

#n_init = 1 means the algorithm will run only once

Another solution is to run the algorithm multiple times with different randomized solutions and take the best solution

The number of randomized inititalization is controlled by the n_init hyper parameter .

By default n_init=10. This means that the whole algorithm runs 10 times when you call the .fit() function and then scikit-learn will keep the best solution.

But how does scikit-learn know the best solution :) ?

it uses a performance metric called model's inertia

model's inertia

It is the mean squared distance between each instance and its closest centroid

The kMeans class runs the algorithm n_init times and keeps the model with the lowest inertia

*how to get the model's inertia *

Kmeans.inertia_

Kmeans.score() #

kmeans++

In 2006 David Arthur and Sergei Vassilvitskii. In their paper proposed a smarter initialization step that tend to select centroids that are distant from one another and this improvement makes the K-Means algorithm much less likely to converge to a suboptimal solution.

They showed that even though this method requires an additional steps for smarter initialization. It is worth it because it makes it possible to drastically reduce the number of the algorithm needs to run to find the optimal solution.

kmeans ++ initialization algorithm

Take one centroid c(1)\text{c}^\text{(1)}c(1) , chosen uniformly at random from the dataset.

-

Take a new centroid c(i)\text{c}^\text{(i)}c(i) , choosing an instance x(i)\text{x}^\text{(i)}x(i) with probability

D(x(i))2∑j=1mD(x(j))2 \frac{D(x^\text{(i)})^2}{\sum_{j=1}^{m} D(x^\text{(j)})^2} ∑j=1mD(x(j))2D(x(i))2

where,

x(i)x^{(i)}x(i)

: The

ithi^\text{th}ith

data point in your dataset.

D(x(i))D(x^{(i)})D(x(i))

: The shortest distance from

x(i)x^{(i)}x(i)

to the nearest already chosen cluster center.

D(x(i))2D(x^{(i)})^2D(x(i))2

: The squared distance, giving higher weight to points farther away.

∑j=1mD(x(j))2\sum_{j=1}^{m} D(x^{(j)})^2∑j=1mD(x(j))2

: The sum of all squared distances from each point

x(j)x^{(j)}x(j)

to its nearest cluster center.

-This algorithm ensures that any instances farthest from from the centroid is likely to me a centroid

- Repeat till all the k –clusters have been found

Accelerated kmeans clustering

This one was produced in 2003 by Charles Elkan.

It considerably accelerated the algorithm by avoiding many unnecessary calculations .

Elkan achieved this by exploiting the triangle of inequalities(i.e that a straight line is always the shortest path between 2 points)

And by keeping track of upper and lower bounds for distances between instances and centroids.

This is the algorithm the k means class uses by default.

Mini-batch kmeans clustering

This algorithm was proposed in a 2010 paper by David Scully . Instead of using the whole dataset for each iteration ,the algorithm is capble of using mini-batches moving the centroid just slightly after each iteration .

This speeds up the algorithm typically by a factor of three or four and makes it possible to cluster huge datasets that do not fit in memory. Scikit-Learn implements this algorithm in the MiniBatchKMeans class. You can just use this class like the KMeans class

#import

from sklearn.cluster import MiniBatchKMeans

--

#this is how it is used

minibatch_kmeans = MiniBatchKMeans(n_clusters=5)

minibatch_kmeans.fit(X) # X is our dataset

Although the Mini-batch K-Means algorithm is much faster than the regular KMeans algorithm, its inertia is generally slightly worse, especially as the number of clusters increases

How to find the optimal number of clusters

this is when you will now know your elbow is not useless

Generally it is not always easy to get the number of cluster for your algorithm. And setting a wrong value of k will lead to bad results.

Now what if we just take the k value with the smallest model’s inertia

This will not work because the value of inertia decreases as the value of k increases meaning that even when you exceed the optimum value of k the model’s inertia will still keep decreasing till every data point becomes a clusters on its own and at that point models inertia = 0

But what actually happens is that as you increase k . the models inertia decreases at very hight rate till you reach the optimum k value from there now the model’s inertia will now be decreasing at a very low rate and this might split perfect clusters for no good reason hence the inertia is not a good performance metric.

Since inertia have failed, a more precise but computationally expensive approach is to use the **silhouette score **which is the mean silhouette coefficient over all instances.

How to calculate the silhouette score

remember the silhouette score is the mean(average) silhouette coefficient over all instances.

so for each instance

Calculate the mean distance from that instance to its cluster members and assign this value to a variable a

Get the closest neighbor cluster

Calculate the mean distance to all the instances of this neighbor cluster and assign to b

Then silhouette score = (b-a)max(a,b)\frac{\text{(b-a)}}{\text{max(a,b)}}max(a,b)(b-a)

The silhouette coefficient vary between -1 and 1

value approaching 1 means instance is in the correct cluster

value approaching 0 means instance is in cluster boundary

value approaching -1 means instance is in the wrong cluster

To compute the silhouette score in scikit-learn is very easy

#import

from sklearn.metrics import silhouette_score

#use

silhouette_score(X, kmeans.labels)

Limitations of K-Means Clustering

While K-Means is simple and widely used, it comes with several important limitations:

1. Requires Predefined Number of Clusters (k)

You must specify the number of clusters in advance, which is often unknown. Choosing the wrong value can lead to poor clustering results.

2. Assumes Spherical, Evenly Sized Clusters

K-Means assumes that all clusters are circular and similar in size. It struggles with:

- Non-spherical (e.g., elongated or irregular) clusters

- Clusters of different densities

- Unevenly sized clusters

3. Sensitive to Initialization

The initial placement of centroids greatly affects the final output. Bad initialization can cause the algorithm to converge to a suboptimal solution.

![[The AI Show Episode 145]: OpenAI Releases o3 and o4-mini, AI Is Causing “Quiet Layoffs,” Executive Order on Youth AI Education & GPT-4o’s Controversial Update](https://www.marketingaiinstitute.com/hubfs/ep%20145%20cover.png)

_NicoElNino_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![Standalone Meta AI App Released for iPhone [Download]](https://www.iclarified.com/images/news/97157/97157/97157-640.jpg)