Introducing Claude Crew: Enhancing Claude Desktop's Coding Agent Capabilities


I've developed a tool called "Claude Crew" that extends Claude Desktop's capabilities as an autonomous coding agent. I'd like to introduce this tool and share some insights gained during its development.

Repository:

https://github.com/d-kimuson/claude-crew

Claude Crew is designed with inspiration from OpenHands but specifically for Claude Desktop. It leverages Model Context Protocol (MCP) and custom instructions to maximize Claude Desktop's coding capabilities.

TL;DR

- Created Claude Crew, an autonomous coding agent for Claude Desktop similar to OpenHands

- Provides RAG functionality and project-optimized MCP to enhance Claude's performance

What is a Claude Desktop-Based Agent?

Recently, LLM-based coding tools have become widespread, with solutions like Cline, Cursor, Devin, and others being actively used by developers. You may have tried some of these or even incorporated them into your workflow.

Claude Desktop comes equipped with:

- Custom instructions setting capabilities

- A Model Context Protocol (MCP) client

By configuring MCP with file operations (through the official filesystem implementation) and third-party or self-built shell access, and setting custom instructions to enable agent behavior, Claude Desktop can function as a coding agent on its own.

While these existing mechanisms make it possible to create a coding agent based on Claude Desktop, basic MCP integration alone has limitations when it comes to understanding context in large projects and efficiently using tokens. Claude Crew provides a toolset to address these challenges.

Differences from Editor-Integrated Coding Assistants

AI coding assistants like Cline, Cursor, and Windsurf are designed for human collaboration. They follow an interactive workflow where humans review and help modify code in real-time while the agent is making edits.

In contrast, Claude Crew aims for a more autonomous workflow based on the concept of "create XXX" tasks, where AI completes tasks autonomously from start to finish.

In essence, Claude Crew resembles implementing OpenHands on Claude Desktop.

It's worth noting that higher autonomy isn't always better—collaborative and autonomous approaches have clear trade-offs:

| Collaborative (Cline/Cursor) | Autonomous (Claude Crew) |
|---|---|
| Better at difficult or poorly articulated problems | Only handles well-defined, simpler tasks |
| Human judgment can redirect to solve harder problems | Removes human bottlenecks for parallel processing |
| Requires continuous feedback | Only needs final result verification |
| Requires engineering feedback | Works better with feedback from non-technical stakeholders |

In practice, both approaches complement each other.

In my own workflow, once Claude Crew became usable, I would:

- Delegate simpler feature implementations to Claude Crew in parallel

- Focus my attention on more complex problems using Cursor

Problems Claude Crew Solves

While basic agent functionality can be achieved by simply connecting Claude Desktop with MCP, there were several challenges to overcome for practical use:

- Context Understanding Limitations - Understanding related code in large projects is crucial but difficult

- Token Restrictions - Claude Desktop offers unlimited usage with a subscription, but has relatively strict character limits, requiring efficient context window usage

- Feature Gaps - Compared to editor-integrated tools like Cursor, Claude Desktop lacks feedback mechanisms for LLMs, which would require significant implementation work to match

Claude Crew addresses these challenges comprehensively:

- Builds local RAG for your codebase to effectively supplement context understanding

- Provides effective LLM feedback reflecting your development project information

  - Runs unit tests and static analysis automatically when files are modified, and feeds the results back to minimize trial and error and produce correct code

- Offers an all-in-one solution with features that enhance coding performance but would be difficult to set up individually:

  - Memory banks, TypeScript Compiler API-based identifier implementation search, "think" tool for improved coding performance, etc.

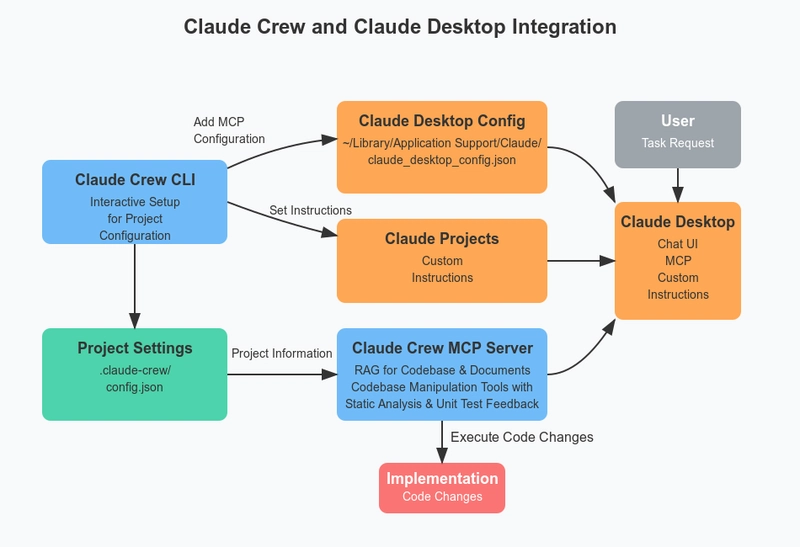

How Claude Crew Works

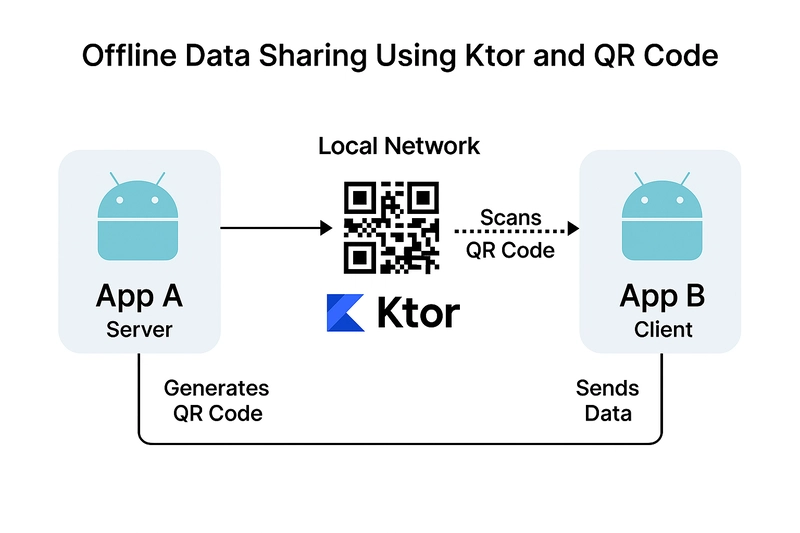

Claude Crew provides both an MCP server for Claude Desktop integration and a CLI; together they enhance Claude Desktop's functionality as a coding agent.

In addition to Claude Desktop, you'll need:

- OpenAI API key for running local RAG (optional but recommended)

- Docker for running PostgreSQL for RAG searches

The CLI offers interactive setup that outputs structured configuration for your project:

    $ cd /path/to/your-project
    $ npx claude-crew@latest setup

This configuration is passed during MCP startup, allowing MCP to operate according to your project structure.

Configuration example:

    {
      "mcpServers": {
        "claude-crew-claude-crew": {
          "command": "npx",
          "args": [
            "-y",
            "claude-crew@latest",
            "serve-mcp",
            "/path/to/.claude-crew/config.json"
          ]
        }
      }
    }

Additionally, it creates:

- .claude-crew/instruction.md

- .claude-crew/memory-bank.md

Setting the contents of .claude-crew/instruction.md as the custom instructions of a Claude Project enables the coding agent functionality.

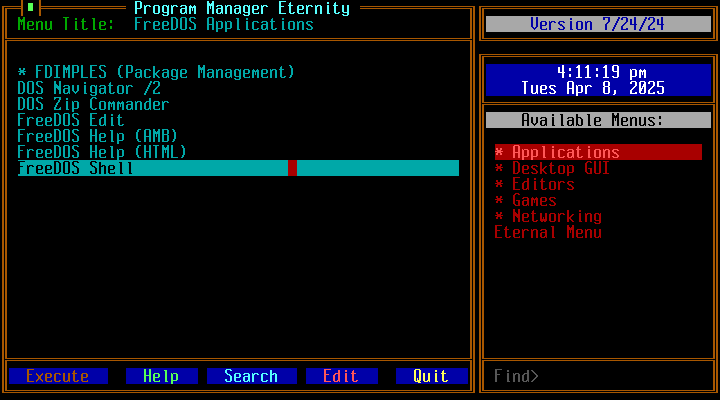
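If you maintain the Claude Desktop configuration by hand, the entry from the configuration example above has to be merged with whatever MCP servers are already registered. The following is a minimal sketch of such a merge, not part of Claude Crew itself: the type names, the `withClaudeCrewServer` helper, and the pre-existing `filesystem` entry are assumptions made for illustration.

```typescript
// Hypothetical shapes; only the fields shown in the configuration example are modeled.
type McpServerEntry = { command: string; args: string[] }
type DesktopConfig = { mcpServers?: Record<string, McpServerEntry> }

// Returns a new config with the Claude Crew entry added, leaving other servers untouched.
function withClaudeCrewServer(
  config: DesktopConfig,
  serverName: string,
  crewConfigPath: string,
): DesktopConfig {
  const entry: McpServerEntry = {
    command: "npx",
    args: ["-y", "claude-crew@latest", "serve-mcp", crewConfigPath],
  }
  return {
    ...config,
    mcpServers: { ...(config.mcpServers ?? {}), [serverName]: entry },
  }
}

// Illustrative existing config with one unrelated (made-up) server entry.
const existingConfig: DesktopConfig = {
  mcpServers: { filesystem: { command: "npx", args: ["-y", "some-other-server"] } },
}
const mergedConfig = withClaudeCrewServer(
  existingConfig,
  "claude-crew-claude-crew",
  "/path/to/.claude-crew/config.json",
)
console.log(JSON.stringify(mergedConfig, null, 2))
```

The helper copies rather than mutates, so the original object can still be written back unchanged if the merge turns out to be unwanted.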

Running a Real Task

Let's use Claude Crew to add a feature to Claude Crew itself. In this case, we'll add a "keep branch up-to-date" feature.

Since there is no mechanism to keep the branch up-to-date, please implement one.

- Allow setting git's default branch when configuring via CLI

- During prepare, if there are no local changes and we're on the default branch, do git pull --rebase origin [default branch]

- Update the README to reflect the changes

- After verifying the functionality, commit with a message in English

Let's begin.

Once a task begins, the custom instruction invokes the "prepare" tool, which provides the LLM with:

- Highly relevant source code

- Relevant documentation

- Memory bank contents

- Project overview and tech stack information parsed from configuration and package.json

Using this context, the agent looks up any additional information it needs and then carries out the coding task.
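To make the "highly relevant source code" step more concrete, retrieval of this kind can be pictured as ranking embedded code chunks by cosine similarity against the embedded task description. This is only an illustrative sketch: Claude Crew runs its search in PostgreSQL, and the `Chunk` type, the file paths, and the toy three-dimensional vectors below are assumptions, not taken from the project.

```typescript
// Hypothetical chunk shape; real embeddings have hundreds or thousands of dimensions.
type Chunk = { path: string; embedding: number[] }

// Cosine similarity: dot product divided by the product of the vector lengths.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("dimension mismatch")
  let dot = 0
  let normA = 0
  let normB = 0
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i]
    normA += a[i] * a[i]
    normB += b[i] * b[i]
  }
  if (normA === 0 || normB === 0) return 0
  return dot / (Math.sqrt(normA) * Math.sqrt(normB))
}

// Scores every chunk against the query and keeps the k best matches.
function topK(query: number[], chunks: Chunk[], k: number): Chunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: cosineSimilarity(query, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((scored) => scored.chunk)
}

// Made-up index entries for illustration.
const indexedChunks: Chunk[] = [
  { path: "docs/license.md", embedding: [0.0, 0.1, 0.9] },
  { path: "src/cli/setup.ts", embedding: [0.6, 0.7, 0.1] },
  { path: "src/git/pull.ts", embedding: [0.9, 0.1, 0.0] },
]
const relevantPaths = topK([1, 0, 0], indexedChunks, 2).map((c) => c.path)
console.log(relevantPaths)
```

Handing the model only the top few chunks is what keeps the context small compared with letting it read files one by one.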

The claude-crew-write-file tool is used to update files, automatically:

- Returning type-checking results

- Returning ESLint results

- Detecting and running relevant tests and returning results

We want the output to pass tests and linting, but exposing these checks as separate MCP tools would add extra round trips between Claude and the server, so they're built into the file-writing tool for efficiency.
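The idea of bundling checks into the write step can be sketched as follows. This is not Claude Crew's actual code: the `Check` type, the `writeFileWithFeedback` function, and the two fake checks are hypothetical stand-ins, and the file write is injected so the example runs without touching the disk.

```typescript
// Hypothetical types: a check inspects the written file and reports problems as text.
type CheckResult = { check: string; ok: boolean; output: string }
type Check = (path: string, content: string) => CheckResult

// Writes the file, then runs every check and returns all results in one response,
// so the model gets feedback without issuing separate tool calls.
function writeFileWithFeedback(
  path: string,
  content: string,
  write: (path: string, content: string) => void,
  checks: Check[],
): { written: string; results: CheckResult[]; allPassed: boolean } {
  write(path, content)
  const results = checks.map((check) => check(path, content))
  return { written: path, results, allPassed: results.every((r) => r.ok) }
}

// Stand-ins for a type checker and a linter; real ones would run tsc and ESLint.
const fakeTypeCheck: Check = (_path, content) => {
  const bad = /:\s*any\b/.test(content)
  return { check: "typecheck", ok: !bad, output: bad ? "explicit any found" : "" }
}
const fakeLint: Check = (_path, content) => {
  const bad = /\bvar\s/.test(content)
  return { check: "lint", ok: !bad, output: bad ? "use let/const instead of var" : "" }
}

// In-memory "disk" so the sketch has no side effects.
const writtenFiles: Record<string, string> = {}
const toolResponse = writeFileWithFeedback(
  "src/example.ts",
  "var x: number = 1",
  (p, c) => { writtenFiles[p] = c },
  [fakeTypeCheck, fakeLint],
)
console.log(JSON.stringify(toolResponse, null, 2))
```

Because the failing lint result comes back in the same response as the write, the model can fix the problem in its very next step.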

When implementation is complete, the custom instruction calls the claude-crew-check-all tool to verify everything works correctly and completes the task.

This process allows features to be implemented without human intervention.

Lessons and Challenges

Here are some lessons learned and remaining challenges:

MCP Inspector is Useful for Development

MCP Inspector is a valuable tool for debugging. It lets you test tools as if you were the LLM.

Capturing stdout is Essential for Stability

MCP uses standard input/output for communication, so using console.log can cause MCP client errors due to invalid messages.

I tried using a custom logger to avoid standard output during MCP usage, but some libraries inevitably needed it, so I overrode process.stdout:

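    // eslint-disable-next-line @typescript-eslint/unbound-method -- safe: the method is assigned back to the same object, so `this` is unchanged
    const originalWrite = process.stdout.write
    // Temporarily redirect stdout writes to the logger so they cannot corrupt MCP messages
    process.stdout.write = (content) => {
      myLogger.info(content.toString())
      return true
    }
    try {
      // Process that outputs to stdout
    } finally {
      // Always restore the original writer, even if the wrapped code throws
      process.stdout.write = originalWrite
    }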

However, permanently overriding process.stdout.write would break MCP itself, so this approach must be used selectively. A cleaner solution would be preferable.
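One way to keep the override selective is to wrap it in a helper that scopes the redirect to a single callback and always restores the original writer. The sketch below is an assumption about how this could be packaged, not the code used in Claude Crew; `withRedirectedWrite` and the `Writable` type are hypothetical names, and a test double stands in for `process.stdout` so the example is self-contained.

```typescript
// Minimal structural type so the helper works with process.stdout or a test double.
type Writable = { write: (chunk: string) => boolean }

// Runs `fn` with `stream.write` redirected to `sink`, then restores the original
// writer even if `fn` throws.
function withRedirectedWrite<T>(
  stream: Writable,
  sink: (text: string) => void,
  fn: () => T,
): T {
  const originalWrite = stream.write
  stream.write = (chunk) => {
    sink(String(chunk))
    return true
  }
  try {
    return fn()
  } finally {
    stream.write = originalWrite
  }
}

// Test double standing in for process.stdout.
const protocolOutput: string[] = []
const fakeStdout: Writable = {
  write: (chunk) => {
    protocolOutput.push(chunk)
    return true
  },
}
const loggedLines: string[] = []

const wrappedResult = withRedirectedWrite(
  fakeStdout,
  (text) => { loggedLines.push(text) },
  () => {
    fakeStdout.write("noisy library output") // captured by the sink
    return 42
  },
)
fakeStdout.write('{"jsonrpc":"2.0"}') // reaches the real writer again
```

Note that this version only covers synchronous callbacks: with an async `fn`, the writer would be restored before the returned promise settles, so an awaited variant would be needed.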

Long Tasks are Difficult with MCP

Initially, I wanted the "prepare" tool to handle database migrations and re-indexing changed code, but debugging with MCP Inspector revealed timeout issues.

As this article explains, MCP Inspector times out after 10 seconds, while Claude Desktop allows 60 seconds. The client has the final say.

Since the server can't request longer wait times for heavy tasks like "prepare," design must account for these constraints on long-running tasks.
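One possible way to design around a client-side timeout is to split heavy work into small steps, run as many as fit into a time budget, and report the rest so that a later call can continue. The following is a hypothetical sketch of that pattern, not a description of how Claude Crew handles it; the `Step` type, the `runWithinBudget` function, and the step labels are made up, and the clock is injected so the demo is deterministic.

```typescript
// A unit of work small enough to finish well inside the client timeout.
type Step = { label: string; run: () => void }

// Runs steps in order until the budget is spent, then reports what is left
// so a later tool call (or a background job) can pick it up.
function runWithinBudget(
  steps: Step[],
  budgetMs: number,
  now: () => number = Date.now,
): { completed: string[]; remaining: string[] } {
  const deadline = now() + budgetMs
  const completed: string[] = []
  let index = 0
  while (index < steps.length && now() < deadline) {
    steps[index].run()
    completed.push(steps[index].label)
    index++
  }
  return { completed, remaining: steps.slice(index).map((s) => s.label) }
}

// Deterministic demo: a fake clock where each step "takes" 4 seconds.
let fakeNow = 0
const demoSteps: Step[] = ["migrate", "index a.ts", "index b.ts", "index c.ts"].map(
  (label) => ({ label, run: () => { fakeNow += 4000 } }),
)
// Budget = 10 s limit minus 4 s of headroom for the longest step.
const outcome = runWithinBudget(demoSteps, 6000, () => fakeNow)
console.log(outcome)
```

The deadline is only checked before each step starts, so the budget has to leave headroom for the longest single step; here the two completed steps finish at the 8-second mark, inside a 10-second limit.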

MCP Optimization Achieves Many Goals

I've previously experimented with coding agents:

- Vercel AI SDK

- Mastra AI Agent

While coding the agent lifecycle offers more flexibility, MCP and instructions can accomplish quite a lot on their own.

The main advantages I observed in coding the agent lifecycle myself were:

- RAG availability

- Optimized feedback during file operations

- Message compression for cost efficiency

- Dynamic system prompt modification during tasks

The first two can be achieved with MCP and configuration (plus CLI tools), while the latter two require implementing the whole agent yourself, which comes with:

- Usage-based billing concerns

- Complex implementation challenges

  - Even with help from LLMs, the Vercel AI SDK, Mastra, etc., building a chat UI and dynamically modifying the agent's system prompt involve difficult state management

Starting with an MCP-first approach might be worthwhile.

Context Understanding Could Be More Efficient

In the task example, we hit character limits and had to request continuation. While some constraints are unavoidable, I'd like to minimize them.

To improve context understanding at a lower token cost than letting the agent search for context on its own, I included:

- RAG for codebase and source code

- Simplified memory bank

  - Unlike the original memory bank implementation with multiple files, Claude Crew uses a single file with reduced sections

In the example, the prompt and context consumed about 5,500 tokens. I'm considering optimizations like choosing between memory bank and RAG to reduce this overhead.

Conclusion

I've introduced Claude Crew, a tool I developed to enhance Claude Desktop's coding agent capabilities!

https://github.com/d-kimuson/claude-crew

I'm continuing development with the goal of creating a tool that allows you to delegate relatively simple tasks entirely to Claude Desktop within your subscription limits.

Please try it out! Contributions and stars are welcome and motivating!

Footnotes

- Reference: https://www.anthropic.com/engineering/claude-think-tool

- The embedding model costs roughly $1 per 10,000,000 tokens. Indexes are kept up-to-date automatically, and content-hash-based differential updates keep re-indexing to a minimum.
