'Five years of research in seconds': Google DeepMind’s Manish Gupta on driving scientific innovation with AI

At DevSparks 2025, Gupta discusses how the lab’s latest Gemini releases, multilingual research projects, and energy-efficient ‘Matryoshka’ model are tackling some of India’s most pressing real-world problems.

Scientific research has traditionally been a long, painstaking process, often taking years of experimentation and analysis. Manish Gupta, Senior Director at Google DeepMind, says that for some problems this timeline is now collapsing to a few seconds, thanks to artificial intelligence.

“What once took an entire PhD can now be done in a few seconds,” said Gupta, addressing an audience of developers, technologists, and founders at DevSparks 2025, YourStory's flagship developer summit held in Bengaluru.

Speaking at a session titled “Augmenting India with AI: What’s Cooking at Google DeepMind?”, Gupta pointed to DeepMind’s AlphaFold, an AI system that predicts the structures of proteins.

“I feel this area (protein research) hasn’t received as much attention in India,” he said, noting that last year’s Nobel Prize in Chemistry, awarded for work in this field, is ‘a good signal of what’s likely to come.’

“Proteins are the basic building blocks of life, comprising chains of amino acids, which fold into a particular shape that gives them their distinctive properties. A decade ago, it used to take about an entire PhD, close to five years of research, to uncover the structure of a single protein. And today, with the help of this model, AlphaFold, for which Demis Hassabis and John Jumper won the Nobel Prize, this can be done in a few seconds,” he explained.

Gupta added that the AI tool has predicted the structures of all 200 million known proteins and is now used by over two million researchers for applications such as drug discovery and designing enzymes for biodegradable plastics.

Solving for India

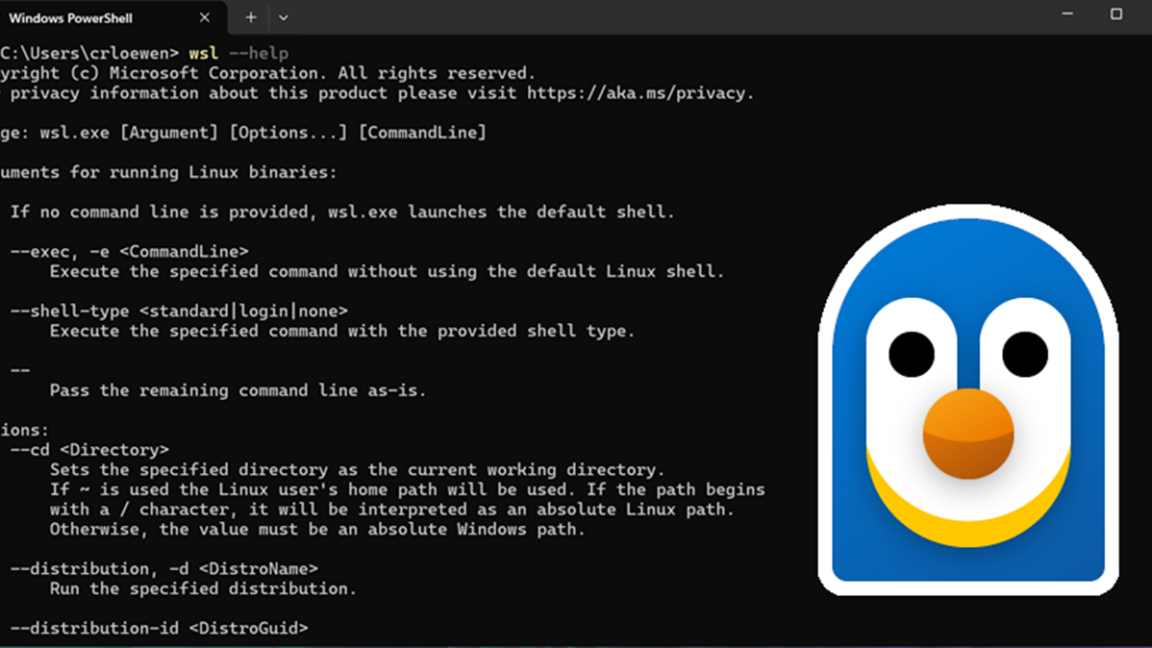

Google has been advancing its AI roadmap through its in-house Gemini models, which go beyond basic language processing to handle multimodal tasks. The tech giant recently launched Gemini 2.5 Pro Preview, which it says excels at coding.

Gupta, who currently leads AI research teams across India and Japan, says a key focus for his team is making these technologies more inclusive and accessible, especially in India, so that they benefit everyone and not just a ‘privileged few’.

He said that large language models (LLMs), including Google’s Gemini, show a huge performance gap in Indian languages compared to English.

At a major conference last year, his team presented a paper benchmarking 29 Indian languages that exposed this disparity. To address it, they began developing a new model called ‘Morni’, designed to understand over a hundred Indian languages.

However, Gupta said that at the time, 72 of the 135 languages were zero-corpus languages, meaning there were no online texts, audio, or transcripts that could be used to train AI models.

“I speak Hindi… and it is spoken in so many different ways depending on the region that you're coming from. In India, there is a saying, ‘kos kos pe badle paani, chaar kos par vaani’, which means that every few kilometers the taste of water changes, and every 10 kilometers or so, the nature of the voice changes,” he said.

This diversity, and the lack of data behind it, led the team to build Project Vaani, launched in partnership with IISc Bangalore to collect speech data from 773 districts of India.

“All of this data is being made freely available. If you are an AI researcher, developer, or building models for Indian languages, you are welcome to make use of this data, which is available from IISc as well as the Bhashini program from the Government of India,” he added.

The team has released the first phase of data, which covers 59 Indian languages, including 15 that were previously zero-corpus languages.

Gupta also highlighted how AI models are being applied to underserved sectors like agriculture, on which nearly 45% of India’s population depends for its livelihood. His team has developed a satellite-based AI model that maps farm boundaries, water sources, and crops across the country.

Building efficient AI models

Gupta spoke about the growing focus on building efficient AI models, especially following the hype around China’s DeepSeek model, which was reportedly built with far fewer computational resources than comparable models.

Gupta explained how Google is adopting a ‘Matryoshka architecture’, named after Russian nesting dolls, in which smaller AI models are nested inside a larger one to improve efficiency.

For example, Gemini Nano, which is largely used on Android devices, follows this approach, allowing the model to adjust its size to the task without losing performance, according to Gupta.

“Even though you see one model, nested inside that model are increasingly smaller models, which are as powerful as, or even more powerful than, an independently trained model of that size. Depending on the complexity of your problem, you can use the appropriate size model,” he said.
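Gupta did not describe Gemini’s internals, so the following is only a toy sketch of the general nesting idea, assuming a single feed-forward layer in the spirit of published Matryoshka-style nested models. One set of weights is kept, and a sub-model of width k simply uses the first k hidden units, so every smaller model lives inside the larger one. The names `NestedFFN` and `pick_width`, the widths (2, 4, 8), and the difficulty-based routing are all hypothetical illustrations, not Google’s implementation.

```python
import random
from typing import List, Sequence


class NestedFFN:
    """Toy Matryoshka-style feed-forward layer (illustrative only; not Gemini).

    One weight set is stored, but a sub-model of width k uses only the first
    k hidden units, so each smaller model is nested inside the larger one.
    """

    def __init__(self, d_model: int, d_hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.d_model = d_model
        self.d_hidden = d_hidden
        # w_in[j]: input weights of hidden unit j; w_out[j]: its output weights.
        self.w_in = [[rng.gauss(0.0, 0.1) for _ in range(d_model)] for _ in range(d_hidden)]
        self.w_out = [[rng.gauss(0.0, 0.1) for _ in range(d_model)] for _ in range(d_hidden)]

    def unit_contribution(self, x: List[float], j: int) -> List[float]:
        """Output vector contributed by hidden unit j (ReLU activation)."""
        h = max(0.0, sum(w * xi for w, xi in zip(self.w_in[j], x)))
        return [h * w for w in self.w_out[j]]

    def forward(self, x: List[float], width: int) -> List[float]:
        """Run the nested sub-model that keeps only the first `width` units."""
        if not 1 <= width <= self.d_hidden:
            raise ValueError(f"width must be in [1, {self.d_hidden}], got {width}")
        out = [0.0] * self.d_model
        for j in range(width):
            for i, c in enumerate(self.unit_contribution(x, j)):
                out[i] += c
        return out

    def param_count(self, width: int) -> int:
        """Number of weights the sub-model of this width actually uses."""
        return 2 * width * self.d_model


def pick_width(difficulty: float, widths: Sequence[int] = (2, 4, 8)) -> int:
    """Hypothetical router: harder inputs get a larger nested sub-model."""
    if not 0.0 <= difficulty <= 1.0:
        raise ValueError("difficulty must be in [0, 1]")
    index = min(int(difficulty * len(widths)), len(widths) - 1)
    return widths[index]


if __name__ == "__main__":
    layer = NestedFFN(d_model=4, d_hidden=8)
    x = [0.5, -1.0, 0.25, 2.0]
    for difficulty in (0.1, 0.5, 0.9):
        width = pick_width(difficulty)
        print(difficulty, width, layer.param_count(width), layer.forward(x, width))
```

Because the width-4 sub-model reuses the first four hidden units of the width-8 model, switching sizes needs no second copy of the weights; only the slice changes. How a production system decides which size to run is an assumption here, represented by the hypothetical `pick_width`.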

“The Google Gemini Flash model, in terms of its computational power per rupee per watt, is roughly five times more powerful than these DeepSeek R1 models,” he added.

He also credited the Bengaluru-based teams with driving much of the innovation behind Gemini Flash, the company’s lightweight, high-efficiency AI model designed to run on fewer computational resources.

“I'm very proud of a lot of the work from our teams in Bangalore that have gone into making these Gemini Flash models best of breed. In India, we have this amazing, once-in-a-lifetime opportunity to tackle problems and build solutions that can benefit not just a billion-plus Indians but the rest of the world as well,” he emphasised.

Edited by Jyoti Narayan
