AI-Powered Phishing Attacks: Can AI Fool Even Cybersecurity Experts?

Cyber threats are evolving fast, and artificial intelligence (AI) is now playing a major role in phishing attacks. These attacks, where hackers try to trick people into revealing sensitive information, are becoming more advanced.

Did you know? In 2023, over 60% of phishing attacks reportedly used AI tools to mimic human writing styles, making scams harder to spot. As AI grows smarter, cybercriminals are weaponizing it to launch stealthy, personalized phishing attacks. But here’s the big question: can these AI tricks fool even cybersecurity pros? Let’s break it down.

What Makes AI-Powered Phishing Different?

Phishing isn’t new. For years, scammers have sent fake emails like “Your account is locked!” to steal passwords or money. But traditional phishing has flaws:

Poor grammar or spelling mistakes.

Generic messages (e.g., “Dear Customer”).

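Easy to block with basic spam filters.

AI removes these tells. Generated messages are fluent, tailored to the recipient, and varied enough to get past filters that look for known templates.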
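To make the traditional flaws concrete, here is a minimal sketch of the kind of rule-based check that catches old-style phishing. The word lists, the function name `traditional_red_flags`, and both sample messages are assumptions made up for illustration, not taken from any real filter.

```python
import re

# Hypothetical word lists chosen for illustration; real filters use far larger sets.
GENERIC_GREETINGS = ("dear customer", "dear user", "dear sir/madam")
COMMON_MISSPELLINGS = ("acount", "verifcation", "pasword", "recieve")


def traditional_red_flags(message: str) -> list[str]:
    """Return the classic phishing tells found in a message."""
    text = message.lower()
    flags = []
    # Tell 1: a generic greeting instead of the recipient's name.
    if any(greeting in text for greeting in GENERIC_GREETINGS):
        flags.append("generic greeting")
    # Tell 2: at least one known misspelling among the words.
    words = set(re.findall(r"[a-z]+", text))
    if words & set(COMMON_MISSPELLINGS):
        flags.append("spelling mistake")
    return flags


old_style = "Dear Customer, your acount is locked! Send your pasword now."
ai_style = "Hi Sam, loved your post about hiking! Our team wants to sponsor you."

print(traditional_red_flags(old_style))  # ['generic greeting', 'spelling mistake']
print(traditional_red_flags(ai_style))   # []
```

The second message raises no flags at all, which is the point: a fluent, personalized message gives simple rules like these nothing to catch.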

Can AI Outsmart Cybersecurity Experts?

Cybersecurity experts are trained to spot red flags. But AI attacks are designed to slip under the radar:

Hyper-Personalized Attacks

AI can analyze your LinkedIn profile, tweets, or shopping habits to build believable stories. For example:

“Hi [Your Name], loved your post about hiking! Our team at [Fake Outdoor Brand] wants to sponsor you. Click here to claim your reward!”

Even experts might struggle to tell that this isn’t legitimate, especially if the email looks identical to a real company’s.

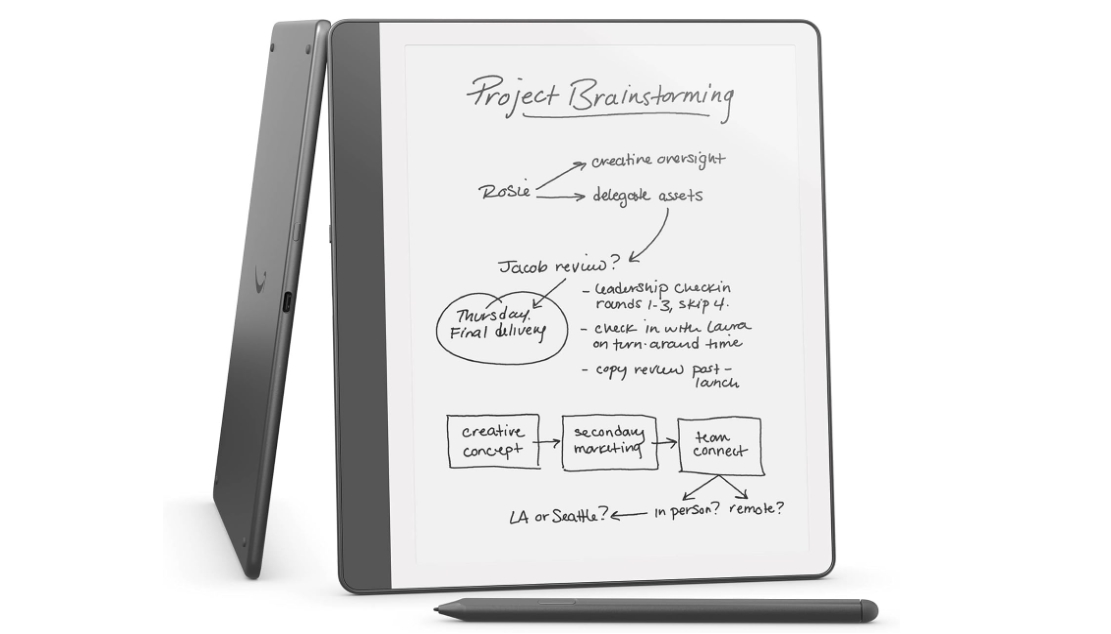

Deepfakes & Voice Cloning

AI can clone voices or create video deepfakes. Imagine your “boss” calling via Zoom, urging you to wire money urgently. Scarily, tools like OpenAI’s Voice Engine can mimic voices with just a 15-second audio sample.

Evading Detection

AI-generated phishing emails often lack suspicious links or attachments. Instead, they urge you to reply with sensitive info. Others use “clean” websites that only turn malicious after you click.

How Cybersecurity Experts Fight Back

While hackers use AI for harm, cybersecurity teams are using it for defense:

AI Detectors: Tools like Darktrace use AI to scan emails for signs of phishing, such as unusual wording or sender behavior.

Behavior Analysis: AI learns your normal habits (e.g., when you log in) and flags odd activity.

Simulated Attacks: Companies use AI to run fake phishing drills and train employees.

But it’s a constant battle. As phishing AI evolves, defenses must adapt faster.
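The behavior analysis idea above can be sketched in a few lines. This is a simplified illustration, not how any particular product works: it assumes login times are stored as hours on a 24-hour clock, uses a plain z-score with a hypothetical threshold of 3, and ignores the fact that hours wrap around midnight.

```python
from statistics import mean, stdev


def is_unusual_login(history_hours: list[int], new_hour: int, threshold: float = 3.0) -> bool:
    """Flag a login whose hour is far from the user's usual hours (z-score test)."""
    if len(history_hours) < 2:
        return False  # not enough history to judge
    avg = mean(history_hours)
    spread = stdev(history_hours)
    if spread == 0:
        # Every past login was at the same hour, so any other hour is unusual.
        return new_hour != avg
    return abs(new_hour - avg) / spread > threshold


usual = [8, 9, 9, 10, 8, 9, 10, 9]  # hypothetical login hours for one user
print(is_unusual_login(usual, 9))   # False
print(is_unusual_login(usual, 3))   # True
```

A 3 a.m. login stands out against a history of morning logins, so it is flagged for review. Real systems combine many more signals, such as device, location, and typing patterns.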

How to Protect Yourself

You don’t need to be a tech genius to stay safe. Follow these steps:

Slow Down: Phishing preys on panic. Check URLs before clicking.

Verify Odd Requests: Call the sender directly if an email seems “off.”

Use Multi-Factor Authentication (MFA): Even if hackers get your password, MFA puts a second barrier in their way.

Update Software: The latest security patches close vulnerabilities that attackers could exploit.
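The "check URLs before clicking" step can also be automated. The sketch below assumes a hypothetical allowlist, `TRUSTED_DOMAINS`, and a made-up helper name, `is_trusted_link`. It accepts a link only if it uses HTTPS and its host is a trusted domain or one of its subdomains.

```python
from urllib.parse import urlparse

# Hypothetical allowlist; in practice this would be the domains you actually use.
TRUSTED_DOMAINS = {"example.com", "example.org"}


def is_trusted_link(url: str) -> bool:
    """Return True only for HTTPS links whose host is a trusted domain or subdomain."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if parsed.scheme != "https":
        return False
    # Match the domain itself or anything ending in ".<domain>".
    return any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS)


print(is_trusted_link("https://login.example.com/reset"))          # True
print(is_trusted_link("https://example.com.account-verify.net/"))  # False
print(is_trusted_link("http://example.com/"))                      # False
```

The second link shows a common trick: the trusted name appears at the start of the host, but the domain that actually controls the page is the part at the end. Checking the end of the hostname rather than searching for the brand name anywhere in the URL avoids that trap.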

Conclusion

AI-powered phishing attacks are a serious threat, even for cybersecurity experts. However, as hackers use AI to create more convincing scams, cybersecurity professionals are also using AI to build better defenses. The key to staying safe is awareness. By being cautious, double-checking unexpected requests, and using security tools, individuals and businesses can reduce the risk of falling victim to AI-powered scams.

The battle between hackers and cybersecurity experts is ongoing, but one thing is clear: AI is changing the game for both sides.
