AI Image Captioning Breakthrough Stops Models from 'Hallucinating' Objects That Aren't There


This is a Plain English Papers summary of a research paper called AI Image Captioning Breakthrough Stops Models from 'Hallucinating' Objects That Aren't There. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

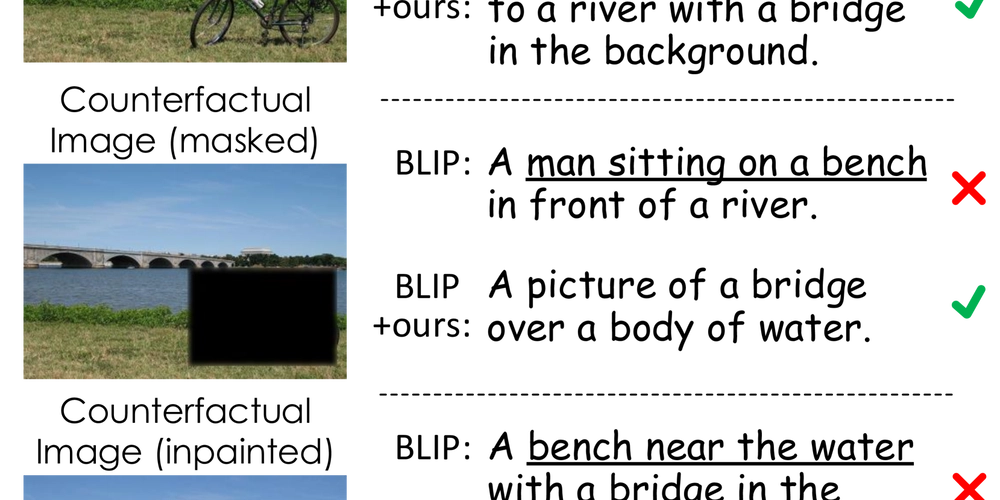

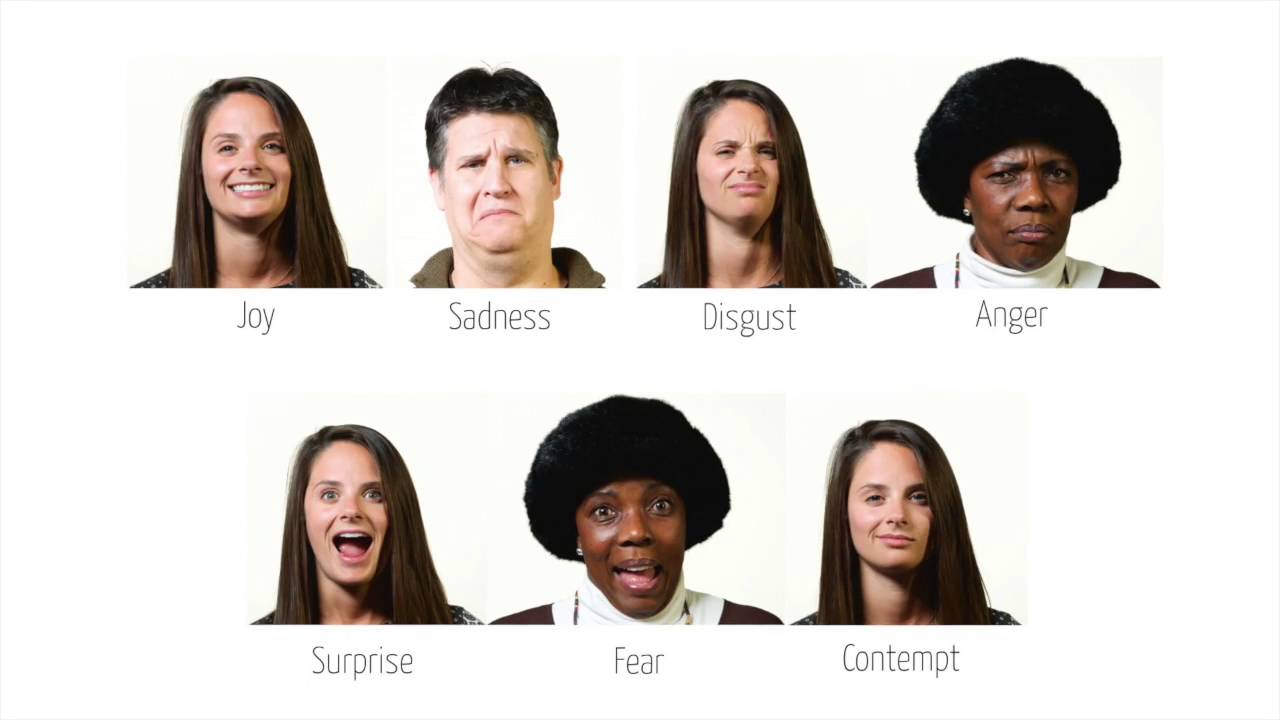

- Examines the problem of "object hallucination" in image captioning models

- Proposes a counterfactually regularized approach to address this issue

- Focuses on improving the reliability and faithfulness of image captions

Plain English Explanation

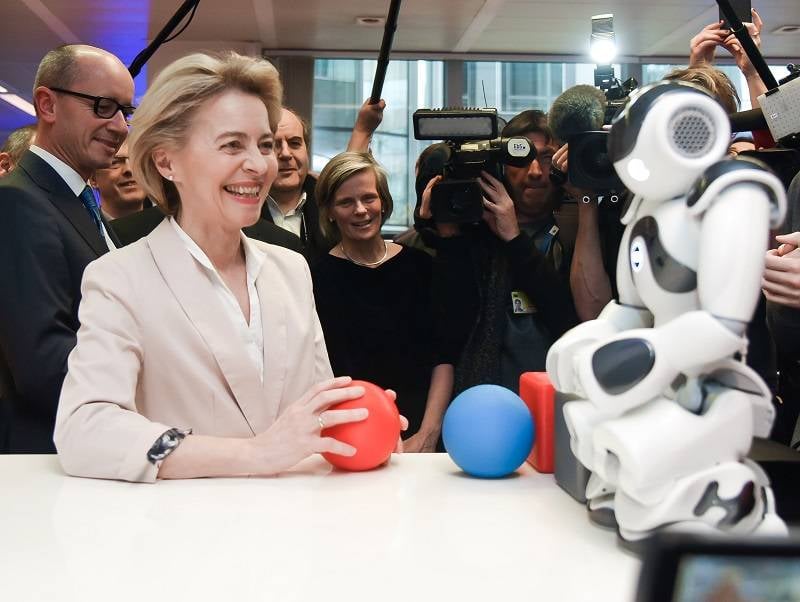

Image captioning models are designed to generate textual descriptions of images. However, these models can sometimes "hallucinate" objects or details that are not actually present in the image. This can lead to inaccurate or misleading captions.
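To make the idea of a "hallucinated" object concrete, here is a minimal sketch that compares the objects a caption mentions against the objects known to be in the image. It is an illustration only, not the paper's method or metric: the function names, the small object vocabulary, and the example caption are all hypothetical, and the sketch assumes a ground-truth list of objects is available for each image.

```python
import re


def mentioned_objects(caption, vocabulary):
    """Return the vocabulary words that appear as whole words in the caption."""
    words = set(re.findall(r"[a-z]+", caption.lower()))
    return words & set(vocabulary)


def hallucinated_objects(caption, objects_in_image, vocabulary):
    """Objects the caption mentions that are not actually in the image."""
    return mentioned_objects(caption, vocabulary) - set(objects_in_image)


def hallucination_rate(caption, objects_in_image, vocabulary):
    """Fraction of mentioned objects that are hallucinated (0.0 if none are mentioned)."""
    mentioned = mentioned_objects(caption, vocabulary)
    if not mentioned:
        return 0.0
    return len(mentioned - set(objects_in_image)) / len(mentioned)


# Hypothetical example: the image contains a dog and a frisbee, but no bench.
vocabulary = {"dog", "frisbee", "cat", "bench"}
objects_in_image = {"dog", "frisbee"}
caption = "A dog catches a frisbee next to a bench"

print(sorted(hallucinated_objects(caption, objects_in_image, vocabulary)))  # ['bench']
print(hallucination_rate(caption, objects_in_image, vocabulary))
```

The sketch matches whole words only, so "catches" is not mistaken for "cat". A real evaluation would also need to handle plurals and synonyms, which this example deliberately leaves out.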

The researchers behind this pap...
