You Don't Need Your Own Backend to Call an LLM from the Front End

Until now, creating any AI experience using large language models (LLMs) required building your own backend and integrating it with the frontend. Even for simple projects or proofs of concept, this approach was time-consuming and demanded a broad skill set. Why couldn’t you just call an LLM directly from the frontend?

Most LLM providers, like OpenAI or Anthropic, offer APIs designed to be called from backend servers, not directly from frontend applications: every request must carry your secret API key, and anything shipped to the browser can be read by any visitor. To expose LLM functionality to the frontend, you typically have to build an entire backend yourself, implement all the necessary endpoints, and secure them. You also don’t want just anyone overusing your AI endpoint, so you need to implement custom rate limiting. That’s a lot of work.
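To give a sense of that work, here is a minimal sketch of per-IP rate limiting you might write yourself in a Node.js backend. It is a hypothetical, in-memory fixed-window limiter (the `FixedWindowRateLimiter` class and its parameters are illustrative names), not how FrontLLM or any provider actually implements limits.

```javascript
// Hypothetical in-memory fixed-window rate limiter keyed by client IP.
// Illustrative only: this is not FrontLLM's (or any provider's) implementation.
class FixedWindowRateLimiter {
  constructor(maxRequests, windowMs) {
    this.maxRequests = maxRequests; // requests allowed per IP per window
    this.windowMs = windowMs;       // window length in milliseconds
    this.windows = new Map();       // ip -> { start, count }
  }

  // Returns true if the request may proceed, false if this IP is over its limit.
  allow(ip, now = Date.now()) {
    const entry = this.windows.get(ip);
    if (!entry || now - entry.start >= this.windowMs) {
      // First request from this IP, or its previous window has expired.
      this.windows.set(ip, { start: now, count: 1 });
      return true;
    }
    if (entry.count < this.maxRequests) {
      entry.count += 1;
      return true;
    }
    return false;
  }
}

// Example: at most 20 requests per IP per hour.
const limiter = new FixedWindowRateLimiter(20, 60 * 60 * 1000);
```

A fixed window is the simplest scheme, but it lets a client burst up to twice the limit around a window boundary, and the in-memory map is lost on restart and not shared between server instances. Handling those cases properly is part of why rolling your own backend takes real effort.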

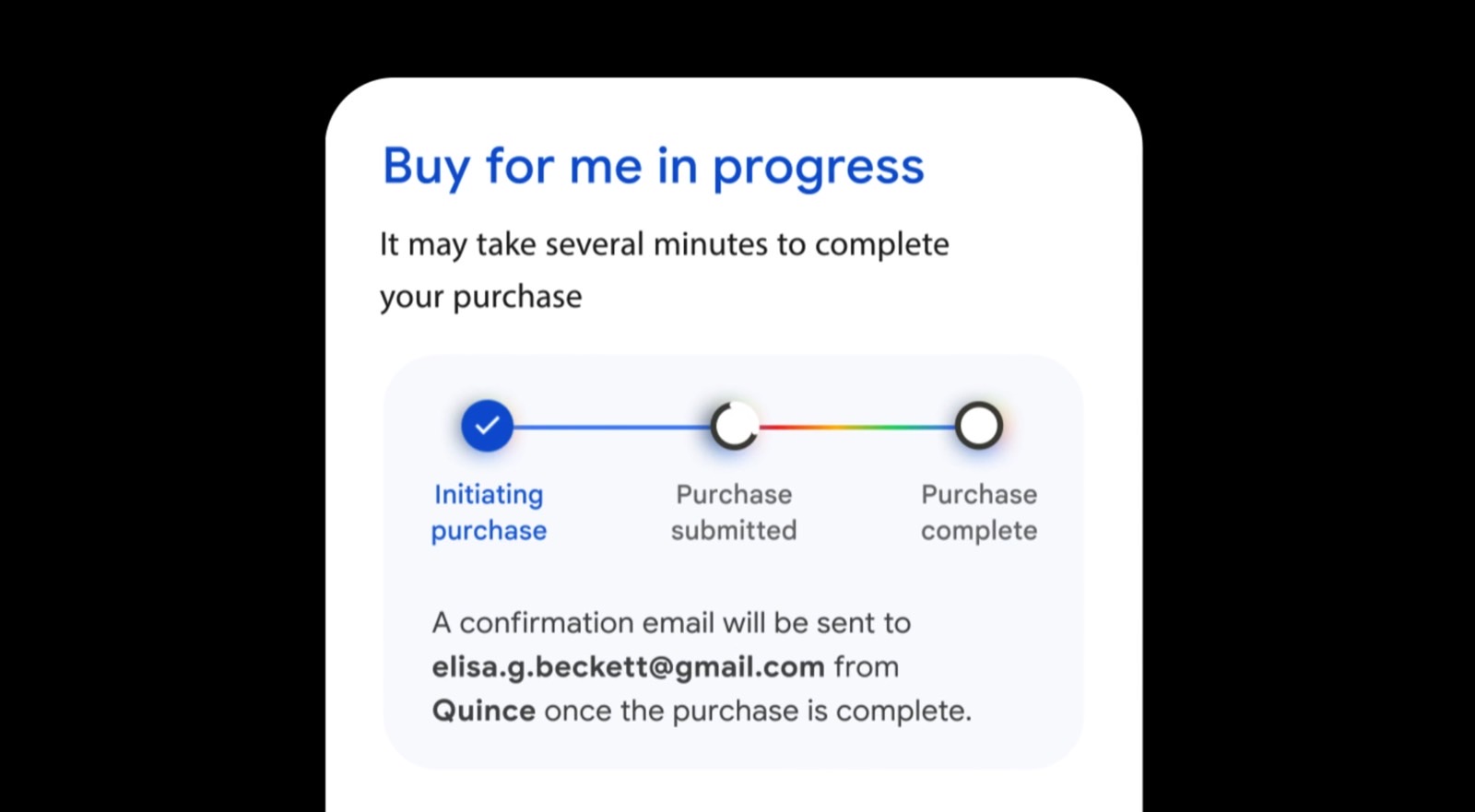

Now, there's an alternative. You no longer need a backend or have to deal with backend-related challenges. You can simply make requests directly from your frontend code.

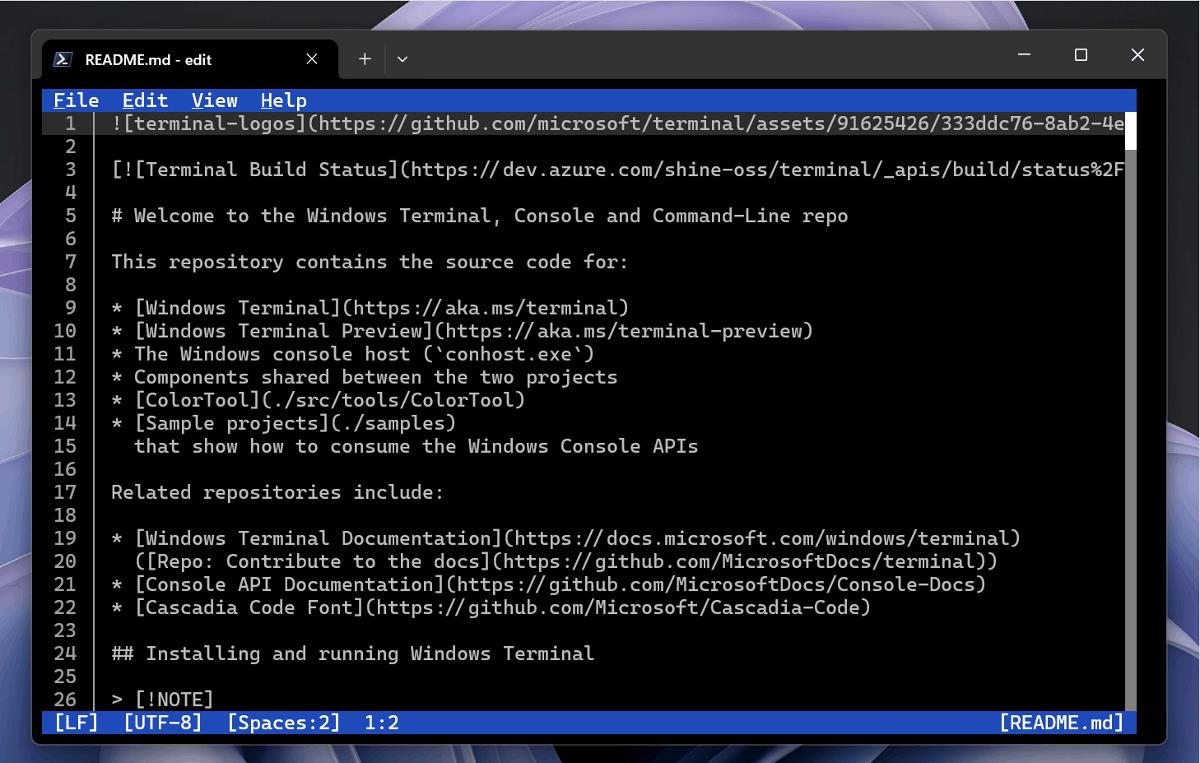

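```javascript
// index.html (inside <script type="module">, which top-level await requires)
// Copy the exact frontLLM(...) call, including its argument, from the snippet in the FrontLLM console.
const gateway = frontLLM('');
const response = await gateway.complete('Hello world!');
const content = response.choices[0].message.content;
```

Streaming works the same way:

```javascript
// index.html (inside <script type="module">)
const gateway = frontLLM('');
const response = await gateway.completeStreaming({
  model: 'gpt-4',
  messages: [{ role: 'user', content: 'Where is Europe?' }],
  temperature: 0.7
});

// Read batches of chunks until the stream reports it is finished.
for (;;) {
  const { finished, chunks } = await response.read();
  for (const chunk of chunks) {
    // OpenAI-style chunk: the newly generated text, if any, is in delta.content.
    console.log(chunk.choices[0].delta.content);
  }
  if (finished) {
    break;
  }
}
```

FrontLLM is a public LLM gateway that forwards requests from your frontend (via CORS) to your LLM provider, such as OpenAI, Anthropic, and others. It includes built-in security features that let you control costs and monitor usage in real time.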

For example, you can:

- Set a maximum number of requests per IP address, per hour or per day.

- Limit the total number of requests across the entire gateway.

- Restrict the maximum number of tokens that your LLM provider can generate.

- Define which models are allowed to be used.

And much more.
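As a rough illustration of what the model and token restrictions mean in practice, the sketch below checks an OpenAI-style chat-completion request body before it would be forwarded. The `policy` object, its field names, and the `applyPolicy` function are hypothetical; they are not FrontLLM's configuration format or internals.

```javascript
// Hypothetical policy object; the field names are illustrative and are not
// FrontLLM's configuration format.
const policy = {
  allowedModels: ['gpt-4', 'gpt-4o-mini'],
  maxTokens: 256, // hard cap on generated tokens per request
};

// Checks an OpenAI-style chat-completion request body against the policy.
// Throws if the model is not allow-listed; otherwise returns a copy whose
// max_tokens never exceeds the cap (the original body is left untouched).
function applyPolicy(body, policy) {
  if (!policy.allowedModels.includes(body.model)) {
    throw new Error(`Model not allowed: ${body.model}`);
  }
  const requested = body.max_tokens ?? policy.maxTokens;
  return { ...body, max_tokens: Math.min(requested, policy.maxTokens) };
}
```

Running a check like this on every forwarded request means that a visitor who edits your frontend code to request a different model or a longer answer still stays within your budget.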

Note that you can use FrontLLM with any front-end framework, such as Angular, React, or Vue. You don't need a framework at all; vanilla JavaScript works just fine.
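Because the gateway is called from plain JavaScript, the streaming loop from the earlier example can be wrapped in a small framework-agnostic helper. The sketch below, with a hypothetical `collectStream` function, assumes only the reader shape shown in that example (`read()` resolving to `{ finished, chunks }`, with OpenAI-style chunks). It accumulates the deltas into the full answer and reports progress through a callback.

```javascript
// Hypothetical helper: collects a streamed answer into one string.
// Assumes read() resolves to { finished, chunks } and each chunk follows
// the OpenAI chat-completion chunk format, as in the streaming example.
async function collectStream(response, onDelta = () => {}) {
  let text = '';
  for (;;) {
    const { finished, chunks } = await response.read();
    for (const chunk of chunks) {
      // Some chunks (for example, a role-only first chunk) carry no text.
      const delta = chunk.choices?.[0]?.delta?.content ?? '';
      if (delta) {
        text += delta;
        onDelta(delta, text); // new piece, full text so far
      }
    }
    if (finished) {
      break;
    }
  }
  return text;
}
```

In a page, you might call `const answer = await collectStream(response, (delta, soFar) => { output.textContent = soFar; });`, where `output` is any element you render into. Since the helper only depends on `read()`, the same function can be used from a React effect or a Vue method.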

How to Get Started

- Create a new account at FrontLLM.com.

- In the console, create a new gateway.

- Choose your LLM provider (e.g., OpenAI) and enter the API key from that provider's developer console.

- Specify the allowed domains from which you want to call the gateway. To test it locally, add `localhost`.

- Save the gateway.

On the right side, you'll see a code snippet showing how to call the LLM directly from the front end.

For more options and usage examples, check out the documentation.
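The allowed-domains step in the setup list is essentially an origin allowlist. As a rough illustration, a gateway-side check might look like the hypothetical `isOriginAllowed` function below. Matching on the exact hostname and ignoring the port (so `localhost` covers any local dev server) is an assumption, not documented FrontLLM behavior.

```javascript
// Hypothetical origin allowlist check, similar to what a CORS gateway must do.
// allowedDomains mirrors the list entered in the console; matching the exact
// hostname and ignoring the port are assumptions made for this sketch.
function isOriginAllowed(origin, allowedDomains) {
  let hostname;
  try {
    hostname = new URL(origin).hostname;
  } catch (e) {
    return false; // missing, "null", or malformed Origin header
  }
  return allowedDomains.includes(hostname);
}
```

Keep in mind that browsers set the `Origin` header themselves, but scripts running outside a browser can send any value they like. An origin check stops other websites from using your gateway, while the per-IP and gateway-wide request limits are what cap abuse from everywhere else.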

More

For more information, visit the FrontLLM website. You'll find several demos showcasing what you can build by calling LLMs directly from the front end.
