Writing Software That Works with the Machine, Not Against It in Go

Hi, my name is Walid, a backend developer who’s currently learning Go and sharing my journey by writing about it along the way.

Resources:

- The Go Programming Language book by Alan A. A. Donovan & Brian W. Kernighan

- Matt Holiday's Go course

In high-performance computing, mechanical sympathy refers to writing software that aligns with the way hardware operates, ensuring that the code leverages CPU architecture efficiently rather than working against it. Go, while being a high-level language, allows developers to write performance-sensitive code when necessary.

This article dives deep into key performance principles, covering CPU architecture, memory hierarchies, data structures, false sharing, allocation strategies, and execution patterns—all with a focus on writing Go code that maximizes hardware efficiency.

Understanding CPU Cores and Memory Hierarchy

Modern CPUs are built with multiple cores. Each core has its own small private caches, backed by a larger cache shared across cores and, behind that, main memory:

- L1 Cache (Fastest, ~1ns latency) – Closest to the CPU core but small in size (32KB–64KB per core).

- L2 Cache (Fast, ~4-10ns latency) – Larger (256KB–1MB per core), shared between threads within the same core.

- L3 Cache (Slower, ~10-30ns latency) – Shared across multiple cores, much larger (4MB–128MB).

- RAM (Much slower, ~100ns latency) – Main system memory, much larger but orders of magnitude slower than caches.

When a program accesses data, the CPU first looks for it in L1, then L2, then L3, before going to RAM. Cache misses (when the requested data isn’t in L1/L2/L3) cause significant slowdowns.

GOAL: Structure data for locality of reference so that frequently accessed data stays in cache as long as possible.
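To make locality of reference concrete, here is a minimal sketch that sums the same matrix in two orders. The names `size`, `grid`, `fillGrid`, `sumRowMajor`, and `sumColMajor` are made up for this illustration. Go lays out each row of a `[size][size]int32` array contiguously, so the row-by-row loop reads memory sequentially, while the column-by-column loop jumps a full row ahead on every read. On most machines the second loop is expected to be noticeably slower, though the exact gap depends on the hardware.

```go
package main

import "fmt"

// size is a hypothetical dimension chosen so the matrix (4 MB) is larger
// than typical L1 and L2 caches.
const size = 1024

// grid is stored row by row: grid[i][0..size-1] are adjacent in memory.
var grid [size][size]int32

// fillGrid writes a simple deterministic pattern into the matrix.
func fillGrid(g *[size][size]int32) {
	for i := 0; i < size; i++ {
		for j := 0; j < size; j++ {
			g[i][j] = int32(i + j)
		}
	}
}

// sumRowMajor visits elements in the order they sit in memory, so every
// cache line that is loaded gets fully used before the next one is needed.
func sumRowMajor(g *[size][size]int32) int64 {
	var total int64
	for i := 0; i < size; i++ {
		for j := 0; j < size; j++ {
			total += int64(g[i][j])
		}
	}
	return total
}

// sumColMajor visits one element per row before moving to the next column.
// Consecutive reads are size*4 bytes apart, so each read tends to touch a
// different cache line.
func sumColMajor(g *[size][size]int32) int64 {
	var total int64
	for j := 0; j < size; j++ {
		for i := 0; i < size; i++ {
			total += int64(g[i][j])
		}
	}
	return total
}

func main() {
	fillGrid(&grid)
	// Both traversals compute the same sum; only the access pattern differs.
	fmt.Println(sumRowMajor(&grid) == sumColMajor(&grid))
}
```

Timing the two functions (for example with Go's benchmark tooling) is the way to see the difference on a given machine; the result is identical either way.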

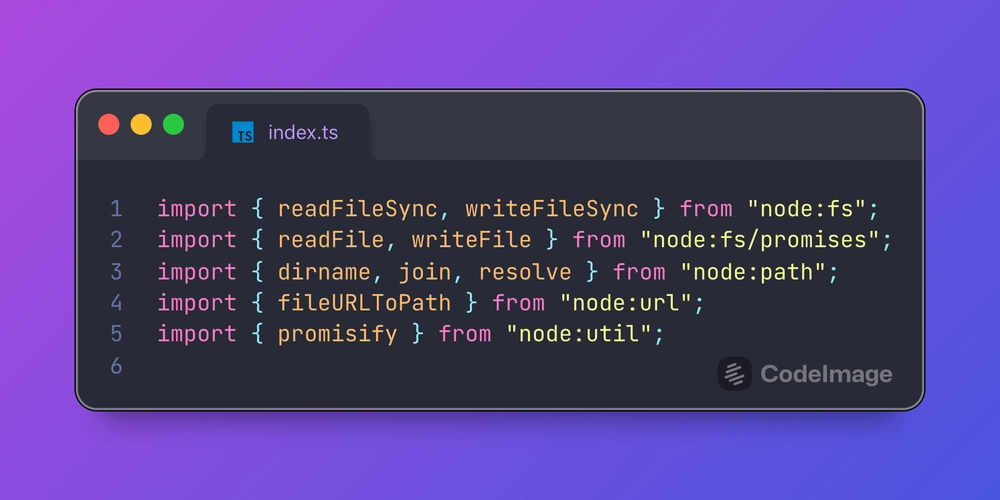

Dynamic Dispatch: Why Too Many Short Method Calls Are Expensive

In Go, calling a method through an interface involves dynamic dispatch, which adds a layer of indirection:

- The method address is looked up at run time in the interface's method table (Go's itab, comparable to a vtable in other languages), which adds overhead to every call.

- The compiler usually cannot inline an indirect call, and the CPU has to predict the call target; a misprediction stalls the pipeline.

- The target function's code may not be in the instruction cache, causing cache misses.

Example: Indirect vs. Direct Method Calls

    package main

    import "math"

    // Shape is satisfied by any type with an Area method.
    type Shape interface {
        Area() float64
    }

    type Circle struct {
        radius float64
    }

    func (c Circle) Area() float64 {
        return math.Pi * c.radius * c.radius
    }

    func main() {
        c := Circle{radius: 10}

        var s Shape = c // Store the Circle in an interface value

        _ = s.Area() // Indirect call via the interface (typically slower)
        _ = c.Area() // Direct call on the concrete type (faster, can be inlined)
    }
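A single call like the ones above is too short to measure, and the compiler may be able to see the concrete type behind `s` and optimize the indirection away. A rough way to observe the cost is to loop over many values, once through a slice of concrete `Circle` values and once through a slice of `Shape` interfaces. The sketch below does that with `testing.Benchmark` from the standard library; `makeShapes`, `sumDirect`, `sumViaInterface`, and `sink` are hypothetical helper names introduced only for this example. Note that the interface version also pays for boxing each `Circle` behind a pointer, so it measures more than dispatch alone.

```go
package main

import (
	"fmt"
	"math"
	"testing"
)

type Shape interface {
	Area() float64
}

type Circle struct {
	radius float64
}

func (c Circle) Area() float64 {
	return math.Pi * c.radius * c.radius
}

// sink keeps results alive so the compiler cannot discard the loops.
var sink float64

// makeShapes builds the same circles twice: as concrete values and as
// interface values.
func makeShapes(count int) ([]Circle, []Shape) {
	circles := make([]Circle, count)
	shapes := make([]Shape, count)
	for i := range circles {
		circles[i] = Circle{radius: float64(i + 1)}
		shapes[i] = circles[i]
	}
	return circles, shapes
}

// sumDirect calls Area on the concrete type, which the compiler can inline.
func sumDirect(circles []Circle) float64 {
	var total float64
	for _, c := range circles {
		total += c.Area()
	}
	return total
}

// sumViaInterface calls Area through the interface, so each call is
// resolved at run time.
func sumViaInterface(shapes []Shape) float64 {
	var total float64
	for _, s := range shapes {
		total += s.Area()
	}
	return total
}

func main() {
	circles, shapes := makeShapes(1000)

	direct := testing.Benchmark(func(b *testing.B) {
		for i := 0; i < b.N; i++ {
			sink = sumDirect(circles)
		}
	})
	indirect := testing.Benchmark(func(b *testing.B) {
		for i := 0; i < b.N; i++ {
			sink = sumViaInterface(shapes)
		}
	})

	fmt.Println("direct:   ", direct)
	fmt.Println("interface:", indirect)
}
```

The printed numbers vary by machine and Go version, so treat them as a relative comparison rather than absolute costs. In most code the difference only matters inside hot loops.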
