Why Do LLMs Need GPUs? Here's What I Found Out

Lately, I've been seeing a lot of headlines about how the latest GPUs are unlocking crazy new capabilities for AI: things like GPT-4, text-to-video models, and even real-time AI-powered gaming characters. Everywhere I look, there's talk about how important GPUs are - but if you're like me, you might be wondering: why are GPUs such a big deal for AI in the first place?

I'll be honest: when I first heard "GPU," my mind immediately went to gaming - powerful graphics cards that help run beautiful 3D worlds. What I didn't realize until recently is that GPUs are just as important (if not more so) in the world of AI.

I did a little digging, and in this post, I'm going to share what I learned - explained the way I wish someone had explained it to me.

CPUs vs GPUs: The Speed vs Specialization Trade-off

First, let's talk about what a GPU actually is, and how it's different from the CPU (the regular brain of your computer).

CPUs (Central Processing Units) are designed for general-purpose tasks. They're great at doing a wide variety of things, one (or a few) at a time, very quickly and flexibly.

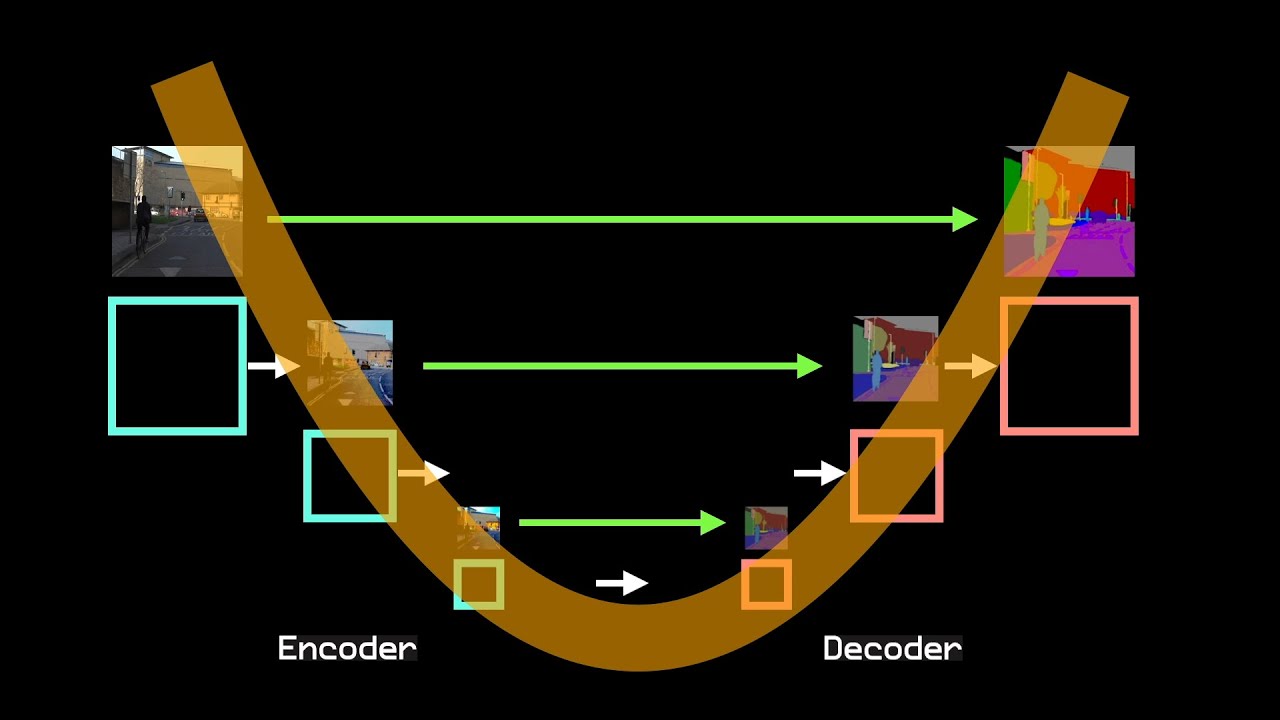

GPUs (Graphics Processing Units) are designed for highly specialized tasks - originally rendering images by doing the same type of math operation thousands or millions of times in parallel.

Imagine you had to solve a giant pile of math problems. A CPU is like hiring one genius mathematician who can solve any problem, one by one. A GPU is like hiring an army of thousands of good mathematicians who can each handle a problem at the same time.

Now, here's where it clicked for me: training and running an AI model - like a Large Language Model (LLM) - is less like solving a single huge problem and more like solving millions of small ones at the same time.

The Math Behind AI Models (It's Simpler Than You Think)

At its core, AI - especially deep learning - is really just lots and lots of matrix math.

(If you've ever seen those arrays of numbers in school, that's what matrices are.)

An LLM like GPT-4 or Claude processes information by taking input (like words), turning it into numbers (embeddings), and then applying a bunch of matrix operations over and over to predict what should come next.
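Here is a minimal sketch of that pipeline in plain Python. The three-word vocabulary, the embedding values, and the weight matrix are all made up for illustration; a real LLM learns these numbers during training and stacks many more layers on top.

```python
# Toy vocabulary with made-up 2-number embeddings (illustrative values only).
vocab = ["the", "cat", "sat"]
embeddings = {
    "the": [1.0, 0.0],
    "cat": [0.0, 1.0],
    "sat": [1.0, 1.0],
}

# Made-up weight matrix: 2 embedding values in, 3 scores out
# (one score per word in the vocabulary).
weights = [
    [0.1, 0.9, 0.2],  # contribution of embedding value 0 to each score
    [0.3, 0.1, 0.8],  # contribution of embedding value 1 to each score
]


def predict_next(word):
    """Turn a word into numbers, multiply by the weights, pick the top score."""
    vec = embeddings[word]  # word -> numbers
    # Vector-matrix multiply: one score per vocabulary word.
    scores = [
        sum(vec[i] * weights[i][j] for i in range(len(vec)))
        for j in range(len(vocab))
    ]
    best = max(range(len(vocab)), key=lambda j: scores[j])
    return vocab[best], scores


next_word, scores = predict_next("the")
print(next_word, scores)
```

The only "intelligence" here lives in the numbers inside `weights`; the computation itself is just multiply-and-add, which is the part a GPU speeds up.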

These operations include things like:

- Multiplying huge matrices

- Adding numbers together

- Applying simple functions (like ReLU, softmax, etc.) across many values at once

All of these tasks happen millions or even billions of times during a single training run.
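To make those three kinds of operations concrete, here is a small sketch of each one in plain Python, chained together the way a single tiny neural-network layer might use them. The input, weights, and bias values are arbitrary examples, and real frameworks run optimized GPU versions of these rather than Python loops.

```python
import math


def matmul(a, b):
    """Multiply matrix a (rows x inner) by matrix b (inner x cols)."""
    inner, cols = len(b), len(b[0])
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


def add_bias(m, bias):
    """Add the same bias vector to every row of m."""
    return [[x + b for x, b in zip(row, bias)] for row in m]


def relu(m):
    """Replace every negative value with zero."""
    return [[max(0.0, x) for x in row] for row in m]


def softmax(row):
    """Turn a list of scores into probabilities that sum to 1."""
    peak = max(row)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


# Arbitrary example values, not taken from any real model.
x = [[1.0, -2.0]]
w = [[0.5, -1.0, 2.0], [1.0, 0.5, 0.0]]
b = [0.0, 0.5, -1.0]

hidden = relu(add_bias(matmul(x, w), b))
probs = softmax(hidden[0])
print(hidden, probs)
```

Notice that every function does the same simple thing to many numbers, and no number has to wait for its neighbor.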

Matrix operations are:

- Predictable

- Repetitive

- Embarrassingly parallel (meaning they can be split up and done at the same time without causing problems)

And that's exactly what GPUs were born to do.
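A rough way to see the "embarrassingly parallel" part: each row of a matrix product can be computed without knowing anything about the other rows, so the rows can be handed out to separate workers. The sketch below uses Python threads purely to show that independence. In standard CPython, threads will not make this pure-Python math faster, and a GPU splits the work far more finely than by row. The function names are my own.

```python
from concurrent.futures import ThreadPoolExecutor


def row_times_matrix(row, b):
    """Compute one output row; needs only this input row and matrix b."""
    return [
        sum(row[k] * b[k][j] for k in range(len(b)))
        for j in range(len(b[0]))
    ]


def matmul_serial(a, b):
    """One worker handles every row, one after another."""
    return [row_times_matrix(row, b) for row in a]


def matmul_split(a, b, workers=4):
    """Rows are handed out to several workers; map() keeps them in order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: row_times_matrix(row, b), a))


a = [[i + j for j in range(3)] for i in range(8)]
b = [[1, 0], [0, 1], [1, 1]]

# Splitting the work changes who does it, not the answer.
print(matmul_serial(a, b) == matmul_split(a, b))
```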

Why GPUs Are Perfect for AI

When I realized this, it made perfect sense why GPUs have become the foundation of AI. Here's what they bring to the table:

Thousands of cores

➔ They can process tons of small calculations at once.

High memory bandwidth

➔ They can move huge amounts of data around very quickly.

Specialized tensor cores (on modern GPUs)

➔ Extra hardware specifically optimized for matrix operations, which AI uses constantly.

In short: the same things that made GPUs good for rendering beautiful video games also make them insanely good for training AI models.
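A quick back-of-the-envelope sketch shows why both the core count and the memory bandwidth matter. Multiplying an m×k matrix by a k×n matrix takes about 2·m·k·n arithmetic operations (one multiply and one add per term). The byte count below is a lower bound that assumes every value is read or written exactly once and stored as a 16-bit number, which is my own simplifying assumption.

```python
def matmul_cost(m, k, n, bytes_per_value=2):
    """Estimate the work in multiplying an (m x k) matrix by a (k x n) matrix.

    Returns (operations, bytes_moved). bytes_moved is a lower bound that
    assumes each input and output value crosses memory once, at
    bytes_per_value bytes each (2 bytes = 16-bit floats, an assumption).
    """
    operations = 2 * m * k * n
    bytes_moved = (m * k + k * n + m * n) * bytes_per_value
    return operations, bytes_moved


ops, moved = matmul_cost(4096, 4096, 4096)
print(f"{ops:,} operations over at least {moved:,} bytes")
```

For this single 4096×4096 multiply, that works out to roughly 137 billion operations over about 100 MB of data, and a model does this kind of thing again and again for every layer and every step.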

But even more interesting - companies like NVIDIA, AMD, and others have now purpose-built their newest GPUs (like the NVIDIA H100) specifically with AI in mind. They're no longer just for gamers; they're AI powerhouses.

What Happens Without GPUs?

I found it wild to learn that without GPUs (or other specialized accelerators like TPUs), training a cutting-edge AI model would be almost impossible.

- Training GPT-3, for example, reportedly took thousands of GPUs running for months non-stop.

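- Without GPUs, the same training run would be impractical: by common back-of-the-envelope estimates, even a single GPU would need centuries to finish it, and a single regular CPU would need far longer.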
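The arithmetic behind estimates like these is simple enough to sketch. The GPT-3 paper reports a total training compute of about 3.14 × 10^23 floating-point operations. The two throughput figures below are round numbers I am assuming for illustration (sustained, not peak), so treat the results as orders of magnitude rather than measurements.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def training_years(total_flops, flops_per_second):
    """Years needed to do total_flops of work at a steady rate."""
    return total_flops / flops_per_second / SECONDS_PER_YEAR


GPT3_TRAINING_FLOPS = 3.14e23  # total compute reported in the GPT-3 paper

# Assumed sustained throughputs (illustrative round numbers, not benchmarks).
ASSUMED_CPU_FLOPS = 1e12   # one regular CPU
ASSUMED_GPU_FLOPS = 3e13   # one data-center GPU

cpu_years = training_years(GPT3_TRAINING_FLOPS, ASSUMED_CPU_FLOPS)
cluster_years = training_years(GPT3_TRAINING_FLOPS, 1000 * ASSUMED_GPU_FLOPS)

print(f"one CPU:    about {cpu_years:,.0f} years")
print(f"1,000 GPUs: about {cluster_years * 365:,.0f} days")
```

Under these assumptions a single CPU lands at roughly ten thousand years, while a thousand GPUs working together finish in about four months. Change the assumed throughputs and the numbers move, but the gap stays enormous.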

Even running an AI model at scale (what's called "inference") can be painfully slow without GPUs - and in a world where users expect instant responses from their AI assistants, speed is everything.

So… Could the Future Be Even Faster?

One thing that got me super excited is that GPU technology is still improving fast.

Every year, newer GPUs are:

- More powerful

- More energy-efficient

- More specialized for AI workloads

And companies aren't stopping at GPUs: they're building other kinds of specialized hardware (like tensor processing units and other AI accelerators) and experimenting with newer ideas such as optical computing to make things even faster.

In other words: the amazing AI tools we're seeing today are just the beginning - and the hardware race behind the scenes is fueling it all.

Final Thoughts

At the start of this journey, I thought GPUs were just something gamers cared about. Now, I see that they're absolutely vital to AI.

If CPUs are like versatile chefs who can cook anything, GPUs are like pizza shops that can churn out 1,000 perfect pizzas an hour. And in the world of AI, we don't need fancy meals - we need a whole lot of perfect pizzas, fast.

I hope this explanation helped you the way it helped me. If you're just starting to learn about AI like I am, it's incredible how much of it boils down to simple ideas - just operating at mind-blowing scales.

I'm still new to the AI world, so if you've got tips, corrections, or cool resources to share, I'd love to hear from you in the comments! Let's learn together.
