How to Ensure Your AI Solution Does What You Expect It to Do

A Kind Introduction to AI Evals

The post How to Ensure Your AI Solution Does What You Expect It to Do appeared first on Towards Data Science.

Predictive models aren’t really new: as humans we have been predicting things for years, starting formally with statistics. However, GenAI has revolutionized the predictive field for many reasons:

- No need to train your own model or to be a Data Scientist to build AI solutions

- AI is now easy to use through chat interfaces and to integrate through APIs

- Unlocking of many things that couldn’t be done or were really hard to do before

All these things make GenAI very exciting, but also risky. Unlike traditional software, or even classical machine learning, GenAI introduces a new level of unpredictability. You’re not implementing deterministic logic; you’re using a model trained on vast amounts of data, hoping it will respond as needed. So how do we know if an AI system is doing what we intend it to do? How do we know if it’s ready to go live? The answer is Evaluations (evals), the concept that we’ll be exploring in this post:

- Why GenAI systems can’t be tested the same way as traditional software or even classical Machine Learning (ML)

- Why evaluations are key to understanding the quality of your AI system and aren’t optional (unless you like surprises)

- Different types of evaluations and techniques to apply them in practice

Whether you’re a Product Manager, Engineer, or anyone working with or interested in AI, I hope this post will help you think critically about AI system quality (and why evals are key to achieving that quality!).

GenAI Can’t Be Tested Like Traditional Software, or Even Classical ML

In traditional software development, systems follow deterministic logic: if X happens, then Y will happen, always. That holds unless something breaks in your platform or you introduce an error in the code, which is the reason you add tests, monitoring and alerts. Unit tests are used to validate small blocks of code, integration tests to ensure components work well together, and monitoring to detect if something breaks in production. Testing traditional software is like checking if a calculator works. You input 2 + 2, and you expect 4. Clear and deterministic, it’s either right or wrong.

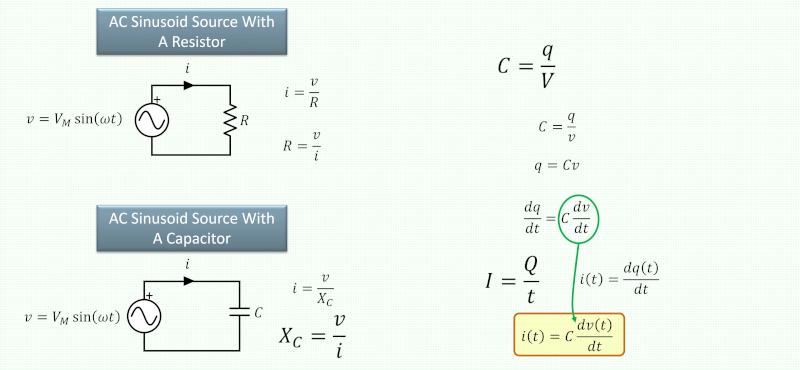

However, ML and AI introduce non-determinism and probabilities. Instead of defining behavior explicitly through rules, we train models to learn patterns from data. In AI, if X happens, the output is no longer a hard-coded Y, but a prediction with a certain degree of probability, based on what the model learned during training. This can be very powerful, but also introduces uncertainty: identical inputs might have different outputs over time, plausible outputs might actually be incorrect, unexpected behavior for rare scenarios might arise…
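The contrast can be sketched in a few lines of Python. The `toy_sentiment_model` function and its probabilities are made up for illustration; it is only a stand-in for a real model that samples its output rather than following a fixed rule.

```python
import random

def add(a: int, b: int) -> int:
    """Traditional software: the same input always yields the same output."""
    return a + b

def toy_sentiment_model(text: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a generative model: it samples a label
    from a probability distribution instead of applying a fixed rule."""
    labels = ["positive", "neutral", "negative"]
    # Made-up probabilities, for illustration only.
    weights = [0.2, 0.1, 0.7] if "refund" in text.lower() else [0.6, 0.3, 0.1]
    return rng.choices(labels, weights=weights, k=1)[0]

# Deterministic check: a single assertion is enough.
assert add(2, 2) == 4

# Probabilistic check: run the same input many times and inspect the distribution.
rng = random.Random(0)
outputs = [toy_sentiment_model("I want a refund now", rng) for _ in range(1000)]
negative_rate = outputs.count("negative") / len(outputs)
print(f"negative rate over 1000 runs: {negative_rate:.2f}")
```

A single pass/fail assertion no longer captures the behavior; what can be checked is a rate over many runs or many examples.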

This makes traditional testing approaches insufficient, and at times not even feasible. Instead of checking a calculator, evaluating an AI system is closer to grading a student’s answers on an open-ended exam. Each question can be answered in many ways, so for any given answer you have to ask: is it correct? Is it above the level of knowledge the student should have? Did the student make everything up but sound very convincing? Just like answers in an exam, AI systems can be evaluated, but they need a more general and flexible approach that adapts to different inputs, contexts and use cases (or types of exams).

In traditional Machine Learning (ML), evaluations are already a well-established part of the project lifecycle. Training a model on a narrow task like loan approval or disease detection always includes an evaluation step, using metrics like accuracy, precision, RMSE or MAE. This step measures how well the model performs, compares different model options, and informs whether the model is good enough to move forward to deployment. In GenAI this usually changes: teams use models that are already trained and have already passed general-purpose evaluations, both internally on the model provider side and on public benchmarks. These models are so good at general tasks, like answering questions or drafting emails, that there’s a risk of overtrusting them for our specific use case. It is still important to ask “is this amazing model good enough for my use case?”. That’s where evaluation comes in: to assess whether predictions or generations are good for your specific use case, context, inputs and users.
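For readers less familiar with the classical metrics mentioned above, here is a minimal pure-Python sketch of accuracy, precision, MAE and RMSE. The labels and prices are invented toy data; in practice a library such as scikit-learn would normally be used instead.

```python
import math

def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def precision(y_true, y_pred, positive=1):
    """Of the items predicted positive, the share that really are positive."""
    true_of_predicted_pos = [t for t, p in zip(y_true, y_pred) if p == positive]
    if not true_of_predicted_pos:
        return 0.0
    return sum(t == positive for t in true_of_predicted_pos) / len(true_of_predicted_pos)

def mae(y_true, y_pred):
    """Mean absolute error for numeric predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error; penalizes large errors more than MAE."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

# Toy classification data (1 = loan repaid, 0 = not repaid).
labels      = [1, 0, 1, 1, 0, 1, 0, 0]
predictions = [1, 0, 0, 1, 1, 1, 0, 0]
print(accuracy(labels, predictions), precision(labels, predictions))

# Toy regression data (house prices, in thousands).
true_prices      = [200, 310, 150]
predicted_prices = [210, 300, 170]
print(mae(true_prices, predicted_prices), rmse(true_prices, predicted_prices))
```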

There is another big difference between ML and GenAI: the variety and complexity of the model outputs. We are no longer returning classes and probabilities (like the probability that a client will repay a loan), or numbers (like a predicted house price based on its characteristics). GenAI systems can return many types of output, of different lengths, tone, content, and format. Similarly, these models no longer require structured and tightly defined input, but can usually take nearly any type of input: text, images, even audio or video. Evaluating therefore becomes much harder.

Why Evals aren’t Optional (Unless You Like Surprises)

Evals help you measure whether your AI system is actually working the way you want it to, whether it is ready to go live, and whether it keeps performing as expected once live. Here is why evals are essential:

- Quality Assessment: Evals provide a structured way to understand the quality of your AI’s predictions or outputs and how they will integrate in the overall system and use case. Are responses accurate? Helpful? Coherent? Relevant?

- Error Quantification: Evaluations help quantify the percentage, types, and magnitudes of errors. How often do things go wrong? What kinds of errors occur more frequently (e.g. false positives, hallucinations, formatting mistakes)?

- Risk Mitigation: Evals help you spot and prevent harmful or biased behavior before it reaches users, protecting your company from reputational risk, ethical issues, and potential regulatory problems.

Generative AI, with its free input-output relationships and long text generation, makes evaluations even more critical and complex. When things go wrong, they can go very wrong. We’ve all seen headlines about chatbots giving dangerous advice, models generating biased content, or AI tools hallucinating false facts.

“AI will never be perfect, but with evals you can reduce the risk of embarrassment – which can cost you money, credibility, or a viral moment on Twitter.”

How Do You Define an Evaluation Strategy?

So how do we define our evaluations? Evals aren’t one-size-fits-all. They are use-case dependent and should align with the specific goals of your AI application. If you’re building a search engine, you might care about result relevance. If it’s a chatbot, you might care about helpfulness and safety. If it’s a classifier, you probably care about accuracy and precision. For systems with multiple steps (like an AI system that performs search, prioritizes results and then generates an answer) it’s often necessary to evaluate each step. The idea here is to measure if each step is helping reach the general success metric (and through this understand where to focus iterations and improvements).

Common evaluation areas include:

- Correctness & Hallucinations: Are the outputs factually accurate? Are they making things up?

- Relevance: Is the content aligned with the user’s query or the provided context?

- Format: Are outputs in the expected format (e.g., JSON, valid function call)?

- Safety, Bias & Toxicity: Is the system generating harmful, biased, or toxic content?

- Task-Specific Metrics: For example, accuracy and precision in classification tasks, ROUGE or BLEU in summarization tasks, and regex checks or error-free execution in code generation tasks.

How Do You Actually Compute Evals?

Once you know what you want to measure, the next step is designing your test cases. This will be a set of examples (the more examples the better, but always balancing value and costs) where you have:

- Input Example: A realistic input that your system would receive once in production.

- Expected Output (if applicable): Ground truth or example of desirable results.

- Evaluation Method: A scoring mechanism to assess the result.

- Score or Pass/Fail: The computed metric that evaluates your test case.
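The four elements above can be sketched as a small data structure plus a runner. All names here (`EvalCase`, `run_evals`, `exact_match`, `fake_system`) are hypothetical and chosen for illustration; `fake_system` is a keyword rule standing in for a real AI system so that the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class EvalCase:
    input_text: str                                  # input example
    expected_output: Optional[str]                   # ground truth, if available
    scorer: Callable[[str, Optional[str]], float]    # evaluation method

def exact_match(output: str, expected: Optional[str]) -> float:
    """Score 1.0 when the output equals the expected label, ignoring case and spaces."""
    if expected is None:
        return 0.0
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0

def run_evals(system: Callable[[str], str], cases, pass_threshold: float = 1.0):
    """Run every case through the system and record score and pass/fail."""
    results = []
    for case in cases:
        output = system(case.input_text)
        score = case.scorer(output, case.expected_output)
        results.append({"input": case.input_text, "output": output,
                        "score": score, "passed": score >= pass_threshold})
    return results

def fake_system(text: str) -> str:
    """Hypothetical stand-in for the AI system under test."""
    return "negative" if "broken" in text.lower() else "positive"

cases = [
    EvalCase("My order arrived broken.", "negative", exact_match),
    EvalCase("Thanks, great support!", "positive", exact_match),
    EvalCase("Still waiting for my refund.", "negative", exact_match),
]
results = run_evals(fake_system, cases)
pass_rate = sum(r["passed"] for r in results) / len(results)
print(f"pass rate: {pass_rate:.2f}")
```

Keeping the scorer on each case makes it possible to mix evaluation methods in one test set, for example exact match for labels and a format check for structured outputs.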

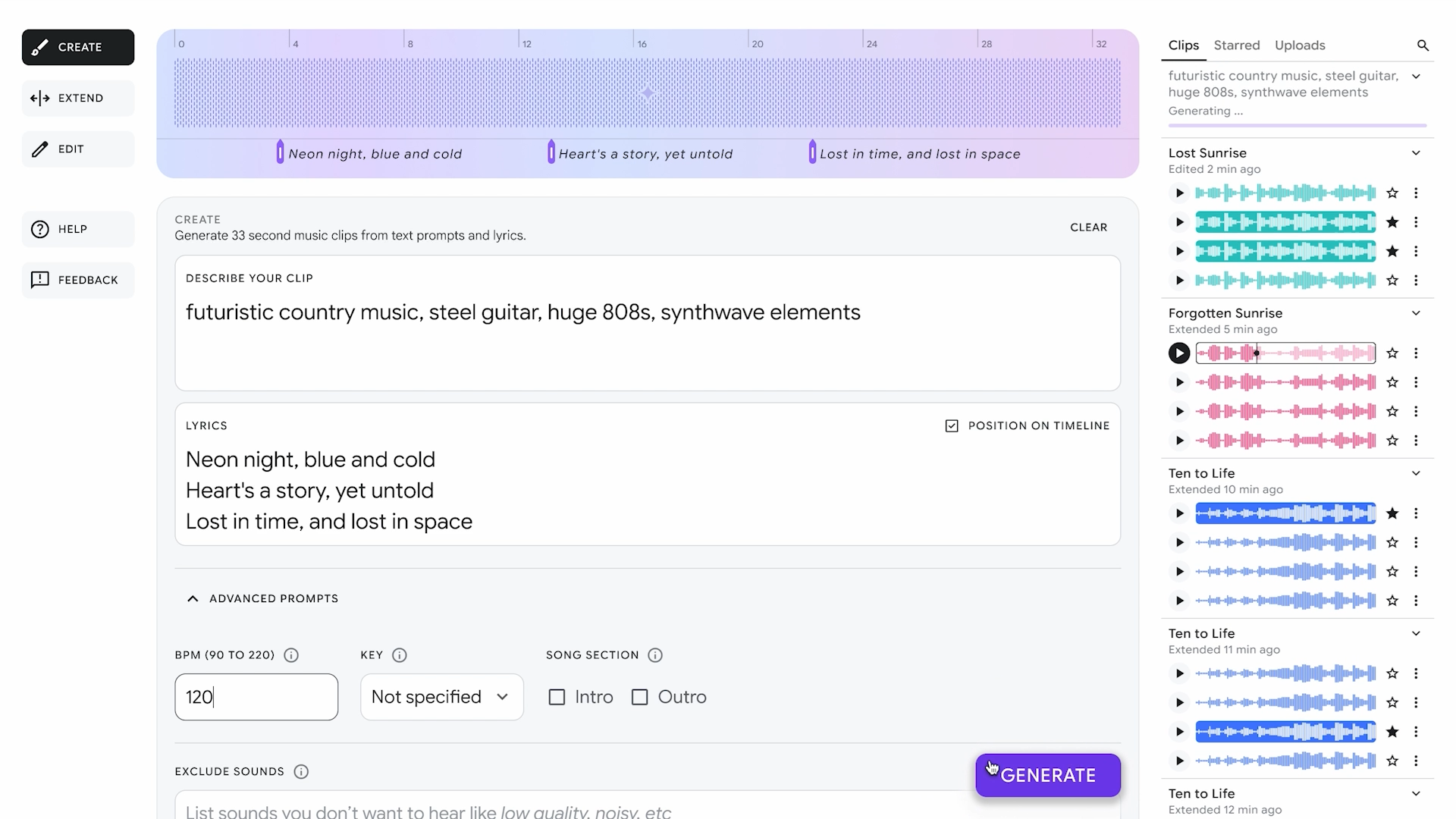

Depending on your needs, time, and budget, there are several techniques you can use as evaluation methods:

- Statistical Scorers like BLEU, ROUGE, METEOR, or cosine similarity between embeddings: good for comparing generated text to reference outputs.

- Traditional ML Metrics like accuracy, precision, recall, and AUC: best for classification with labeled data.

- LLM-as-a-Judge: Use a large language model to rate outputs (e.g., “Is this answer correct and helpful?”). Especially useful when labeled data isn’t available or when evaluating open-ended generation.

- Code-Based Evals: Use regex, logic rules, or test case execution to validate formats.
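Two of these techniques can be illustrated with a rough sketch. `unigram_f1` is a simplified word-overlap score in the spirit of ROUGE-1, not the official ROUGE implementation. `llm_judge` assumes you pass in your own `call_llm` function that sends a prompt to a model and returns its text reply; `fake_llm` is a placeholder so the example runs without any API, and the prompt wording is only an example.

```python
from collections import Counter
from typing import Callable

def unigram_f1(candidate: str, reference: str) -> float:
    """Simplified word-overlap F1 between a generated text and a reference."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    # Counter intersection counts each shared word at most as often as it
    # appears in both texts.
    overlap = sum((Counter(cand) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)

JUDGE_PROMPT = (
    "You are grading an AI answer.\n"
    "Question: {question}\n"
    "Answer: {answer}\n"
    "Is this answer correct and helpful? Reply with PASS or FAIL only."
)

def llm_judge(question: str, answer: str, call_llm: Callable[[str], str]) -> bool:
    """Ask a judge model for a PASS/FAIL verdict on a single answer."""
    prompt = JUDGE_PROMPT.format(question=question, answer=answer)
    verdict = call_llm(prompt).strip().upper()
    return verdict.startswith("PASS")

def fake_llm(prompt: str) -> str:
    """Placeholder judge so the sketch runs offline; swap in a real model client."""
    return "PASS" if "Paris" in prompt else "FAIL"

print(unigram_f1("the cat sat", "the cat sat on the mat"))
print(llm_judge("What is the capital of France?", "Paris", fake_llm))
```

Constraining the judge to a fixed verdict format makes its reply easy to parse, and the judge itself deserves spot checks against human labels before its scores are trusted.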

Wrapping it up

Let’s bring everything together with a concrete example. Imagine you’re building a sentiment analysis system to help your customer support team prioritize incoming emails.

The goal is to make sure the most urgent or negative messages get faster responses — ideally reducing frustration, improving satisfaction, and decreasing churn. This is a relatively simple use case, but even in a system like this, with limited outputs, quality matters: bad predictions could lead to prioritizing emails randomly, meaning your team wastes time with a system that costs money.

So how do you know your solution is working with the needed quality? You evaluate. Here are some examples of things that might be relevant to assess in this specific use case:

- Format Validation: Are the outputs of the LLM call to predict the sentiment of the email returned in the expected JSON format? This can be evaluated via code-based checks: regex, schema validation, etc.

- Sentiment Classification Accuracy: Is the system correctly classifying sentiments across a range of texts — short, long, multilingual? This can be evaluated with labeled data using traditional ML metrics — or, if labels aren’t available, using LLM-as-a-judge.
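Both checks can be sketched together. The JSON shape `{"sentiment": "<label>"}`, the three allowed labels, and the sample outputs below are assumptions made for this example, not a required format.

```python
import json
from typing import Optional

ALLOWED_LABELS = {"positive", "neutral", "negative"}  # assumed label set

def parse_sentiment(raw: str) -> Optional[str]:
    """Return the label if raw is valid JSON with an allowed 'sentiment' value, else None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    label = data.get("sentiment")
    return label if isinstance(label, str) and label in ALLOWED_LABELS else None

# Invented raw model outputs and their human-labeled ground truth.
raw_outputs = [
    '{"sentiment": "negative"}',
    '{"sentiment": "positive"}',
    'Sentiment: negative',          # not JSON
    '{"sentiment": "angry"}',       # label outside the allowed set
    '{"sentiment": "neutral"}',
]
ground_truth = ["negative", "positive", "negative", "negative", "positive"]

parsed = [parse_sentiment(raw) for raw in raw_outputs]
format_pass_rate = sum(p is not None for p in parsed) / len(parsed)
# Invalid outputs count as wrong, since downstream code cannot use them.
accuracy = sum(p == t for p, t in zip(parsed, ground_truth)) / len(ground_truth)
print(f"format pass rate: {format_pass_rate:.2f}, accuracy: {accuracy:.2f}")
```

Reporting the two numbers separately shows whether errors come from the output format or from the classification itself, which points to different fixes.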

Once the solution is live, you would also want to include metrics that are more closely related to the final impact of your solution:

- Prioritization Effectiveness: Are support agents actually being guided toward the most critical emails? Is the prioritization aligned with the desired business impact?

- Final Business Impact: Over time, is this system reducing response times, lowering customer churn, and improving satisfaction scores?

Evals are key to ensuring we build useful, safe, valuable, and user-ready AI systems in production. So, whether you’re working with a simple classifier or an open-ended chatbot, take the time to define what “good enough” means (Minimum Viable Quality), and build the evals around it to measure it!
