What Web Developers Really Think About AI in 2025


I took a picture of my street yesterday and fed it to ChatGPT. After a few seconds of thinking, it was able to correctly pinpoint the photo's location, down to the viewing orientation.

Accomplishing something this complex that quickly would've seemed like total science fiction just a few short years ago. Yet no matter how fast AI is evolving, there's something that's moving even faster: AI hype.

If social media is to be believed, there is a whole vanguard of programmers already living in the future, periodically sending back tales of vibe coding entire apps before the rest of us have even finished running npm install.

But is this really a glimpse into the future that awaits us all, or just a passing mirage that will soon go the way of the NFT?

By the way, if you have a minute, please consider participating in the first-ever State of Devs survey. This time, we're asking about everything that's not code, from health to hobbies to career!

The State of Web Dev AI

That's where the State of Web Dev AI survey comes in. We recently surveyed over 4,000 web developers about how they actually use AI tools as part of their day-to-day work. And we learned some pretty interesting things along the way.

Models

It's no surprise that ChatGPT dominates the usage rankings for model providers, given its head start and popularity. What's more surprising is that it's also the most-loved model family by far: 53.1% of respondents had a positive opinion of it, while only 7.3% had negative feelings (with the rest remaining neutral).

Claude also fared quite well, coming in second in terms of usage, with a similar split of 45.9% positive opinions vs 8.6% negative.

At the other end of the spectrum, Microsoft Copilot drew only 20.4% positive opinions against 28.5% negative ones.
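To put those three sets of figures side by side, here is a small sketch that derives the neutral share and a simple "net sentiment" (positive minus negative) for each model family. The net-sentiment metric and the `summarize` helper are illustrative assumptions rather than anything the survey itself reports, and the neutral share assumes that everyone who was neither positive nor negative was neutral, which the article only states explicitly for ChatGPT.

```python
# Positive / negative opinion percentages quoted in the article.
SENTIMENT = {
    "ChatGPT": (53.1, 7.3),
    "Claude": (45.9, 8.6),
    "Microsoft Copilot": (20.4, 28.5),
}


def summarize(positive: float, negative: float) -> tuple[float, float]:
    """Return (neutral share, net sentiment), both in percentage points.

    Assumption: every respondent who was neither positive nor negative
    counts as neutral. "Net sentiment" is a hypothetical metric used
    here only for comparison.
    """
    neutral = round(100 - positive - negative, 1)
    net = round(positive - negative, 1)
    return neutral, net


for name, (pos, neg) in SENTIMENT.items():
    neutral, net = summarize(pos, neg)
    print(f"{name}: neutral {neutral}%, net sentiment {net:+} points")
```

On these numbers, Microsoft Copilot is the only one of the three with more negative than positive opinions.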

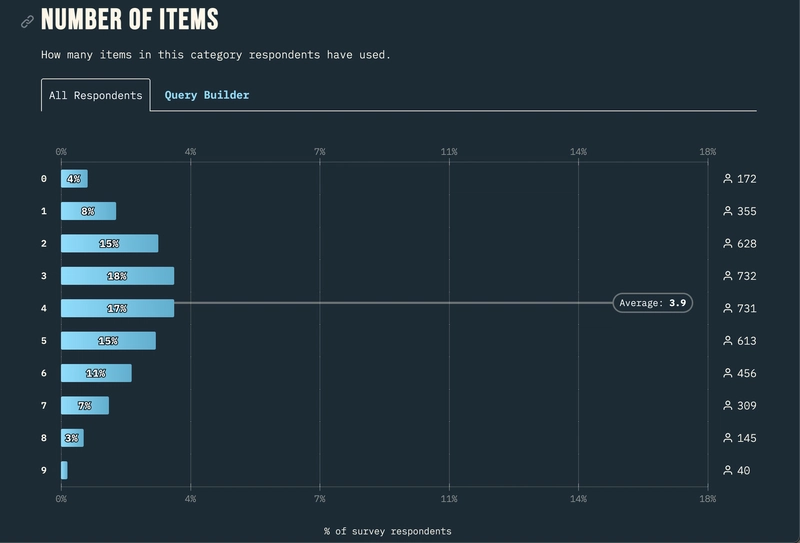

Another interesting fact: the average respondent had tried out 3.9 different models – which goes to show that no matter how dominant ChatGPT may be right now, developers are not afraid to seek out alternatives as they come along.

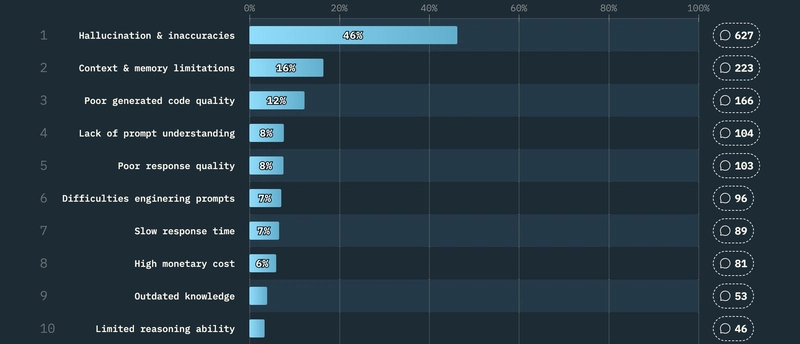

We also asked respondents about the specific pain points that were preventing them from making full use of AI models.

As expected, hallucinations and other inaccuracies were the big one: after all, it doesn't matter how cheap, fast, or convenient a model is if you can't trust its output.

Another common issue was context limitations, which become especially relevant when you try to apply these models to large existing codebases, as opposed to using them to prototype new ideas.

Prompt engineering difficulties were another frustrating obstacle, especially since it's often hard to know if you're getting incorrect output because of a badly phrased prompt, or because of a model's inherent limitations.

Takeaway 1

It doesn't matter how cheap, fast, or intelligent a model is if you can't trust its output.

Other AI Tools

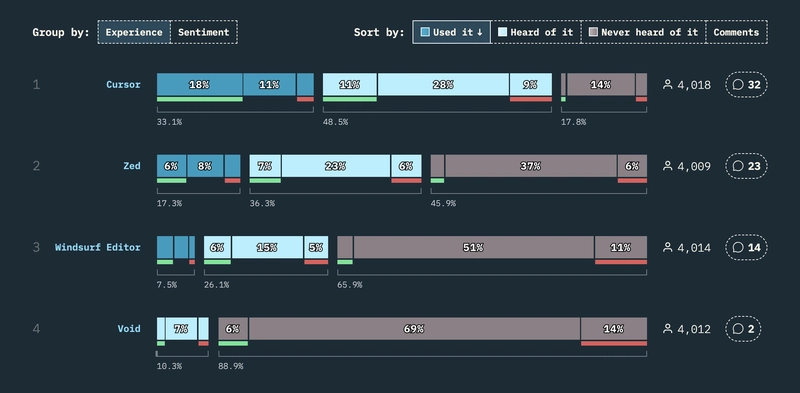

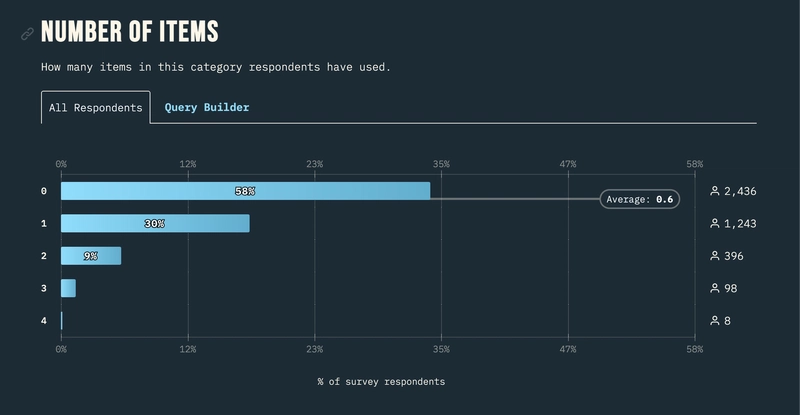

When looking at dedicated AI IDEs, Cursor came in first both in terms of usage and positive sentiment.

Unlike with models, most respondents had not yet tried an AI IDE: only 42% had used one or more tools in this category. And as more AI features become baked into “regular” IDEs such as VS Code, it remains to be seen whether this category will endure.

GitHub Copilot topped the rankings for Coding Assistants, with 75% of respondents having used one or more tools in this category.

Finally, v0 came in number one in terms of dedicated Code Generation services – but with only 31% of respondents having used a tool in this category.

Based on these numbers, it seems that, at least currently, the coding assistant form factor is resonating more with developers than dedicated IDEs or stand-alone services. It's hard to beat the convenience of receiving suggestions right in your usual code editor.

Takeaway 2

At least for now, plug-in code assistants are more popular than dedicated IDEs or code generation services.

Usage

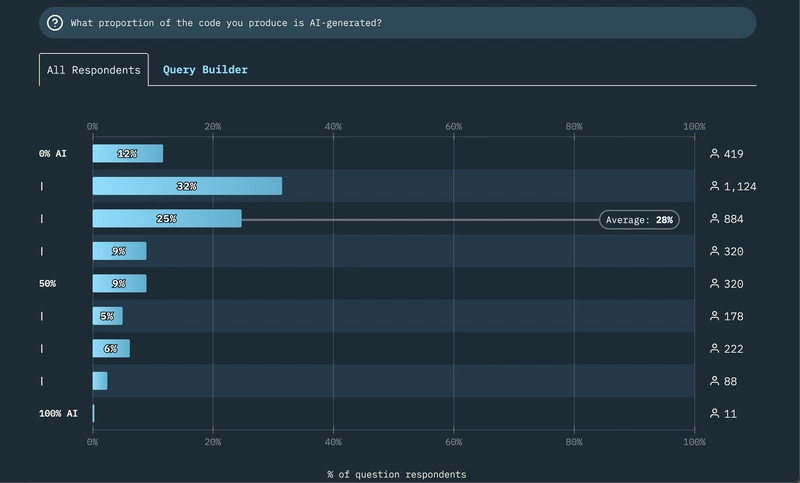

91% of respondents are using AI to generate code, making it by far the most common use case.

This was confirmed by asking respondents what proportion of the code they produce was AI-generated: only 12% answered that none of it was.

Interestingly, this is comparable to the proportion of developers who generate most of their code (>50%) using AI, which was 13%. In other words, while the vast majority of developers do use AI, they still hand-code most of their output, with an average of 28% of code being produced by AI across all respondents.

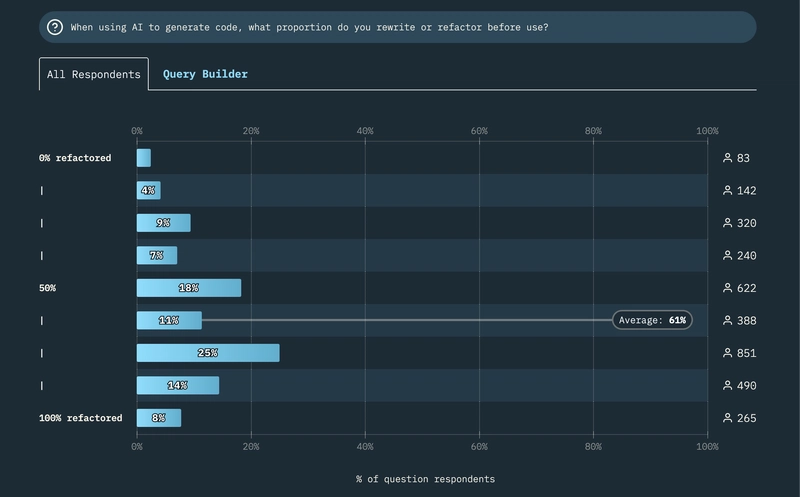

It's worth pointing out though that just because AI writes the first draft of your code doesn't mean you can call it a day. Developers had to refactor 61% of AI-produced code on average.

If you apply this value to the previous ratio of 28%, about 17% of all code is AI-written and then refactored, which leaves a back-of-the-envelope value of just 11% of all code being produced by AI with no human refactoring whatsoever.
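The back-of-the-envelope calculation can be spelled out in a few lines. This is only a rough sketch: it assumes the 61% refactoring rate applies uniformly to the 28% AI-produced share, and `split_ai_code` is a hypothetical helper, not something taken from the survey.

```python
# Survey averages quoted in the article.
AI_SHARE = 0.28          # share of all code produced by AI
REFACTORED_SHARE = 0.61  # share of AI-produced code that needed refactoring


def split_ai_code(ai_share: float, refactored_share: float) -> tuple[float, float]:
    """Return (refactored, untouched) AI-produced code as fractions of all code.

    Assumption: the refactoring rate applies evenly across all AI-produced code.
    """
    refactored = ai_share * refactored_share
    untouched = ai_share * (1 - refactored_share)
    return refactored, untouched


refactored, untouched = split_ai_code(AI_SHARE, REFACTORED_SHARE)
print(f"AI-written, then refactored: {refactored:.1%}")
print(f"AI-written, left untouched:  {untouched:.1%}")
```

Under that assumption, 28% × 61% ≈ 17% of all code is AI-written and later refactored, while 28% × 39% ≈ 11% is AI-written and left untouched.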

The most common reasons for refactoring were poor readability, variable renaming, and excessive repetition, all of which I expect to be solved soon enough.

Takeaway 3

While AI code generation is common, the amount of code being generated by AI with no human oversight is still relatively small.

A Free Ride?

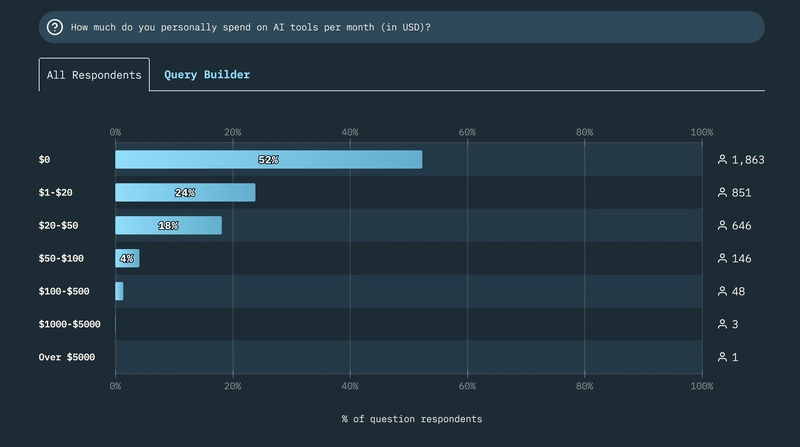

With new models making the news on a weekly basis and everybody and their neighbor asking ChatGPT what to make for dinner, you'd expect AI companies to be swimming in cash.

But the data seems to indicate that might not be the case. 52% of respondents were not paying for AI services personally, while 39% of respondents said their companies weren't spending anything either (although to be fair, many also reported simply not knowing the correct figure).

78% of respondents also reported being interested in running their own models locally (or having already done so), which would presumably let them avoid paying for a costly monthly subscription.

Takeaway 4

Despite AI's popularity, there are signs that monetization might still be a struggle, especially given AI's high operating costs.

Opinions

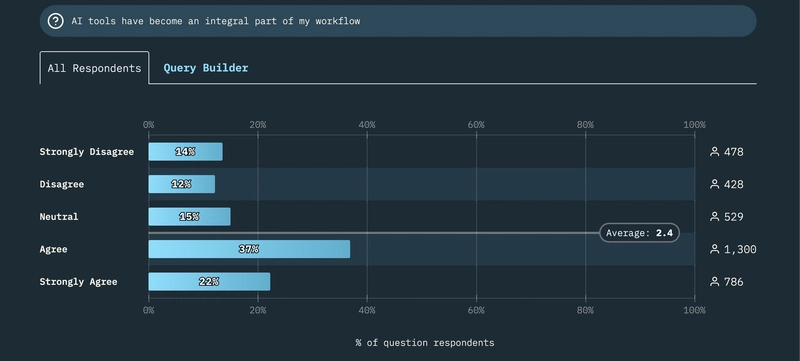

Despite all the talk about pain points, limitations, and frustrations, it was clear that the vast majority of respondents would not want to go back to a pre-AI world, with 59% agreeing with the statement that “AI tools have become an integral part of [their] workflow” – and the same proportion judging that AI tools had made them “a lot more productive”.

Yet ironically, 60% of respondents also agreed that “relying on AI tools will make for less skilled developers overall”.

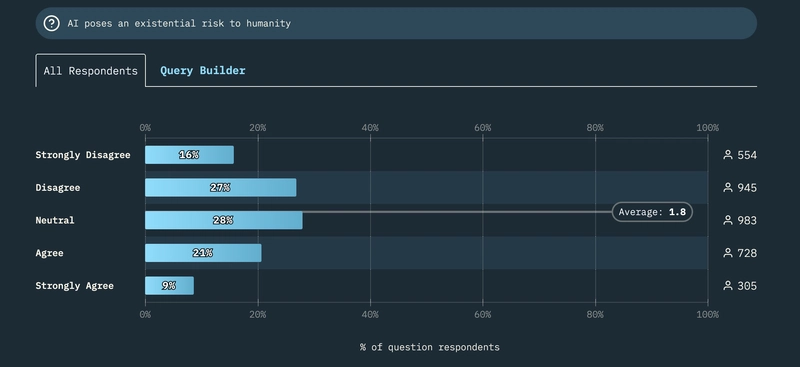

I have to admit I did not think I would ever be asking web developers for their thoughts on the end of humanity. Thankfully, most respondents were neutral when it comes to the existential risk posed by AI, with a slight bias towards optimism.

Finally, opinion was split when it came to predicting whether AGI would be reached within the next 10 years or not – with many rightly pointing out that “AGI” isn't really that well defined to begin with.

Takeaway 5

Despite its very real flaws, AI is now an integral part of developers' workflows – yet they are also conscious of its potential drawbacks.

Conclusion

I really encourage you to read the full report, as it contains a lot more insights.

But if, like me, you're an AI-skeptic developer wondering whether to jump on yet another bandwagon, I think I can safely say that AI is not going anywhere, and that code generation promises to be one of its major superpowers.

That's not to say you should go all-in right from the start: I expect 6 months from now we'll start hearing horror stories about half-baked AI-generated codebases, and the poor human coders tasked with maintaining them.

But if you use AI responsibly and treat it like one more tool in your toolbelt, I think you won't regret making the jump!

P.S. You may have noticed that the survey mostly left out questions of AI ethics and AI's environmental impact. While I consider them both to be extremely important, I have to confess I did not have time to properly address them for this first edition of the survey. If you have suggestions on how best to ask about these issues in the next edition, please don't hesitate to let me know!


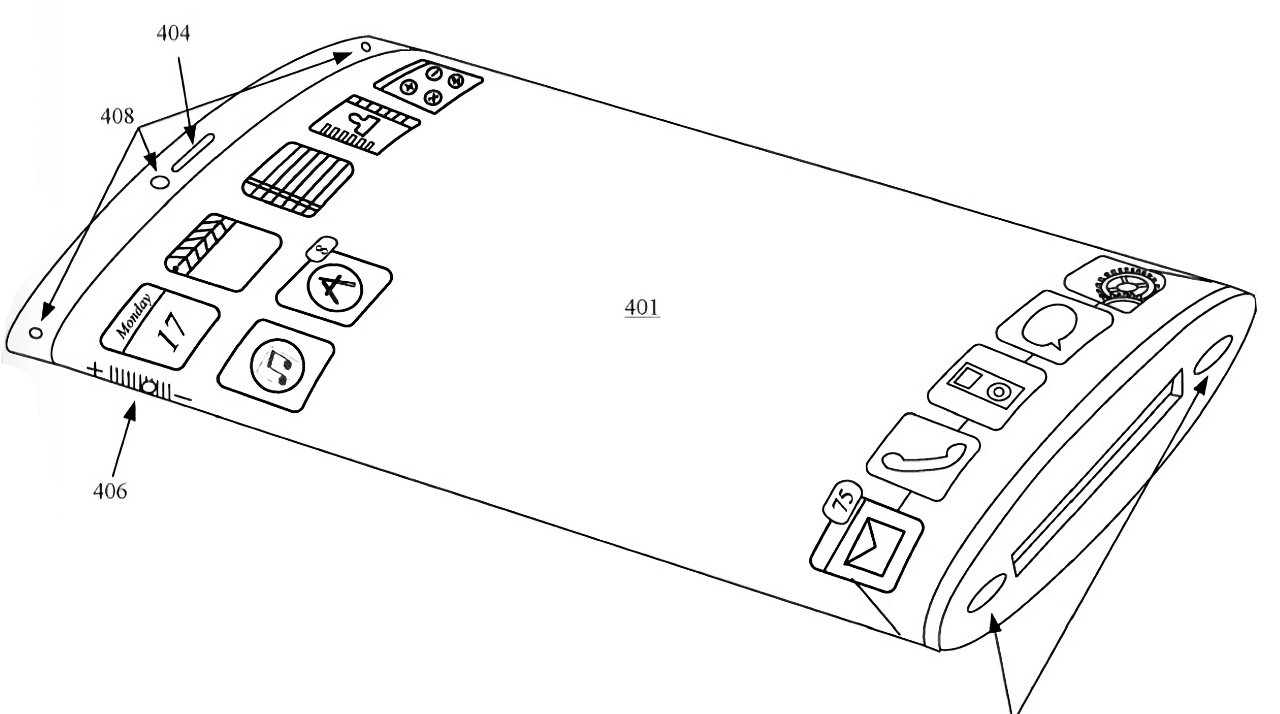
