The Broader Implications of Model-Context-Protocol (MCP)

The history of technology platforms reveals a consistent pattern: what begins as a technical protocol often ends up reshaping entire industries. TCP/IP gave us the internet. REST APIs enabled the API economy. Could Model-Context-Protocol (MCP) have the same transformative impact on AI systems?

Introduction

In recent months, Model-Context-Protocol (MCP) has gained traction in AI circles, particularly on Twitter and developer forums. Most discussions focus on its tool-calling capabilities—the ability to let an AI model invoke external functions dynamically. While this aspect is certainly important, it only scratches the surface of what MCP enables.

In this article, I argue that MCP is not just an API framework for extending LLMs with external tools. Instead, it represents a fundamental shift in how AI assistants function, how they integrate with third-party services, and how they could reshape software ecosystems.

To understand this shift, let’s take a step back and examine how AI products evolve. Historically, technological platforms tend to absorb and replace specialized tools as they mature. Over time, this can make standalone AI products obsolete, just as platform companies like Apple, Microsoft, and Google have repeatedly outcompeted niche software tools by bundling their features into the operating system itself.

MCP, as an emerging framework, plays into this competition in a unique way. Instead of forcing AI assistants to be either all-in-one platforms or narrow, specialized tools, it enables a new kind of AI ecosystem—one where AI assistants and external services interact in a modular, dynamic, and interoperable way.

To explore this, I'll present four different lenses for understanding MCP's impact:

- AI Competitive Dynamics: how AI assistants tend to absorb specialized tools over time, and how this natural evolution shapes the competitive landscape of AI products.

- Economics of Complements: how MCP turns AI assistants into platforms that derive value from external tools rather than competing with them, creating mutually beneficial ecosystems.

- MCP as a Personal Agentic Platform: how MCP lets AI agents integrate directly with user workflows and data, shifting from siloed applications to embedded, context-aware partners.

- MCP as an Agent-to-Agent Protocol: how MCP lays the foundation for structured communication between AI systems, enabling a decentralized network where specialized agents coordinate without human intermediation.

By the end of this article, I hope to show that MCP is not just a technical upgrade—it’s a step toward a new AI paradigm, where assistants act as gateways to a vast network of AI-powered services rather than self-contained tools.

The Bigger Picture: AI Product Evolution & Competitive Dynamics

To fully grasp the significance of MCP, we need to understand the broader forces shaping AI products. AI-powered tools are not just competing on capabilities; they are evolving along two key dimensions: specificity and autonomy.

Lukas Petersson, in his AI Founder’s Bitter Lesson series, presents a useful 2×2 framework for categorizing AI products:

- Specificity: how focused a product is on solving a particular problem.

  - Vertical AI products are designed for a single, well-defined task (e.g., a legal document analyzer).

  - Horizontal AI products can handle a broad range of use cases (e.g., ChatGPT).

- Autonomy: the degree to which AI determines its own workflows.

  - Predefined workflows involve strict, rule-based interactions (e.g., a chatbot that follows a script).

  - Agentic workflows allow AI to make decisions dynamically (e.g., an assistant that independently decides what actions to take).

Petersson argues that with increasing computing power and model improvements, horizontal, agentic AI products will gradually absorb vertical ones, much as general-purpose platforms have absorbed niche applications in traditional software.

This pattern has played out repeatedly in the tech industry:

- When Apple introduced Sherlock 3 for macOS, it diminished the demand for third-party tools like Watson.

- When Apple launched Notes, Reminders and later Passwords, it put pressure on apps like Evernote, OmniFocus and 1Password, forcing them to evolve.

- When Google added built-in translation to Chrome, many casual users stopped needing dedicated translation applications.

This trend is already unfolding in AI. Consider ChatGPT’s search functionality:

- Previously, people turned to services like Perplexity AI for AI-powered search.

- When OpenAI built browsing and search directly into ChatGPT, Perplexity became less essential for many users: why use a separate tool when ChatGPT already has built-in search?

- Similarly, if future AI models perfect code-writing capabilities, tools like Cursor and Cline (AI-powered IDE assistants) could face the same fate.

The severity of this threat depends on how quickly generalist AI assistants can absorb new capabilities. If OpenAI’s ChatGPT, Anthropic’s Claude, or Google’s Gemini can continuously expand their toolset, specialized AI products will have a shrinking window of opportunity before they are made redundant.

This dynamic presents a dilemma for AI startups:

- If they compete directly with AI assistants, they risk being outpaced once general models integrate their capabilities.

- If they differentiate through data access or specialization, they might survive longer but still face pressure as AI assistants improve.

As a result, vertical AI startups often don't fail due to lack of innovation—they fail because general AI platforms catch up and integrate their core functionality, reducing the need for standalone tools.

Rather than playing this zero-sum game, MCP offers a way for AI assistants and specialized AI products to coexist. Instead of AI assistants absorbing every feature, MCP enables them to orchestrate external tools dynamically.

This isn't just about tool-calling—it's about transforming the competition from "AI assistant vs. vertical AI tool" to "AI assistant as a gateway to external AI services".

But for this strategy to work, there needs to be an economic rationale for why AI assistants should enable external tools instead of just absorbing their features. That’s where the Economics of Complements comes in, which we’ll explore next.

Economics of Complements: How AI Assistants Win by Enabling External Tools

So far, we’ve explored how AI assistants tend to absorb specialized tools over time, making it difficult for vertical AI products to survive. But rather than seeing AI assistants and external tools as competitors, there’s another way to think about their relationship — one that follows a well-known pattern in technology: the shift from standalone products to platforms.

Model-Context-Protocol (MCP) enables this shift by allowing AI assistants to move beyond being self-contained monoliths and instead function as platforms that connect users to specialized AI capabilities. This approach mirrors the strategies that have made Windows, iOS, and Ethereum dominant in their respective domains.

To fully appreciate this, we need to examine how platform strategy intersects with economic complementarity — not in the strict pricing sense, but in the broader sense of how ecosystems create value.

In traditional economics, complementary goods exhibit negative cross-elasticity of demand: when the price of one decreases, demand for its complement increases (e.g., cheaper game consoles drive demand for more games). In platform economics, however, the relationship isn’t strictly about price; it’s about network effects and ecosystem value. A platform becomes disproportionately more valuable as it supports a richer ecosystem of complementary tools.
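The cross-price elasticity mentioned above can be written out explicitly. The percentages in the worked example are illustrative only, not data about any real market:

$$
E_{g,c} = \frac{\%\Delta Q_g}{\%\Delta P_c}, \qquad E_{g,c} < 0 \ \text{for complements}
$$

$$
\%\Delta P_c = -10\%,\quad \%\Delta Q_g = +5\% \;\Rightarrow\; E_{g,c} = \frac{+5}{-10} = -0.5
$$

Here $Q_g$ is the quantity of games demanded and $P_c$ is the price of consoles. The negative sign captures complementarity: cheaper consoles raise demand for games.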

We’ve seen this play out in major technological shifts:

- Windows became dominant by supporting a vast ecosystem of third-party software.

- iOS provided an App Store, ensuring that as more apps were built, the iPhone became more valuable.

- Ethereum didn’t define every financial instrument or decentralized application upfront — it provided a framework where developers could create custom smart contracts, allowing the best ideas to emerge organically and later shape the ecosystem.

Each of these platforms succeeded by enabling external innovation rather than absorbing everything in-house.

MCP provides the foundation for AI assistants to do the same. If we think of AI assistants as the next major computing platform, then MCP is akin to an operating system API that allows external AI applications to function as native extensions.

- Without MCP, AI assistants are closed systems — they must either build every capability themselves or let external tools interact in limited ways.

- With MCP, AI assistants become open platforms, where external AI services can integrate seamlessly, making the assistant more powerful without it having to absorb every feature.

This is a fundamental shift. Instead of AI assistants competing with specialized tools, they become the hub that connects users to those tools—just as an operating system connects users to software.
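To make the operating-system analogy concrete, here is a minimal sketch of the server side of that relationship. It assumes MCP’s JSON-RPC 2.0 framing and its `tools/list` and `tools/call` methods, but deliberately avoids any SDK; the `search_docs` tool and its demo backend are hypothetical. The point is that the assistant discovers and calls the capability at runtime instead of building it in.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical capability contributed by an external service.
TOOLS: Dict[str, Dict[str, Any]] = {
    "search_docs": {
        "description": "Search project documentation (hypothetical example tool).",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    }
}


def search_docs(query: str) -> str:
    # Stand-in for a real search backend.
    return f"No results for {query!r} (demo backend)"


HANDLERS: Dict[str, Callable[..., str]] = {"search_docs": search_docs}


def _error(req_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request to an MCP-style tool method."""
    req = json.loads(raw)
    method, req_id = req["method"], req.get("id")
    if method == "tools/list":
        # Advertise every tool with its name, description, and input schema.
        result = {"tools": [{"name": n, **spec} for n, spec in TOOLS.items()]}
    elif method == "tools/call":
        params = req["params"]
        handler = HANDLERS.get(params["name"])
        if handler is None:
            return _error(req_id, -32602, "Unknown tool")
        text = handler(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```

An assistant would first send `tools/list` to learn what the server offers, then `tools/call` with a tool name and arguments. Transport (stdio or HTTP) and the initialization handshake are omitted for brevity.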

This creates a reinforcing loop:

- The more MCP-compatible tools exist, the more valuable the AI assistant becomes.

- The more users rely on AI assistants, the stronger the incentive for developers to create MCP-powered services.

- As the ecosystem grows, AI assistants become essential not just because of their core capabilities, but because of the tools they enable.

This suggests that even as AI models themselves become commoditized, the assistant’s value can keep increasing over time.

History suggests that platforms tend to outlast standalone products. MCP offers AI assistants a way to follow this trajectory: not by replacing external tools, but by making them an integral part of the AI experience. The next question is how this changes the fundamental nature of AI assistants themselves.

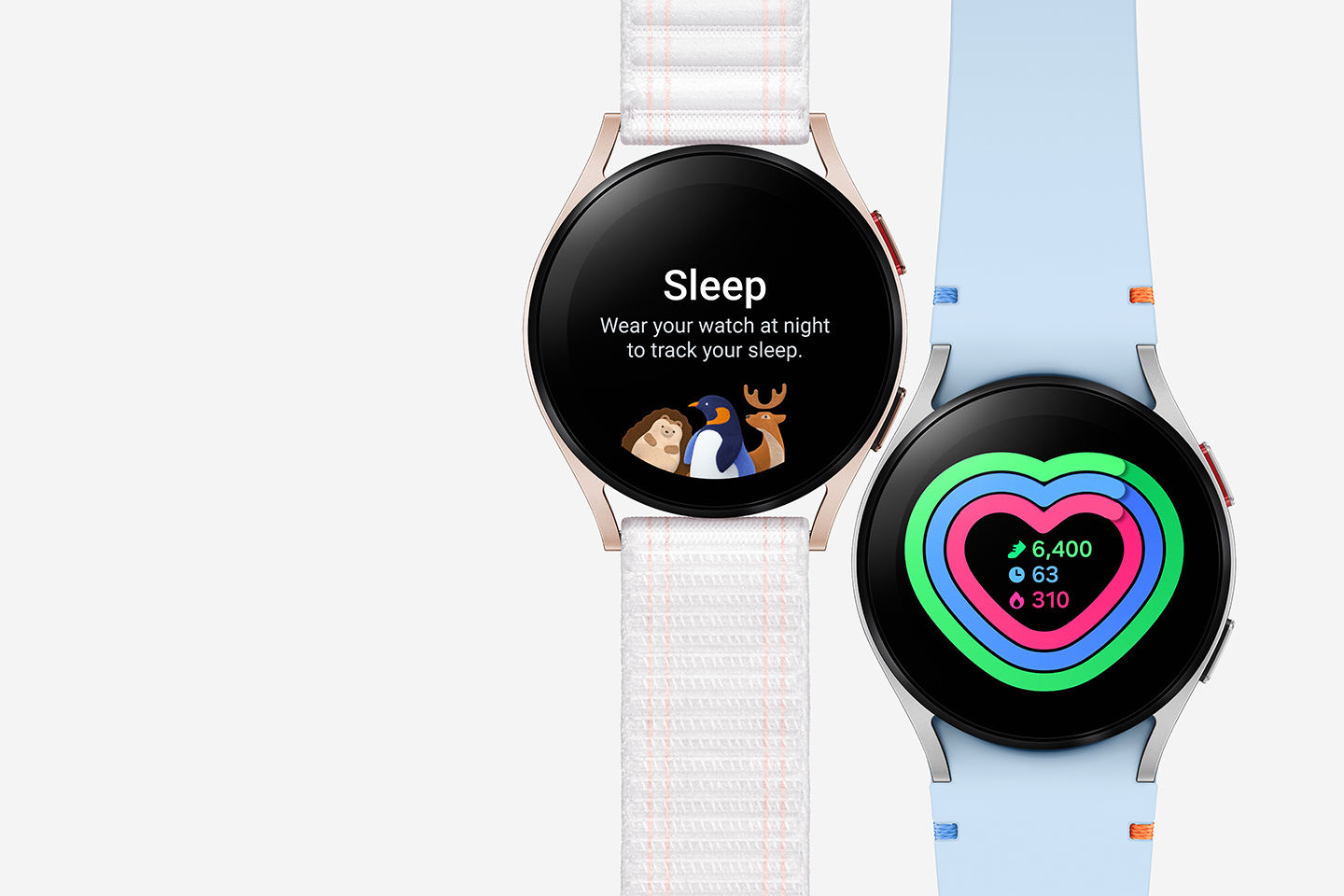

MCP-Enhanced AI Assistant as a Personal Agentic Platform

Following the familiar SaaS playbook, people build AI agents as separate applications with distinct interfaces — something a user must go to, query, and wait for a response. But true AI agents don’t need to be siloed away from where work actually happens.

A real agent isn’t just an API call, nor is it just an LLM answering questions. It’s a system that integrates into the user’s workflow, operates alongside them, and acts as a thinking extension of their intent, bridging structured workflows with on-demand reasoning powered by an LLM.

In the current AI landscape, most “agents” are just thin wrappers around an LLM with a predefined set of tool calls: user makes a request, agent queries an LLM, agent calls some APIs, agent returns a response. But this design is inherently limited. It requires the user to context-switch between their environment and the AI agent, and the agent itself remains stateless and passive — waiting for the user’s input rather than proactively participating in workflows.

MCP flips this dynamic. Instead of treating AI agents as external services, it enables them to function as embedded thinking assistants—always available, context-aware, and capable of both executing structured workflows and reasoning through complex tasks dynamically.

The key to this transformation is Sampling, a feature that lets an MCP server request an LLM completion through the client it is connected to, so it can reason while staying integrated into the user’s workspace. This matters because the server can tap into LLM reasoning exactly when needed, rather than relying on predefined decision trees or a separate model integration. In effect, Sampling can turn an otherwise static piece of software into a full AI agent.
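Below is a minimal sketch of what a Sampling request looks like on the wire, assuming the `sampling/createMessage` method and parameter names from the MCP specification. The server only builds the request; the client decides which model answers and may ask the user to approve it. The system prompt text and the `make_sampling_request` and `extract_text` helpers are illustrative, not part of any SDK.

```python
from itertools import count

_ids = count(1)  # JSON-RPC request ids for this (hypothetical) server


def make_sampling_request(prompt: str, max_tokens: int = 200) -> dict:
    """Build a sampling/createMessage request sent from an MCP server to its client.

    The client, not the server, owns the model connection.
    """
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "sampling/createMessage",
        "params": {
            "messages": [
                {"role": "user", "content": {"type": "text", "text": prompt}}
            ],
            "systemPrompt": "You are helping a tool decide its next step.",
            "includeContext": "none",  # share no extra conversation context
            "maxTokens": max_tokens,
        },
    }


def extract_text(response: dict) -> str:
    """Pull the model's text answer out of a sampling response, if any."""
    content = response["result"]["content"]
    return content["text"] if content.get("type") == "text" else ""
```

Setting `includeContext` to `"none"` keeps the request narrow, which fits the privacy argument made below: the server shares only what the question needs.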

Here’s how this changes the AI assistant’s role:

- No Separate Interface Needed: the AI agent isn’t a separate chatbot or a tool that users need to “consult”; it’s already part of the workflow. Example: a developer working in an IDE doesn’t need to copy-paste errors into ChatGPT, because the agent sees the error in the console output and proposes a fix inside the workspace.

- Built-in LLM Access for Adaptive Decision-Making: unlike traditional agents that must maintain their own LLM connection, an MCP-powered agent can use, via Sampling, the model of the client that invoked it. This allows it to integrate dynamic reasoning into structured workflows. Example: instead of blindly cleaning up log files, an MCP agent can query the LLM on demand, determine whether certain files might be important, and request user confirmation, all without a separate language model integration.

- Retains Context Without Exposing User Data: Since the MCP server is under user control, the assistant doesn’t need to send all user data to an external LLM provider. Instead, it queries only what’s necessary, making the assistant privacy-preserving and deeply integrated at the same time.
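The log-cleanup example can be sketched as a structured workflow that calls the model only where judgment is needed. This is a hypothetical illustration: `ask_llm` stands in for a Sampling round-trip to the client, `confirm` for a user prompt, and the size threshold and prompt wording are arbitrary assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class LogFile:
    name: str
    size_mb: float
    preview: str  # first lines of the file, passed to the model as context


def plan_cleanup(
    files: List[LogFile],
    ask_llm: Callable[[str], str],
    confirm: Callable[[str], bool],
    min_size_mb: float = 100.0,
) -> List[str]:
    """Return the names of log files approved for deletion."""
    approved = []
    for f in files:
        # Structured step: a fixed rule picks candidates, no model call needed.
        if f.size_mb < min_size_mb:
            continue
        # Reasoning step: consult the model only for candidates.
        verdict = ask_llm(
            f"Log file {f.name} begins with:\n{f.preview}\n"
            "Reply SAFE if it can be deleted, otherwise IMPORTANT."
        )
        if verdict.strip().upper().startswith("SAFE"):
            approved.append(f.name)
        # Control step: anything not clearly safe goes to the user.
        elif confirm(f"{f.name} may be important. Delete anyway?"):
            approved.append(f.name)
    return approved
```

Only files that pass the rule-based filter reach the model, and anything the model does not clearly mark as safe goes to the user. That split is what lets deterministic code and on-demand reasoning coexist.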

This is the true evolution of AI assistants—not just smarter chatbots, but integrated, privacy-respecting, context-aware partners that enhance how users work where they work.

This isn't just theoretical. When Cursor IDE added MCP support in early 2025, developers quickly began creating powerful integrations that transformed their workflows. One ML engineer described it as "an absolute game-changer" after connecting Cursor's AI capabilities with GroundX, an open-source RAG system. By building an MCP server with tools to retrieve available documentation topics and search relevant context, they seamlessly integrated their entire project documentation—markdown files, source code, HTML, and PDF documents—directly into their coding environment. The result? "I went from getting mediocre, sometimes wrong answers to 100% truthful, complete answers," all without leaving their IDE or disrupting their workflow.

Interestingly, Sampling is not yet properly supported in Claude Desktop, the application that introduced MCP. This highlights the protocol’s untapped potential: even its originating client hasn’t fully implemented its most powerful capabilities. As third-party developers explore MCP’s possibilities, we’re likely to see implementations that push beyond what its creators initially envisioned.

While this transformation changes how humans and AI interact, it also opens up new possibilities for how AI systems interact with each other.

MCP as an Agent-to-Agent Protocol

As AI assistants like ChatGPT, Claude, and Gemini become more powerful, they increasingly interact with external data sources—retrieving order statuses, pulling in knowledge base articles, or even handling transactions. This raises a fundamental question:

Should general AI assistants retrieve information directly from raw APIs, or should they communicate with domain-specific AI agents that structure and interpret that data?

Customer support provides a clear example of this dilemma. Today, companies deploy AI chatbots to manage customer inquiries. But if ChatGPT or Claude can answer those same questions—pulling information directly from websites or documentation—does the chatbot become redundant?

At first glance, this seems efficient. Instead of needing separate support bots, users can just ask their general AI assistant. But this comes with risks:

- loss of control – companies have no oversight over how AI presents their information,

- inaccurate or conflicting answers – AI assistants may hallucinate policies, leading to customer confusion,

- missed business opportunities – AI assistants don’t prioritize engagement, loyalty, or upselling like a company’s own chatbot would.

This raises a broader question: If AI assistants are going to serve as intermediaries for business data, shouldn't businesses control how that data is structured and delivered?

A possible alternative is AI-to-AI communication, where general AI assistants don't directly scrape business data but instead query domain-specific AI agents that serve as structured, authoritative sources of truth.

This approach could ease the tension in Petersson’s framework between specificity and autonomy. Rather than forcing a choice between vertical specificity and horizontal versatility, MCP enables a network of specialized agents that collectively provide both:

- Vertical agents handle domain-specific tasks with deep expertise and controlled data access

- Horizontal assistants provide the unified interface and orchestration layer

- Together, they balance autonomy (through AI-driven decision making) with control (through structured, business-authorized information channels)

For customer support, the flow might look like this:

- User asks a general AI assistant: "What’s my order status on Shopify?"

- The AI assistant forwards the query to Shopify’s official MCP-compatible AI endpoint.

- Shopify’s AI agent retrieves the correct response from internal APIs and formats it properly.

- The AI assistant delivers the verified answer back to the user.
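The delegation flow above can be sketched in a few lines. Everything here is hypothetical: `StoreSupportAgent` stands in for a business-owned agent like the Shopify endpoint in the flow, the in-memory `orders` dict replaces internal APIs, and in practice the call would travel over MCP rather than as a direct method call.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


class DomainAgent(Protocol):
    def answer(self, customer_id: str, question: str) -> str: ...


@dataclass
class StoreSupportAgent:
    """Hypothetical business-owned agent with access to internal order data."""
    orders: Dict[str, str]  # customer_id -> order status (stand-in for internal APIs)

    def answer(self, customer_id: str, question: str) -> str:
        status = self.orders.get(customer_id)
        if status is None:
            return "We could not find an order for this account."
        # The business controls wording and content of the reply.
        return f"Your latest order is {status}."


class GeneralAssistant:
    """Routes domain questions to the business's own agent instead of guessing."""

    def __init__(self, agents: Dict[str, DomainAgent]):
        self.agents = agents  # domain -> authoritative agent

    def ask(self, domain: str, customer_id: str, question: str) -> str:
        agent = self.agents.get(domain)
        if agent is None:
            # No authoritative source: say so rather than invent a policy.
            return f"I have no verified source for {domain}."
        return agent.answer(customer_id, question)
```

The general assistant never answers order questions itself: it either relays the authoritative agent’s answer or admits it has no verified source.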

This approach ensures that:

- Users still interact with AI assistants natively—no need to visit a separate chatbot.

- Businesses retain oversight over responses, ensuring brand consistency.

- AI assistants are less prone to misinformation, since they rely on structured, validated sources instead of freeform web data.

This principle extends far beyond customer support. If AI assistants can delegate questions to domain-specific AI agents, they can avoid the pitfalls of direct API access while maintaining adaptability across industries:

- enterprise AI automation – internal AI agents managing HR, finance, IT, and legal tasks could expose structured interfaces for AI assistants to interact with;

- AI in marketplaces and e-commerce – supply chain, logistics, and procurement AI agents could negotiate and coordinate decisions autonomously;

- decentralized AI collaboration — instead of isolated AI models, companies could deploy self-hosted AI agents that communicate securely over MCP;

- personal AI assistants — individuals could maintain multiple AI agents (personal finance, work, health) that share information securely while preserving privacy.

AI assistants could still call APIs directly, but structured AI-to-AI communication can provide better context, stronger security, and more reliable reasoning.

For AI-to-AI communication to work, assistants need to discover, authenticate, and interact with domain-specific AI agents in a structured way. MCP has only recently started to provide three essential capabilities:

- authentication — ensures that AI agents communicate only with trusted sources, preventing unauthorized queries;

- statelessness — allows scalable interactions between multiple agents without requiring persistent user sessions;

- discovery — enables AI agents to find and interact with business-approved AI endpoints, instead of relying on web scraping or hardcoded integrations.
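The three capabilities can be illustrated with a toy model. This is a hypothetical sketch, not MCP’s actual mechanisms (the specification’s real authorization flow builds on OAuth 2.1): a registry that returns only business-approved endpoints, a token check for authentication, and a handler that keeps no session state, so any replica can serve any request. `REGISTRY`, `EXPECTED_TOKEN`, `ORDERS`, and the `*.example` domains are invented for illustration.

```python
import hmac
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Endpoint:
    url: str
    approved: bool  # hypothetical flag the business controls


# Discovery: hypothetical registry mapping a business domain to its agent endpoint.
REGISTRY = {
    "shop.example": Endpoint("https://shop.example/mcp", approved=True),
    "mirror.example": Endpoint("https://mirror.example/mcp", approved=False),
}


def discover(domain: str) -> Optional[Endpoint]:
    """Return the endpoint only if the business approved it; no scraping fallback."""
    ep = REGISTRY.get(domain)
    return ep if ep is not None and ep.approved else None


# Authentication: a shared demo secret stands in for a properly issued access token.
EXPECTED_TOKEN = "demo-secret"
ORDERS = {"A1": "shipped"}  # stand-in for the business's internal data


def handle(request: dict) -> dict:
    """Stateless handler: each request carries its own token and inputs."""
    token = str(request.get("token", ""))
    # Constant-time comparison avoids leaking how much of the token matched.
    if not hmac.compare_digest(token.encode(), EXPECTED_TOKEN.encode()):
        return {"error": "unauthorized"}
    order_id = request["order_id"]
    # No session lookup: the response depends only on this request.
    return {"result": f"{order_id}: {ORDERS.get(order_id, 'unknown')}"}
```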

This is not about replacing APIs but about creating a structured layer where AI assistants communicate through agent interfaces instead of raw data queries. The extent to which this model will take hold remains an open question. But as AI assistants become more deeply embedded in workflows, the need for structured, business-controlled AI interfaces will likely become more apparent.

Conclusion: The Road Ahead for MCP

MCP is often framed as a tool-calling protocol, but that description barely scratches the surface. I believe it’s not just a mechanism for making AI assistants “do things” — it quietly challenges some of our assumptions about what AI assistants are becoming and how they should interact with their environment.

This article is an attempt to explore where applied AI might go if we push MCP to its limits. It’s not a manifesto or a prediction of inevitability—rather, it’s an effort to highlight some fundamental questions that emerge when thinking about this somewhat niche technology:

- Specialization vs. Absorption – As general AI assistants expand their built-in capabilities, will vertical AI products continue to thrive, or will they be absorbed into larger platforms?

- Embedded vs. Standalone AI Agents – If AI agents can function as fully integrated, context-aware assistants within user workflows, do they even need distinct interfaces anymore?

- AI-to-AI Coordination – If AI systems are expected to collaborate rather than compete, shouldn’t they communicate in structured ways instead of relying on users to mediate between them?

MCP offers a fresh perspective on how we might approach these questions. If fully leveraged, the AI ecosystem might take a different shape than what we see today. Instead of separate AI tools being at risk of absorption, they could become natively enshrined within assistants. Rather than forcing AI assistants to be either passive chatbots or fully autonomous, specialized agentic applications, MCP may allow them to exist as structured participants in familiar user workflows. And instead of fragmented, standalone services, AI could function more like a network of interoperable agents, dynamically discovering and calling relevant capabilities.

None of this is inevitable. MCP doesn’t dictate how AI must evolve, but it provides a framework that makes these directions possible — offering an alternative to the usual paths of centralization, monolithic assistants, or isolated vertical tools struggling for relevance. Rather than just making AI assistants incrementally smarter or more tool-capable, MCP opens the door for them to act as gateways, not just to better answers, but to a broader ecosystem of intelligence. Those who continue to view MCP as merely a tool-calling mechanism risk missing the larger paradigm shift happening right before our eyes—one that will likely determine the architecture of AI systems for years to come.
