Guide to Dify 1.1.3 on AWS using docker-compose, connected to Postgres RDS, with TLS through an AWS ALB

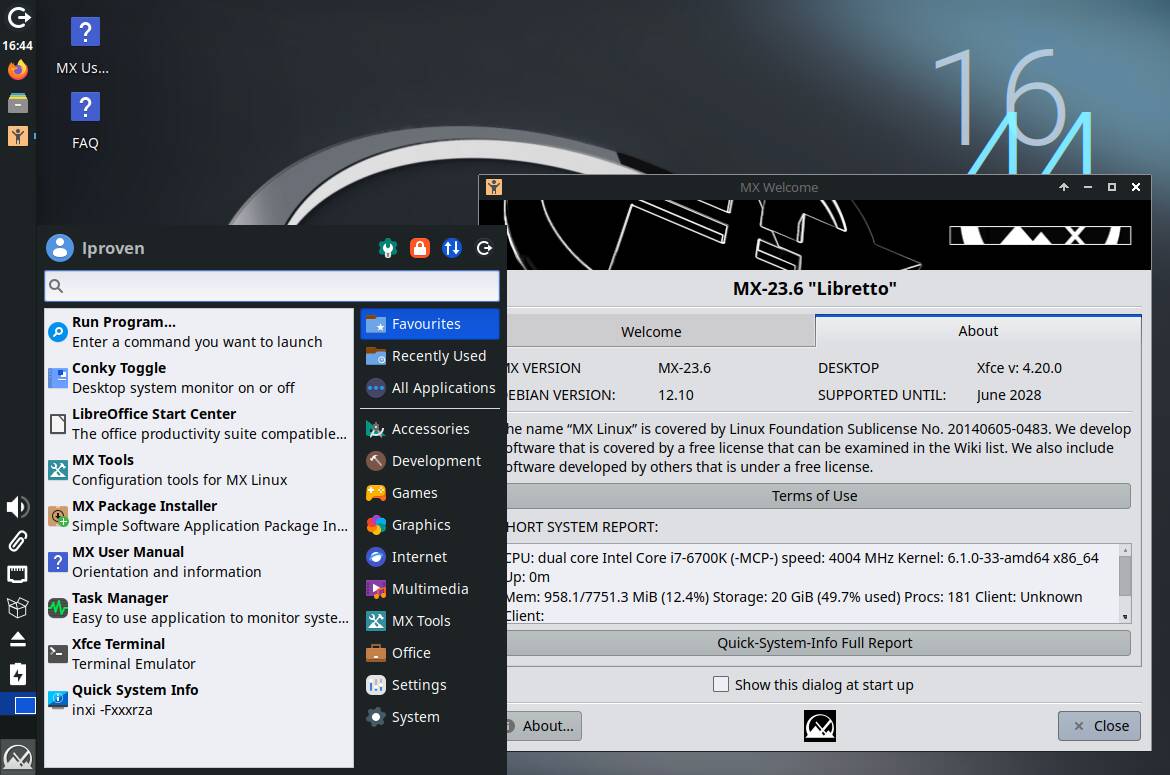

After spending several hours searching the web and tinkering with Dify using docker (more specifically docker-compose) on an EC2 instance, I realized that there are no updated guides to self-hosted Dify on AWS. Or rather, none that replace the default postgres database with RDS.

Why on earth would I want to connect my Dify to RDS?

Because Dify runs as a set of containers, there are plenty of reasons to keep its data outside them, in RDS. Whenever I want to update Dify, I can update it in place, and the data stored in RDS doesn't accidentally disappear. I'm not a veteran engineer or architect, and I am quite aware that accidentally deleting entire knowledge bases from Dify could cause a few headaches. I don't want to spew out the same information as all those AI-written posts full of fancy buzzwords, but I quite like RDS's high availability, scalability, and easy backups, even if it does cost a tiny bit of money to run.

With all of that out of the way, the reason you're probably here is for a guide on how to set it up. So, I will just get straight to it. I will try to keep it extremely simple, as well as cover parts that I believe may trip you up.

Guide on how to set it up

Don't expect this guide to cover every OS and every issue that comes up. I apologize if my way of setting it up causes issues for your specific requirements.

If you are already running another app on port 80 or 443, I explain how to change Dify's ports below.

Requirements (Skipping AWS account and VPC setup)

An EC2 instance running Amazon Linux 2023 (if not, then this guide may not work for you perfectly)

Note: a medium-sized instance or larger is recommended (Dify's own docs list at least 2 vCPUs and 4 GiB of RAM, which is what a t3.medium has). I am running other containers on my instance too, so I chose a t3.large.

EBS storage of 25GB or larger. Type gp3 is fine.

Note: 25GB is enough for just Dify. My separate instance with my old Dify deployment uses 22GB of EBS storage. For my current t3.large instance I chose 40GB, which leaves room to run other containers without filling up the disk. Keep in mind that disk space and memory are separate limits: a strange OOM (Out of memory. Not mana, but I suppose they are the same thing, huh?) error comes from running out of RAM, which is what the instance size above controls.

An EC2 instance profile whose role trusts EC2 (principal: ec2.amazonaws.com, action: sts:AssumeRole) and carries whatever permissions Dify may need in the future. Example: if you are using Amazon Bedrock, you will need to give the role Bedrock permissions, or it will fail!
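The trust relationship described above is a small JSON policy. Here is a minimal sketch, assuming you create the role with the AWS CLI; the function `write_ec2_trust_policy`, the file name `ec2-trust-policy.json`, the role/profile name `dify-ec2-role`, and the instance ID are placeholders of mine, not anything Dify requires.

```shell
# Hypothetical helper: writes the EC2 trust policy to a file and prints its path.
write_ec2_trust_policy() {
  local out="${1:-ec2-trust-policy.json}"
  # Quoted 'EOF' keeps the shell from expanding anything inside the JSON
  cat > "$out" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "ec2.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
  echo "$out"
}

# Example usage (dify-ec2-role and the instance ID are placeholders):
#   policy="$(write_ec2_trust_policy)"
#   aws iam create-role --role-name dify-ec2-role \
#     --assume-role-policy-document "file://$policy"
#   aws iam create-instance-profile --instance-profile-name dify-ec2-role
#   aws iam add-role-to-instance-profile \
#     --instance-profile-name dify-ec2-role --role-name dify-ec2-role
#   aws ec2 associate-iam-instance-profile --instance-id i-0123456789abcdef0 \
#     --iam-instance-profile Name=dify-ec2-role
#   # Only if you use Amazon Bedrock; this AWS managed policy is broad,
#   # so a narrower custom policy is the safer long-term choice:
#   aws iam attach-role-policy --role-name dify-ec2-role \
#     --policy-arn arn:aws:iam::aws:policy/AmazonBedrockFullAccess
```

Writing the policy to a file and passing it with `file://` avoids fighting with JSON quoting on the command line.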

For a TLS (the successor to SSL, what HTTPS uses) connection to your deployment, I suggest an Application Load Balancer (ALB). If you are deploying only for yourself and not for a group of people, you could try fiddling around with certbot and Let's Encrypt instead; a certbot service is included in the docker-compose file we will get from GitHub in a moment.

I won't explain how to set up an ALB and target group, since there are tons of guides online. However, note that the ALB itself keeps listening on ports 80 and 443, while the 443 (TLS) listener's target group should point at port 8080 on the instance, which is the port we expose Dify on later. The port 80 listener should redirect traffic to the 443 listener. The EC2 instance's security group also has to allow inbound TCP 8080 from the ALB's security group, or the ALB can't reach Dify.

A Postgres RDS instance with 20GiB of storage. (20GiB is also the smallest General Purpose SSD size RDS allows, and it's what the free tier covers per month!) The RDS instance's security group must allow inbound TCP port 5432 from the security group you gave your EC2 instance! To keep managing RDS simple, you may also want to connect to it from your local computer with psql or pgAdmin. In that case you'll need to open port 5432 to your local IP as well (the RDS instance then has to be publicly accessible, so keep that rule limited to your own IP).
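The ports and security group rules above can be sketched with the AWS CLI. This is a sketch, not a full setup: it assumes the ALB, target group, ACM certificate, and security groups already exist, and every ALL_CAPS variable is a placeholder for an ID or ARN from your own account. The helper names `run` and `wire_dify_network` are hypothetical; with `DRY_RUN=1` (the default) the commands are only printed, without their original quoting.

```shell
# Sketch only: the ALB and RDS wiring described above, via the AWS CLI.
# Every ALL_CAPS variable is a placeholder for an ID/ARN from your own account.
# DRY_RUN=1 (the default) prints the commands; DRY_RUN=0 actually runs them.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

wire_dify_network() {
  # RDS: allow Postgres (5432) only from the EC2 instance's security group
  run aws ec2 authorize-security-group-ingress --group-id "$RDS_SG_ID" \
    --protocol tcp --port 5432 --source-group "$EC2_SG_ID"
  # EC2: allow the ALB to reach Dify's nginx on 8080
  run aws ec2 authorize-security-group-ingress --group-id "$EC2_SG_ID" \
    --protocol tcp --port 8080 --source-group "$ALB_SG_ID"
  # Target group: send traffic to the instance on port 8080
  run aws elbv2 register-targets --target-group-arn "$TG_ARN" \
    --targets "Id=$INSTANCE_ID,Port=8080"
  # 443 listener: terminate TLS with an ACM certificate, forward to the target group
  run aws elbv2 create-listener --load-balancer-arn "$ALB_ARN" \
    --protocol HTTPS --port 443 --certificates "CertificateArn=$CERT_ARN" \
    --default-actions "Type=forward,TargetGroupArn=$TG_ARN"
  # 80 listener: permanent redirect to the 443 listener
  run aws elbv2 create-listener --load-balancer-arn "$ALB_ARN" \
    --protocol HTTP --port 80 \
    --default-actions 'Type=redirect,RedirectConfig={Protocol=HTTPS,Port=443,StatusCode=HTTP_301}'
}

# Example: review the printed commands first, then run DRY_RUN=0 wire_dify_network
```

The dry-run default is deliberate: security group rules and listeners are easy to get subtly wrong, so reading the exact commands before running them is cheap insurance.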

NOTE: Make a note of your RDS login information (endpoint, username, and password). You have to give it to Dify in the .env file, which I explain below.

Installations

- Install docker-compose

Optional: if you are going to pull your RDS credentials from Secrets Manager or Parameter Store, also install the new AWS CLI (steps below).

To update the system and install docker, docker-compose, and git:

```shell
sudo dnf update -y
sudo dnf install -y docker git
# Start the Docker daemon now and on every boot
sudo systemctl enable --now docker
sudo curl -L "https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
```

Make docker-compose executable and add your user to the docker group:

```shell
sudo chmod +x /usr/local/bin/docker-compose
sudo usermod -aG docker ec2-user
# Start a shell with the new group active (or log out and back in)
newgrp docker
```

Optional, install AWS CLI v2:

```shell
sudo dnf install -y unzip
sudo dnf remove -y awscli
# x86_64 build; Graviton (arm64) instances need awscli-exe-linux-aarch64.zip instead
curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
unzip awscliv2.zip
sudo ./aws/install
# Clean up the download and the extracted installer
rm -rf awscliv2.zip aws
sudo ln -s /usr/local/bin/aws /usr/bin/aws
```

Note: I had to add that last ln line to link /usr/local/bin/aws to /usr/bin/aws. For some reason the aws cli commands errored without it, so that's my fix.
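If you installed the CLI to pull your RDS credentials from Secrets Manager, a minimal sketch of that could look like this. It assumes the secret is the JSON kind RDS creates (with `username` and `password` keys) and that `python3` is available for parsing it (it ships with Amazon Linux 2023); the function name and the secret ID are placeholders. It prints `.env` lines instead of writing them, so you can check the output first.

```shell
# Hypothetical helper: turn an RDS secret into DB_USERNAME / DB_PASSWORD lines.
rds_secret_to_env() {
  local secret_id="$1" json
  json="$(aws secretsmanager get-secret-value --secret-id "$secret_id" \
    --query SecretString --output text)" || return 1
  # SecretString is itself JSON; python3 parses it so special characters survive
  printf '%s' "$json" | python3 -c '
import json, sys
secret = json.load(sys.stdin)
print("DB_USERNAME=" + secret["username"])
print("DB_PASSWORD=" + secret["password"])
'
}

# Example (the secret ID is a placeholder):
#   rds_secret_to_env 'my-rds-secret-id'
```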

- Install Dify and set up the DB.

```shell
# Only the client tools (psql, createdb) are needed; the database itself lives in RDS
sudo dnf install -y postgresql15
PGPASSWORD="(INSERT YOUR RDS INSTANCE PASSWORD HERE)" createdb -h (INSERT YOUR RDS DATABASE ENDPOINT HERE) -p 5432 -U (INSERT YOUR USERNAME HERE) dify
```

Note: Don't include the parentheses around the "INSERT..." sections. For example, PGPASSWORD="mypassword".
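The plugin daemon keeps its data in a separate database, `dify_plugin` by default (the `DB_PLUGIN_DATABASE` default in the compose file shown further down). Depending on the plugin daemon version it may create that database itself, but creating it up front does no harm. Here is a sketch that creates each database only when it's missing; `ensure_db` and the `RDS_HOST`, `RDS_USER`, and `RDS_PASSWORD` variables are placeholder names of mine, and it uses only `psql` and `createdb` from the client package.

```shell
# Hypothetical helper: create a database on RDS only if it doesn't exist yet.
# Set RDS_HOST, RDS_USER and RDS_PASSWORD yourself (placeholders, not Dify settings).
# $name goes straight into the SQL, so only pass fixed names like dify / dify_plugin.
ensure_db() {
  local name="$1" exists
  # -tA prints bare values, so the result is "1" when the database exists, else empty
  exists="$(PGPASSWORD="$RDS_PASSWORD" psql -h "$RDS_HOST" -p 5432 -U "$RDS_USER" \
    -d postgres -tAc "SELECT 1 FROM pg_database WHERE datname = '$name'")" || return 1
  if [ "$exists" = "1" ]; then
    echo "database $name already exists"
  else
    PGPASSWORD="$RDS_PASSWORD" createdb -h "$RDS_HOST" -p 5432 -U "$RDS_USER" "$name" || return 1
    echo "created database $name"
  fi
}

# Example: for db in dify dify_plugin; do ensure_db "$db"; done
```

It connects to the default `postgres` database for the existence check, since the database being checked may not exist yet.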

- Clone the repository

```shell
# Run this from /home/ec2-user; the next steps assume /home/ec2-user/dify
git clone https://github.com/langgenius/dify.git
```

Note: If you run into issues with the current release of Dify (such as RDS connection problems that no amount of googling fixes), you may need to downgrade Dify to the last version that worked. To do so:

```shell
cd dify
# e.g. git checkout -b main-1.1.3 refs/tags/1.1.3 for the version this guide uses
git checkout -b main-(VERSION NUMBER HERE) refs/tags/(VERSION NUMBER HERE)
```

- Create the .env file and change the ports

```shell
cd /home/ec2-user/dify/docker
cp .env.example .env
# Expose Dify's nginx on 8080 (HTTP) and 8443 (HTTPS) instead of 80 and 443
sed -i '/EXPOSE_NGINX_PORT=/ s/=.*/=8080/' .env
sed -i '/EXPOSE_NGINX_SSL_PORT=/ s/=.*/=8443/' .env
```

Note: In this example I change the ports Dify is reachable on to 8080 and 8443 instead of the original 80 (HTTP) and 443 (HTTPS). If you don't need that, skip the two sed commands, and point the ALB's target group at port 80 instead of 8080.

- Change the docker-compose.yaml file to use RDS

There are 3 things we need to change in docker-compose.yaml (in the same docker directory).

i. Comment out the "db" block using #:

```yaml
  # The postgres database.
  # db:
  #   image: postgres:15-alpine
  #   restart: always
  #   environment:
  #     PGUSER: ${PGUSER:-postgres}
  #     POSTGRES_PASSWORD: ${POSTGRES_PASSWORD:-difyai123456}
  #     POSTGRES_DB: ${POSTGRES_DB:-dify}
  #     PGDATA: ${PGDATA:-/var/lib/postgresql/data/pgdata}
  #   command: >
  #     postgres -c 'max_connections=${POSTGRES_MAX_CONNECTIONS:-100}'
  #              -c 'shared_buffers=${POSTGRES_SHARED_BUFFERS:-128MB}'
  #              -c 'work_mem=${POSTGRES_WORK_MEM:-4MB}'
  #              -c 'maintenance_work_mem=${POSTGRES_MAINTENANCE_WORK_MEM:-64MB}'
  #              -c 'effective_cache_size=${POSTGRES_EFFECTIVE_CACHE_SIZE:-4096MB}'
  #   volumes:
  #     - ./volumes/db/data:/var/lib/postgresql/data
  #   healthcheck:
  #     test: [ 'CMD', 'pg_isready' ]
  #     interval: 1s
  #     timeout: 3s
  #     retries: 30
```

But why? Because this block runs a local Postgres database, which we don't need now that Dify connects to RDS.

ii. Comment out the db dependency of the api, worker, and plugin daemon services. For the plugin daemon, comment out the whole depends_on block:

```yaml
    # depends_on:
    #   - db
```

For the api and worker, comment out only the "- db" line and leave the rest of their depends_on entries alone.

iii. Require SSL for the plugin daemon by adding the DB_SSL_MODE line (the last line of this snippet) to its environment:

```yaml
  plugin_daemon:
    image: langgenius/dify-plugin-daemon:0.0.6-local
    restart: always
    environment:
      # Use the shared environment variables.
      <<: *shared-api-worker-env
      DB_DATABASE: ${DB_PLUGIN_DATABASE:-dify_plugin}
      DB_SSL_MODE: ${DB_SSL_MODE:-require}
```

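But why? Because RDS for PostgreSQL 15 and later rejects unencrypted connections by default (the rds.force_ssl parameter), so without this line the plugin daemon's connection to RDS errors out when you start Dify.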
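Before starting anything, you can let docker-compose check the edited file: `docker-compose config` parses and resolves it, and should refuse a service that still depends on the removed `db` service. The sketch below also confirms that `db` is gone from the service list. The function name is hypothetical, and `COMPOSE` is an assumption of mine that lets you swap in `docker compose` if you use the Compose plugin instead of the standalone binary.

```shell
# Hypothetical helper: validate docker-compose.yaml after the RDS edits.
verify_compose() {
  local file="${1:-docker-compose.yaml}" services
  # Intentionally unquoted so COMPOSE="docker compose" splits into two words
  services="$(${COMPOSE:-docker-compose} -f "$file" config --services)" || return 1
  if printf '%s\n' "$services" | grep -qx 'db'; then
    echo "the local db service is still defined in $file" >&2
    return 1
  fi
  echo "OK, services: $(printf '%s\n' "$services" | paste -sd ' ' -)"
}

# Example, from /home/ec2-user/dify/docker:
#   verify_compose docker-compose.yaml
```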

- Configure the vector store and database in the .env file

Which vector store you use is up to you. If you want to keep the default, Weaviate, just leave the vector store section of .env alone. You can also use pgvector with RDS, and I explain how below. Note, though, that I am not a master of vector stores and have only used pgvector this one time, so do your own research and make an informed decision.

First and foremost, what the heck is a vector?

A vector embedding is, simply put, a list of numbers that represents the meaning of a word, sentence, or other piece of text. A vector store stores those numbers and lets us search not only for exact matches to words and sentences but also for similar meanings. So when we give Dify a knowledge base, it stores that knowledge, along with embeddings of its meaning, in a vector store. Then, when we ask a question, Dify searches the vector store for content with a similar meaning.
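To make "searching for meaning" a bit more concrete: one common way to compare two embeddings is cosine similarity, and pgvector exposes the matching cosine distance as its `<=>` operator. The toy function below (plain awk, hypothetical name) computes it for short made-up vectors. Real embeddings have hundreds or thousands of dimensions, but the arithmetic is the same; this sketch makes no claim about which metric Dify itself uses.

```shell
# Toy example: cosine similarity of two space-separated vectors.
# 1 means same direction (similar meaning), 0 means unrelated.
cosine_similarity() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    n = split(a, x, " "); m = split(b, y, " ")
    if (n != m) { print "vectors differ in length" > "/dev/stderr"; exit 1 }
    for (i = 1; i <= n; i++) {
      dot += x[i] * y[i]; na += x[i] * x[i]; nb += y[i] * y[i]
    }
    if (na == 0 || nb == 0) { print "zero vector" > "/dev/stderr"; exit 1 }
    printf "%.4f\n", dot / (sqrt(na) * sqrt(nb))
  }'
}

# Example: cosine_similarity "0.9 0.1 0.0" "0.8 0.2 0.1"   # prints 0.9838
#          cosine_similarity "0.9 0.1 0.0" "0.0 0.1 0.9"   # prints 0.0122
```

The first pair points in nearly the same direction, so it scores close to 1; the second pair shares almost nothing, so it scores close to 0.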

On to the pgvector setup:

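To let RDS act as a vector store, enable the pgvector extension by running this command on the RDS instance with psql or pgAdmin. Make sure you are connected to the database Dify will use for vectors ("dify" by default), because extensions are installed per database.

```sql
CREATE EXTENSION IF NOT EXISTS vector;
```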
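Since the psql client is already on the EC2 instance, you can also enable the extension from there and confirm it took. A sketch with a hypothetical function name and placeholder `RDS_HOST`, `RDS_USER`, and `RDS_PASSWORD` variables that you set yourself; it connects to the database you pass in (default `dify`), because that is the database the extension gets installed into.

```shell
# Hypothetical helper: enable pgvector in one database and print its version.
# RDS_HOST, RDS_USER and RDS_PASSWORD are placeholders you set yourself.
enable_pgvector() {
  local db="${1:-dify}"
  PGPASSWORD="$RDS_PASSWORD" psql -h "$RDS_HOST" -p 5432 -U "$RDS_USER" -d "$db" \
    -c 'CREATE EXTENSION IF NOT EXISTS vector;' || return 1
  # pg_extension lists the extensions installed in the connected database
  PGPASSWORD="$RDS_PASSWORD" psql -h "$RDS_HOST" -p 5432 -U "$RDS_USER" -d "$db" \
    -tAc "SELECT extversion FROM pg_extension WHERE extname = 'vector'"
}

# Example: enable_pgvector dify
```

An empty result from the second query means the extension is not installed in that database.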

Once that's done, open .env in your favorite editor and change the following variables to match your setup. Note that the default pgvector database name is "dify", the same as the Dify database we created. Using the same database should be safe, since Dify keeps its vector data in its own tables; the pgvector extension just lets the database store and search vector embeddings.

```
VECTOR_STORE=pgvector
PGVECTOR_HOST=(RDS ENDPOINT HERE)
PGVECTOR_PORT=5432
PGVECTOR_USER=(RDS USERNAME HERE)
PGVECTOR_PASSWORD=(RDS PASSWORD HERE)
# Change this if you create a separate database for vectors
PGVECTOR_DATABASE=dify
PGVECTOR_MIN_CONNECTION=1
PGVECTOR_MAX_CONNECTION=5
PGVECTOR_PG_BIGM=false
PGVECTOR_PG_BIGM_VERSION=1.2-20240606
```

Next, give the .env file the RDS information for the regular Dify database (not the vector store, but the one that stores user data and such):

```
DB_USERNAME=(RDS USERNAME HERE)
DB_PASSWORD=(RDS PASSWORD HERE)
DB_HOST=(RDS ENDPOINT HERE)
# The port of your RDS instance
DB_PORT=5432
# The name of your database
DB_DATABASE=dify
DB_SSL_MODE=require
```

Note: DB_SSL_MODE is not one of the existing DB variables, so you have to add the DB_SSL_MODE=require line yourself, underneath them!!
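If you'd rather not edit .env by hand, or want to re-run the setup later, a small helper can set each variable: it replaces the line if the key already exists and appends it otherwise (so DB_SSL_MODE ends up at the end of the file, which docker-compose reads the same as anywhere else). This is a sketch; `set_env` is a hypothetical name and the values in the example comments are placeholders. Values are taken literally, so characters like `/` and `&` in a password are safe, unlike with a plain `sed` substitution.

```shell
# Hypothetical helper: set KEY=VALUE in an env file, replacing or appending.
set_env() {
  local key="$1" value="$2" file="${3:-.env}" tmp
  tmp="$(mktemp)" || return 1
  # Values go through ENVIRON rather than -v so awk does not treat backslashes as escapes
  K="$key" V="$value" awk '
    index($0, ENVIRON["K"] "=") == 1 { print ENVIRON["K"] "=" ENVIRON["V"]; found = 1; next }
    { print }
    END { if (!found) print ENVIRON["K"] "=" ENVIRON["V"] }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back through cat so the original file keeps its owner and permissions
  cat "$tmp" > "$file" && rm -f "$tmp"
}

# Example, from /home/ec2-user/dify/docker (values are placeholders):
#   set_env DB_HOST 'your-rds-endpoint'
#   set_env DB_USERNAME 'your-username'
#   set_env DB_PASSWORD 'your-password'
#   set_env DB_SSL_MODE require
#   set_env VECTOR_STORE pgvector
```

Only lines that start with the key are touched, so commented-out examples in .env are left alone.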

- Start up Dify

```shell
docker-compose -f docker-compose.yaml up -d
```

Note: If you aren't in the dify/docker directory, provide the path to docker-compose.yaml; the -f flag tells docker-compose which file to use. The -d flag runs the containers in the background. Without it, you'll have to stare at the containers running in your terminal all day to keep them alive.

Congratulations on making it this far. I pray that your Dify deployment worked the first time. Be careful, though: you should check the docker-compose logs for any errors.

```shell
docker-compose -f docker-compose.yaml logs
```
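Because -d returns right away, the containers may still be starting when your prompt comes back. Here is a sketch of a readiness check, assuming Dify's nginx is exposed on port 8080 as set earlier; the function name is hypothetical. It only checks that nginx answers without an HTTP error, not that every service is healthy, so the logs are still the place to look for problems.

```shell
# Hypothetical helper: poll Dify's nginx until it answers or we give up.
wait_for_dify() {
  local url="${1:-http://localhost:8080}" timeout="${2:-120}" waited=0
  # -f: treat HTTP errors (4xx/5xx) as failures; -sS: quiet, but still show errors
  until curl -fsS -o /dev/null "$url"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Dify did not answer at $url within ${timeout}s" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
  echo "Dify is answering at $url"
}

# Example: wait_for_dify http://localhost:8080 180
```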

Troubleshooting

The most common errors in my experience are DB related errors. If you get an error saying "no encryption" for the connection to your RDS instance, then that means something is wrong with the SSL require line we added above (DB_SSL_MODE=require and DB_SSL_MODE: ${DB_SSL_MODE:-require}).
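Two quick checks can narrow that error down. This is a sketch: the function name is hypothetical, the `DB_*` shell variables are placeholders you'd fill with the same values as in .env, and the first check assumes `docker-compose config` prints environment entries as `KEY: value` (if the grep finds nothing, look at the raw output). `pg_stat_ssl` is a standard Postgres view that reports whether the current connection is encrypted.

```shell
# Hypothetical helper: check where DB_SSL_MODE=require might be getting lost.
check_rds_ssl() {
  # 1) Does the fully resolved compose file pass require to the containers?
  #    (Intentionally unquoted so COMPOSE="docker compose" also works.)
  if ${COMPOSE:-docker-compose} -f docker-compose.yaml config | grep -q 'DB_SSL_MODE: require'; then
    echo "compose: DB_SSL_MODE is require"
  else
    echo "compose: DB_SSL_MODE: require not found in the resolved config" >&2
  fi
  # 2) Can this host open an encrypted connection to RDS? Prints t when it can.
  PGPASSWORD="$DB_PASSWORD" psql \
    "host=$DB_HOST port=${DB_PORT:-5432} dbname=$DB_DATABASE user=$DB_USERNAME sslmode=require" \
    -tAc 'SELECT ssl FROM pg_stat_ssl WHERE pid = pg_backend_pid()'
}

# Example, from /home/ec2-user/dify/docker: check_rds_ssl
```

If the second check prints t but the first one complains, the problem is in the compose file or .env rather than in RDS.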

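If you run into strange errors that don't point at much, check the instance's storage and memory. Check the EBS volume with df -hT, and memory with free -h.

If docker-compose up -d fails because the disk is full (for example with "no space left on device"), you either need to increase the EBS volume size or delete old Docker images you aren't using. (A real OOM, out of memory, error is about RAM instead, and a bigger instance type is the fix for that.) To delete unused images, run:

```shell
docker image prune -a -f
```

You could also try pruning unused volumes. Anything stored in them is deleted, so make sure nothing you need lives there:

```shell
docker volume prune -f
```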
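A small sketch that puts those checks together: it reads how full the root volume is from `df` and, past a threshold you choose (85% here, an arbitrary default), shows Docker's own breakdown with `docker system df` so you can see whether images, containers, or volumes are taking the space. Both function names are hypothetical.

```shell
# Hypothetical helper: print the root volume's usage as a bare number (e.g. 42).
root_disk_pct() {
  # -P gives POSIX output (one line per filesystem); column 5 is "Use%"
  df -P / | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Hypothetical helper: warn (and return 1) when the root volume passes a limit.
check_disk() {
  local limit="${1:-85}" pct
  pct="$(root_disk_pct)" || return 1
  echo "root volume is ${pct}% full"
  if [ "$pct" -ge "$limit" ]; then
    echo "above ${limit}%: consider pruning Docker images or growing the EBS volume" >&2
    # Breaks usage down into images, containers, local volumes and build cache
    if command -v docker >/dev/null 2>&1; then docker system df; fi
    return 1
  fi
}

# Example: check_disk 85
```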

For more troubleshooting help, feel free to comment and if I can then I will try to help find the cause.

If you have any questions, concerns, or tips then please leave them in the comments as well! As I stated before, I am a new engineer with only half a year under my belt.
