MCP – Model Context Protocol: Standardizing AI-Data Access


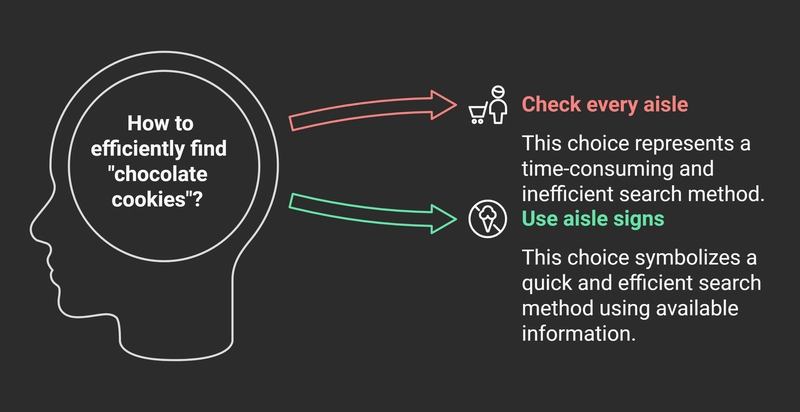

The world of Generative AI and AI Agents is evolving rapidly, increasing the demand for seamless integration between AI-powered applications and external data sources such as databases, APIs, and file systems.

Anthropic's Model Context Protocol (MCP) is an open standard designed to bridge this gap by enabling structured, two-way communication between AI applications and data sources. It replaces fragmented, one-off integrations with a single, standardized protocol.

One of MCP’s most important features is its separation of concerns: the tools, resources, and prompts exposed by an MCP server can be reused across different applications rather than being tightly coupled to a single AI application or agent.

What is MCP?

At its core, MCP standardizes how AI applications access and interact with external data. Developers can:

- Expose their data through MCP servers

- Build AI applications (MCP clients) that connect to these servers

Anthropic’s Claude Desktop was the first AI-powered application to implement an MCP client, enabling it to connect to various MCP servers.

Key Components of MCP

MCP consists of three primary building blocks:

- MCP Hosts – AI-powered applications that need access to external data via MCP (e.g., Claude Desktop).

- MCP Clients – Protocol clients that run inside the host and bridge the AI application to MCP servers; each client maintains a one-to-one connection with a single server.

- MCP Servers – Applications or services that expose capabilities (tools, resources, and prompts) via MCP. They can be written in Python, Go, Java, JavaScript, and other languages, and serve many purposes, such as a FastMCP-based server for calculations (like the example below) or for user profile management.

MCP Communication Models

MCP supports two transport models, both using JSON-RPC 2.0 for structured data exchange:

- STDIO (Standard Input/Output) – Uses local input/output streams, making it ideal for local integrations.

- SSE (Server-Sent Events) – Works over HTTP, allowing remote AI applications to interact with MCP servers through SSE (server-to-client) and POST (client-to-server) requests.
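Whichever transport is used, the payloads are the same JSON-RPC 2.0 messages. The sketch below builds a few of the messages a client sends to the math server shown later in this post. The method names (`notifications/initialized`, `tools/list`, `tools/call`) and the `name`/`arguments` params follow the MCP specification; the helper functions and the id counter are hypothetical, and the `initialize` request itself is omitted for brevity.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

# Hypothetical id source: request ids only need to be unique within a session.
_ids = count(1)


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request; the server must answer it with a response."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_notification(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 notification: no id, and no response is expected."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        message["params"] = params
    return message


# Sent by the client once the initialize handshake has completed.
initialized = make_notification("notifications/initialized")
# Ask the server which tools it offers.
list_tools = make_request("tools/list")
# Invoke the math server's `add` tool with named arguments.
call_add = make_request("tools/call", {"name": "add", "arguments": {"a": 5000, "b": 18}})

print(json.dumps(call_add))
```

Requests carry an `id` so the response can be matched to them; notifications deliberately do not.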

Getting Started with MCP

In this blog, we'll focus on STDIO-based MCP servers, which are locally hosted and perfect for local AI integrations. If you’re developing remote AI applications, the SSE model provides a more scalable alternative.
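With STDIO, the client launches the server as a subprocess and writes to its stdin and reads from its stdout, one JSON-RPC message per line (the MCP specification forbids embedded newlines in a message). The stdlib-only sketch below shows that newline-delimited framing using an in-memory stream in place of a real pipe; the function names and the `echo` tool are hypothetical, and this is not the SDK's implementation.

```python
import io
import json
from typing import Any, Dict, Iterator, TextIO


def write_message(stream: TextIO, message: Dict[str, Any]) -> None:
    """Serialize one message as a single line of JSON followed by a newline."""
    # json.dumps escapes newlines inside strings as "\n", so the output stays on one line.
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[Dict[str, Any]]:
    """Yield one decoded message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# An in-memory buffer stands in for the server's stdin pipe.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                     "params": {"name": "add", "arguments": {"a": 1, "b": 2}}})
# Hypothetical "echo" tool whose argument contains a newline.
write_message(pipe, {"jsonrpc": "2.0", "id": 3, "method": "tools/call",
                     "params": {"name": "echo", "arguments": {"text": "line1\nline2"}}})

pipe.seek(0)
received = list(read_messages(pipe))
print(f"received {len(received)} messages")
```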

MCP is paving the way for structured, secure, and efficient AI-data interactions. As AI-powered applications become more embedded in our workflows, standards like MCP will be essential for ensuring seamless interoperability across different systems.

MCP Architecture

MCP Server/Client Implementation in Python

Required Python packages (install with pip):

langchain-mcp-adapters==0.0.2

langchain-openai==0.3.7

langgraph

python-dotenv
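Before running the examples, it can help to confirm that the packages they import are actually installed. A small stdlib-only sketch using `importlib.metadata`; the package list reflects the imports used in this post and is an assumption you may need to adjust.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, List, Optional

# Distribution names as installed with pip (assumed from the imports in this post).
REQUIRED: List[str] = ["langchain-mcp-adapters", "langchain-openai", "langgraph", "python-dotenv"]


def installed_versions(packages: List[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if it is missing."""
    found: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions(REQUIRED).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```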

MCP Server

# math_server.py
from mcp.server.fastmcp import FastMCP

mcp = FastMCP("Math")

@mcp.tool()
def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b

@mcp.tool()
def multiply(a: int, b: int) -> int:
    """Multiply two numbers"""
    return a * b

if __name__ == "__main__":
    # run() uses the STDIO transport by default
    mcp.run()
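FastMCP derives each tool's name, description, and input schema from the decorated function's name, docstring, and type hints, and answers the `tools/list` and `tools/call` requests for you. The stdlib-only toy below imitates that idea for the same two functions so the mechanics are visible. It is a simplified illustration, not how the SDK is implemented: the `tool` decorator and `handle` function are hypothetical, and the schema generation simply assumes every parameter is an integer.

```python
import inspect
from typing import Any, Callable, Dict

# Registry of tool functions keyed by name (toy stand-in for FastMCP's tool manager).
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Toy registration decorator: record the function and return it unchanged."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b


@tool
def multiply(a: int, b: int) -> int:
    """Multiply two numbers"""
    return a * b


def describe(fn: Callable[..., Any]) -> Dict[str, Any]:
    """Build a tools/list entry; this toy assumes every parameter is an int."""
    params = inspect.signature(fn).parameters
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {
            "type": "object",
            "properties": {p: {"type": "integer"} for p in params},
            "required": list(params),
        },
    }


def handle(request: Dict[str, Any]) -> Dict[str, Any]:
    """Answer tools/list and tools/call requests with JSON-RPC 2.0 responses."""
    method = request.get("method")
    if method == "tools/list":
        result: Dict[str, Any] = {"tools": [describe(fn) for fn in TOOLS.values()]}
    elif method == "tools/call":
        params = request["params"]
        value = TOOLS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        # -32601 is the JSON-RPC 2.0 "Method not found" error code.
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "error": {"code": -32601, "message": f"Method not found: {method}"}}
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": result}


if __name__ == "__main__":
    print(handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                  "params": {"name": "add", "arguments": {"a": 5000, "b": 18}}}))
```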

MCP Host and Client

import asyncio
from typing import Dict, List

from dotenv import load_dotenv
from langchain_core.messages import AIMessage, BaseMessage
from langchain_mcp_adapters.tools import load_mcp_tools
from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client


def extract_ai_message_content(response: Dict[str, List[BaseMessage]]) -> str:
    # Walk backwards so we return the agent's final answer, not an intermediate message
    for message in reversed(response.get("messages", [])):
        if isinstance(message, AIMessage) and message.content:
            return message.content
    return ""  # Return empty string if no non-empty AIMessage is found


load_dotenv()  # Load environment variables (e.g. OPENAI_API_KEY) from a .env file
model = ChatOpenAI(model="gpt-4o")

# Create server parameters for stdio connection
server_params = StdioServerParameters(
    command="python",
    # Make sure to update to the full absolute path to your math_server.py file
    args=["math_server.py"],
)


async def main():
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(read, write) as session:
            # Initialize the connection
            await session.initialize()

            # Get tools
            tools = await load_mcp_tools(session)

            # Create and run the agent
            agent = create_react_agent(model, tools)
            agent_response = await agent.ainvoke({"messages": "what's (5000 + 18) then calculate 12x12 ?"})
            print(extract_ai_message_content(agent_response))


if __name__ == "__main__":
    asyncio.run(main())
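Each tool call the agent makes in this example is answered with a `tools/call` result whose `content` is a list of typed blocks, plus an `isError` flag. The stdlib-only sketch below pulls the text out of results shaped that way; the helper name is hypothetical, and the two sample results are illustrative assumptions about what the math server returns for the question above, not captured output.

```python
from typing import Any, Dict


def tool_result_text(result: Dict[str, Any]) -> str:
    """Join the text blocks of a tools/call result; raise if the tool reported an error."""
    # Non-text blocks (for example images) are skipped in this sketch.
    texts = [block.get("text", "") for block in result.get("content", [])
             if block.get("type") == "text"]
    joined = "\n".join(texts)
    if result.get("isError"):
        raise RuntimeError(f"Tool error: {joined}")
    return joined


# Illustrative results for add(5000, 18) and multiply(12, 12).
add_result = {"content": [{"type": "text", "text": "5018"}], "isError": False}
mul_result = {"content": [{"type": "text", "text": "144"}], "isError": False}

print(tool_result_text(add_result), tool_result_text(mul_result))
```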

Output

How to Use Prebuilt MCP Servers

Here I’ll show how to use the open-source Filesystem MCP server from an agent.

import asyncio
import os
from typing import Dict, List

from dotenv import load_dotenv
from langchain_core.messages import AIMessage, BaseMessage
from langchain_mcp_adapters.client import MultiServerMCPClient
from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent


def extract_ai_message_content(response: Dict[str, List[BaseMessage]]) -> str:
    # Walk backwards so we return the agent's final answer, not an intermediate message
    for message in reversed(response.get("messages", [])):
        if isinstance(message, AIMessage) and message.content:
            return message.content
    return ""  # Return empty string if no non-empty AIMessage is found


load_dotenv()
model = ChatOpenAI(model="gpt-4o")


async def run_dynamic_directory_agent(directory):
    async with MultiServerMCPClient() as client:
        # Launch the Filesystem MCP server via npx (requires Node.js);
        # the server only allows access to the directory passed as an argument
        await client.connect_to_server(
            "filesystem",
            command="npx",
            args=["-y", "@modelcontextprotocol/server-filesystem", directory],
        )
        agent = create_react_agent(model, client.get_tools())
        response = await agent.ainvoke({"messages": f"List files in the directory: {directory}"})
        print(extract_ai_message_content(response))


def get_user_directory():
    # Keep prompting until the user enters an existing directory (or presses Enter)
    while True:
        directory = input("Enter the directory path (or press Enter for current directory): ").strip()
        if directory == "":
            return os.getcwd()
        elif os.path.isdir(directory):
            return os.path.abspath(directory)
        else:
            print("Invalid directory. Please try again.")


def main():
    user_directory = get_user_directory()
    print(f"Using directory: {user_directory}")
    asyncio.run(run_dynamic_directory_agent(user_directory))


if __name__ == "__main__":
    main()
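The same prebuilt server can also be registered with Claude Desktop, which reads an `mcpServers` map from its `claude_desktop_config.json` file. The sketch below generates such an entry with the same `npx` command and arguments used above; the function name is hypothetical, and because the config file's location depends on the operating system, the script only prints the JSON rather than writing it.

```python
import json
import os
from typing import Any, Dict, List


def filesystem_server_config(directories: List[str]) -> Dict[str, Any]:
    """Build an mcpServers entry that launches the Filesystem server via npx."""
    return {
        "mcpServers": {
            "filesystem": {
                "command": "npx",
                # Each trailing argument is a directory the server is allowed to access.
                "args": ["-y", "@modelcontextprotocol/server-filesystem",
                         *[os.path.abspath(d) for d in directories]],
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(filesystem_server_config([os.getcwd()]), indent=2))
```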

Here you can find a curated collection of prebuilt MCP servers that can be used in AI agents or LLM-powered applications:

https://github.com/punkpeye/awesome-mcp-servers

Thanks

Sreeni Ramadorai
