Larger AI Models Like GPT-4 Better at Compressing Their Own Reasoning, Study Shows

This is a Plain English Papers summary of a research paper called Larger AI Models Like GPT-4 Better at Compressing Their Own Reasoning, Study Shows. If you like this kind of analysis, you can join AImodels.fyi or follow us on Twitter.

Overview

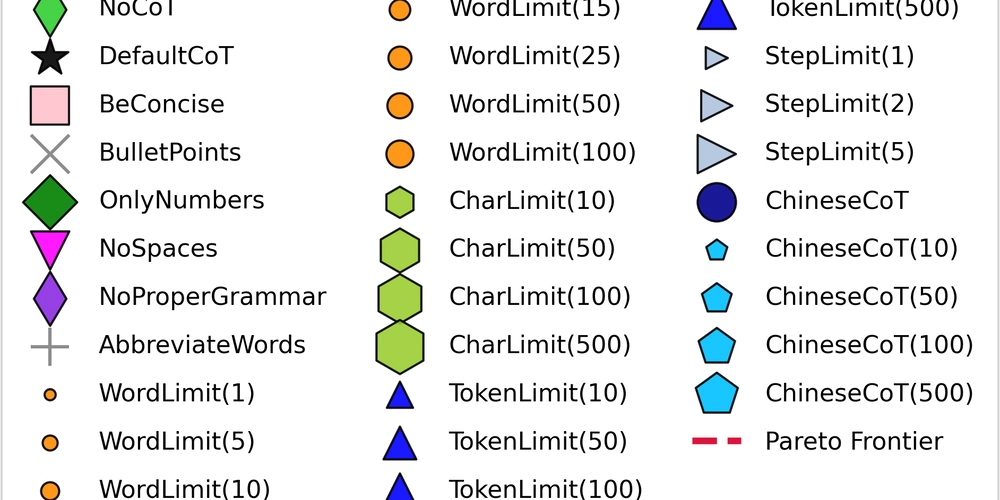

- Research examines how well LLMs compress their own reasoning

- Introduces token complexity to measure compression effectiveness

- Shows LLMs struggle to efficiently compress their own reasoning

- Claude and GPT-4 have better self-compression than smaller models

- Compression ability correlates with reasoning performance

- Chain-of-Thought increases token usage but improves accuracy

Plain English Explanation

When we solve problems, we often think through steps before arriving at an answer. Large language models (LLMs) like GPT-4 and Claude do this too, in a process called Chain-of-Thought (CoT) reasoning. But this thinking takes up valuable space: each word, or "token", costs comput...
