How I Reduced My Docker Image Size by 80% With One Simple Trick

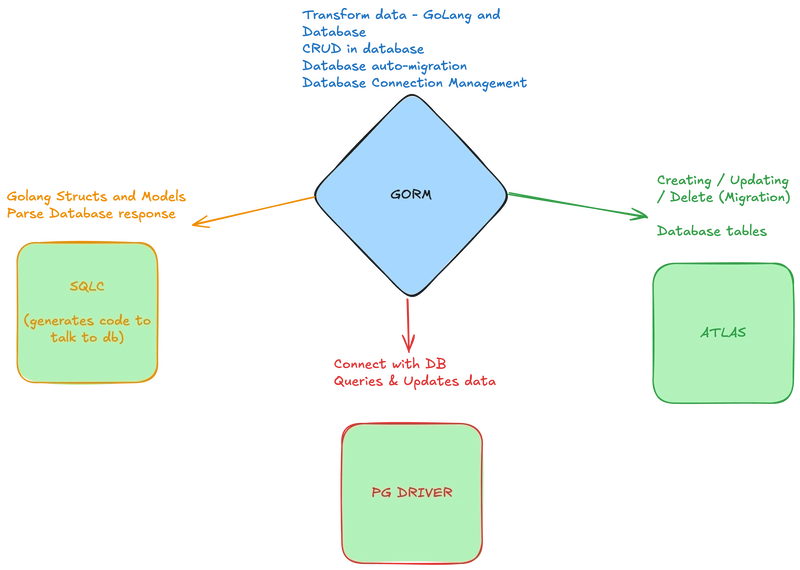

Docker is an incredible tool for containerizing applications, but bloated images can slow down deployments, increase storage costs, and even introduce security risks.

A few months ago, I discovered that one of my Docker images was over 1.2GB. Ridiculous for a simple Python microservice!

After some research and experimentation, I managed to shrink it down to just 230MB, an 80% reduction without sacrificing functionality. Here’s how I did it.

The Problem: Why Are Docker Images So Big?

When I first ran:

```bash
docker images
```

I saw my image was massive:

```
REPOSITORY      TAG      SIZE
my-python-app   latest   1.21GB
```

This was shocking. My app was just a Flask API with a few dependencies. So, I dug deeper.

Common Culprits of Docker Bloat

- Using a heavy base image (`python:3.9` instead of `python:3.9-slim`)
- Including unnecessary files (cache, logs, dev dependencies)
- Not using multi-stage builds (leaving build-time dependencies in the final image)
- Copying entire directories (`.git`, `__pycache__`, `node_modules`, etc.)
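To see which of these culprits are actually present in a project, a quick scan of the build context helps. Here's a minimal Python sketch that walks a directory and reports the size of any `.git`, `__pycache__`, and `node_modules` directories plus `*.log` files. The list of names is an assumption drawn from the culprits above, so extend it for your own project.

```python
"""Report how many bytes common bloat sources occupy in a build context."""
import os
import sys
from pathlib import Path

# Assumed list of bloat sources, mirroring the culprits above.
BLOAT_DIRS = {".git", "__pycache__", "node_modules"}
BLOAT_SUFFIXES = {".log"}


def dir_size(path: Path) -> int:
    """Total size in bytes of all regular files under path (symlinks skipped)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            fp = Path(root) / name
            if fp.is_file() and not fp.is_symlink():
                total += fp.stat().st_size
    return total


def find_bloat(context: Path) -> dict[str, int]:
    """Map each bloat directory or file (POSIX path relative to context) to its size."""
    found: dict[str, int] = {}
    for root, dirs, files in os.walk(context):
        root_path = Path(root)
        for d in list(dirs):
            if d in BLOAT_DIRS:
                found[(root_path / d).relative_to(context).as_posix()] = dir_size(root_path / d)
                dirs.remove(d)  # already measured, so don't descend into it
        for f in files:
            fp = root_path / f
            if fp.suffix in BLOAT_SUFFIXES and fp.is_file():
                found[fp.relative_to(context).as_posix()] = fp.stat().st_size
    return found


if __name__ == "__main__":
    ctx = Path(sys.argv[1] if len(sys.argv) > 1 else ".")
    # Largest offenders first.
    for rel, size in sorted(find_bloat(ctx).items(), key=lambda kv: -kv[1]):
        print(f"{size / 1e6:8.2f} MB  {rel}")
```

Run it from your project directory (for example `python find_bloat.py .`, where the filename is hypothetical). The largest entries are the first candidates to keep out of the image.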

The Solution: Optimizing the Dockerfile

1. Start With a Slimmer Base Image

Originally, I used:

```dockerfile
FROM python:3.9
```

This pulls the full Python image, which includes many tools I didn’t need (such as gcc and make). I switched to:

```dockerfile
FROM python:3.9-slim
```

This alone reduced the image size by over 50%.

2. Use Multi-Stage Builds

For packages that require build tools (like gcc), use a multi-stage build to keep them out of the final image:

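```dockerfile
# Stage 1: Build dependencies (the full image includes gcc, make, etc.)
FROM python:3.9 AS builder
WORKDIR /app
COPY requirements.txt .
# --user installs packages into /root/.local so they can be copied out as one directory
RUN pip install --user --no-cache-dir -r requirements.txt

# Stage 2: Runtime image
FROM python:3.9-slim
WORKDIR /app
# Bring over only the installed packages, not the build toolchain
COPY --from=builder /root/.local /root/.local
COPY app.py .
# Make console scripts installed with --user available on PATH
ENV PATH=/root/.local/bin:$PATH
CMD ["python", "app.py"]
```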

Now the final image contains only the installed dependencies and the app itself, with no compilers or build tools.
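One way to confirm this is to look for build tools from inside the running container. A one-liner such as `docker run --rm my-python-app python -c "import shutil; print(shutil.which('gcc'))"` should print `None` for an image built on `python:3.9-slim`. The slightly longer sketch below checks several tools at once; the tool list is an assumption, and the script would need to be copied into the image (or its body passed to `python -c`) to run there.

```python
"""Sanity-check which common build tools are reachable on PATH."""
import shutil
from typing import Optional

# Assumed list of tools that the full python:3.9 image provides; extend as needed.
BUILD_TOOLS = ("gcc", "g++", "make", "cc")


def present_build_tools(path: Optional[str] = None) -> list[str]:
    """Return the build tools found on PATH, or on `path` if one is given."""
    return [tool for tool in BUILD_TOOLS if shutil.which(tool, path=path) is not None]


if __name__ == "__main__":
    found = present_build_tools()
    print("Build tools on PATH:", ", ".join(found) if found else "none")
```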

3. Clean Up After Installing Packages

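Even with `--no-cache-dir`, it's a good idea to remove temporary files if your build process creates any. Do the cleanup in the same `RUN` instruction that creates the files: a separate `RUN rm -rf ...` only hides them in a new layer, and the earlier layer still carries their full size.

```dockerfile
RUN pip install --no-cache-dir -r requirements.txt \
    && rm -rf /tmp/* /var/tmp/*
```

In most cases, this step is optional but good hygiene if you're generating any custom build artifacts.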
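Where the cleanup happens matters because every `RUN` instruction writes a new layer and the image ships all of its layers. The toy Python model below compares a cleanup in its own step with one chained onto the install step. It is a simplification (real layers are tar archives, and deletions are recorded as whiteout marker files), and the sizes are made up for illustration.

```python
"""Toy model: why a cleanup in a separate layer does not shrink an image."""
from dataclasses import dataclass, field


@dataclass
class Layer:
    """One image layer: files it writes and earlier paths it marks as deleted."""
    added: dict[str, int] = field(default_factory=dict)  # path -> size in bytes
    deleted: set[str] = field(default_factory=set)       # whiteouts for earlier files


def stored_bytes(layers: list[Layer]) -> int:
    """Bytes shipped with the image: all additions; deletions never subtract."""
    return sum(sum(layer.added.values()) for layer in layers)


def visible_files(layers: list[Layer]) -> dict[str, int]:
    """Filesystem a container sees after stacking the layers in order."""
    fs: dict[str, int] = {}
    for layer in layers:
        for path in layer.deleted:
            fs.pop(path, None)
        fs.update(layer.added)
    return fs


# Made-up sizes: 50 bytes of installed packages, 200 bytes of temporary build files.
SEPARATE_CLEANUP = [
    Layer(added={"/app/pkg": 50, "/tmp/build": 200}),  # RUN <install step>
    Layer(deleted={"/tmp/build"}),                      # RUN rm -rf /tmp/*
]
CHAINED_CLEANUP = [
    Layer(added={"/app/pkg": 50}),  # RUN <install step> && rm -rf /tmp/*
]

if __name__ == "__main__":
    for name, layers in [("separate", SEPARATE_CLEANUP), ("chained", CHAINED_CLEANUP)]:
        print(name, stored_bytes(layers), visible_files(layers))
```

Both variants leave the same files visible inside the container, but only the chained version avoids shipping the 200 bytes of temporary files.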

4. Be Selective With COPY

Instead of:

```dockerfile
COPY . .
```

Use:

```dockerfile
COPY app.py requirements.txt /app/
```

This avoids accidentally including large or sensitive files like `.git`, `.env`, or `__pycache__`.

5. Use a .dockerignore File

To make sure unnecessary files don’t sneak into the build context, use a `.dockerignore` file:

```
.git
# **/ matches at any depth; a bare name only matches at the context root
**/__pycache__
**/*.log
.env
```

You can exclude things like `README.md` or `Dockerfile` too, but only if you know they're not needed during the build.
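Keep in mind that `.dockerignore` patterns are matched relative to the root of the build context: a bare `__pycache__` only excludes a top-level directory, while `**/__pycache__` excludes it at any depth. To sanity-check a pattern list before building, here's a rough Python approximation. It is not Docker's actual matcher: it ignores `!` exception rules and other edge cases, and it assumes `*` and `?` match within a single path segment.

```python
"""Rough approximation of .dockerignore matching for checking patterns locally."""
from fnmatch import fnmatchcase


def parse_dockerignore(text: str) -> list[str]:
    """Return the non-empty, non-comment patterns from .dockerignore text."""
    patterns = []
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            patterns.append(line)
    return patterns


def _match(parts: list[str], pat: list[str]) -> bool:
    """Match path segments against pattern segments; '**' spans any number of dirs."""
    if not pat:
        return not parts
    if pat[0] == "**":
        # '**' may consume zero or more leading segments.
        return any(_match(parts[i:], pat[1:]) for i in range(len(parts) + 1))
    return bool(parts) and fnmatchcase(parts[0], pat[0]) and _match(parts[1:], pat[1:])


def is_ignored(rel_path: str, patterns: list[str]) -> bool:
    """True if rel_path (relative to the context root) or a parent dir matches."""
    parts = rel_path.strip("/").split("/")
    for pattern in patterns:
        pat = pattern.strip("/").split("/")
        # Excluding a directory excludes everything beneath it, so test each prefix.
        if any(_match(parts[:i], pat) for i in range(1, len(parts) + 1)):
            return True
    return False
```

For example, with the patterns `.git`, `__pycache__`, `*.log`, and `.env`, the path `src/__pycache__/x.pyc` is not excluded, while adding `**/__pycache__` catches it.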

The Final Result

After applying these changes:

```bash
docker images
```

Now shows:

```
REPOSITORY      TAG      SIZE
my-python-app   latest   230MB
```

From 1.2GB to 230MB: an 80% reduction.
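The headline figure is easy to double-check. The Docker CLI reports sizes in decimal units (1GB = 1000MB), so a small Python helper can parse the two `SIZE` values and compute the reduction. This is a sketch that only understands the B, kB, MB, GB, and TB suffixes.

```python
"""Compute the percentage size reduction between two `docker images` SIZE values."""
import re

# Decimal multipliers, matching how the Docker CLI formats image sizes.
UNITS = {"B": 1, "KB": 1e3, "MB": 1e6, "GB": 1e9, "TB": 1e12}


def parse_size(text: str) -> float:
    """Convert a size string such as '1.21GB' or '230MB' to bytes."""
    m = re.fullmatch(r"\s*([\d.]+)\s*([KMGT]?B)\s*", text, flags=re.IGNORECASE)
    if m is None:
        raise ValueError(f"unrecognized size: {text!r}")
    return float(m.group(1)) * UNITS[m.group(2).upper()]


def reduction_percent(before: str, after: str) -> float:
    """Percentage by which `after` is smaller than `before`."""
    b, a = parse_size(before), parse_size(after)
    return (b - a) / b * 100


if __name__ == "__main__":
    print(f"{reduction_percent('1.21GB', '230MB'):.1f}%")  # prints 81.0%
```

Measured against the exact 1.21GB, the reduction is just under 81%, so "80%" is a fair round number.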

Key Takeaways

- Use `-slim` or `-alpine` base images when possible.
- Multi-stage builds keep your final image clean.
- Clean up caches and temp files when necessary, in the same `RUN` step that creates them.
- Be deliberate with what you `COPY`.
- Use `.dockerignore` to avoid accidental bloat.

Smaller images mean faster deployments, lower cloud costs, and better security (fewer packages = smaller attack surface).
