Advances in Robotics: Safety, Generalization, and Human-Robot Interaction in 2025 Research

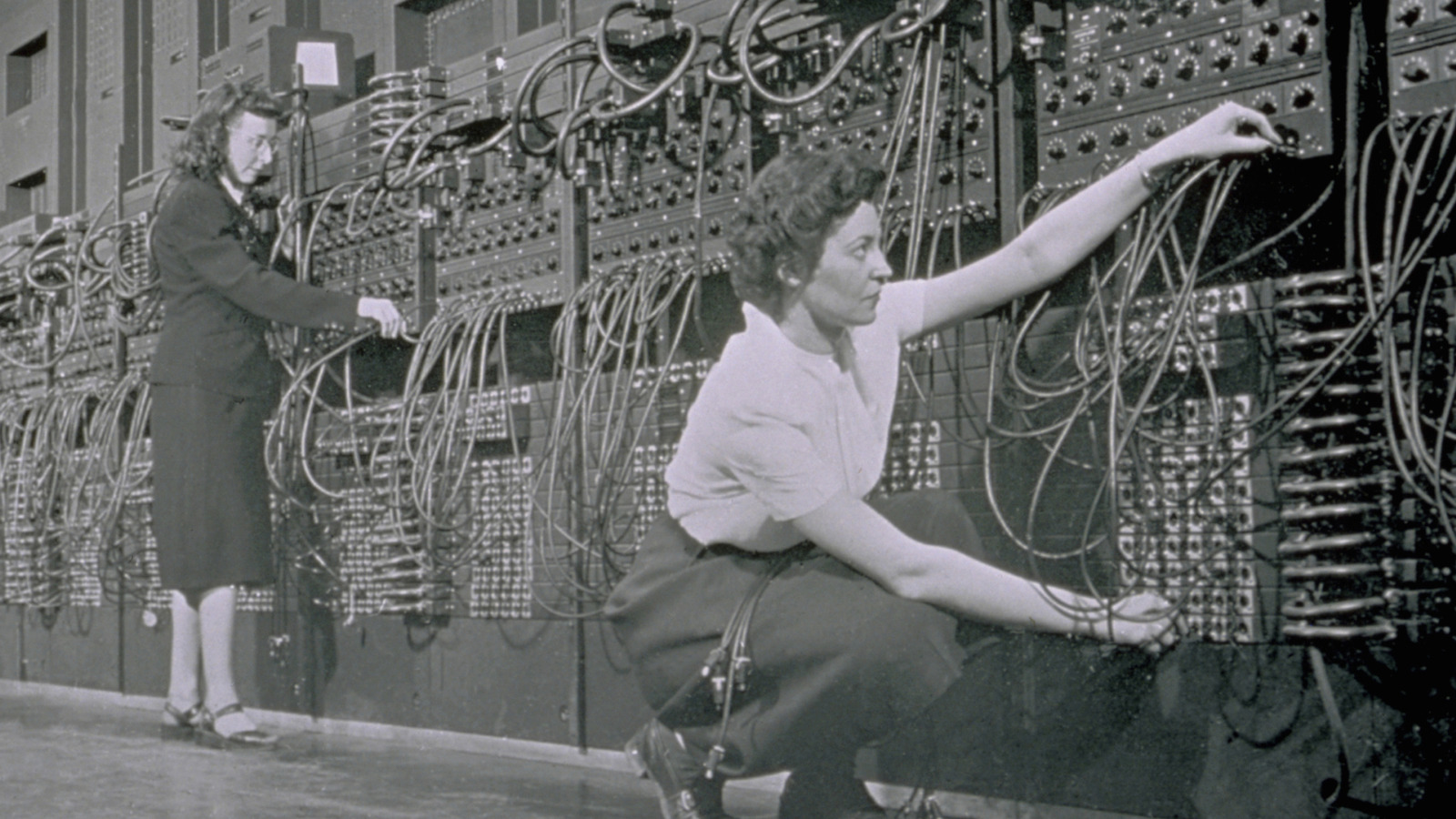

This article is part of AI Frontiers, a series exploring groundbreaking computer science and artificial intelligence research from arXiv. We summarize key papers, demystify complex concepts in machine learning and computational theory, and highlight innovations shaping our technological future. The research discussed here spans papers published on May 16, 2025, representing a snapshot of cutting-edge advancements in robotics. These studies collectively demonstrate progress in safety, generalization, and human-robot interaction, marking a shift toward more adaptable and reliable robotic systems.

Robotics, as a field, integrates hardware design, artificial intelligence, and control theory to create autonomous systems capable of perceiving, reasoning, and acting in dynamic environments. The interdisciplinary nature of robotics is evident in the diversity of applications covered in these papers, including agriculture, surgery, and disaster response. The significance of these advancements lies in their potential to bring robots out of controlled laboratory settings and into real-world scenarios where unpredictability and human interaction are central challenges.

One major theme emerging from this research is the pursuit of lifelike movement in robots. Humanoid locomotion, for instance, has seen notable improvements in stability and adaptability. Lizhi Yang et al. (2025) addressed the challenge of humanoid robots recovering from sudden disturbances while walking. By integrating arm movements that allow robots to lean against walls for stability, their work enables humanoids to withstand forces up to 100 newtons while moving at half a meter per second. Similarly, Nikita Rudin et al. (2025) demonstrated legged robots performing parkour, showcasing agility in navigating gaps and obstacles. These developments are not merely about acrobatic feats but about enabling robots to operate in unstructured environments where terrain and interactions are unpredictable.

Another prominent theme is the emphasis on safety in reinforcement learning (RL) and autonomous systems. Yang et al. (2025) introduced SHIELD, a safety layer for RL policies that enforces probabilistic safety guarantees using control barrier functions. This approach reduces dangerous behaviors by 41% without retraining the underlying policy, addressing a critical limitation of RL in real-world applications. Heye Huang et al. (2025) further contributed to this theme with REACT, a system for autonomous vehicles that models risk fields in real time to enhance collision avoidance. These innovations highlight the growing recognition that performance must be balanced with safety, particularly as robots increasingly interact with humans in shared spaces.

Perception has also seen significant advancements, particularly in multimodal integration. Akash Sharma et al. (2025) developed Sparsh-skin, a self-supervised tactile encoder for robotic hands that enables object recognition through touch without extensive labeled data. This breakthrough is particularly relevant for tasks requiring delicate manipulation, such as assembly or fruit picking. On the visual front, Abhishek Kashyap et al. (2025) used radiance fields to generate novel object views, improving grasp planning for robotic arms. These studies underscore the importance of combining sensory modalities (touch, vision, and even language) to enhance robotic perception and decision-making.

Generalization remains a central challenge in robotics, and recent work has made strides toward more adaptable systems. Narayanan PP et al. (2025) proposed GROQLoco, a unified locomotion policy capable of controlling quadrupeds of varying sizes without robot-specific tuning. This approach, which leverages attention-based architectures, achieved a 2.4-fold improvement in success rates over prior methods. Jiahui Zhang et al. (2025) tackled generalization from a different angle with Counterfactual Behavior Cloning, which infers human intent from imperfect demonstrations. By optimizing over counterfactual examples, this method doubled sample efficiency in real-world tasks, demonstrating robustness to noisy human input.

Human-robot interaction has evolved beyond simple command-based systems, focusing instead on contextual understanding and adaptability. Wei Zhao et al. (2025) introduced OE-VLA, a model that processes open-ended instructions by integrating vision, language, and action. Chenxi Jiang et al. (2025) addressed ambiguity in human requests with REI-Bench, enabling robots to seek clarification when instructions are vague. These advancements are critical as robots move into environments where communication is often imprecise and context-dependent, such as homes and workplaces.

Methodologically, these studies employ a range of techniques tailored to their specific challenges. Model predictive control (MPC) is widely used, as seen in the hybrid linear inverted pendulum (LIP) dynamics that Yang et al. (2025) apply to humanoid walking. While MPC excels at handling nonlinear dynamics, it struggles with high-dimensional action spaces. Control barrier functions, as used in SHIELD, provide formal safety guarantees but depend on accurate dynamics models. Self-supervised learning, exemplified by Sparsh-skin, reduces reliance on labeled data but requires diverse interaction datasets. Diffusion policies, such as Cocos by Zibin Dong et al. (2025), generate high-dimensional actions but face computational bottlenecks. Multi-expert distillation, employed in GROQLoco, generalizes across tasks but necessitates careful curation of expert diversity. These methodological trade-offs underscore the complexity of robotics research and the need for tailored solutions.

Key findings from these studies reveal both progress and persistent challenges.
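
The safety-layer idea behind control barrier functions can be made concrete with a toy example. The sketch below is a minimal, deterministic textbook filter for a one-dimensional system x' = u, not SHIELD's probabilistic formulation; `BarrierFilter`, `aggressive_policy`, `rollout`, and every numeric value are hypothetical names and settings chosen for illustration. It shows the filter wrapping an existing policy and overriding its action only when the barrier condition would be violated, so the policy itself is never retrained. The bound it enforces is derived from the assumed model x' = u, which is why filters of this kind depend on accurate dynamics.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class BarrierFilter:
    """Textbook control-barrier-function filter for the toy system x' = u."""
    x_max: float  # boundary of the safe set {x : h(x) = x_max - x >= 0}
    alpha: float  # how quickly the state may approach the boundary

    def filter(self, x: float, u_nominal: float) -> float:
        # CBF condition: dh/dt >= -alpha * h(x). With h = x_max - x and x' = u,
        # this reduces to the action bound u <= alpha * (x_max - x).
        u_limit = self.alpha * (self.x_max - x)
        # Closest action to the nominal one that satisfies the bound.
        return min(u_nominal, u_limit)


def aggressive_policy(x: float) -> float:
    """Stand-in for a trained RL policy: always pushes toward the boundary."""
    return 2.0


def rollout(
    policy: Callable[[float], float],
    safety: Optional[BarrierFilter] = None,
    x0: float = 0.0,
    dt: float = 0.05,
    steps: int = 200,
) -> List[float]:
    """Simulate x' = u with forward Euler, optionally filtering each action."""
    xs = [x0]
    for _ in range(steps):
        u = policy(xs[-1])
        if safety is not None:
            u = safety.filter(xs[-1], u)
        xs.append(xs[-1] + dt * u)
    return xs


# alpha * dt = 0.2 <= 1, so the Euler steps cannot overshoot the boundary.
safe = rollout(aggressive_policy, BarrierFilter(x_max=1.0, alpha=4.0))
unsafe = rollout(aggressive_policy)

print(f"max x with filter:    {max(safe):.3f}")
print(f"max x without filter: {max(unsafe):.3f}")
```
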
SHIELD's ability to enforce safety constraints without policy retraining represents a significant step toward reliable RL-based control. GROQLoco's success in generalizing across robot morphologies suggests a path toward plug-and-play robotics. Counterfactual Behavior Cloning's robustness to imperfect demonstrations highlights the potential for more efficient robot learning from human input. However, real-time computation remains a bottleneck for methods like MPC and diffusion policies, and data efficiency continues to limit self-supervised techniques. Human alignment tools, while improving, must further evolve to handle the full spectrum of human ambiguity.

Critical assessment of these advancements points to several future directions. Lifelong learning, where robots adapt over extended periods, is an area ripe for exploration. Integrating embodied AI for richer environmental understanding could enhance perception and decision-making. Low-cost hardware development will be pivotal in democratizing access to advanced robotic systems. Additionally, interdisciplinary collaboration, bridging robotics with fields like cognitive science and materials engineering, may yield novel solutions to longstanding challenges. The studies discussed here collectively signal a shift toward generalist, adaptable robots capable of safe and intuitive interaction with humans and their environments.

References:

Yang et al. (2025). SHIELD: Safety-Aware Reinforcement Learning with Probabilistic Guarantees. arXiv:xxxx.xxxx.

Rudin et al. (2025). Parkour Learning for Legged Robots. arXiv:xxxx.xxxx.

Huang et al. (2025). REACT: Real-Time Risk Field Modeling for Autonomous Vehicles. arXiv:xxxx.xxxx.

Sharma et al. (2025). Sparsh-skin: Self-Supervised Tactile Perception for Robotic Manipulation. arXiv:xxxx.xxxx.

Narayanan PP et al. (2025). GROQLoco: Unified Locomotion for Diverse Quadrupeds. arXiv:xxxx.xxxx.
