Google goes all-in on AI at I/O 2025, launches 20 new products

The company has doubled down on AI apps for filmmaking, an agentic coding assistant, video communication platforms, AI mode in search, and much more.

The tech behemoth introduced more than a dozen AI tools and a series of research breakthroughs at its annual I/O developer conference, held May 20–21, 2025, in Mountain View, California.

AI took centre stage throughout the event, with announcements spanning more than 20 major products and features.

Here's a list of the major announcements made at Google I/O 2025:

Upgrades to Gemini 2.5 series

Google has announced new updates to its Gemini 2.5 model series, introducing enhancements such as improved reasoning capabilities, native audio output for more natural interactions, and security features.

The newer versions, Gemini 2.5 Pro and 2.5 Flash, will support native audio generation, advanced safeguards, and Project Mariner's computer-use capabilities.

Gemini 2.5 Pro will also include Deep Think, an experimental mode designed for complex mathematical and coding tasks through enhanced reasoning techniques.

“Over 7 million developers are building with Gemini, five times more than this time last year, and Gemini usage on Vertex AI is up 40 times. The Gemini app now has over 400 million monthly active users. We are seeing strong growth and engagement, particularly with the 2.5 series of models. For those using 2.5 Pro in the Gemini app, usage has gone up 45%,” said Sundar Pichai, CEO of Google.

The company stated that Gemini 2.5 Pro with Deep Think scored highly on the 2025 USAMO, one of the hardest mathematics competitions, ranked first on LiveCodeBench for competitive coding, and achieved 84.0% on MMMU, a benchmark that tests how well models reason across multiple types of input, such as text and images.

Beam: 3D video communication

A few years ago, the Mountain View, California-based firm first introduced Project Starline, aiming to recreate the experience of being in the same room with someone during a video call using advanced 3D video technology.

Building on that effort, the firm has now rolled out Google Beam, a new AI-first video communication platform. Beam uses a state-of-the-art video model to convert 2D video feeds into a realistic 3D experience. It combines input from six cameras and uses AI to merge the streams and render participants on a 3D lightfield display.

The system features precise head tracking down to the millimeter and operates at 60 frames per second in real time, allowing immersive interactions.

“Over the years, we’ve also been creating much more immersive experiences in Google Meet. That includes technology that’s helping people break down language barriers with speech translation, coming to Google Meet. In near real time, it can match the speaker’s voice and tone, and even their expressions — bringing us closer to natural and free-flowing conversation across languages,” said Pichai.

Google is partnering with HP to bring the first Beam devices to early customers later this year.

Flow for AI-powered filmmaking

Alongside its model updates, Google is introducing Flow, an AI-driven filmmaking tool to help users generate short video clips from text prompts and images.

Designed ‘by and for creatives’, Flow runs on Google’s advanced models: Veo for video generation, Imagen for image creation, and Gemini for natural-language prompting. It produces eight-second video segments that can be combined into longer scenes using built-in scene-building tools.

The tool also allows users to reuse created elements across different scenes, keeping things consistent and making it easier to build and edit videos quickly.

Google’s new AI Ultra subscription (available only in the U.S. for now) delivers the “highest level of access” to Google’s AI-powered apps and services, according to the company. Priced at $249.99 per month, it includes the Veo 3 video generator, the new Flow filmmaking app, and Deep Think mode for Gemini 2.5 Pro, which has not launched yet.

Project Aura: XR Glasses

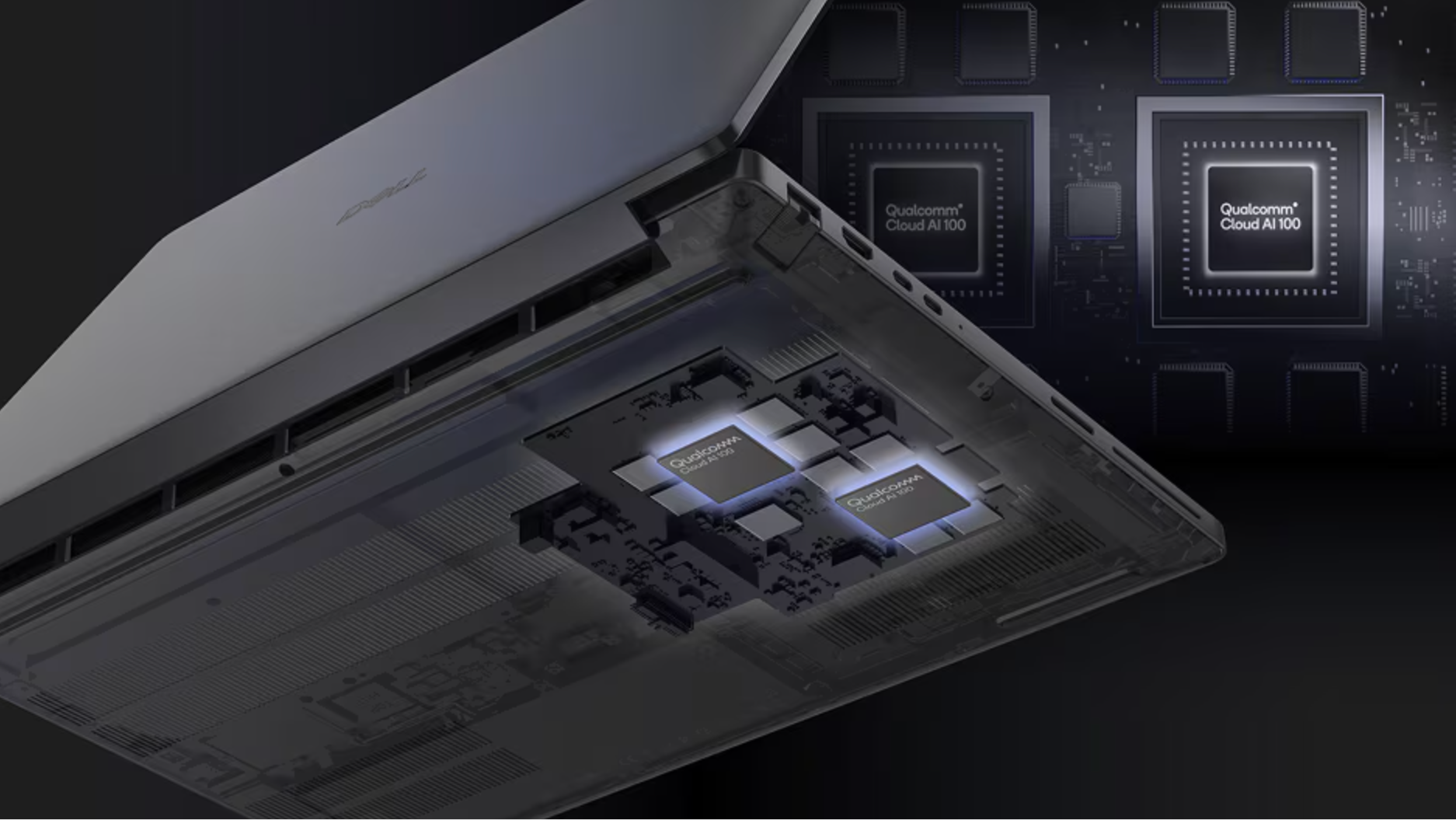

Google has formed a partnership with Xreal, a Chinese augmented reality (AR) company backed by Alibaba, to develop Project Aura, a new line of lightweight extended reality (XR) glasses.

The devices will run on Google's Android XR software platform and be powered by Qualcomm's specialised Snapdragon XR chipsets. The glasses will reportedly operate while tethered to an external device, though Google has not yet revealed which devices will be compatible.

Both companies have kept additional technical specifications confidential. Google and Xreal have not disclosed pricing details or planned release dates for Project Aura.

Jules: AI-powered coding assistant

Google has launched Jules, an autonomous coding assistant now available in public beta.

The new AI tool works by cloning developers' existing repositories into secure Google Cloud virtual machines, giving it full context of each project. Jules then works independently in the background on a range of development tasks, including writing tests, building new features, fixing bugs, updating dependency versions, and even generating audio changelogs.

This release comes just days after competing announcements from Microsoft and OpenAI about updates to GitHub Copilot and Codex. Jules lets developers continue their work uninterrupted while the AI agent handles both routine and complex coding tasks, streamlining software delivery.

Project Mariner

The search giant is expanding its AI capabilities with an "Agent Mode" for the Gemini app alongside major updates to Project Mariner.

Project Mariner, Google's experimental AI agent that can browse and interact with the web on its own, can now manage up to 10 tasks at once.

“We think of agents as systems that combine the intelligence of advanced AI models with access to tools, so they can take actions on your behalf and under your control. Our early research prototype, Project Mariner, is an early step forward in agents with computer-use capabilities to interact with the web and get stuff done for you,” said Pichai.

First released as a research prototype in December 2024, Project Mariner has since gained multitasking capabilities. It now also features a "teach and repeat" function: users demonstrate a task once, and the AI learns the pattern so it can carry out similar tasks in the future.

Other major updates

The tech giant is also integrating Gemini directly into the Chrome browser, creating an intelligent browsing companion that can analyse webpage content and help users complete online tasks.

For mobile users, Google rolled out Gemma 3n, a versatile model optimised to run on smartphones, laptops, and tablets. Available in preview, the model can process multiple input types, including audio, text, images, and video.

Workspace applications received major AI updates as well. Gmail is getting personalised smart reply suggestions and new organisation tools, while Google Vids is getting upgraded features for creating and editing content.
