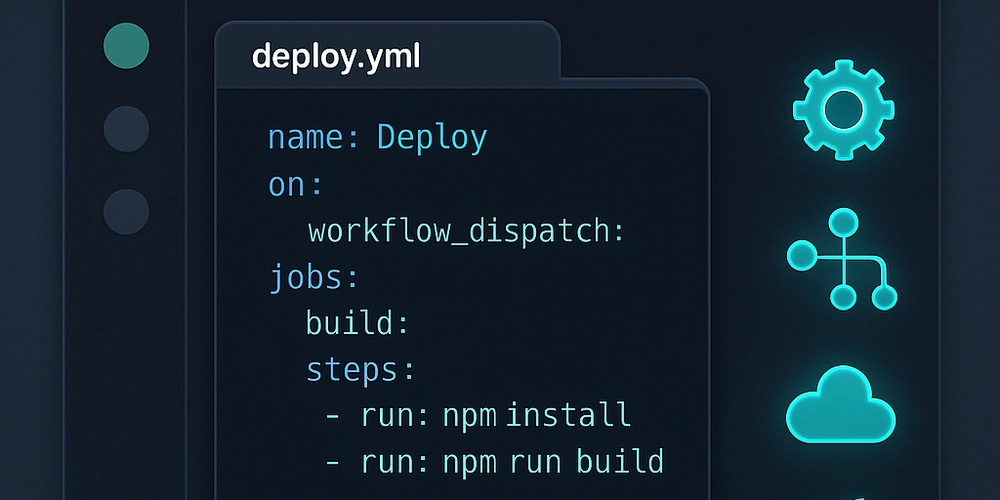

Docker Multi-Stage Builds: Your Secret Weapon for Lean, Mean Container Machines


Picture this: You've just finished building your latest web application. It's beautiful, it works perfectly, and you're ready to containerize it. You write your Dockerfile, build the image, and... it's 2GB. For a simple Node.js app. Your deployment pipeline is crying, your servers are groaning, and your wallet is getting lighter with every cloud storage bill.

Sound familiar? Welcome to the world before multi-stage builds – where Docker images were bloated with build tools, source code, and dependencies that had no business being in production.

The Problem: When Docker Images Go on a Diet (But Refuse to Lose Weight)

Let's start with a real-world scenario. You're building a React application that needs to be compiled and then served as static files. Here's what your Dockerfile might look like without multi-stage builds:

```dockerfile
FROM node:18
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
RUN npm run build
RUN npm install -g serve
EXPOSE 3000
CMD ["serve", "-s", "build"]
```

This approach has several problems:

- Your final image includes Node.js, npm, and all development dependencies
- The source code and intermediate build files are still there
- The image size is unnecessarily large
- You're potentially exposing security vulnerabilities through unused tools

It's like moving houses but taking all your old furniture, broken appliances, and that box of cables you'll "definitely use someday" – except in this case, you're paying for storage and bandwidth for all that digital clutter.

Enter Multi-Stage Builds: The Marie Kondo of Docker

Multi-stage builds allow you to use multiple FROM statements in your Dockerfile. Each FROM instruction starts a new build stage, and you can selectively copy artifacts from one stage to another, leaving behind everything you don't want in the final image.

Think of it as a relay race where each runner (stage) passes only what's necessary to the next runner, rather than carrying the entire team's equipment to the finish line.
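As a minimal sketch of that hand-off (the stage name `producer`, the file `/artifact.txt`, and the `alpine:3.19` tag are illustrative assumptions, not part of any real project), the final image below receives exactly one file from the earlier stage:

```dockerfile
# First stage: anything created here stays here unless it is copied out
FROM alpine:3.19 AS producer
RUN echo "built artifact" > /artifact.txt

# Final stage: starts from a clean base and receives only the one file
FROM alpine:3.19
COPY --from=producer /artifact.txt /artifact.txt
CMD ["cat", "/artifact.txt"]
```

Only the last stage becomes the image you tag and ship. Earlier stages exist during the build and are left behind.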

Real-World Example 1: The React Application Transformation

Let's transform our bloated React app Dockerfile into a lean, multi-stage masterpiece:

```dockerfile
# Stage 1: Build the application
FROM node:18-alpine AS builder
WORKDIR /app
COPY package*.json ./
# Install all dependencies: build tooling often lives in devDependencies
RUN npm ci
COPY . .
RUN npm run build

# Stage 2: Serve the application
FROM nginx:alpine
COPY --from=builder /app/build /usr/share/nginx/html
EXPOSE 80
CMD ["nginx", "-g", "daemon off;"]
```

What just happened here?

- Stage 1 (builder): We use Node.js to install dependencies and build our React app
- Stage 2 (final): We use a lightweight Nginx image and copy only the built files

The results are dramatic (exact numbers depend on your app and base image versions):

- Original image: ~1.2GB
- Multi-stage image: ~25MB

That's a 98% reduction in size!

Real-World Example 2: Go Application - From Gigabytes to Megabytes

Go applications are perfect candidates for multi-stage builds because Go can compile to a single static binary (with CGO disabled, as in the build command below). Here's a typical Go web service:

```dockerfile
# Stage 1: Build the Go binary
FROM golang:1.21-alpine AS builder
WORKDIR /app
COPY go.mod go.sum ./
RUN go mod download
COPY . .
RUN CGO_ENABLED=0 GOOS=linux go build -o main .

# Stage 2: Create minimal runtime image
FROM alpine:latest
RUN apk --no-cache add ca-certificates
WORKDIR /root/
COPY --from=builder /app/main .
EXPOSE 8080
CMD ["./main"]
```

Even better - using scratch:

```dockerfile
# Stage 1: Build
FROM golang:1.21-alpine AS builder
WORKDIR /app
COPY go.mod go.sum ./
RUN go mod download
COPY . .
RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o main .

# Stage 2: Ultra-minimal image
FROM scratch
COPY --from=builder /app/main /
EXPOSE 8080
ENTRYPOINT ["/main"]
```

This creates an image that's literally just your binary. Images under 10MB are common for small Go services, though the exact size depends on what you compile in.
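One caveat with `scratch`: the image has no CA certificates and no user database, so outbound HTTPS calls fail certificate verification and the process runs as root by default. Here is a hedged sketch of one common workaround, assuming the service makes TLS requests and can run as an unprivileged user (the UID/GID 65534 is an arbitrary choice for illustration):

```dockerfile
# Stage 1: Build, and make sure CA certificates are present to copy later
FROM golang:1.21-alpine AS builder
RUN apk --no-cache add ca-certificates
WORKDIR /app
COPY go.mod go.sum ./
RUN go mod download
COPY . .
RUN CGO_ENABLED=0 GOOS=linux go build -o main .

# Stage 2: scratch image with certificates and a non-root user
FROM scratch
COPY --from=builder /etc/ssl/certs/ca-certificates.crt /etc/ssl/certs/
COPY --from=builder /app/main /main
# Numeric UID:GID, because scratch has no /etc/passwd to resolve names
USER 65534:65534
EXPOSE 8080
ENTRYPOINT ["/main"]
```

If the service never calls out over TLS, the certificate copy can be dropped.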

Real-World Example 3: Python Flask Application with Poetry

Python applications often have complex dependency management. Here's how to handle a Flask app using Poetry:

```dockerfile
# Stage 1: Build dependencies
FROM python:3.11-slim AS builder
RUN pip install poetry
WORKDIR /app
COPY pyproject.toml poetry.lock ./
RUN poetry config virtualenvs.create false \
    && poetry install --only=main --no-root
COPY . .
RUN poetry build

# Stage 2: Runtime image
FROM python:3.11-slim
WORKDIR /app
COPY --from=builder /app/dist/*.whl ./
RUN pip install *.whl && rm *.whl
COPY --from=builder /app/src ./src
EXPOSE 5000
CMD ["python", "-m", "flask", "run", "--host=0.0.0.0"]
```
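In the Dockerfile above, the dependencies Poetry installs in the builder stage are not copied to the runtime image; `pip install` resolves them again from the wheel's metadata. An alternative sketch copies the virtualenv itself. It assumes an in-project virtualenv, the same base image in both stages so interpreter paths match, and application code under `src/`:

```dockerfile
# Stage 1: Install locked dependencies into /app/.venv
FROM python:3.11-slim AS builder
RUN pip install --no-cache-dir poetry
WORKDIR /app
COPY pyproject.toml poetry.lock ./
# An in-project virtualenv lives in one directory that is easy to copy
RUN poetry config virtualenvs.in-project true \
    && poetry install --only=main --no-root

# Stage 2: Runtime image without Poetry
FROM python:3.11-slim
WORKDIR /app
COPY --from=builder /app/.venv ./.venv
COPY src ./src
# Put the virtualenv first on PATH so "python" resolves to it
ENV PATH="/app/.venv/bin:$PATH"
EXPOSE 5000
# Assumes Flask can locate the app, e.g. through a FLASK_APP variable
CMD ["python", "-m", "flask", "run", "--host=0.0.0.0"]
```

This keeps the runtime image on the exact versions pinned in `poetry.lock`, and Poetry itself never reaches production.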

Advanced Multi-Stage Patterns

The Testing Stage Pattern

Want to run tests during your build but not include testing dependencies in your final image?

```dockerfile
# Stage 1: Dependencies
FROM node:18-alpine AS deps
WORKDIR /app
COPY package*.json ./
RUN npm ci

# Stage 2: Testing
FROM deps AS testing
COPY . .
RUN npm test

# Stage 3: Build
FROM deps AS builder
COPY . .
RUN npm run build

# Stage 4: Production
FROM nginx:alpine
COPY --from=builder /app/dist /usr/share/nginx/html
```

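You can then build with `docker build --target testing .` to run tests, or omit `--target` to get the production image. Note that with BuildKit (the default builder in current Docker releases), a build without `--target` skips the testing stage entirely, because the final stage does not depend on it.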
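If you want the production build to fail whenever tests fail, one option is to chain the stages so the build depends on the tests. This is a sketch of that variation, not the only way to do it, and it assumes `npm test` runs once and exits rather than starting a watcher:

```dockerfile
# Stage 1: Dependencies
FROM node:18-alpine AS deps
WORKDIR /app
COPY package*.json ./
RUN npm ci

# Stage 2: Testing
FROM deps AS testing
COPY . .
RUN npm test

# Stage 3: Build on top of the testing stage, so tests must pass first
FROM testing AS builder
RUN npm run build

# Stage 4: Production
FROM nginx:alpine
COPY --from=builder /app/dist /usr/share/nginx/html
```

The trade-off is that every production build now pays for the test run, unless the layer cache still holds a passing result for unchanged sources.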

The Development vs Production Pattern

```dockerfile
# Base stage with common dependencies
FROM node:18-alpine AS base
WORKDIR /app
COPY package*.json ./

# Development stage
FROM base AS development
RUN npm install
COPY . .
EXPOSE 3000
CMD ["npm", "run", "dev"]

# Production build stage
FROM base AS builder
# Install all dependencies: build tooling often lives in devDependencies
RUN npm ci
COPY . .
RUN npm run build

# Production runtime stage
FROM nginx:alpine AS production
COPY --from=builder /app/build /usr/share/nginx/html
EXPOSE 80
CMD ["nginx", "-g", "daemon off;"]
```

Build for development: `docker build --target development -t myapp:dev .`

Build for production: `docker build --target production -t myapp:prod .`

Best Practices: Making Multi-Stage Builds Sing

- Order Matters - Cache Like a Pro

Put the least frequently changing layers first:

```dockerfile
# Good: Dependencies change less frequently than source code
COPY package*.json ./
RUN npm install
COPY . .

# Bad: This invalidates cache for every source code change
COPY . .
RUN npm install
```
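Layer ordering can be combined with a BuildKit cache mount, so that even when `package*.json` changes, previously downloaded packages are reused. A sketch, assuming BuildKit is enabled and npm's default cache location for the root user (`/root/.npm`):

```dockerfile
# syntax=docker/dockerfile:1
FROM node:18-alpine AS builder
WORKDIR /app
COPY package*.json ./
# The cache mount persists npm's download cache between builds
# without storing it in an image layer
RUN --mount=type=cache,target=/root/.npm npm ci
COPY . .
RUN npm run build
```

The cache lives on the build host, so it helps repeated local or CI builds on the same machine and has no effect on final image size.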

- Use Specific Base Images

```dockerfile
# Good: Explicit and smaller
FROM node:18-alpine AS builder

# Bad: Unpredictable and potentially larger
FROM node AS builder
```

- Clean Up in the Same Layer

```dockerfile
# Good: Cleanup in same layer
RUN apt-get update && \
    apt-get install -y build-essential && \
    npm install && \
    apt-get remove -y build-essential && \
    apt-get autoremove -y && \
    rm -rf /var/lib/apt/lists/*

# Bad: Each RUN creates a layer
RUN apt-get update
RUN apt-get install -y build-essential
RUN npm install
RUN apt-get remove -y build-essential
```
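With multi-stage builds, this cleanup dance is often unnecessary, because build tools installed in a builder stage never reach the final image. A sketch, assuming a Node.js service with native addons (the `index.js` entry point and the need for `python3` by node-gyp are assumptions about the project):

```dockerfile
# Stage 1: Compile native addons with the full toolchain
FROM node:18-slim AS builder
RUN apt-get update \
    && apt-get install -y --no-install-recommends build-essential python3 \
    && rm -rf /var/lib/apt/lists/*
WORKDIR /app
COPY package*.json ./
RUN npm ci --omit=dev

# Stage 2: Same base image, no compiler installed
FROM node:18-slim
WORKDIR /app
COPY --from=builder /app/node_modules ./node_modules
COPY . .
USER node
CMD ["node", "index.js"]
```

Both stages use the same base image so that compiled addons link against the same system libraries at runtime.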

- Use .dockerignore

Create a `.dockerignore` file to prevent unnecessary files from being sent to the Docker daemon:

```
node_modules
npm-debug.log
.git
.gitignore
README.md
.env
coverage
.nyc_output
```

Common Pitfalls and How to Avoid Them

Pitfall 1: Copying Unnecessary Files Between Stages

```dockerfile
# Bad: Copying everything
COPY --from=builder /app /app

# Good: Being selective
COPY --from=builder /app/dist ./dist
COPY --from=builder /app/package.json ./
```
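For a server-side Node.js app, a fuller sketch of the selective copy might look like this. It assumes the build emits `dist/index.js` (a hypothetical entry point) and that runtime dependencies are reinstalled without dev packages:

```dockerfile
# Stage 1: Full install and build
FROM node:18-alpine AS builder
WORKDIR /app
COPY package*.json ./
RUN npm ci
COPY . .
RUN npm run build

# Stage 2: Only manifests, production dependencies, and build output
FROM node:18-alpine
WORKDIR /app
ENV NODE_ENV=production
COPY --from=builder /app/package*.json ./
RUN npm ci --omit=dev
COPY --from=builder /app/dist ./dist
# The official Node.js images ship with an unprivileged "node" user
USER node
CMD ["node", "dist/index.js"]
```

Source files, tests, and devDependencies stay in the builder stage.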

Pitfall 2: Not Using Build Arguments Effectively

```dockerfile
FROM node:18-alpine AS base
ARG NODE_ENV=production
ENV NODE_ENV=$NODE_ENV

FROM base AS development
# Development-specific setup

FROM base AS production
# Production-specific setup
```

Build with: `docker build --build-arg NODE_ENV=development --target development .`
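Build arguments can also select the base image, which trips people up because of scoping: an `ARG` declared before the first `FROM` is visible only to `FROM` lines and must be redeclared inside a stage to be used there. A small sketch (the `NODE_VERSION` argument name is an illustrative assumption):

```dockerfile
# Declared before any FROM: usable in FROM lines only
ARG NODE_VERSION=18

FROM node:${NODE_VERSION}-alpine AS base
# Redeclare without a value to bring the argument into this stage
ARG NODE_VERSION
RUN echo "Building with Node ${NODE_VERSION}"
```

Passing `--build-arg NODE_VERSION=20` at build time would then switch the base image without editing the Dockerfile.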

Pitfall 3: Ignoring Security in Multi-Stage Builds

```dockerfile
# Good: Using non-root user
FROM alpine:latest
# Create the group and a system user that belongs to it, in one layer
RUN addgroup -g 1001 -S nodejs \
    && adduser -S -u 1001 -G nodejs nextjs
COPY --from=builder --chown=nextjs:nodejs /app/build ./build
USER nextjs
```

The Bottom Line

Multi-stage builds aren't just about smaller images (though that 98% size reduction is pretty sweet). They're about:

- Faster deployments: Smaller images mean faster pushes and pulls
- Lower costs: Less storage, less bandwidth, less money
- Better security: Fewer tools in production mean fewer attack vectors
- Cleaner architecture: Separation of build and runtime concerns

Multi-stage builds transform Docker from a somewhat clunky containerization tool into a precision instrument for creating exactly the runtime environment your application needs: nothing more, nothing less.

The next time you're writing a Dockerfile, ask yourself: "What does my application actually need to run in production?" Chances are, it's a lot less than what you're currently shipping. Multi-stage builds help you ship exactly that – and your deployment pipeline will thank you for it.

Remember: In the world of containers, less is definitely more. Your future self (and your infrastructure bill) will thank you for making the switch to multi-stage builds.
