Comprehensive guide to understanding and building effective AI agents

1. Introduction and Problem Statement

The field of AI agents is rapidly evolving, leading to a vast and often overwhelming amount of information. This report aims to distill the most critical insights from leading AI research labs – Google, Anthropic, and OpenAI – into a cohesive and actionable guide. The objective is to provide a clear understanding of what AI agents are, the fundamental principles for building them effectively, and the best practices to ensure their reliability and safety.

2. Core Resources Utilized for Synthesis

The foundational knowledge for this report is drawn from three pivotal documents:

- Google's "Agents" Whitepaper: Provides a broad overview and foundational concepts of agentic systems.

- Anthropic's "Building effective agents" Article: Focuses on practical patterns and successful implementations, emphasizing simple, composable approaches.

- OpenAI's "A practical guide to building agents" PDF: Offers practical guidance, particularly on agent architecture, tooling, and safety considerations.

3. Defining an AI Agent

An AI agent is fundamentally a system designed to perceive its environment, reason about its goals, and take actions to achieve those goals autonomously or semi-autonomously. Key characteristics include:

- LLM-Powered Reasoning: At its core, an AI agent uses a Large Language Model (LLM), such as OpenAI's GPT series, Google's Gemini, or Anthropic's Claude, as its "brain" for understanding, planning, and decision-making.

- Action Capability through Tools: Agents are not limited to text generation. They can interact with the digital (and potentially physical) world by employing "tools." These tools can be:

  - APIs (e.g., for weather data, stock prices, calendar management)

  - Code execution environments

  - Search engines

  - Databases

  - Other software functions

- Iterative Operational Loop (The AI Agent Cycle): Agents typically operate in a cycle to refine their actions and achieve their objectives. This cycle, often associated with the ReAct ("Reasoning and Acting") pattern and summarized as Reason, Act, Observe, involves:

  - Reason: The LLM analyzes the current situation, its goals, and available tools to formulate a plan or decide on the next best action.

  - Take Action: The agent executes the chosen action using one or more of its designated tools. This might involve making an API call, running a script, or querying a database.

  - Observe: The agent receives the outcome or feedback resulting from its action. This observation is then fed back into its reasoning process.

  - Repeat/Reflect: Based on the observation, the agent iterates. It might refine its plan, choose a different tool, or conclude that the task is complete. This reflective step is what lets the agent correct course and adapt within a task.

- Distinction from Simple Chatbots: While chatbots primarily engage in conversational exchanges, AI agents are designed for complex, multi-step problem-solving. They can autonomously manage tasks such as:

  - Booking travel arrangements

  - Generating comprehensive reports

  - Debugging code

  - Managing complex workflows
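The Reason, Act, Observe cycle can be sketched as a small loop. This is a minimal illustration under stated assumptions, not any lab's reference implementation: `scripted_llm` is a hypothetical stand-in for a real model call (it returns a canned decision instead of calling an API), and `get_weather` is a hypothetical tool returning fixed data. The `max_steps` cap is one simple way to keep the loop bounded.

```python
from typing import Callable, Dict, List

def get_weather(city: str) -> str:
    """Hypothetical tool: returns canned data instead of calling a weather API."""
    return {"Paris": "18C, cloudy"}.get(city, "unknown")

# Tool registry: the names the model may choose from, mapped to Python functions.
TOOLS: Dict[str, Callable[[str], str]] = {"get_weather": get_weather}

def scripted_llm(goal: str, history: List[str]) -> dict:
    """Hypothetical stand-in for an LLM call. Returns either a tool call or a
    final answer, depending on what has been observed so far."""
    if not history:
        return {"thought": "I need the weather first.",
                "action": "get_weather", "input": "Paris"}
    return {"thought": "I have enough information.",
            "final": f"Weather in Paris: {history[-1]}"}

def run_agent(goal: str, llm: Callable[[str, List[str]], dict] = scripted_llm,
              max_steps: int = 5) -> str:
    history: List[str] = []                  # observations fed back to the model
    for _ in range(max_steps):               # iteration limit as a basic guardrail
        step = llm(goal, history)            # Reason: decide the next action
        if "final" in step:                  # the model concluded the task is done
            return step["final"]
        tool = TOOLS.get(step["action"])
        if tool is None:                     # unknown tool: report it as an observation
            history.append(f"Error: no tool named {step['action']!r}")
            continue
        history.append(tool(step["input"]))  # Act, then Observe the result
    return "Stopped: step limit reached without a final answer."

print(run_agent("What's the weather in Paris?"))  # Weather in Paris: 18C, cloudy
```

Swapping `scripted_llm` for a real model call would leave the loop structure unchanged; only the decision source differs.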

4. Strategic Considerations: When to Build an AI Agent

Building an AI agent is not always the optimal solution. To avoid over-engineering, identify the scenarios where an agent's capabilities offer genuine advantages and distinguish them from situations where simpler automation would suffice.

Favorable Scenarios for AI Agents:

- Complex Decision-Making: When tasks require nuanced judgment that goes beyond simple rule-based systems (e.g., evaluating insurance claims with multiple variables, dynamic resource allocation).

- High Context and Multi-Step Processes: For tasks involving many interdependent steps or requiring the synthesis of large amounts of information from diverse sources.

- Brittle Rule-Based Logic: When traditional rule-based systems become too complex to maintain, are prone to errors with slight input variations, or cannot adapt to new situations. Agents offer more flexibility.

- Ambiguity and Dynamic Environments: When the task environment is not fully predictable and requires the agent to adapt its strategy based on real-time observations.

Scenarios to Avoid Over-Engineering with Agents:

- Single-Step Answers: If a task can be accomplished with a direct, single-step solution (e.g., a simple database lookup or a direct API call without complex interpretation), an agent might be unnecessary.

- No Tool Usage Required: If the problem can be solved with stable, well-defined logic within the LLM itself without needing external interactions, a simpler LLM call or a traditional program might be more efficient.

- Highly Predictable and Static Workflows: For tasks with very clear, unchanging steps, a traditional workflow automation tool might be more robust and less resource-intensive.

5. Core Architectural Components of an AI Agent (The "Agent Stack")

Every AI agent, regardless of its specific implementation, is generally built upon four essential components:

- Large Language Model (LLM) - The Brain:

- Function: Provides the core reasoning, language understanding, and decision-making capabilities. It interprets user requests, formulates plans, selects tools, and processes observations.

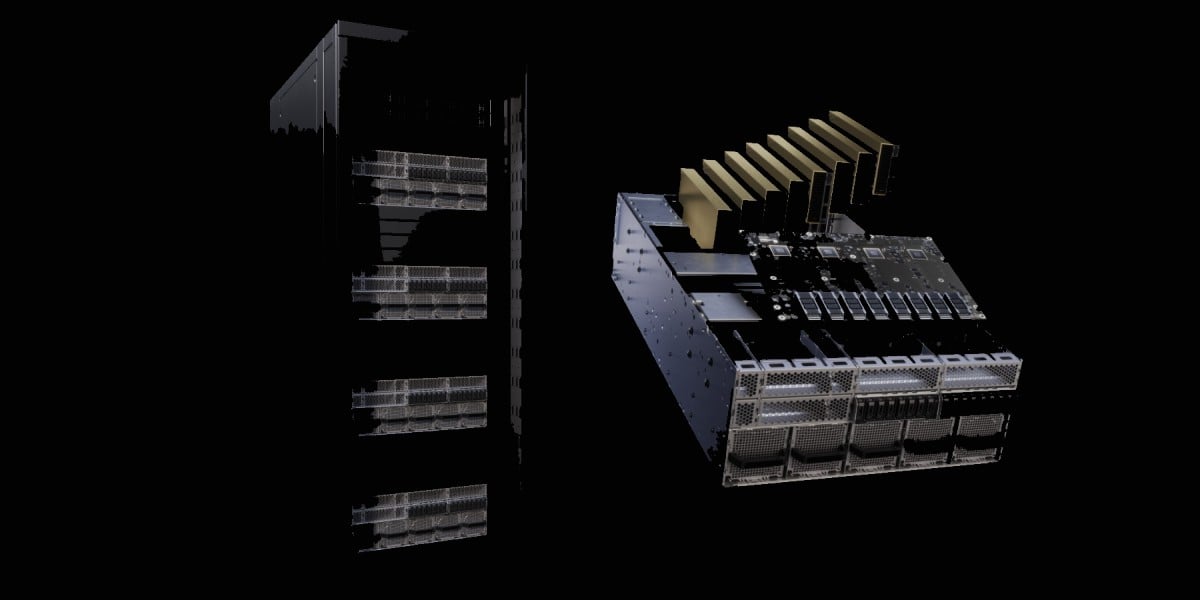

- Considerations: The choice of LLM (e.g., GPT-4, Claude 3, Gemini) is critical and depends on the task's complexity, cost constraints, and desired capabilities (e.g., multimodal input, long context windows).

- Tools - The Hands:

- Function: Enable the agent to interact with its environment beyond simple text generation. Tools allow the agent to fetch external data, perform computations, or execute actions in other systems.

- Examples: External APIs (weather, financial data), databases, search engines, calculators, code interpreters, functions to interact with local files or other applications.

- Instructions (System Prompt) - The Guide:

- Function: Defines the agent's overall behavior, persona, goals, constraints, and safety boundaries. It's the primary way to steer the LLM's operation within the agentic framework.

- Content: Can include:

- The agent's role or persona (e.g., "You are a helpful financial assistant").

- Specific goals for the current task.

- Ethical guidelines and safety protocols.

- Formatting instructions for its output.

- Information about available tools and how to use them.

- Memory - The Long-Term Brain:

- Function: Allows the agent to retain information from past interactions and context, enabling more coherent and personalized behavior over time.

- Types:

- Short-Term Memory: Typically the conversation history within the current session, allowing the agent to refer to earlier parts of the dialogue.

- Long-Term Memory: Persistent storage of information across sessions. This is often implemented using vector databases to store and retrieve relevant past experiences, user preferences, or learned knowledge. Session state can also be a form of long-term memory for a specific user interaction.
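The four components can be expressed as plain data structures. The sketch below uses hypothetical names (`Tool`, `Agent`, `LongTermMemory`, `build_context`) and substitutes bag-of-words cosine similarity for the embedding-based retrieval a real vector database would provide. It only assembles the context that would be sent to an LLM on one turn; it does not call a model.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Tool:
    name: str
    description: str           # shown to the model so it knows when to use the tool
    fn: Callable[[str], str]   # the code that actually runs

def _vectorize(text: str) -> Counter:
    # Toy "embedding": lowercase word counts.
    return Counter(re.findall(r"[a-z']+", text.lower()))

def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

@dataclass
class LongTermMemory:
    """Toy stand-in for a vector database: bag-of-words vectors + cosine similarity."""
    items: List[str] = field(default_factory=list)

    def add(self, text: str) -> None:
        self.items.append(text)

    def search(self, query: str, k: int = 1) -> List[str]:
        q = _vectorize(query)
        ranked = sorted(self.items, key=lambda s: _cosine(q, _vectorize(s)), reverse=True)
        return ranked[:k]

@dataclass
class Agent:
    model: str                                   # hypothetical model identifier (the "brain")
    instructions: str                            # system prompt (the "guide")
    tools: List[Tool]                            # the "hands"
    short_term: List[Dict[str, str]] = field(default_factory=list)  # current-session messages
    long_term: LongTermMemory = field(default_factory=LongTermMemory)

    def build_context(self, user_msg: str) -> List[Dict[str, str]]:
        """Assemble the messages that would be sent to the model for one turn."""
        tool_list = "\n".join(f"- {t.name}: {t.description}" for t in self.tools)
        recalled = "; ".join(self.long_term.search(user_msg, k=1))
        system = (f"{self.instructions}\n\nAvailable tools:\n{tool_list}"
                  f"\n\nRelevant memory: {recalled}")
        return [{"role": "system", "content": system}, *self.short_term,
                {"role": "user", "content": user_msg}]

agent = Agent(
    model="example-model",
    instructions="You are a helpful financial assistant.",
    tools=[Tool("get_price", "Look up a stock price by ticker.", lambda ticker: "n/a")],
)
agent.long_term.add("User prefers answers in euros.")
agent.long_term.add("User's favourite color is green.")
print(agent.build_context("What is the price in euros?")[0]["content"])
```

Keeping the components as separate fields makes it easy to swap one (for example, a different model or a real vector store) without touching the others.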

6. Reasoning Patterns: How Agents "Think"

Agents employ various strategies to process information and decide on actions. These patterns help structure the LLM's "thought process":

- ReAct (Reason, then Act, then Observe):

  - Description: This is a widely adopted and effective pattern. The agent explicitly verbalizes its reasoning process, decides on an action (tool use), executes it, observes the result, and then reflects on the observation to inform its next step.

  - Cycle:

    - Reason: Analyze the current situation and available information to form a hypothesis or plan.

    - Act: Choose and execute a specific tool/action based on the reasoning.

    - Observe: Evaluate the outcome and feedback from the executed action.

    - Reflect (and Repeat): Review the observation, adjust the strategy if necessary, and loop back to reasoning for the next step, or conclude if the goal is met.

  - Significance: Google's whitepaper particularly emphasizes this as a standard and effective approach.

- Chain-of-Thought (CoT):

  - Description: Encourages the LLM to break down a problem into intermediate reasoning steps before arriving at a final answer. This improves performance on complex reasoning tasks.

  - Application: Often implemented by prompting the LLM to "think step-by-step."

- Tree-of-Thought (ToT):

  - Description: A more advanced technique where the agent explores multiple reasoning paths or plans in parallel. It can evaluate different branches and backtrack if a path seems unpromising.

  - Application: Useful for problems with large search spaces or where multiple solutions might exist.
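To make Tree-of-Thought concrete, here is a breadth-first variant with a fixed beam width, applied to a hypothetical toy puzzle: reach a target number from a start value using only `+3` and `*2`. In a real ToT setup an LLM would propose candidate thoughts and judge partial paths; here `propose` and `evaluate` are deterministic stand-ins so the search logic itself is visible.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass(frozen=True)
class Thought:
    value: int                        # current state of the partial solution
    steps: Tuple[str, ...] = ()       # the reasoning path that led here

def propose(state: Thought) -> List[Thought]:
    """Hypothetical stand-in for an LLM proposing candidate next steps."""
    return [Thought(state.value + 3, state.steps + ("+3",)),
            Thought(state.value * 2, state.steps + ("*2",))]

def evaluate(state: Thought, target: int) -> float:
    """Hypothetical stand-in for an LLM rating how promising a partial path is."""
    return -abs(target - state.value)

def tree_of_thought(start: int, target: int, beam: int = 2, depth: int = 6) -> Optional[Thought]:
    frontier = [Thought(start)]
    for _ in range(depth):
        # Expand every kept branch.
        candidates = [child for s in frontier for child in propose(s)]
        for c in candidates:
            if c.value == target:
                return c
        # Keep only the `beam` most promising branches; the rest are pruned.
        frontier = sorted(candidates, key=lambda s: evaluate(s, target), reverse=True)[:beam]
    return None

print(tree_of_thought(1, 11))  # Thought(value=11, steps=('+3', '*2', '+3'))
```

Branches outside the top `beam` candidates are dropped at each level, which is how this variant abandons unpromising paths; a depth-first variant would instead backtrack explicitly.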

7. Common Agent Building Patterns & Architectures

Several established patterns facilitate the construction of sophisticated agentic systems:

- Prompt Chaining: Simple sequential execution of tasks, where the output of one LLM call (or agent step) becomes the input for the next.

- Routing: An initial LLM or a classification model directs an incoming request to the most appropriate specialized agent or tool based on the nature of the query.

- Tool Use: The fundamental ability of an agent to select and utilize predefined functions or APIs to interact with external systems or data.

- Evaluator Loops (Self-Correction): An agent's output is reviewed by another LLM (an "evaluator" or "critic") or a set of predefined checks. If the output is unsatisfactory, feedback is provided, and the original agent attempts to correct its response.

- Orchestrator/Worker: A central "orchestrator" agent breaks down a complex task and delegates sub-tasks to specialized "worker" agents. The orchestrator then synthesizes the results from the workers.

- Autonomous Loops: The agent is given a high-level goal and operates with minimal human intervention, making all decisions about tool use and next steps. This pattern requires robust guardrails and should be used carefully.

- Single-Agent vs. Multi-Agent Systems: OpenAI's guide advises starting with a single-agent system where possible and moving to multi-agent systems (like orchestrator-worker or routing to specialized agents) when a single agent struggles with too many tools or when the logic for handling different task types becomes overly complex for one agent. The guide gives no fixed threshold: it notes that some agents handle more than 15 well-defined, distinct tools, while others struggle with fewer than 10 overlapping ones.
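An evaluator loop (self-correction) reduces to a bounded generate, critique, revise cycle. In this sketch both roles are hypothetical stubs: `draft_greeting` stands in for the worker LLM, and `make_critic` builds a rule-based check in place of a critic LLM. The round limit prevents a critic that is never satisfied from looping forever.

```python
from typing import Callable, Optional, Tuple

Worker = Callable[[str, Optional[str]], str]   # (task, feedback) -> draft
Critic = Callable[[str], Optional[str]]        # draft -> feedback, or None if accepted

def draft_greeting(task: str, feedback: Optional[str]) -> str:
    """Hypothetical worker: stands in for an LLM call that drafts or revises output."""
    if feedback is None:
        return "Hello!"                  # first draft ignores a requirement
    return f"Hello, {task}!"             # revision that addresses the feedback

def make_critic(required: str) -> Critic:
    """Hypothetical evaluator: a rule-based check in place of a critic LLM."""
    def critic(draft: str) -> Optional[str]:
        return None if required in draft else f"The greeting must mention {required}."
    return critic

def evaluator_loop(task: str, generate: Worker, critic: Critic,
                   max_rounds: int = 3) -> Tuple[str, int, bool]:
    """Return (final draft, rounds used, whether the critic accepted it)."""
    feedback: Optional[str] = None
    draft = ""
    for round_no in range(1, max_rounds + 1):
        draft = generate(task, feedback)   # worker produces or revises a draft
        feedback = critic(draft)           # evaluator reviews it
        if feedback is None:
            return draft, round_no, True
    return draft, max_rounds, False        # round limit reached without acceptance

print(evaluator_loop("Ada", draft_greeting, make_critic("Ada")))  # ('Hello, Ada!', 2, True)
```

Returning the acceptance flag lets the caller decide what to do with an unaccepted draft, for example escalating it to a human.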

8. Safety and Guardrails: Ensuring Responsible Agent Behavior

Given the potential for LLMs to "hallucinate" (generate incorrect or nonsensical information) or act unpredictably, implementing robust safety measures and guardrails is paramount.

- Necessity: To prevent agents from taking harmful actions, overreaching their intended scope, or producing inappropriate content.

- Key Guardrail Strategies (AI Safety and Guardrails Funnel):

  - Limit Actions: Restrict the agent's operational capabilities, especially when interacting with sensitive systems (e.g., read-only access to databases, requiring confirmation for destructive actions). Define clear iteration limits.

  - Human Review (Human-in-the-Loop): Involve human oversight for critical decisions or before an agent takes high-impact actions. This allows for verification and correction.

  - Filter Outputs: Implement mechanisms to remove or flag toxic, biased, or insecure content generated by the agent.

  - Sandbox Testing: Always test agents thoroughly in a controlled, isolated environment before deploying them to production. This helps identify potential issues without real-world consequences.

- Types of Guardrails (as detailed in OpenAI's guide):

  - Relevance Classifier: Ensures agent responses stay within the intended scope by flagging off-topic queries.

  - Safety Classifier: Detects unsafe inputs (e.g., jailbreaks, prompt injections) that attempt to exploit vulnerabilities.

  - PII (Personally Identifiable Information) Filter: Prevents unnecessary exposure of PII by vetting model output.

  - Moderation: Flags harmful or inappropriate inputs (hate speech, harassment, violence).

  - Tool Safeguards: Assess the risk of each tool by assigning ratings (low, medium, high) based on factors like read-only vs. write access, reversibility, and financial impact, triggering checks before use.
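Two of these guardrails, tool risk ratings and a PII filter, can be sketched in a few lines. The tool names and ratings are hypothetical examples, the `approve` callback stands in for a human-in-the-loop confirmation step, and the regular expression masks only email addresses, so it is far narrower than a production PII filter.

```python
import re
from enum import Enum
from typing import Callable, Dict

class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"

# Hypothetical tools and ratings, based on write access, reversibility, and financial impact.
TOOL_RISK: Dict[str, Risk] = {
    "lookup_order": Risk.LOW,       # read-only
    "update_address": Risk.MEDIUM,  # writes data, but easy to reverse
    "issue_refund": Risk.HIGH,      # writes data and moves money
}

def guarded_call(tool: str, run: Callable[[], str], approve: Callable[[str], bool]) -> str:
    """Run a tool, pausing for human approval when its rating is HIGH."""
    risk = TOOL_RISK.get(tool, Risk.HIGH)   # unrated tools default to the strictest rating
    if risk is Risk.HIGH and not approve(tool):
        return f"Blocked: {tool!r} requires human approval."
    return run()

# Masks email addresses only; a real PII filter would cover many more patterns.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def redact_pii(text: str) -> str:
    return EMAIL_RE.sub("[REDACTED EMAIL]", text)

def deny_all(tool: str) -> bool:
    return False  # simulates a reviewer rejecting every high-risk action

print(guarded_call("lookup_order", lambda: "order found", deny_all))  # order found
print(guarded_call("issue_refund", lambda: "refund issued", deny_all))
print(redact_pii("Contact ada@example.com for details."))  # Contact [REDACTED EMAIL] for details.
```

Medium-risk tools pass straight through here; a fuller version might log them or run additional automated checks before execution.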

9. Best Practices for Achieving Effective AI Agent Implementation

A systematic approach to agent development leads to more robust and useful systems:

- Start Simple: Begin with a simple design, such as a single agent with a small set of well-defined tools, to establish a solid foundation. Add complexity gradually.

- Visible Reasoning: Design agents so their decision-making processes are transparent and understandable. Logging the agent's internal "thoughts" or justifications is crucial for debugging and building trust.

- Clear Instructions: Provide well-defined system prompts and clear, unambiguous descriptions for tools. This helps the agent understand its role, goals, and how to use its capabilities effectively.

- Evaluate Performance Consistently: Regularly assess the agent's performance against predefined metrics and real-world scenarios. Identify areas for improvement and iterate on the design.

- Maintain Human Oversight: Especially for critical applications, ensure human involvement in the loop for key decisions, ethical considerations, and ongoing monitoring.
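Consistent evaluation can start with a small, repeatable test suite. This sketch assumes the agent can be called as a function from prompt to answer, and that each hypothetical `EvalCase` carries its own pass/fail check; `toy_agent` is a placeholder for the system under test. It reports the overall pass rate, which can be compared across design iterations.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]    # returns True if the agent's answer is acceptable

def pass_rate(agent: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Run every case through the agent and return the fraction that pass."""
    if not cases:
        return 0.0
    passed = sum(1 for case in cases if case.check(agent(case.prompt)))
    return passed / len(cases)

def toy_agent(prompt: str) -> str:
    """Hypothetical agent under test."""
    return "4" if prompt == "What is 2+2?" else "I don't know."

cases = [
    EvalCase("What is 2+2?", lambda out: out.strip() == "4"),
    EvalCase("What is the capital of France?", lambda out: "Paris" in out),
]
print(pass_rate(toy_agent, cases))  # 0.5
```

Re-running the same suite after each change to prompts, tools, or models makes regressions visible; the string checks here could be replaced by richer scoring.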

10. Real-World Use Cases for AI Agents

AI agents are already being applied across various domains:

- Customer Service: Classifying incoming queries, providing automated responses, and escalating complex issues to human agents.

- Business Operations: Automating tasks like refund approvals, document review and summarization, and data entry.

- Research Tasks: Breaking down complex research topics, gathering information from multiple sources, and synthesizing findings.

- Development Tools: Assisting with writing and fixing code, testing pull requests, and generating documentation.

- Scheduling Tasks: Planning meetings, sending calendar invitations, and managing personal or team schedules.

- Inbox Management: Prioritizing emails, drafting replies, and organizing communication.

11. Tooling and Frameworks (Optional, Keep it Light)

While the core principles are paramount, several tools and libraries can facilitate agent development:

- LangChain: A popular open-source framework for building applications powered by LLMs, including agents.

- OpenAI Agents SDK: A toolkit specifically for developing AI agents using OpenAI's models.

- Vertex AI Agent Builder (Google): Google Cloud's offering for creating and deploying AI agents, often leveraging Gemini models.

- ReAct / CoT / ToT Prompt Templates: Pre-structured prompts that implement these reasoning patterns.

- Other frameworks mentioned or implied: LangGraph, Agno, CrewAI, smolagents (Hugging Face), Pydantic AI. The advice is to keep tooling "light" initially, focusing on mastering the fundamental concepts before adopting complex frameworks.

12. Final Concluding Thought: Outcome-Focused Approach

The ultimate measure of an AI agent's success is its ability to achieve desired outcomes effectively and safely. While the underlying technology can be complex, the development process should prioritize:

- Desired Outcomes: Clearly define what the agent is supposed to achieve.

- Simplified Processes: Streamline methods to reach those outcomes.

- Clarity and Direction: Maintain a clear focus on goals and the steps to achieve them.

- Goal Alignment: Ensure the agent's efforts consistently match the desired results.

The guiding principle should be: "Always focus on outcomes – not complexity. Build smart. Build safe. Build simple."

This detailed report encapsulates the critical knowledge shared in the video presentation, providing a robust foundation for anyone looking to delve into the development of AI agents.

References

- Google. (2024). Agents

- Anthropic. (2024). Building effective agents

- OpenAI. (2025). A practical guide to building agents

Source

Cole Medin. (2025). Google, Anthropic, and OpenAI's Guides to AI Agents ALL in 18 Minutes
