Building Blocks of Modern Log Management Strategies


Architecting a Comprehensive Log Management Framework

Security Operations Center

People, Process and Technology

First, I’d like to provide an overview of the Security Operations Center (SOC). A SOC encompasses the people, processes, and technologies responsible for monitoring, analyzing, maintaining, and enhancing an organization’s cybersecurity. A SOC is not merely a room with large monitors on the wall; it is a capability built on three fundamental pillars: people, process, and technology.

The first pillar is People: A SOC comprises various roles, including SOC analysts, incident responders, threat hunters, and SOC managers. Some mature Security Operations Centers also include roles such as compliance auditors and security architects.

The second pillar is Processes: Defining clear, robust, and repeatable processes provides a solid foundation for the actions taken by SOC members. Without these processes, a SOC lacks guidance and produces inconsistent results. We will explore key SOC operations in the next section.

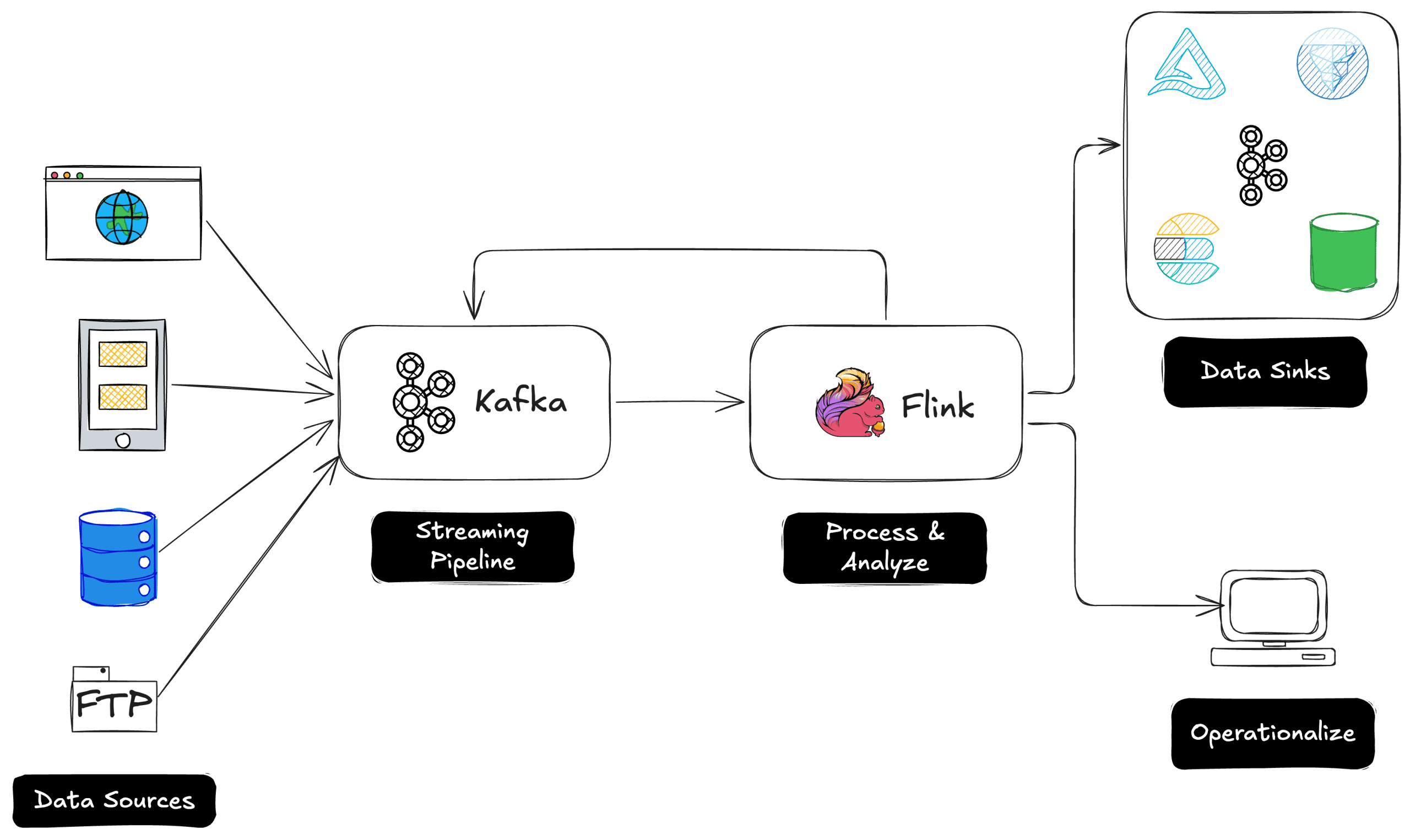

The third pillar is Technology: An effective SOC integrates data from multiple sources and manages and analyzes this data. The core technology of a successful SOC is an enterprise-wide solution for data collection, management, detection, and analytics.

From Reactive SOCs to Proactive SOCs

The proactive SOC concept describes a transformed Security Operations Center in which visibility is continuously enhanced and maintained to put threats and the attack surface in context, rather than pursued through unselective data collection.

Detection capabilities are constantly updated against anticipated attacks and the changing adversary landscape to filter out noise, rather than relying on unselective triage of as many alerts as possible.

Finally, response capabilities focus on advanced threats by establishing an agile, automated incident response process and by driving mitigation on network and endpoint security controls to achieve a more robust defense baseline.

Five Key SOC Operations

Log Management: According to NIST’s Special Publication 800–92, a log is a record of events occurring within an organization’s systems and networks. Logs consist of entries containing information about specific events. By correlating events, relationships between log entries can be identified. In essence, log management involves generating, transmitting, storing, analyzing, and disposing of log data.

Alert Management: When monitoring tools send out alerts, the SOC examines each one carefully, discards false positives, and assesses the severity and targets of real threats. This process helps prioritize and resolve the most critical issues first.

Prevention and Detection: Prevention is more effective than reaction in cybersecurity. A SOC monitors the network 24/7, allowing the team to detect and prevent malicious activity before it causes damage. SOC analysts gather detailed information to investigate any unusual activity.

Incident Management and Response: SOC analysts investigate suspicious activity to determine the nature of threats. Once an incident is confirmed, the SOC coordinates the response to resolve the issue, aiming to mitigate the impact on business continuity.

Compliance Management: SOCs must stay updated on government and industry regulations to ensure organizational compliance. This is crucial due to the SOC’s use of data, which may be subject to strict standards based on location, sector, or intended use. Compliance is essential for the organization’s ongoing operations.

Core SOC Operations

A SOC’s success hinges on several key activities. Let’s break them down:

Log Management: Logs are records of events within systems and networks, as outlined in NIST SP 800–92. Each log entry details a specific occurrence, and correlating these entries reveals connections between events. Log management covers the creation, transmission, storage, analysis, and disposal of this data.

Alert Management: When monitoring tools flag potential issues, the SOC evaluates each alert, weeds out false positives, and prioritizes real threats for swift action.

Prevention and Detection: Proactive monitoring beats reactive fixes. SOC teams watch networks 24/7, spotting and stopping suspicious activity before it causes harm.

Incident Management and Response: When something slips through, analysts investigate, assess the threat, and coordinate a response to minimize disruption while keeping business running smoothly.

Compliance Management: With regulations constantly shifting, SOCs ensure data handling meets legal and industry standards, protecting the organization from penalties.

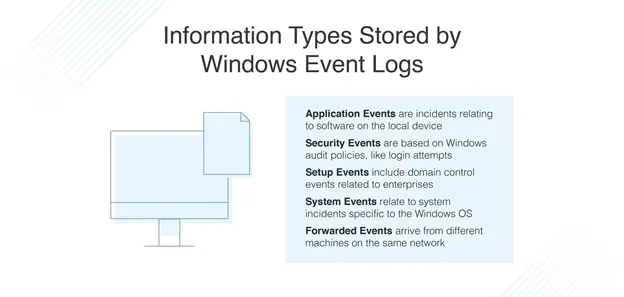

Why Log Management Matters

First, almost every SOC, small or large, has invested in a SIEM platform. But as many analysts point out, most of these platforms are significantly underutilized. SIEMs that run years-old detection rules and generate alert noise are not a rarity.

Gartner Magic Quadrant for Security Information and Event Management (SIEM)

Second, SOC functions are sequential: each process’s success depends on the success of the preceding ones. For example, if detection is not handled well and produces too many alerts and false positives, incident response will not be effective either. Log management is the most fundamental SOC function because threat detection and mitigation, monitoring and troubleshooting, incident investigation and forensics, government and industry compliance, auditing, and threat hunting all depend on internal and external context, which logs provide.

Therefore, if we are to talk about the proactive SOC, SIEM log management is the right place to start: every SOC function depends primarily on log data, and SIEMs have taken center stage in SOCs.

Log Sources

Primary Log Sources

The first group is Security Controls: safeguards or countermeasures that prevent, detect, correct, compensate for, or counteract security risks to physical property, information, computer systems, or other assets.

The second group is Network Infrastructure: the hardware and software resources that enable the connectivity, communication, operation, and management of an enterprise network.

The third group is Operating Systems: Microsoft Windows, macOS, Linux distributions, and other operating systems are valuable log sources.

The last group is Applications.

Security Controls

Let’s start with Security Controls. To detect or prevent malicious activity, protect systems and data, and support incident response and other efforts, most organizations use a variety of network-based and host-based security controls. As a result, security controls are major sources of security log data.

Intrusion Prevention Systems (IPS), Next-Generation Firewalls (NGFW), Network Access Control (NAC) solutions, and Email Security Gateways are examples of network-based security controls. Successful and failed connection attempts, connection states, suspicious requests, and detected or prevented attacks are some of the log types you can collect from network security devices.

Endpoint Detection and Response (EDR) and anti-malware solutions are examples of host-based security controls. Malware scan activities, software updates, suspicious activities, and exploitation attempts are some of the logs that host-based security controls produce.

Besides network-based and host-based security controls, physical security controls are also essential log sources. For cases involving insider threats, event logs from physical security devices such as camera systems, biometric or card access readers, and alarm systems are highly useful. To establish whether a person was present at the time of a violation or whether their password was compromised, you can combine these events with other information gathered from servers, workstations, firewalls, VPNs, and remote access devices. The organizational separation between the physical security team and the IT security team is one of the most challenging aspects of accessing these logs.

Network Infrastructures

Types of Computer Networks

I’d now like to move on to the second group of the log sources, Network Infrastructure. Network infrastructure refers to hardware and software resources that make network connectivity and communication possible.

Routers, switches, wireless access points, DNS servers, and DHCP servers are only a few network infrastructure components. Network devices can be set up to monitor very comprehensive connection activities, but they are normally set up to log only the most basic details. These logs show a lot about what’s going on in the network.

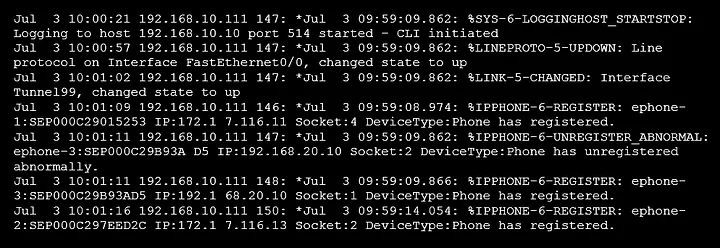

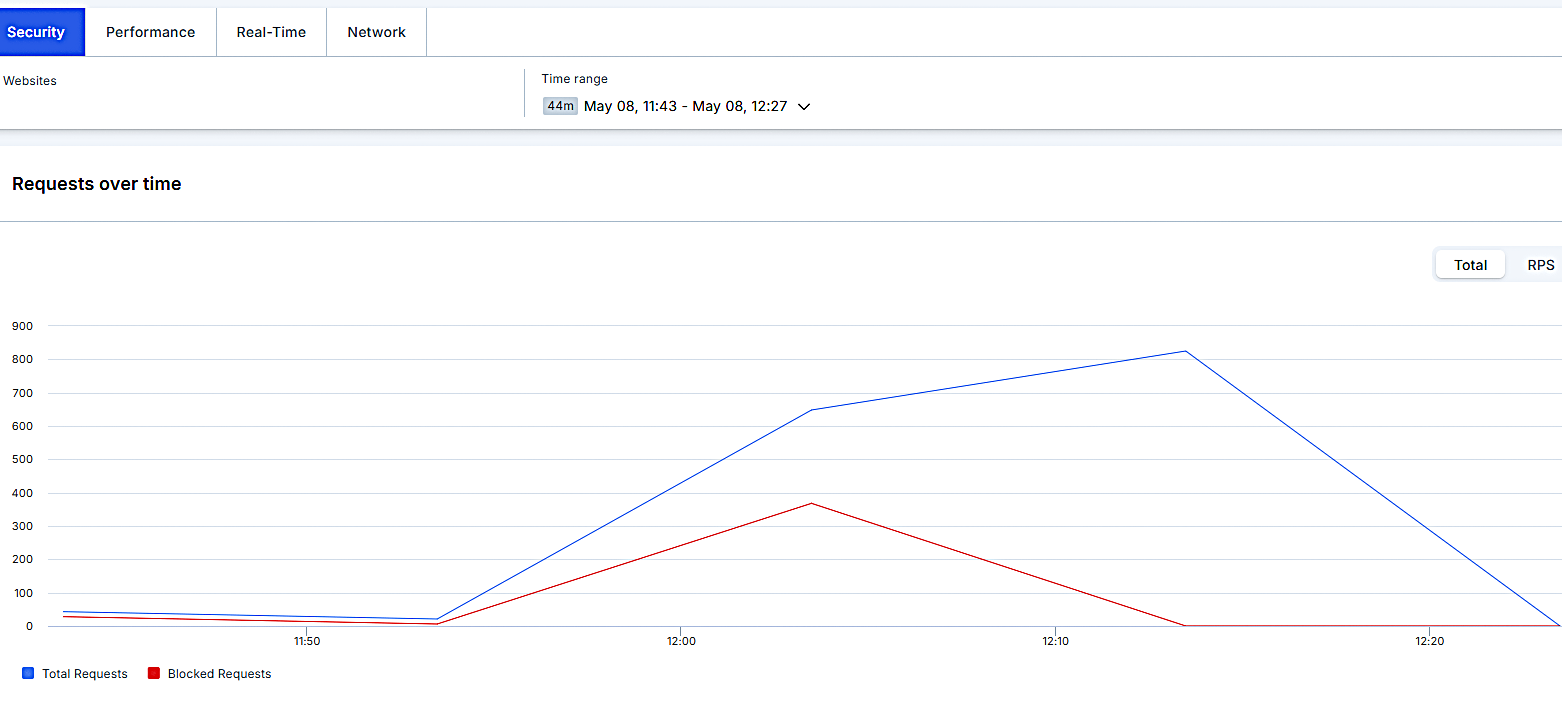

Some network devices can also analyze connections and describe the attack type with IP, port, and time details, as shown in the screenshot.

Operating Systems

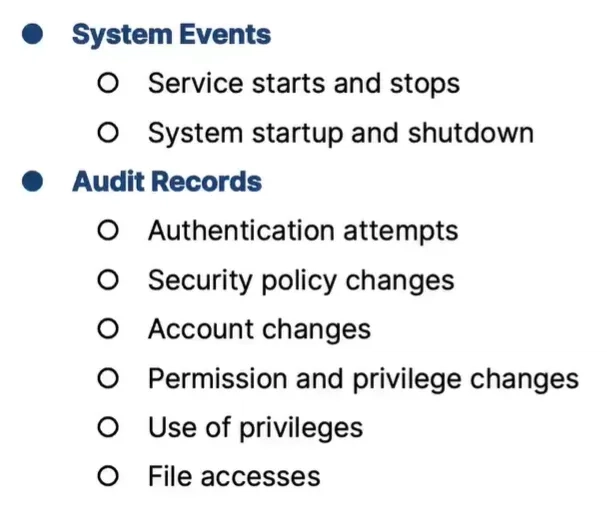

As the third log source group, Operating Systems typically keep track of a variety of security-related logs. We can organize OS logs as system events and audit records.

System events include operational actions performed by the operating system’s components, such as service starts and stops, and system startup and shutdown events.

Audit records consist of successful and failed authentication attempts, changes in security policies, changes in account permissions and privileges, use of privileges, and file accesses.

4616(S): The system time was changed

SSH authentication logs in Linux
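To make the idea of an audit record concrete, here is a minimal sketch that parses OpenSSH authentication messages of the kind usually found in `/var/log/auth.log` or `/var/log/secure`. The sample lines, host names, and the function names `parse_ssh_auth` and `failed_logins_by_source` are invented for illustration, and the regular expression only covers the common "Failed/Accepted ... for USER from IP port N" message shape, so treat it as a starting point rather than a complete parser.

```python
import re
from collections import Counter

# Invented sample lines in the usual syslog + sshd message layout.
SAMPLE_LINES = [
    "Jan 10 12:34:56 web01 sshd[1234]: Failed password for invalid user admin from 203.0.113.5 port 51234 ssh2",
    "Jan 10 12:34:58 web01 sshd[1234]: Failed password for root from 203.0.113.5 port 51240 ssh2",
    "Jan 10 12:35:10 web01 sshd[1301]: Accepted password for alice from 198.51.100.7 port 40022 ssh2",
]

# Captures the outcome, the authentication method, the user, and the source address.
SSH_AUTH = re.compile(
    r"sshd\[\d+\]: (?P<result>Failed|Accepted) (?P<method>\S+) for "
    r"(?:invalid user )?(?P<user>\S+) from (?P<src_ip>\S+) port (?P<src_port>\d+)"
)


def parse_ssh_auth(line):
    """Return the event attributes of one sshd auth line, or None if it does not match."""
    match = SSH_AUTH.search(line)
    return match.groupdict() if match else None


def failed_logins_by_source(lines):
    """Count failed authentication attempts per source IP address."""
    counts = Counter()
    for line in lines:
        event = parse_ssh_auth(line)
        if event and event["result"] == "Failed":
            counts[event["src_ip"]] += 1
    return counts


if __name__ == "__main__":
    print(failed_logins_by_source(SAMPLE_LINES))
```

A count like this is the raw material for a simple brute-force detection rule: many failures from one source in a short window.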

Applications

In addition to security controls, network infrastructure, and operating systems, applications also provide security-related logs.

Organizations rely on email, database, file, and web servers and clients to run their business. They also use various commercial off-the-shelf (COTS) applications that serve business requirements, such as Supply Chain Management, Customer Relationship Management (CRM), and Enterprise Resource Planning (ERP) software. Some organizations use custom-developed applications as well.

All of these applications are valuable sources of information for detecting security issues. They provide logs of account and configuration changes, authentication attempts, application failures, client requests, and server responses.

Records in Database

Message Trace in Exchange Server

Message Trace in Exchange Online

Event Attributes

Log sources provide events, and an event’s attributes contain detailed information about that event. Event date and time, event status, name of the application or service, source and destination IPs and ports, URL, source address, user ID, and event type are only a few of the possible event attributes.

SOC teams need to decide on the level of granularity of events.

If the content is defined too broadly, logs can overwhelm resources unnecessarily and delay investigations.

If the content is defined too narrowly, effective alerting, threat hunting, and incident response become impossible.
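One way to picture the granularity decision is as an allowlist of attributes per event type. The sketch below is only an illustration: the event types, attribute names, and the `trim_event` helper are hypothetical and do not correspond to any particular SIEM schema.

```python
# Hypothetical allowlist: which attributes are kept for each in-scope event type.
ATTRIBUTE_ALLOWLIST = {
    "authentication": {"timestamp", "status", "user_id", "src_ip", "service"},
    "web_request": {"timestamp", "status", "src_ip", "url", "user_id"},
}


def trim_event(event):
    """Keep only allowlisted attributes; return None for out-of-scope event types."""
    allowed = ATTRIBUTE_ALLOWLIST.get(event.get("event_type"))
    if allowed is None:
        return None  # this event type was not selected for collection
    trimmed = {key: value for key, value in event.items() if key in allowed}
    trimmed["event_type"] = event["event_type"]
    return trimmed


if __name__ == "__main__":
    raw = {
        "event_type": "authentication",
        "timestamp": "2025-01-10T12:34:56Z",
        "status": "failure",
        "user_id": "admin",
        "src_ip": "203.0.113.5",
        "service": "sshd",
        "debug_trace": "verbose detail nobody queries",  # dropped by the allowlist
    }
    print(trim_event(raw))
```

Widening the allowlist increases context and cost; narrowing it saves capacity but can remove the one field an investigation needs, which is exactly the trade-off described above.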

Defining Log Collection Targets and Scope

Given all this complexity, deciding on log sources, event types that will be retrieved from those sources, and type of event attributes is where knowledge and technology should blend to guide SIEM practitioners. Not every log has the same importance and relevance.

You should select log sources first; then you should decide the event types of the selected log sources. Finally, you should determine which attributes of the selected event types are collected.

Let me elaborate further on the decision factors of log sources.

One criterion SOC teams use when deciding on log sources is their impact on detection, that is, how frequently the logs are used to detect malicious activity. You must consider your organization’s attack surface, the types of threats you are concerned about, and the possible attack vectors. The impact on alert triage, incident response, threat hunting, and business objectives is also important. Ask yourself: “Am I collecting the right data to detect, respond to, investigate, and hunt threats effectively and to achieve business objectives?” Moreover, some log sources provide necessary context for other log sources.

Ease of integration is also essential. Whether logs can be easily integrated into and parsed by the SIEM is among the factors SIEM practitioners consider.

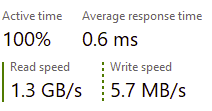

Clearly, you should also consider budget constraints. Most enterprise SIEM components are priced by data velocity in terms of events per second (EPS), the number of users, the sensors deployed, and the assets monitored.
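Because EPS often drives licensing cost, a rough estimate per candidate log source helps when weighing budget against coverage. The following is a back-of-the-envelope sketch: the source names and daily event counts are invented, and `peak_factor` is an assumed multiplier for bursts, not a value from any vendor’s sizing guide.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def average_eps(events_per_day):
    """Average events per second for a given daily event count."""
    return events_per_day / SECONDS_PER_DAY


def estimate_total_eps(daily_counts, peak_factor=1.0):
    """Sum the daily counts of all sources and convert to EPS, scaled for peaks."""
    return average_eps(sum(daily_counts.values())) * peak_factor


if __name__ == "__main__":
    # Invented daily event counts per log source.
    daily_counts = {
        "firewall": 43_200_000,
        "windows_endpoints": 8_640_000,
        "proxy": 864_000,
    }
    for source, count in daily_counts.items():
        print(f"{source}: {average_eps(count):.0f} EPS on average")
    print(f"total: {estimate_total_eps(daily_counts):.0f} EPS on average")
    print(f"total with 2x peak factor: {estimate_total_eps(daily_counts, 2.0):.0f} EPS")
```

Averages hide bursts, so real sizing should be based on measured peaks where they are available.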

Compliance and audit requirements are also crucial for choosing the right data sources. For example, Requirement 10 of the Payment Card Industry Data Security Standard (PCI DSS) is “track and monitor all access to network resources and cardholder data”. GDPR, HIPAA, and FISMA are other regulations that influence the choice of data sources. Requirements of governance and audit frameworks, such as COBIT, ITIL, and COSO, are also important decision factors.

Prioritizing Log Sources

Let’s move to log prioritization using the MITRE ATT&CK framework. Prioritization of data sources takes place in three steps:

1- Focus on the prevalent data sources.

2- Focus on a class of data products, that is, a technology that is prevalent in delivering the data.

3- Focus on the most relevant attack techniques. By detecting the most relevant TTPs, we can identify threats.

We will explain each of these steps.

The MITRE ATT&CK framework helps to identify data sources for techniques. In MITRE ATT&CK, there are about 60 listed data sources. For each technique, MITRE ATT&CK tells us which data sources we need in order to observe it. But we don’t need to consume data from all data sources linked to a technique. As you can see in the screenshot below, a single technique can list many data sources, and using all of them would be very resource-intensive. Instead, we need to prioritize sources.

Mapping data sources to ATT&CK can help you build a deep understanding of adversary behavior and prioritize data sources and logs accordingly. Some data sources turn out to be more efficient than others at detecting adversary techniques in the MITRE ATT&CK framework, so a small set of data sources is enough to detect the majority of known techniques.

These are the most relevant sources:

Process and process command-line monitoring, often collected by Windows event logs and EDR platforms, can help identify almost 70% of MITRE ATT&CK techniques.

File and registry monitoring are other powerful and meaningful data sources. Windows event logs can also provide file and registry monitoring data.

API monitoring.

When it comes to where the data comes from, endpoints are the most prevalent source. You can collect data from network or cloud devices as well, but collecting data from endpoints allows us to cover more than 50% of known techniques, as you can see from the graph.

Collecting logs from endpoints gives broader coverage than the network or cloud. However, this does not mean that network and cloud data sources are not required.
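The idea that a small set of data sources covers most techniques can be explored with a simple greedy ranking: repeatedly pick the source that adds the most not-yet-covered techniques. The sketch below is an assumption-laden illustration. The mapping uses placeholder technique names (`TECH-01` and so on) rather than real ATT&CK data, and `prioritize_sources` is a hypothetical helper; in practice you would load the mapping from the ATT&CK data source listings.

```python
def prioritize_sources(source_to_techniques):
    """Greedy ranking: each step picks the source covering the most uncovered techniques.

    Returns a list of (source, newly_covered_count, cumulative_coverage_ratio).
    """
    remaining = set().union(*source_to_techniques.values())
    total = len(remaining)
    candidates = dict(source_to_techniques)
    covered = 0
    ranking = []
    while remaining and candidates:
        # sorted() makes tie-breaking deterministic (alphabetical order).
        best = max(sorted(candidates), key=lambda s: len(candidates[s] & remaining))
        gain = candidates.pop(best) & remaining
        if not gain:
            break  # no remaining source adds coverage
        remaining -= gain
        covered += len(gain)
        ranking.append((best, len(gain), covered / total))
    return ranking


if __name__ == "__main__":
    # Placeholder mapping for illustration only; not taken from ATT&CK.
    mapping = {
        "process_command_line": {"TECH-01", "TECH-02", "TECH-03", "TECH-04", "TECH-05"},
        "file_monitoring": {"TECH-03", "TECH-06", "TECH-07"},
        "registry_monitoring": {"TECH-04", "TECH-07", "TECH-08"},
        "network_flow": {"TECH-02", "TECH-09"},
    }
    for source, gain, cumulative in prioritize_sources(mapping):
        print(f"{source}: +{gain} techniques, {cumulative:.0%} cumulative coverage")
```

Greedy selection is a heuristic, not an optimal set cover, but it is usually good enough to show where the first few sources stop paying off.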

You can optimize log coverage by considering the most used adversary techniques. Recent studies analyzing thousands of malware samples have found that around 19% of them use process injection techniques. This means that having strong logging and detection systems for process injection could help catch about 19% of malware, improving your security against these threats.

Prioritizing log sources based on security value

Log Management Policies and Procedures

After identifying the requirements for log collection, you must develop standard processes to perform log management.

First, you must define your organization’s logging goals. Then, you must define the mandatory requirements that follow from security needs, regulations, and laws.

You may also define non-mandatory requirements, which cover other data sources that should be logged and analyzed if time and resources permit.

You should also define recommendations for log generation, transmission, storage, analysis, and disposal, and for the preservation of original logs.

Finally, you must define roles and responsibilities for log management processes.

Log Management Challenges

Ensuring Log Data Quality

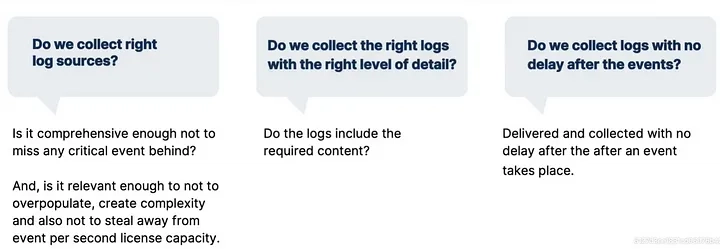

So let’s have a quick look at how we can define good log data. We can identify three criteria for the quality of the data that log management provides:

Whether any logs are missing,

Whether the logs are relevant, and

Whether the logs are delivered to the SIEM without delay.

Let’s look at each of these briefly.

Firstly, the coverage should be comprehensive to ensure all required data from systems, security devices, and others are collected so that no critical events stay out of the SOC visibility.

It is also important not to inflate the logging infrastructure with data that does not add enough context. Doing so creates complexity, performance issues, and storage shortages, and unnecessarily consumes events per second (EPS) license capacity.

Secondly, the level of detail that logs contain is also very important. The SIEM may have received the log, but the event types and event attributes may be insufficient to pinpoint an event. Or, conversely, logging may be at a verbose level that contains unnecessary details.

Thirdly, the timing of a log delivery is a crucial factor in detecting incidents early on. Infrastructural issues or policy configurations may delay the log delivery.

So, to summarize, SIEM practitioners in charge of log management need to make sure that:

they strike the right balance in the number of sources they collect logs from,

logs contain the required detail with no excess information, and

logs are delivered and collected with no delay.
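The timeliness criterion can be measured directly if each event carries both the time it occurred and the time the SIEM ingested it. The sketch below assumes such a pair of fields exists (here called `event_time` and `ingest_time`, which are hypothetical names) and flags sources whose median delay exceeds a threshold; the five-minute default is an arbitrary example value.

```python
from datetime import datetime, timedelta
from statistics import median


def delivery_delays(events):
    """Group delivery delays (ingest time minus event time, in seconds) by source."""
    delays = {}
    for event in events:
        delay = (event["ingest_time"] - event["event_time"]).total_seconds()
        delays.setdefault(event["source"], []).append(delay)
    return delays


def slow_sources(events, max_median_delay=timedelta(minutes=5)):
    """Return the sources whose median delivery delay exceeds the threshold."""
    limit = max_median_delay.total_seconds()
    return sorted(
        source for source, values in delivery_delays(events).items()
        if median(values) > limit
    )


if __name__ == "__main__":
    t0 = datetime(2025, 1, 1, 12, 0, 0)
    # Invented sample events.
    events = [
        {"source": "firewall", "event_time": t0, "ingest_time": t0 + timedelta(seconds=20)},
        {"source": "firewall", "event_time": t0, "ingest_time": t0 + timedelta(seconds=40)},
        {"source": "cloud_api", "event_time": t0, "ingest_time": t0 + timedelta(minutes=12)},
        {"source": "cloud_api", "event_time": t0, "ingest_time": t0 + timedelta(minutes=8)},
    ]
    print(slow_sources(events))
```

The median is used instead of the mean so that a single delayed batch does not flag an otherwise healthy source.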

Navigating Log Collection Challenges

Now I’d like to talk about the challenges of obtaining good data. As you may have guessed, it is not easy, and many factors make it hard to manage logs effectively and keep good data available continuously.

Firstly, SIEMs need to collect logs from various data sources that may be located in on-premises networks, in the data center, or in the cloud. Different platform characteristics and connectivity modes are prone to create log delivery and collection issues.

Another challenge is that, due to network interruptions, API-related issues, software bugs, or configuration errors, log delivery may stop altogether from a particular network segment or endpoint system, and SOC teams may not be aware of it.
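A common safeguard against silently stopped log delivery is a "last seen" check per expected source. The sketch below assumes you maintain an inventory of expected sources and can query the most recent event time per source; the source names, the one-hour threshold, and the `silent_sources` helper are all illustrative assumptions.

```python
from datetime import datetime, timedelta


def silent_sources(expected, last_seen, now, max_silence=timedelta(hours=1)):
    """Return expected sources that never reported or have been quiet for too long."""
    silent = []
    for source in sorted(expected):
        seen = last_seen.get(source)
        if seen is None or now - seen > max_silence:
            silent.append(source)
    return silent


if __name__ == "__main__":
    now = datetime(2025, 1, 1, 12, 0, 0)
    # Invented inventory and last-seen timestamps.
    expected = {"fw-edge", "dc-01", "vpn-gw"}
    last_seen = {
        "fw-edge": now - timedelta(minutes=5),
        "dc-01": now - timedelta(hours=3),
        # "vpn-gw" has never sent a log
    }
    print(silent_sources(expected, last_seen, now))
```

The right silence threshold differs per source: a busy firewall that is quiet for five minutes is suspicious, while a rarely used application may legitimately be silent for a day.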

The last challenge is the size of logs. Statistics show that in enterprise networks, log volume can easily reach 10 terabytes each month. Collecting, storing, analyzing, and normalizing such large volumes also makes log management difficult.

Adapting to Changing Environments

Continuing with the challenges, another important dimension is related to keeping up with internal and external changes.

At any time, a new machine, software, or network device can be turned on and start producing new log data, and new log sources in the network are not easily spotted.

Cloud instances can be launched for a few days or hours and then shut down.

So, SOC teams must be aware of architectural changes, new deployments, new applications, and retiring technologies to keep log management aligned with changes that are handled by network operations, IT security, application teams, DevOps, and others.

Changes in the adversarial landscape can also create blind spots in networks. New attack vectors and attack techniques may require that some log sources, event types, and attributes that were previously excluded be included going forward.

For example, attackers started obfuscating PowerShell commands to evade security controls and stay under the radar. Once this TTP was identified, it became necessary to collect Windows Event ID 4104 logs to detect obfuscated PowerShell commands. Logging should therefore be updated to gain visibility into such new TTPs.

Normalization

And finally, on the challenges, log data is diverse. Systems, applications, and network devices each have their own logs containing different types of data, and a single log source may contain multiple logs. Applications, for example, often have several log files, each containing a different form of data. The contents of logs vary considerably.

Some logs are designed to be read by humans, while others aren’t; some use standard formats, while others use proprietary ones.

Some log formats use commas to separate fields within a single log message, others use spaces, and still others use symbols or other delimiter characters.

Even if two sources record the same values, they may represent them differently.

For example, a date may be formatted as MM/DD/YYYY or as DD/MM/YYYY.

For a human reviewer, parsing dates in different formats might be easy, but consider a POP3 session that one log source records as “POP3” and another records as “110”, the default port number of the POP3 protocol. Recognizing that both values refer to the same thing requires knowledge that has to be built into the normalization process.

Putting all these challenges together, the difficulty of collecting logs, keeping log collection up to date, and normalizing a variety of formats tells us that things can quickly go wrong without SOC teams noticing. Validating the consistency, coverage, and adaptability of the log infrastructure therefore needs to be part of log management processes.
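The date and POP3 examples above can be sketched as two small normalization functions. This is a simplified illustration, not how any specific SIEM normalizes data: the source names in `DATE_FORMATS`, the short port table, and both function names are assumptions made for the example.

```python
from datetime import datetime

# Assumed per-source date formats: one source writes MM/DD/YYYY, the other DD/MM/YYYY.
DATE_FORMATS = {
    "us_firewall": "%m/%d/%Y",
    "eu_proxy": "%d/%m/%Y",
}

# Small lookup of default port numbers to protocol names.
PORT_TO_PROTOCOL = {"21": "FTP", "110": "POP3", "443": "HTTPS"}


def normalize_date(value, source):
    """Parse a date using the format of the given source and return ISO 8601 (YYYY-MM-DD)."""
    return datetime.strptime(value, DATE_FORMATS[source]).date().isoformat()


def normalize_protocol(value):
    """Map a port number or protocol name to one canonical protocol name."""
    value = value.strip()
    return PORT_TO_PROTOCOL.get(value, value.upper())


if __name__ == "__main__":
    # The same raw string means two different days depending on the source.
    print(normalize_date("03/04/2025", "us_firewall"))
    print(normalize_date("03/04/2025", "eu_proxy"))
    # "POP3" and "110" end up as the same normalized value.
    print(normalize_protocol("pop3"), normalize_protocol("110"))
```

Note that the date format cannot be inferred from the value alone, which is why the source has to be known at normalization time.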

Good event normalization example

Bad event normalization example

Log Security and Protection

The last challenge that I want to mention is Log Security and Protection.

First of all, you must ensure that the processes that produce log entries are secured. The integrity of log collectors, configuration files, and other components of log sources must be assured.

You also need to secure log data transfer by using secure mechanisms such as encryption.

Confidentiality and integrity of log files in storage are also crucial. You are required to protect physical logging infrastructure and prevent unauthorized access.

Finally, you have to maintain business continuity.
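As one illustration of protecting the integrity of stored logs, the sketch below chains SHA-256 digests so that changing or removing an earlier line invalidates every later digest. This hash-chain approach is a technique chosen for the example, not something prescribed above, and the function names are hypothetical. It only provides tamper evidence if the latest digest is kept somewhere an attacker cannot rewrite.

```python
import hashlib

GENESIS = "0" * 64  # fixed starting value for the chain


def chain_logs(lines):
    """Return (line, digest) pairs where each digest also covers the previous digest."""
    previous = GENESIS
    chained = []
    for line in lines:
        digest = hashlib.sha256((previous + line).encode("utf-8")).hexdigest()
        chained.append((line, digest))
        previous = digest
    return chained


def verify_chain(chained):
    """Recompute every digest and report whether the stored chain is intact."""
    previous = GENESIS
    for line, digest in chained:
        expected = hashlib.sha256((previous + line).encode("utf-8")).hexdigest()
        if digest != expected:
            return False
        previous = digest
    return True


if __name__ == "__main__":
    records = chain_logs(["user alice logged in", "policy changed", "user alice logged out"])
    print(verify_chain(records))
    # Simulate tampering with the second entry while keeping its old digest.
    records[1] = ("nothing happened", records[1][1])
    print(verify_chain(records))
```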

Log Management and Best Practices

Proactive Log Management

So now we come to the next point, which is proactive log management. This is where building and adopting proactive capabilities can help SOC teams sharpen the knife:

to widen log coverage in a sensible way,

to spot and remove irrelevant log sources to open up capacity,

to identify new log sources against new TTPs with no delay,

to identify shortcomings that can affect the flow of data.

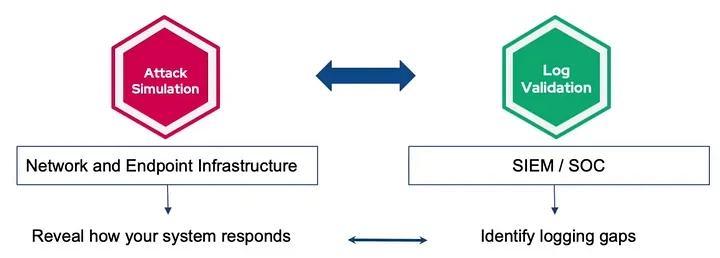

To become proactive, our proposition is to make threat-centric validation part of log management processes.

Suppose an attack simulation can provide a large set of threat samples, automation, and rich context about how the network responds to the simulations. In that case, this insight can be used to pinpoint logging gaps on SIEMs and open up the road to fixing them.

We quite often see that log management involves a lot of assumptions. Those assumptions mislead SIEM admins into believing that logs are being collected properly and that they contain the required details. In most cases, logging problems are identified only after an incident has taken place.

Proactively Validate Log Status for a Particular Threat

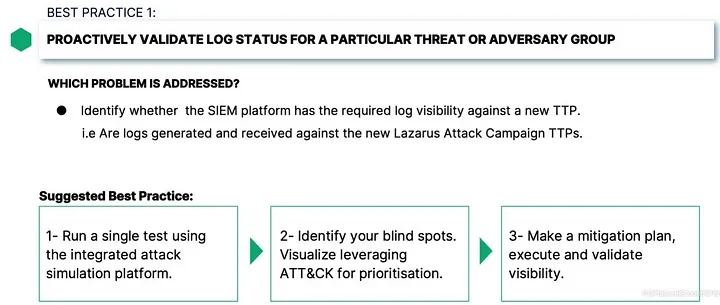

In this last section, I want to share five threat-centric validation best practices for proactive log management, through which SIEM admins can identify logging shortcomings themselves before an incident occurs.

The first best practice is validating log status for a particular threat or an adversary group. It aims to help identify whether a SIEM platform has the required log visibility against a new TTP or TTPs. For example, a SOC analyst may want to check if the SIEM would receive logs related to Lazarus Group’s latest attack campaign TTPs if an actual attack took place.

Suppose that an attack scenario of the Lazarus Group contains 12 unique actions. It starts with system information discovery, continues with system network configuration discovery and system network connections discovery, and goes all the way to C2 over HTTPS on port 443. Using an integrated attack simulation platform, security analysts can safely replicate the Lazarus Group’s malicious actions, identify which of them are detected and which are missed, and compare these findings with the SIEM platform to see whether everything matches or whether there are log visibility gaps that need addressing.

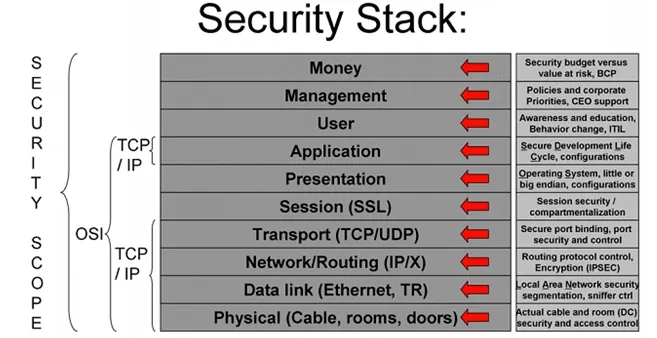

Look for Detection Opportunities in Your Security Stack

The second best practice is related to the detection capabilities of the security stack, which can be Network IPS, WAF, Firewall, endpoint protection, and other defense technologies.

In a scenario where a threat is not detected at the defense level, no logs are generated, and the SOC team has no way of knowing what type of malicious events that particular threat may be generating in the network.

The best practice we suggest here is:

as the first step, frequently or continuously simulate a comprehensive set of threats across the security stack

as the second step, identify detection gaps in the security stack based on these simulations. These two steps reveal threat activities that security teams would not know about if the threat were used in an actual attack.

Outside The Box

Look for Detection Opportunities in Your Infrastructure

For this third best practice, simulate an attack first. If the simulation is not detected, the log source for the TTP used in the simulation can be identified and enabled on the endpoint platform.

As an example, the recent HAFNIUM campaign targeting Microsoft Exchange platforms can be detected if PowerShell Script Block Logging is enabled.

Visualize to Better Understand, Manage, Communicate and Coordinate

The fourth best practice aims to put logging performance in context, for example by mapping it to the MITRE ATT&CK framework. By using attack simulations to identify the MITRE ATT&CK techniques for which no logs are received, security teams can take corrective actions, identify gaps against new threats or variants, and monitor positive and negative changes in log coverage.
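Monitoring positive and negative coverage changes comes down to comparing the set of techniques with logs received in two simulation runs. In the sketch below, the technique IDs are real ATT&CK identifiers (T1082 System Information Discovery, T1016 System Network Configuration Discovery, T1059.001 PowerShell, T1055 Process Injection), but the run results are invented and `coverage_changes` is a hypothetical helper.

```python
def coverage_changes(previous, current):
    """Compare two runs: which techniques gained log coverage and which lost it."""
    previous, current = set(previous), set(current)
    return {
        "gained": sorted(current - previous),
        "lost": sorted(previous - current),
        "unchanged": sorted(previous & current),
    }


if __name__ == "__main__":
    # Invented results: techniques for which the SIEM received logs in each run.
    last_month = {"T1082", "T1016", "T1059.001"}
    this_month = {"T1082", "T1059.001", "T1055"}
    changes = coverage_changes(last_month, this_month)
    print("gained:", changes["gained"])
    print("lost:", changes["lost"])
```

A "lost" entry is the more urgent signal, since it usually points to a configuration change or a broken log source rather than a new threat.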

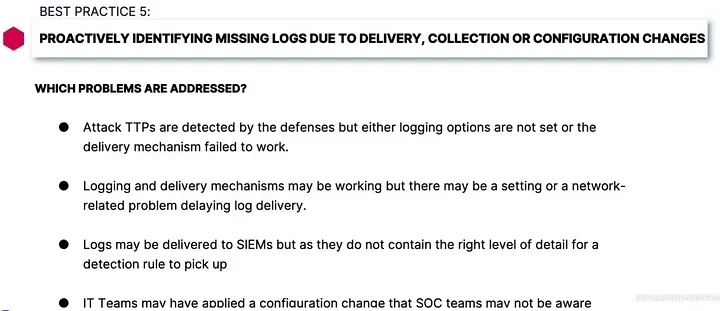

Proactively Identifying Missing Logs Due to Delivery, Collection, or Configuration Changes

The last best practice aims at identifying and fixing log delivery and collection problems.

In this best practice, security controls detected or prevented an attack, generated logs, but due to configuration mistakes, network related delays, API limitations, changes made by the IT teams, and other possible reasons, the SIEM may not have received the log.

The best practice we suggest is to run attack simulations continually or frequently, using an extensive threat sample database, to surface these infrastructure problems. You can then determine the delta between how security controls responded and what the SIEM has seen, and establish a regular validation process.

While the previous best practices aim to optimize and widen logging coverage proactively, this one aims to keep the optimized logging infrastructure up and running.
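The delta between what the security controls logged and what the SIEM received can be computed as a simple set difference. This is a sketch under assumptions: events are represented as dictionaries, and the `simulation_id` and `control` keys are hypothetical correlation fields, not the schema of any specific SIEM.

```python
def find_missing_in_siem(control_events, siem_events):
    """Return events a security control logged that never reached the SIEM."""
    received = {(e["simulation_id"], e["control"]) for e in siem_events}
    return [
        e for e in control_events
        if (e["simulation_id"], e["control"]) not in received
    ]


# Made-up events: what the controls logged versus what the SIEM ingested.
control_events = [
    {"simulation_id": "sim-01", "control": "WAF", "action": "blocked"},
    {"simulation_id": "sim-02", "control": "WAF", "action": "blocked"},
    {"simulation_id": "sim-02", "control": "Network IPS", "action": "detected"},
]
siem_events = [
    {"simulation_id": "sim-01", "control": "WAF"},
    {"simulation_id": "sim-02", "control": "Network IPS"},
]

missing_events = find_missing_in_siem(control_events, siem_events)
for event in missing_events:
    print(f"not in SIEM: {event['simulation_id']} from {event['control']}")
```

Running this comparison after every simulation cycle is one way to turn the validation into a regular process: a non-empty result points to a delivery or collection problem rather than a detection gap.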

Threat-Centric Log Validation with Breach and Attack Simulation (BAS) Tools

Given the complexity of the log management function, SOC practitioners have to deal with all combinations of failures involving malfunctioning log sources, invalid log format, or temporary service disruption while adapting the scope of log collection to the changing adversarial landscape.

Besides many other features, BAS tools offer a threat-centric log validation process that allows SOC teams to proactively and consciously address these challenges by identifying:

links between logging gaps and attack techniques

problems with originating or transferring logs

challenges in identifying log sources and verbosity levels.


In conclusion, the five best practices for proactive log management in SOCs form a robust framework for enhancing threat detection and response capabilities, particularly for technically experienced cybersecurity professionals. Proactively validating log status for specific threats ensures alignment with adversary TTPs, leveraging Windows Event Logs (e.g., Event ID 4688 for process creation, Event ID 4104 for PowerShell script blocks) and network logs for comprehensive coverage, which is critical for detecting advanced persistent threats (APTs) like the Lazarus Group. Assessing the security stack for new detection opportunities involves configuring EDR to record process injection and hollowing (e.g., Sysmon Event ID 8, CreateRemoteThread, or Event ID 25, ProcessTampering) and NIPS for signature-based detection, and reducing false negatives by tuning machine learning models for anomaly detection. Looking for detection opportunities in the IT environment expands visibility to application logs (e.g., IIS 401 errors) and system metrics, which requires parsers like Logstash for integration and correlation rules for cross-source analysis, and enhances early warning capabilities.

Visualizing log coverage using SIEM dashboards (e.g., QRadar’s Ariel Query Language (AQL), Splunk SPL queries, Elasticsearch Query DSL) and the MITRE ATT&CK Navigator maps gaps in techniques like Remote Services (T1021) under the Lateral Movement tactic, facilitating compliance with standards like PCI DSS Requirement 10 and enabling real-time gap analysis for large datasets. Finally, proactively identifying missing logs due to delivery and collection problems involves monitoring drops in events per second (EPS) with SIEM alerts, auditing log forwarders (e.g., Fluentd, syslog over UDP 514), and troubleshooting via packet captures. This protects data integrity against network latency or configuration errors and is crucial for maintaining visibility into obfuscated PowerShell commands (Event ID 4104).

Implementing these practices requires a deep understanding of SIEM query languages, log formats (JSON, CEF), and network protocols, with continuous adaptation to the evolving threat landscape. By integrating attack simulations for validation, tuning security tools for advanced logging, and leveraging visualization for gap analysis, SOCs can build a resilient log management system, ready to face tomorrow’s challenges, ensuring both operational efficiency and compliance with regulatory frameworks.
