Apple Explains How AI-Generated App Store Review Summaries Work in iOS 18.4

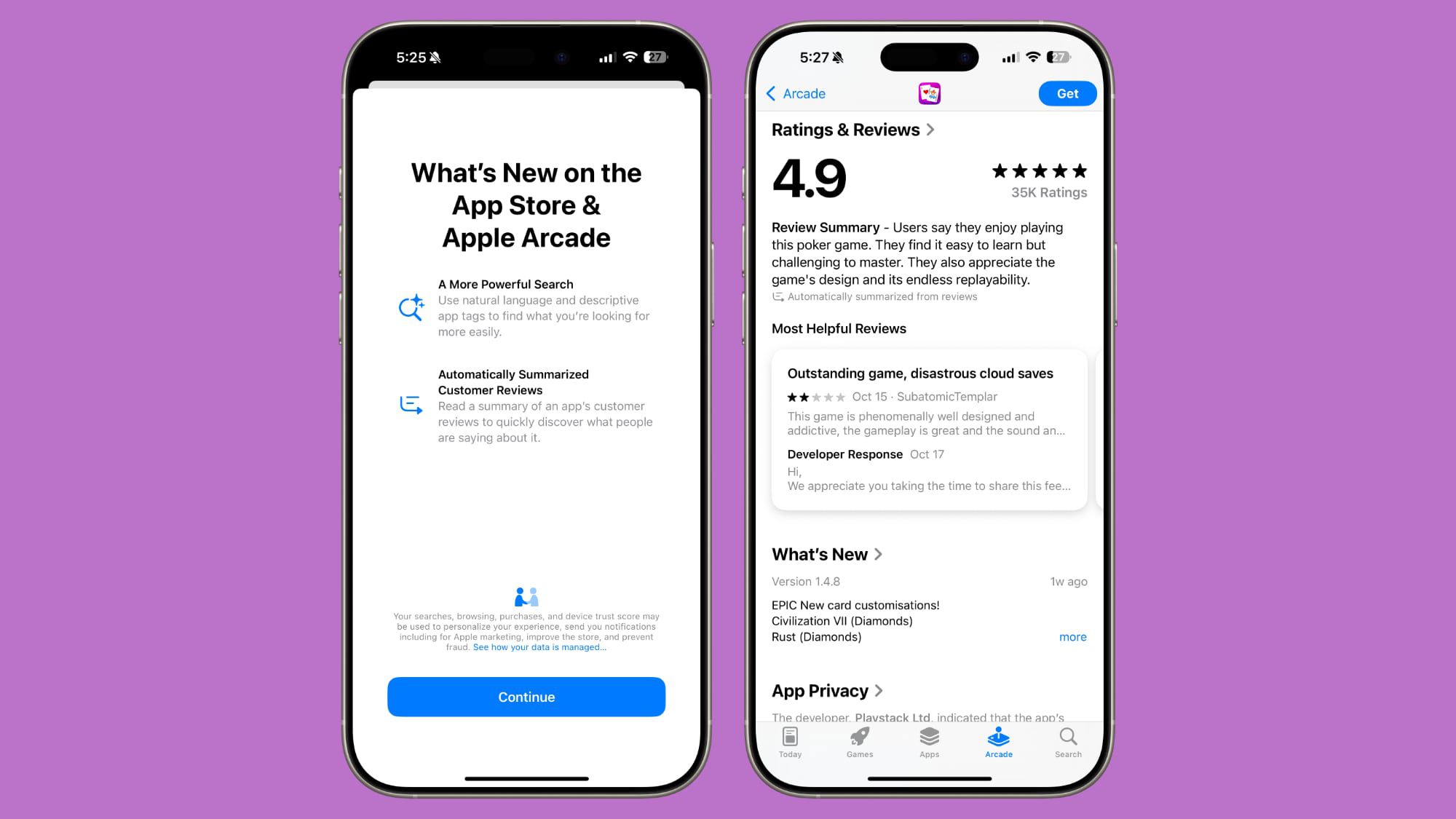

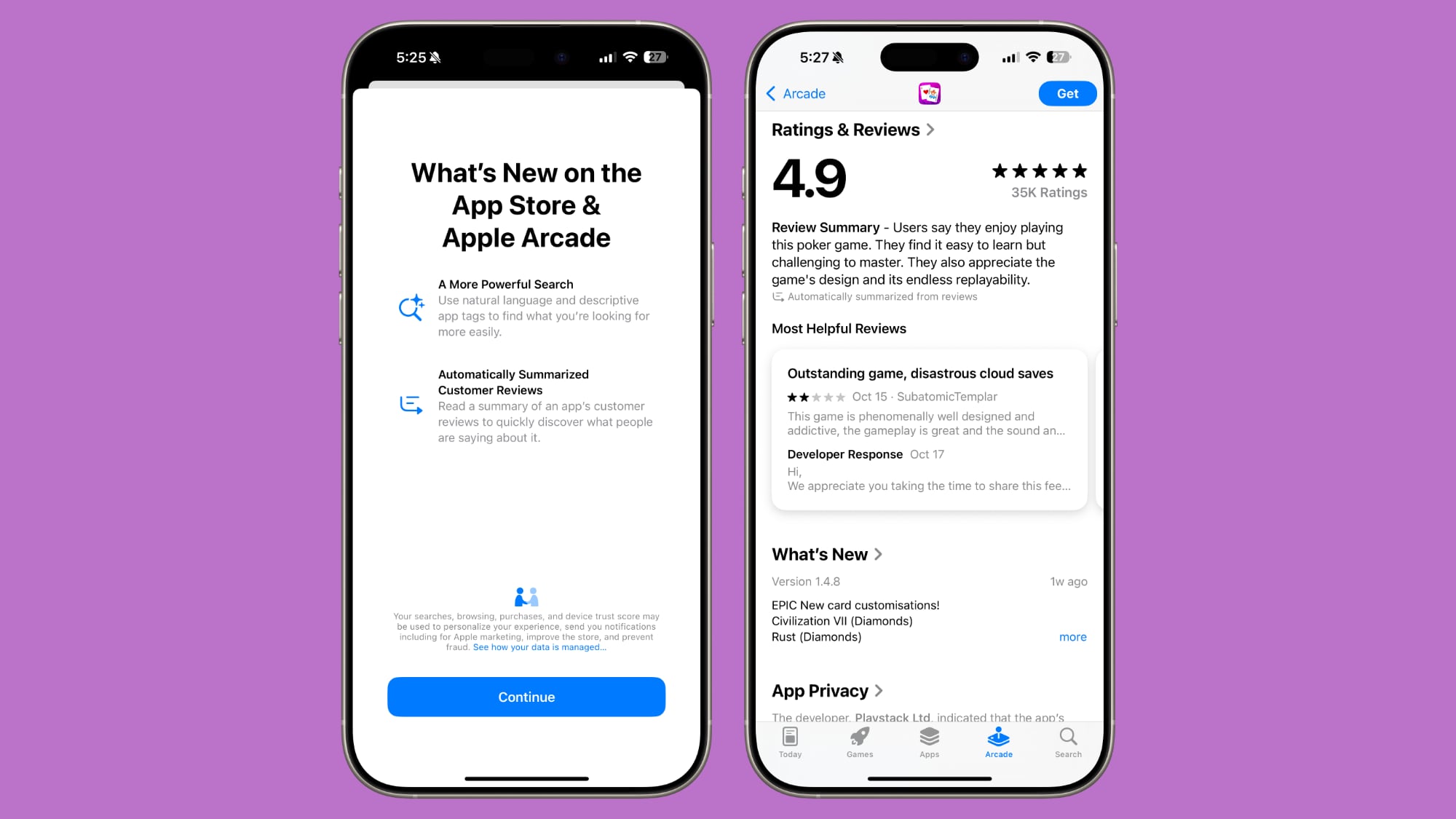

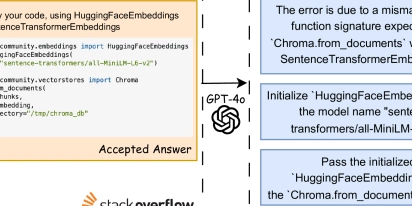

With the launch of iOS 18.4, Apple introduced a new App Store feature that summarizes multiple user reviews to provide an at-a-glance summary of what people think of an app or a game. In a new blog post on its Machine Learning Research blog, Apple provides some detail on how App Store review summaries work.

Apple is using a multi-step large language model (LLM) system to generate the summaries, with the aim of creating overviews that are inclusive, balanced, and accurately reflect the user's voice. Apple says that it prioritizes "safety, fairness, truthfulness, and helpfulness" in its summaries, and it also outlines some of the challenges of aggregating App Store reviews.

With new app releases, features, and bug fixes, reviews can change, so Apple's summaries have to adapt dynamically to stay relevant while also handling both short and long reviews. Some reviews also include off-topic comments or noise, which the LLM needs to filter out.

To begin with, Apple's LLM ignores reviews that contain spam, profanity, or fraud. The remaining reviews are then processed by a sequence of LLM-powered modules that extract key insights from each review, aggregate recurring themes, balance positive and negative takes, and then generate a summary of roughly 100 to 300 characters.
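To make the order of those stages concrete, here is a toy sketch of a filter, extract, aggregate, balance, and generate pipeline. It is not Apple's implementation: Apple says each step uses a specially trained LLM, while this sketch swaps in simple keyword rules. The blocklist, topic lexicon, `Review` type, and function names are all hypothetical, and only the 300-character cap comes from the article.

```python
import re
from collections import Counter
from dataclasses import dataclass

# Hypothetical stand-ins for Apple's fine-tuned LLMs: plain keyword rules.
BLOCKLIST = {"free gems", "click here", "scam"}  # hypothetical spam/fraud markers
TOPIC_KEYWORDS = {  # hypothetical topic lexicon
    "performance": {"slow", "fast", "lag", "smooth"},
    "design": {"design", "interface", "layout"},
    "ads": {"ads", "adverts"},
    "stability": {"crash", "bug", "freeze"},
}
MAX_CHARS = 300  # upper end of the 100-300 character range in the article


@dataclass
class Review:
    text: str
    stars: int  # 1 to 5


def passes_filter(review: Review) -> bool:
    """Step 1: drop reviews containing blocklisted spam/fraud phrases."""
    lowered = review.text.lower()
    return not any(term in lowered for term in BLOCKLIST)


def extract_insights(review: Review) -> list[tuple[str, str]]:
    """Step 2: map one review to (topic, sentiment) pairs; sentiment comes from stars."""
    if review.stars >= 4:
        sentiment = "positive"
    elif review.stars <= 2:
        sentiment = "negative"
    else:
        sentiment = "neutral"
    words = set(re.findall(r"[a-z']+", review.text.lower()))
    return [(topic, sentiment) for topic, kws in TOPIC_KEYWORDS.items() if words & kws]


def aggregate_themes(reviews: list[Review]) -> Counter:
    """Step 3: count how often each (topic, sentiment) pair recurs."""
    counts: Counter = Counter()
    for review in reviews:
        counts.update(extract_insights(review))
    return counts


def balance(themes: Counter, per_side: int = 2) -> tuple[list[str], list[str]]:
    """Step 4: keep the most frequent positive and negative themes side by side."""
    ranked = themes.most_common()
    pos = [topic for (topic, s), _ in ranked if s == "positive"][:per_side]
    neg = [topic for (topic, s), _ in ranked if s == "negative"][:per_side]
    return pos, neg


def summarize(reviews: list[Review]) -> str:
    """Step 5: render a short summary and cap it at MAX_CHARS characters."""
    kept = [r for r in reviews if passes_filter(r)]
    pos, neg = balance(aggregate_themes(kept))
    parts = [f"Summary of {len(kept)} reviews."]
    if pos:
        parts.append("Reviewers praise the app's " + " and ".join(pos) + ".")
    if neg:
        parts.append("Some users report issues with " + " and ".join(neg) + ".")
    text = " ".join(parts)
    if len(text) > MAX_CHARS:
        # Cut at a word boundary and add an ellipsis, staying within the cap.
        text = text[: MAX_CHARS - 1].rsplit(" ", 1)[0] + "…"
    return text


if __name__ == "__main__":
    sample = [
        Review("Smooth and fast, lovely design", 5),
        Review("Great layout, very smooth", 5),
        Review("Constant crash after update, too many ads", 1),
        Review("Click here for free gems!!!", 5),
    ]
    print(summarize(sample))
```

Keeping the negative themes in a separate list from the positive ones is a crude way to mimic the "balancing" step: a frequent complaint still appears even when praise dominates the raw counts. A production system would also aim for a minimum length and phrase the output naturally, which is where an LLM does the real work.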

Apple uses specially trained LLMs for each step in the process, which it says helps ensure that the summaries accurately reflect user sentiment. During development of the feature, human raters reviewed thousands of summaries to assess factors like helpfulness, composition, and safety.

Apple's full blog post goes into more detail on each step of the summary generation process, and it is worth checking out for those who are interested in the way that Apple is approaching LLMs.

This article, "Apple Explains How AI-Generated App Store Review Summaries Work in iOS 18.4" first appeared on MacRumors.com
