Code Your Own Llama 4 LLM from Scratch

Large language models (LLMs) are at the forefront of modern artificial intelligence, enabling applications that can understand and generate human-like language. Meta's latest release, Llama 4, represents a significant advancement in this field, introducing new architectural innovations and capabilities.

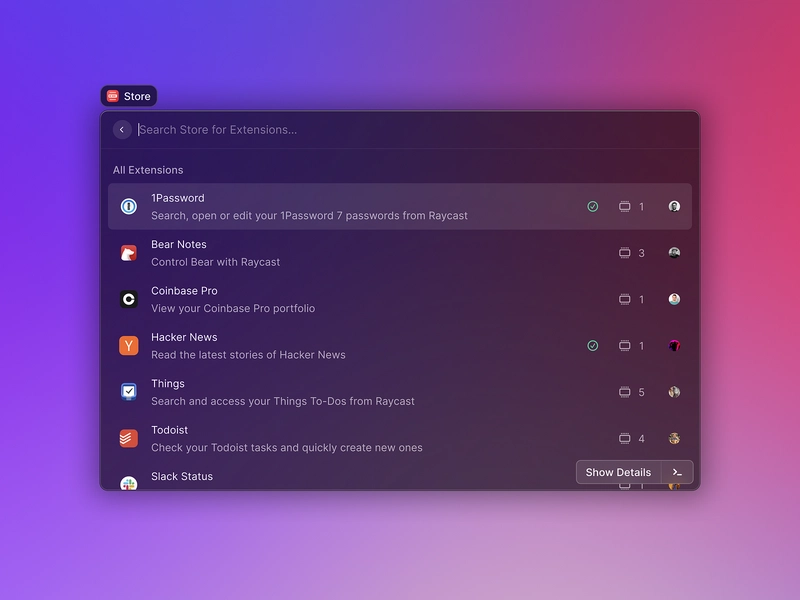

We just published a course on the freeCodeCamp.org YouTube channel, taught by Vuk Roshik, that will teach you how to implement Llama 4 from scratch. This hands-on course breaks down the architecture and components of a modern large language model, guiding you step by step through the process of coding each part. From understanding how language models work to grasping the role of tokens and attention mechanisms, this course offers a detailed look into building a cutting-edge model.

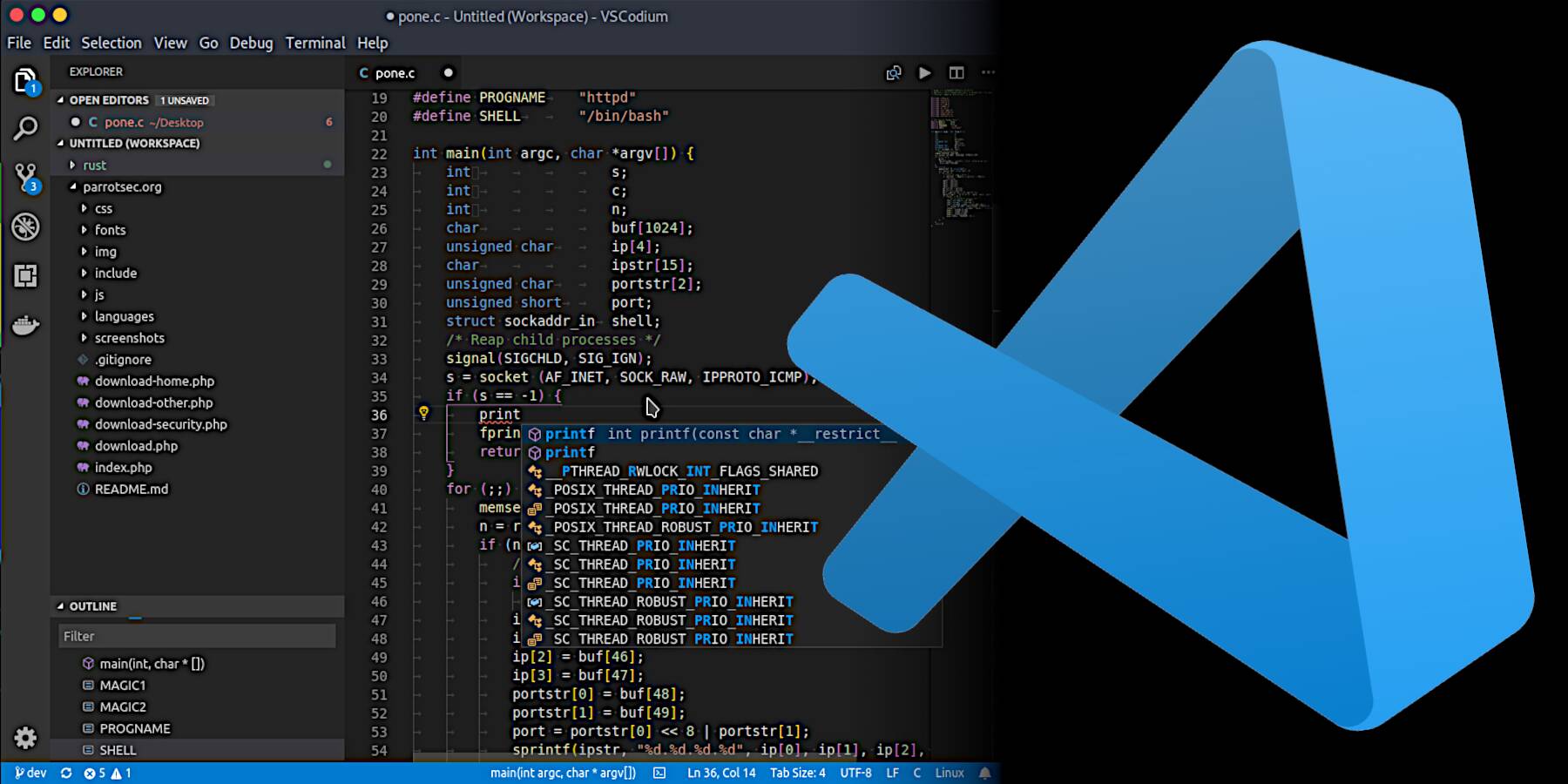

The course begins with an overview of how LLMs function, introducing the concept of tokens. You'll learn how to build a tokenizer, which converts text into these tokens, and understand how models interpret them. The course then delves into the attention mechanism, a core component that allows models to focus on relevant parts of the input when generating output. You'll explore how attention works conceptually and implement it in code.
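To make the two ideas in this paragraph concrete, here is a minimal sketch in plain Python (standard library only). It is not the course's code. `CharTokenizer` is a hypothetical toy character-level tokenizer, whereas real LLMs use subword vocabularies. `causal_attention` is a single-head scaled dot-product attention with a causal mask, so each token can attend only to itself and earlier tokens.

```python
import math


class CharTokenizer:
    """Toy character-level tokenizer: one token ID per distinct character."""

    def __init__(self, corpus: str) -> None:
        vocab = sorted(set(corpus))
        self.stoi = {ch: i for i, ch in enumerate(vocab)}  # char -> ID
        self.itos = {i: ch for ch, i in self.stoi.items()}  # ID -> char

    def encode(self, text: str) -> list[int]:
        return [self.stoi[ch] for ch in text]

    def decode(self, ids: list[int]) -> str:
        return "".join(self.itos[i] for i in ids)


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(q, k, v):
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v are lists of vectors (one per token), each of dimension d.
    Token i may attend only to tokens 0..i.
    """
    d = len(q[0])
    out = []
    for i, qi in enumerate(q):
        # Scaled dot-product scores against positions 0..i only (the causal mask).
        scores = [sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the visible value vectors.
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))])
    return out


tok = CharTokenizer("hello world")
ids = tok.encode("hello")
print(ids)  # [3, 2, 4, 4, 5]
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy embeddings, reused as q, k and v
print(causal_attention(x, x, x)[0])  # [1.0, 0.0]: token 0 can only see itself
```

In a real model, `q`, `k` and `v` come from learned linear projections of the token embeddings rather than being the embeddings themselves; they are reused directly here only to keep the sketch short.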

A significant part of the course is dedicated to Rotary Positional Embeddings (RoPE), a technique that helps models understand the order of tokens in a sequence. You'll learn how RoPE integrates with the attention mechanism and how to implement it effectively. Finally, the course covers the feedforward networks that process the attended information to produce the model's output.
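The core of RoPE can be sketched in a few lines. This minimal version assumes dimensions are rotated in adjacent pairs `(x[2i], x[2i+1])` by the angle `pos * base**(-2i/d)`. The base of 10000 comes from the original RoPE formulation and is an assumption here, since later Llama models use larger bases. The function name `rope` is hypothetical. The demo checks the property that makes RoPE useful inside attention: the query-key dot product depends only on the relative offset between positions, not on their absolute values.

```python
import math


def rope(x: list[float], pos: int, base: float = 10000.0) -> list[float]:
    """Apply a rotary positional embedding to one vector at position `pos`.

    Adjacent pairs (x[2i], x[2i+1]) are rotated by pos * base**(-2i/d).
    """
    d = len(x)
    assert d % 2 == 0, "RoPE needs an even head dimension"
    out = [0.0] * d
    for i in range(d // 2):
        theta = pos * base ** (-2 * i / d)  # lower pairs rotate faster
        c, s = math.cos(theta), math.sin(theta)
        a, b = x[2 * i], x[2 * i + 1]
        out[2 * i] = a * c - b * s  # standard 2-D rotation
        out[2 * i + 1] = a * s + b * c
    return out


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


q = [0.5, -1.0, 2.0, 0.3]
k = [1.0, 0.2, -0.7, 0.9]
# Same relative offset (3) at different absolute positions: scores match.
print(dot(rope(q, 5), rope(k, 2)))
print(dot(rope(q, 12), rope(k, 9)))
```

Because each pair is rotated rather than shifted, vector norms are preserved, and the rotation can be applied to queries and keys just before the attention scores are computed.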

Understanding Llama 4's architecture is crucial for implementing it effectively. Llama 4 introduces a mixture-of-experts (MoE) design, in which the model contains multiple expert networks but only a subset of them is activated for each input token. Because most experts sit idle for any given token, the model's total parameter count can grow without a proportional increase in the compute spent per token. Llama 4 also supports multimodal inputs, meaning it can process both text and images, and has been trained on a diverse dataset that includes publicly available and licensed data.
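The routing idea, together with the feedforward networks mentioned earlier, can be illustrated with a small generic MoE layer. This is a sketch under stated assumptions, not Llama 4's actual layer. Each expert is a SwiGLU-style feedforward network, the form used by earlier Llama models, and carrying it over here is an assumption. A router picks the `top_k` highest-scoring experts per token and mixes their outputs with softmax weights. The class names, expert count, `top_k`, and random weights are all hypothetical. Real Llama 4 layers differ in routing details.

```python
import math
import random


def silu(x: float) -> float:
    return x / (1.0 + math.exp(-x))


def matvec(w, x):
    """Multiply matrix w (list of rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def rand_matrix(rng, rows, cols, scale=0.5):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class SwiGLUExpert:
    """Feedforward expert: w_down(silu(w_gate x) * (w_up x))."""

    def __init__(self, rng, d_model, d_hidden):
        self.w_gate = rand_matrix(rng, d_hidden, d_model)
        self.w_up = rand_matrix(rng, d_hidden, d_model)
        self.w_down = rand_matrix(rng, d_model, d_hidden)

    def __call__(self, x):
        g = matvec(self.w_gate, x)
        u = matvec(self.w_up, x)
        h = [silu(a) * b for a, b in zip(g, u)]  # gated hidden activation
        return matvec(self.w_down, h)


class MoELayer:
    """Routes each token to its top_k experts and mixes their outputs."""

    def __init__(self, d_model=4, d_hidden=8, n_experts=4, top_k=1, seed=0):
        rng = random.Random(seed)
        self.experts = [SwiGLUExpert(rng, d_model, d_hidden) for _ in range(n_experts)]
        self.w_router = rand_matrix(rng, n_experts, d_model)
        self.top_k = top_k
        self.calls = [0] * n_experts  # how often each expert actually ran

    def __call__(self, x):
        logits = matvec(self.w_router, x)
        top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        # Softmax over the selected experts' logits only.
        m = max(logits[i] for i in top)
        exps = {i: math.exp(logits[i] - m) for i in top}
        total = sum(exps.values())
        out = [0.0] * len(x)
        for i in top:  # unselected experts never run for this token
            self.calls[i] += 1
            y = self.experts[i](x)
            out = [o + (exps[i] / total) * yi for o, yi in zip(out, y)]
        return out


layer = MoELayer(top_k=1)
tokens = [[0.1, -0.4, 0.9, 0.2], [1.0, 0.5, -0.3, 0.0], [-0.6, 0.2, 0.1, 0.8]]
outputs = [layer(t) for t in tokens]
print(layer.calls)  # total expert calls == len(tokens) * top_k
```

With `top_k=1`, only one of the four experts runs per token, which is where the efficiency gain described above comes from. All expert weights still have to be stored, though.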

Whether you're a machine learning enthusiast or a developer looking to deepen your understanding of AI, this course offers a unique opportunity to learn how a powerful model like Llama 4 works. Watch the full course on the freeCodeCamp.org YouTube channel (3-hour watch).
