Advancing Robotic Intelligence: A Synthesis of Recent Innovations in Autonomous Systems, Manipulation, and Human-Robot I

This article is part of AI Frontiers, a series exploring groundbreaking computer science and artificial intelligence research from arXiv. We summarize key papers, demystify complex concepts in machine learning and computational theory, and highlight innovations shaping our technological future.

Introduction to Robotics Research: Current State and Significance

Robotics represents one of the most dynamic and rapidly evolving fields within computer science, sitting at the intersection of artificial intelligence, mechanical engineering, electrical engineering, and computer vision. This interdisciplinary domain focuses on developing autonomous systems capable of sensing their environment, making decisions, and taking physical actions in the real world. This synthesis examines seventeen cutting-edge papers published in May 2025, representing the forefront of innovation in robotics research. These works collectively demonstrate significant advancements in autonomous driving, manipulation capabilities, human-robot interaction, and specialized applications across various domains.

The significance of these developments extends far beyond academic interest. As we progress further into the 21st century, robots are increasingly becoming integrated into daily life. Autonomous vehicles are beginning to transform transportation systems, industrial robots continue to revolutionize manufacturing processes, and service robots are finding new applications in healthcare, retail, and domestic settings. The innovations represented in these papers have the potential to accelerate this integration, making robots more capable, more reliable, and better able to collaborate with humans in shared environments.

These advancements are occurring within a broader technological context that enables rapid progress in robotics. Improvements in machine learning, particularly reinforcement learning and large language models, are providing new approaches to robot control and decision-making. Enhancements in sensor technology and computer vision are expanding robots' ability to perceive and understand their environments. Developments in actuator design and control systems are increasing the range of physical actions that robots can perform reliably and efficiently.

Concurrently, there is growing recognition of the importance of human factors in robotics. Many of the papers discussed herein address not just the technical capabilities of robots but also their ability to interact with humans, explain their decisions, and adapt to human preferences and behaviors. This reflects a shift in the field toward viewing robots not as isolated technical systems but as participants in complex socio-technical environments where collaboration with humans is often essential.

Major Themes in Contemporary Robotics Research

Integration of Foundation Models with Traditional Robotics Approaches

A prominent theme emerging from the analyzed papers is the integration of foundation models, particularly large vision-language models (LVLMs), with traditional robotics approaches. This represents a significant shift in how robotics systems are designed and implemented. Traditionally, robotics has relied on modular pipelines with separate components for perception, planning, and control. However, several papers demonstrate the potential of leveraging pre-trained foundation models to create more unified and capable systems.

Liu et al. (2025) introduce X-Driver, an explainable autonomous driving system that uses vision-language models to interpret road scenes, reason about driving decisions, and provide natural language explanations for its actions. Similarly, Atsuta et al. (2025) present a framework that combines large vision-language models with model predictive control for autonomous driving, creating a system that is both task-scalable and safety-aware. Liu et al. (2025) take this approach further with DSDrive, distilling knowledge from large language models into more compact architectures suitable for real-time deployment in autonomous vehicles.

This theme represents a convergence of two previously distinct approaches to artificial intelligence. Foundation models, trained on vast datasets using self-supervised learning, bring broad knowledge and flexible reasoning capabilities but have traditionally lacked the precision and reliability required for robotics applications. Traditional robotics approaches, based on explicit modeling and control theory, offer reliability and safety guarantees but have struggled with the complexity and ambiguity of real-world environments. The papers suggest that by combining these approaches, researchers are beginning to achieve systems that have both the flexibility of foundation models and the reliability of traditional robotics methods.

Advancement of Bimanual and Dexterous Manipulation

Another significant theme is the advancement of bimanual and dexterous manipulation capabilities in robotics. Three papers focus specifically on enabling robots to perform complex manipulation tasks with two arms or with dexterous hands. Li et al. (2025) present SYMDEX, a reinforcement learning framework that leverages morphological symmetry to enable ambidextrous bimanual manipulation. Jiang et al. (2025) introduce an integrated real-time motion-contact planning and tracking framework for robust in-hand manipulation. Liu et al. (2025) propose D-CODA, a diffusion-based method for augmenting data in dual-arm manipulation scenarios.

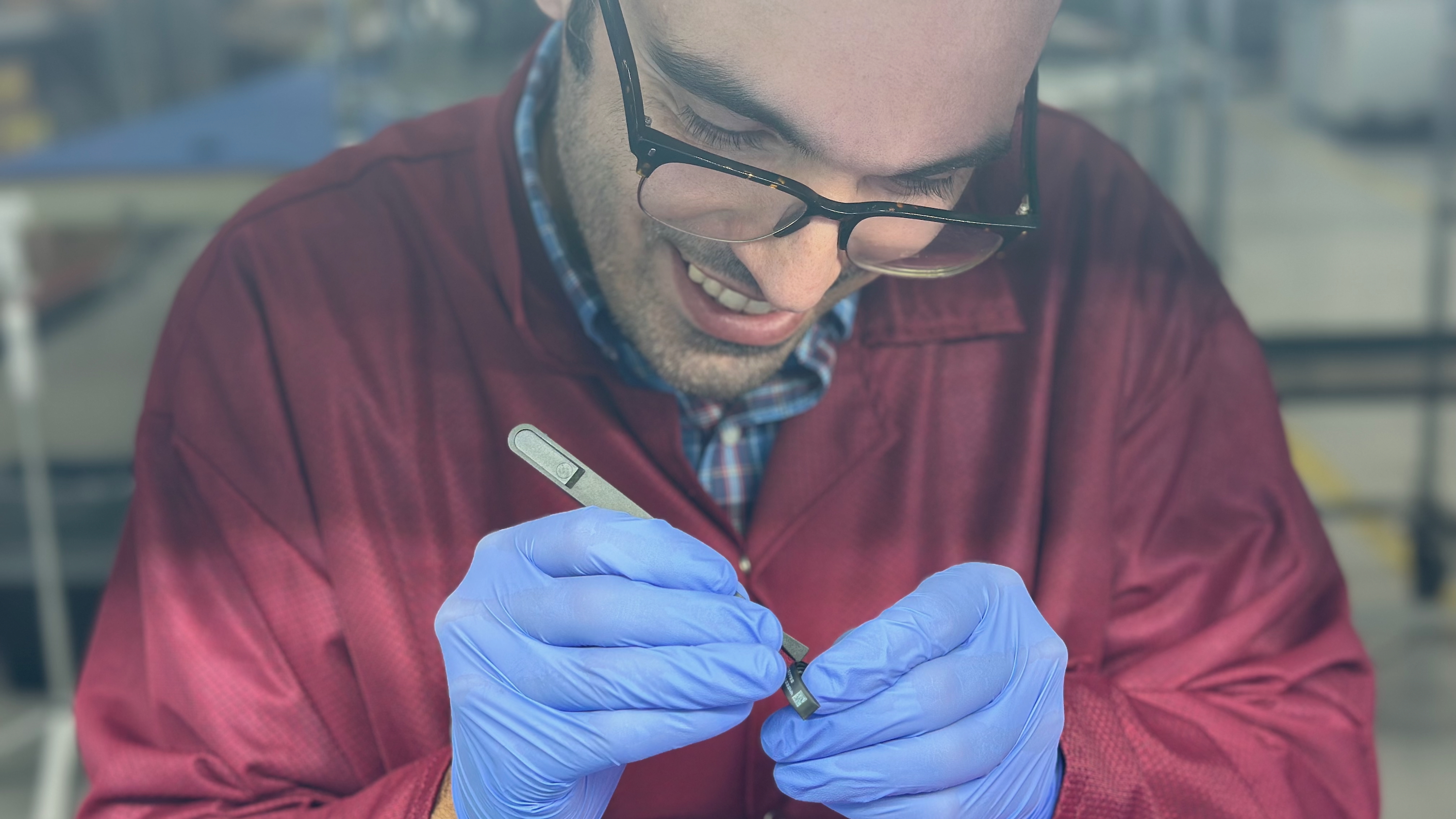

This focus on bimanual and dexterous manipulation reflects a push toward enabling robots to perform tasks that have traditionally required human-level dexterity. Many everyday activities, from preparing food to assembling furniture, involve coordinated use of both hands, often with one hand stabilizing an object while the other performs more precise manipulations. By developing robots capable of similar coordination, researchers are expanding the range of tasks that robots can perform in both industrial and domestic settings.

The approaches presented in these papers are particularly noteworthy for their emphasis on learning-based methods, which allow robots to acquire complex manipulation skills through experience rather than explicit programming. This represents a shift from traditional approaches that relied on precise geometric models and hand-crafted control strategies toward more adaptive and generalizable methods based on machine learning.

Personalization and Adaptability of Autonomous Systems

A third major theme is the personalization and adaptability of autonomous systems. Several papers focus on enabling robots to adapt their behavior based on individual preferences, environmental conditions, or specific task requirements. Surmann et al. (2025) present a multi-objective reinforcement learning approach for personalizing autonomous driving experiences based on individual preferences. De Groot et al. (2025) describe a vehicle system that includes remote human operation capabilities, allowing for flexible transitions between autonomous and human-controlled operation. Kobayashi (2025) introduces CubeDAgger, an approach to interactive imitation learning that improves robustness without violating dynamic stability constraints.

This theme reflects a recognition that robots must be able to adapt to the diverse and changing environments in which they operate. Rather than designing robots with fixed behaviors, researchers are increasingly focusing on creating systems that can learn from experience and adjust their actions based on context. This adaptability is crucial for robots that need to operate in human environments, where conditions can change unpredictably and where different users may have different preferences or requirements.

The approaches presented in these papers demonstrate various ways of achieving adaptability, from reinforcement learning methods that optimize for multiple objectives to interactive learning approaches that allow humans to guide robot behavior through demonstration or feedback. This represents a shift from traditional approaches that emphasized robustness through simplification and control toward more flexible and responsive systems that can handle complexity and variability.

Domain-Specific Robotics Applications

A fourth theme is the development of domain-specific robotics applications, particularly in agriculture and construction. Kuang et al. (2025) present a coverage path planning approach for micro air vehicles in dispersed and irregular plantations. Lin et al. (2025) propose an algorithm for delimiting areas of interest for swing-arm troweling robots in construction. Thayananthan et al. (2025) introduce CottonSim, a simulation environment for autonomous cotton picking.

This theme highlights how robotics research is increasingly being tailored to address challenges in specific industries. Agriculture and construction are particularly promising domains for robotics applications, as they involve physically demanding tasks that are often performed in challenging environments. By developing robots specifically designed for these domains, researchers are addressing practical challenges while also advancing the broader field of robotics.

The papers demonstrate various approaches to domain-specific robotics, from specialized algorithms for particular tasks to simulation environments that enable the development and testing of robotic systems for specific applications. This represents a maturation of the field, with researchers moving beyond generic platforms and algorithms toward solutions that are optimized for particular use cases and environments.

Explainability and Interpretability in Robotics Systems

The fifth major theme is the emphasis on explainability and interpretability in robotics systems. As robots become more autonomous and are deployed in more complex environments, there is growing recognition of the importance of making their decision-making processes transparent and understandable to humans. Liu et al. (2025) focus explicitly on explainable autonomous driving with X-Driver, using vision-language models to generate natural language explanations for driving decisions. Similarly, the LVLM-MPC collaboration framework presented by Atsuta et al. (2025) includes mechanisms for explaining the system's actions in terms understandable to humans.

This focus on explainability reflects both practical and ethical considerations. From a practical perspective, explanations can help users understand and predict robot behavior, facilitating more effective human-robot collaboration. They can also aid in debugging and improving robotic systems by making it easier to identify the causes of failures or suboptimal performance. From an ethical perspective, explainability is increasingly seen as a requirement for autonomous systems that make decisions with significant consequences for human safety or well-being.

The approaches presented in these papers demonstrate how recent advances in natural language processing and multimodal learning can be leveraged to create robots that not only act intelligently but can also communicate the reasoning behind their actions. This represents a shift from treating robots as black-box systems toward more transparent and communicative agents that can explain their behavior in human-understandable terms.

Methodological Approaches in Robotics Research

Reinforcement Learning for Robot Control and Adaptation

Reinforcement learning (RL) emerges as a dominant methodology across multiple papers. This approach involves training agents to make sequences of decisions by providing rewards for desired outcomes, allowing them to learn optimal policies through trial and error. RL is applied in various contexts, from Surmann et al.'s (2025) work on personalized autonomous driving to Li et al.'s (2025) research on ambidextrous bimanual manipulation.

The strength of reinforcement learning lies in its ability to learn complex behaviors without requiring explicit programming of every action. By specifying rewards rather than behaviors, researchers can allow robots to discover solutions that might not be obvious to human designers. RL is particularly well-suited to tasks where the optimal behavior is difficult to specify directly but where success can be clearly measured. Additionally, RL can adapt to changing conditions, allowing robots to optimize their behavior based on feedback from the environment.

However, reinforcement learning also faces significant limitations. One major challenge is sample efficiency: RL typically requires many interactions with the environment to learn effective policies, which can be impractical for real-world robotics applications where data collection is costly or time-consuming. Another challenge is the specification of reward functions that lead to desired behaviors without unintended consequences. Poorly designed rewards can result in policies that optimize for the specified metric while violating implicit constraints or expectations.

The papers demonstrate various approaches to addressing these challenges. Li et al.'s (2025) SYMDEX framework improves sample efficiency by leveraging structural symmetry, allowing experience from one arm to benefit learning for the opposite arm. Surmann et al. (2025) address the challenge of reward specification by using multi-objective reinforcement learning, which allows for explicit consideration of multiple, potentially competing objectives.
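To make the multi-objective idea concrete, the following is a minimal sketch of linear scalarization, one common way to turn a vector of per-objective rewards into a single training signal. It is not taken from Surmann et al. (2025); the objective names, the weights, and the `scalarize` helper are all hypothetical, and the paper's actual formulation may differ.

```python
from typing import Sequence


def scalarize(rewards: Sequence[float], weights: Sequence[float]) -> float:
    """Collapse per-objective rewards into one scalar using preference weights.

    Weights are normalized so that only their relative size matters.
    """
    if len(rewards) != len(weights):
        raise ValueError("rewards and weights must have the same length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(r * w for r, w in zip(rewards, weights)) / total


# Hypothetical objectives for one driving step: (comfort, speed, efficiency).
step_rewards = (0.8, 0.2, 0.5)

# Two hypothetical user profiles weight the same step differently.
cautious = scalarize(step_rewards, (0.7, 0.1, 0.2))
sporty = scalarize(step_rewards, (0.1, 0.8, 0.1))
```

Here the same driving step scores higher for the comfort-oriented profile than for the speed-oriented one, which is the mechanism by which a preference vector can steer a single policy toward different driving styles.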

Large Vision-Language Models for Perception and Decision-Making

The use of large vision-language models (LVLMs) for robotics applications represents another significant methodological approach. These models, trained on vast datasets of images and text, can understand and generate natural language descriptions of visual scenes. LVLMs are applied in papers like Liu et al.'s (2025) X-Driver and Atsuta et al.'s (2025) LVLM-MPC collaboration framework, where they are used to interpret visual information and guide decision-making in autonomous driving.

The strength of LVLMs lies in their ability to leverage pre-trained knowledge from large datasets, enabling them to understand diverse visual scenarios without task-specific training. They can also provide natural language explanations for their interpretations and decisions, enhancing the interpretability of robotic systems. Additionally, LVLMs can perform complex reasoning about visual scenes, potentially enabling more sophisticated decision-making in ambiguous or novel situations.

However, LVLMs also face limitations in robotics applications. One challenge is computational intensity: these models typically require significant computational resources, which can be a constraint for real-time robotics applications. Another challenge is grounding: while LVLMs can generate plausible descriptions and explanations, ensuring that these accurately reflect the physical reality and constraints of the robot's environment is non-trivial.

The papers demonstrate various approaches to addressing these challenges. Liu et al.'s (2025) DSDrive addresses computational constraints through knowledge distillation, transferring capabilities from larger models to more compact ones suitable for real-time deployment. Atsuta et al. (2025) address grounding and safety concerns by integrating LVLMs with model predictive control, which provides explicit physical constraints and safety guarantees.
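As an illustration of knowledge distillation in general, the sketch below computes the classic soft-label loss: the KL divergence between temperature-softened teacher and student distributions. This is the textbook formulation, not DSDrive's training objective, which is not described here; the function names and the example logits are assumptions.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over logits divided by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    norm = sum(exps)
    return [e / norm for e in exps]


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Hypothetical logits over three candidate driving actions.
teacher = [2.0, 0.5, -1.0]
matched = distillation_loss(teacher, teacher)
mismatched = distillation_loss([-1.0, 0.5, 2.0], teacher)
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, so minimizing it pushes the compact model toward the larger model's behavior.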

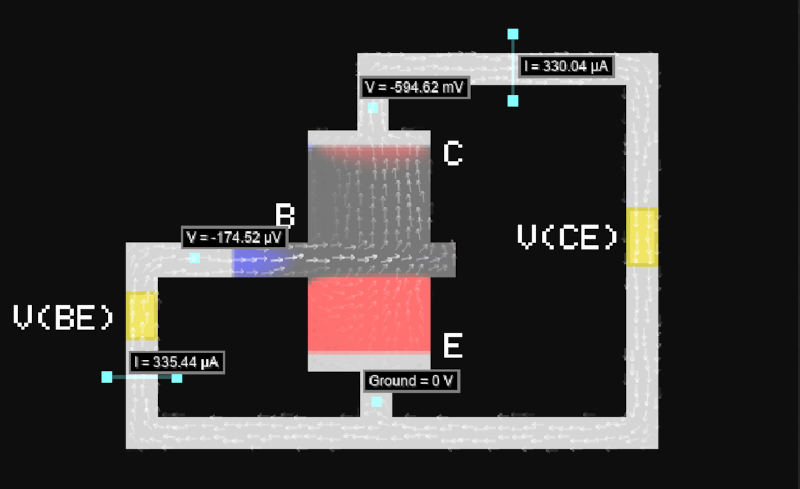

Model Predictive Control for Safe and Efficient Robot Operation

Model predictive control (MPC) is another prominent methodological approach, involving predicting future states based on a model of the system and optimizing control inputs to achieve desired outcomes while respecting constraints. MPC is applied in papers like Kojima et al.'s (2025) work on collision avoidance and Jiang et al.'s (2025) research on in-hand manipulation.

The strength of MPC lies in its ability to handle complex dynamics and constraints in a principled way. By explicitly modeling the system and optimizing over a future horizon, MPC can anticipate and avoid problematic states while achieving desired objectives. MPC is particularly well-suited to tasks where safety constraints are critical, as these can be explicitly incorporated into the optimization problem.
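The receding-horizon loop described above can be sketched on a toy problem. The example below is a minimal illustration, assuming a one-dimensional point mass, a small discrete set of accelerations, and brute-force enumeration in place of a real optimizer. None of these choices come from the papers discussed, and all names and constants are hypothetical.

```python
from itertools import product
from typing import Tuple

State = Tuple[float, float]   # (position, velocity)
ACTIONS = (-1.0, 0.0, 1.0)    # candidate accelerations (assumed)
DT = 0.1                      # time step in seconds (assumed)


def step(state: State, accel: float) -> State:
    """Model of the system: a point mass integrated with semi-implicit Euler."""
    pos, vel = state
    vel = vel + accel * DT
    pos = pos + vel * DT
    return (pos, vel)


def mpc_action(state: State, target: float,
               horizon: int = 5, v_max: float = 1.0) -> float:
    """Return the first action of the cheapest feasible action sequence.

    Sequences that violate the velocity limit are discarded, which is how
    the hard constraint enters the optimization. If no sequence is
    feasible, the function falls back to zero acceleration.
    """
    best_cost, best_first = float("inf"), 0.0
    for seq in product(ACTIONS, repeat=horizon):
        s, cost, feasible = state, 0.0, True
        for a in seq:
            s = step(s, a)
            if abs(s[1]) > v_max:
                feasible = False
                break
            cost += (s[0] - target) ** 2 + 0.01 * a ** 2
        if feasible and cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first


def run(start: State, target: float, steps: int = 100) -> State:
    """Closed loop: re-plan at every step and apply only the first action."""
    s = start
    for _ in range(steps):
        s = step(s, mpc_action(s, target))
    return s
```

Calling `mpc_action((0.0, 1.0), 10.0)` shows the constraint at work: the target is far ahead, but the velocity is already at the limit, so every sequence that starts by accelerating is rejected and the controller coasts instead.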

However, MPC also faces limitations. One challenge is computational complexity: solving the optimization problems involved in MPC can be computationally intensive, particularly for systems with complex dynamics or constraints. Another challenge is model accuracy: MPC relies on a model of the system, and its performance can degrade if this model is inaccurate or if the system dynamics change over time.

The papers demonstrate various approaches to addressing these challenges. Kojima et al. (2025) address computational complexity by reformulating collision avoidance constraints in a more tractable form, enabling real-time performance even in complex environments. Jiang et al. (2025) address model accuracy concerns by combining high-level planning with low-level tracking, allowing the system to adapt to discrepancies between the model and the actual system dynamics.

Imitation Learning and Learning from Demonstration

Imitation learning, which involves teaching robots to perform tasks by demonstrating the desired behavior, is another significant methodological approach. Imitation learning is applied in papers like Liang et al.'s (2025) work on Continuous Latent Action Models and Kobayashi's (2025) research on CubeDAgger.

The strength of imitation learning lies in its intuitive interface for teaching robots new skills. Instead of programming explicit rules or designing reward functions, users can simply demonstrate the desired behavior, making robot programming more accessible to non-experts. Imitation learning can also capture subtle aspects of task execution that might be difficult to specify explicitly, such as the appropriate timing or style of movements.

However, imitation learning also faces limitations. One challenge is the need for demonstrations, which can be costly or time-consuming to collect, particularly for complex tasks. Another challenge is generalization: robots trained with imitation learning may struggle to handle situations that differ significantly from those seen in the demonstrations.

The papers demonstrate various approaches to addressing these challenges. Liang et al. (2025) address the demonstration challenge by learning from unlabeled video demonstrations, reducing the need for costly action-labeled data. Kobayashi (2025) addresses generalization concerns by combining imitation learning with interactive feedback, allowing the robot to improve beyond the initial demonstrations while maintaining stability guarantees.

Simulation and Domain Adaptation for Robust Deployment

Simulation and domain adaptation, which involve developing and training robotic systems in simulated environments before deploying them in the real world, represent another important methodological approach. This approach is applied in papers like Thayananthan et al.'s (2025) CottonSim and Song et al.'s (2025) "City that Never Settles" dataset.

The strength of simulation-based approaches lies in their ability to generate large amounts of data without the cost and risk associated with real-world data collection. Simulations can also enable systematic evaluation under controlled conditions, allowing for more rigorous testing of robotic systems. Additionally, simulations can model scenarios that would be difficult or dangerous to create in the real world, such as rare failure cases or extreme environmental conditions.

However, simulation-based approaches also face limitations. The most significant challenge is the reality gap, that is, the discrepancy between simulated and real-world physics, sensors, and environments. Robots trained purely in simulation may struggle when deployed in the real world due to these discrepancies. Another challenge is the computational cost of high-fidelity simulation, which can be significant for complex environments or physics-based interactions.

The papers demonstrate various approaches to addressing these challenges. Thayananthan et al. (2025) address domain-specific simulation challenges by creating a specialized environment for cotton picking, incorporating relevant aspects of the task and environment. Song et al. (2025) address the challenge of modeling environmental changes over time by creating a dataset that specifically captures structural changes in urban environments, enabling more robust evaluation of place recognition algorithms.

Key Findings and Comparative Analysis

Effectiveness of Integrating Vision-Language Models with Control Methods

One of the most significant findings is the demonstrated effectiveness of integrating large vision-language models with traditional control methods for autonomous driving. Liu et al.'s (2025) X-Driver framework shows superior closed-loop performance compared to traditional modular pipelines, while also providing natural language explanations for its driving decisions. Similarly, Atsuta et al.'s (2025) LVLM-MPC collaboration framework demonstrates how large vision-language models can be effectively integrated with model predictive control to create a system that is both task-scalable and safety-aware.

These findings suggest a path forward for addressing one of the fundamental challenges in autonomous driving: balancing the flexibility needed to handle diverse and unpredictable environments with the reliability and safety guarantees required for deployment in the real world. By leveraging the broad knowledge and reasoning capabilities of large vision-language models while maintaining the safety constraints of traditional control methods, these approaches offer a promising direction for the next generation of autonomous driving systems.

Across these papers, the reported results suggest that such integrated approaches can outperform both pure learning-based methods, which may lack safety guarantees, and pure control-based methods, which may struggle with complex or ambiguous scenarios. The combination of foundation models with traditional control techniques represents a significant advancement in the state of the art for autonomous driving systems.

Learning from Unlabeled Demonstrations for Robot Skill Acquisition

Another key finding comes from Liang et al.'s (2025) work on Continuous Latent Action Models (CLAM) for robot learning from unlabeled demonstrations. Their approach addresses a fundamental limitation in robotics: the need for large amounts of costly action-labeled expert demonstrations. By learning continuous latent action labels in an unsupervised way from video demonstrations and jointly training an action decoder, CLAM achieves a 2-3x improvement in task success rate compared to previous state-of-the-art methods.

This finding is significant because it could dramatically reduce the data collection burden for teaching robots new tasks. Instead of requiring precisely labeled demonstrations with exact action sequences, CLAM allows robots to learn from unlabeled video demonstrations, which are much easier to collect. This could accelerate the deployment of robots in various applications by making it faster and more cost-effective to teach them new skills.

Comparative analysis shows that CLAM outperforms previous approaches to learning from demonstration, which typically required either large amounts of labeled data or struggled to capture the continuous and nuanced nature of physical manipulation. The use of continuous latent actions rather than discrete representations enables more precise and natural robot movements, particularly for fine-grained manipulation tasks.

Leveraging Morphological Symmetry for Efficient Bimanual Manipulation

A third key finding emerges from Li et al.'s (2025) work on morphologically symmetric reinforcement learning for ambidextrous bimanual manipulation (SYMDEX). By decomposing complex bimanual tasks into per-hand subtasks and leveraging equivariant neural networks, their method enables experience from one arm to be inherently leveraged by the opposite arm. This approach not only improves sample efficiency but also enhances robustness and generalization across diverse manipulation tasks.
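The symmetry idea can be illustrated with a simpler stand-in technique: mirroring recorded experience so that data gathered with one arm also serves the other. SYMDEX itself is described as using equivariant neural networks, which build the symmetry into the policy. The sketch below is data augmentation instead, and its `Transition` layout, axis convention, and function names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Transition:
    """One recorded step for a two-arm robot (hypothetical layout)."""
    left_pos: Vec3
    right_pos: Vec3
    left_action: Vec3
    right_action: Vec3
    reward: float


def mirror_vec(v: Vec3) -> Vec3:
    """Reflect a vector across the robot's x-z plane by flipping lateral y."""
    x, y, z = v
    return (x, -y, z)


def mirror_transition(t: Transition) -> Transition:
    """Swap the arms and reflect their vectors.

    Assumes the task reward is unchanged under the reflection, which only
    holds for tasks that are themselves left-right symmetric.
    """
    return Transition(
        left_pos=mirror_vec(t.right_pos),
        right_pos=mirror_vec(t.left_pos),
        left_action=mirror_vec(t.right_action),
        right_action=mirror_vec(t.left_action),
        reward=t.reward,
    )


sample = Transition(
    left_pos=(0.3, 0.2, 0.5),
    right_pos=(0.3, -0.4, 0.5),
    left_action=(0.0, 0.1, 0.0),
    right_action=(0.1, 0.0, -0.1),
    reward=1.0,
)
mirrored = mirror_transition(sample)
```

Each real transition yields one extra mirrored transition at no data-collection cost, which conveys the intuition behind the sample-efficiency gain. An equivariant network achieves the same effect structurally rather than by duplicating data.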

This finding is significant because it demonstrates how structural properties of robots, such as bilateral symmetry, can be explicitly incorporated as inductive biases in learning algorithms to improve performance. By exploiting symmetry, SYMDEX allows robots to learn manipulation skills more efficiently and to generalize these skills across different configurations, potentially enabling more flexible and adaptable manipulation capabilities.

Comparative analysis indicates that SYMDEX outperforms conventional reinforcement learning approaches that do not exploit structural symmetry, particularly on complex tasks where the left and right hands perform different roles. The approach also demonstrates scalability to more complex robotic systems, such as four-arm manipulation, where symmetry-aware policies enable effective multi-arm collaboration and coordination.

Integrated Motion-Contact Planning for Robust In-Hand Manipulation

A fourth key finding comes from Jiang et al.'s (2025) integrated real-time motion-contact planning and tracking framework for in-hand manipulation. Their hierarchical approach, which combines contact-implicit model predictive control at the high level with concurrent tracking of motion and force references at the low level, enables robust manipulation even under appreciable external disturbances. The successful completion of five challenging tasks in real-world environments demonstrates the practical applicability of this approach.

This finding is significant because in-hand manipulation, the ability to reorient and reposition objects within a robot's grasp, has long been a challenging problem in robotics. The approach presented by Jiang et al. (2025) addresses this challenge by explicitly modeling and controlling both the motion of the object and the contact forces between the robot and the object. This could enable more dexterous and reliable manipulation in a variety of applications, from industrial assembly to household assistance.

Comparative analysis shows that this integrated approach outperforms methods that consider motion and contact separately, which often struggle to maintain stable grasps during complex manipulations. The hierarchical structure of the framework, with high-level planning and low-level tracking, allows for both strategic decision-making and reactive adaptation to disturbances, resulting in more robust performance in real-world scenarios.

Efficient Collision Avoidance Through Constraint Reformulation

A fifth key finding comes from Kojima et al.'s (2025) real-time model predictive control with convex-polygon-aware collision avoidance. By reformulating disjunctive OR constraints as tractable conjunctive AND constraints using either Support Vector Machine optimization or Minimum Signed Distance to Edges metrics, their approach enables accurate collision detection and avoidance without the computational burden of mixed integer programming.
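To see why polygon collision avoidance is naturally disjunctive, consider a point and a convex obstacle: the point is collision-free if it lies outside edge 1 OR outside edge 2, and so on. The sketch below computes the per-edge signed distances and summarizes them with a single scalar margin. It is only an illustration of the geometry, assuming counter-clockwise vertex order; it does not reproduce the SVM-based or Minimum Signed Distance to Edges formulations from Kojima et al. (2025), and all names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def edge_signed_distances(polygon: Sequence[Point], p: Point) -> List[float]:
    """Signed distance from p to each edge line of a convex polygon.

    Vertices must be in counter-clockwise order. A positive value means
    p lies on the outer side of that edge.
    """
    dists = []
    n = len(polygon)
    for i in range(n):
        (x1, y1), (x2, y2) = polygon[i], polygon[(i + 1) % n]
        ex, ey = x2 - x1, y2 - y1
        length = math.hypot(ex, ey)
        # Outward unit normal of a counter-clockwise edge.
        nx, ny = ey / length, -ex / length
        dists.append((p[0] - x1) * nx + (p[1] - y1) * ny)
    return dists


def outside_margin(polygon: Sequence[Point], p: Point) -> float:
    """Largest per-edge signed distance.

    Positive exactly when at least one "outside edge i" condition holds,
    so one scalar stands in for the whole OR. For outside points it is a
    lower bound on the true distance to the polygon.
    """
    return max(edge_signed_distances(polygon, p))


def is_collision_free(polygon: Sequence[Point], p: Point,
                      margin: float = 0.0) -> bool:
    return outside_margin(polygon, p) >= margin


square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
center_margin = outside_margin(square, (0.5, 0.5))
side_margin = outside_margin(square, (2.0, 0.5))
```

A mixed integer formulation would introduce one binary variable per edge to choose which inequality is active. Collapsing the OR into a single inequality, as above, conveys the spirit of avoiding those binaries, although the `max` used here is not smooth, and a practical MPC formulation would need something better behaved.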

This finding is significant because collision avoidance is a fundamental requirement for safe robot operation, particularly in environments shared with humans or other robots. The approach presented by Kojima et al. (2025) addresses a key computational challenge in collision avoidance, enabling more precise and efficient navigation in constrained environments. This could have applications ranging from autonomous parking to warehouse robotics, where robots must navigate in tight spaces while avoiding collisions with objects or humans.

Comparative analysis indicates that this approach achieves accuracy comparable to mixed integer programming methods at a much lower computational cost, enabling real-time performance even in complex environments. The reformulation of collision avoidance constraints as conjunctive rather than disjunctive constraints represents a significant algorithmic innovation with broad applicability in robot motion planning and control.
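To make the constraint reformulation more concrete, the sketch below shows one plausible reading of a "minimum signed distance to edges" test for a point and a convex polygon. It is an illustration under stated assumptions, not the formulation from Kojima et al. (2025): the function names, the counter-clockwise vertex order, and the positive-inside sign convention are all choices made here. "The point lies outside the polygon" is naturally a disjunction (it violates edge 1 OR edge 2 OR ...), but the same condition can be written as a single scalar inequality on the minimum of the per-edge signed distances.

```python
import math


def min_signed_distance_to_edges(point, polygon):
    """Smallest signed distance from `point` to the edge lines of a convex polygon.

    `polygon` is a list of (x, y) vertices in counter-clockwise order.
    Sign convention (an assumption of this sketch): positive means inside.
    """
    px, py = point
    distances = []
    n = len(polygon)
    for i in range(n):
        x0, y0 = polygon[i]
        x1, y1 = polygon[(i + 1) % n]
        dx, dy = x1 - x0, y1 - y0
        length = math.hypot(dx, dy)
        # The inward unit normal of a counter-clockwise edge is (-dy, dx) / length.
        nx, ny = -dy / length, dx / length
        distances.append((px - x0) * nx + (py - y0) * ny)
    return min(distances)


def is_collision_free(point, polygon, margin=0.0):
    """One scalar inequality replaces the OR over edges."""
    return min_signed_distance_to_edges(point, polygon) <= -margin


# Hypothetical obstacle: the unit square.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
print(min_signed_distance_to_edges((0.5, 0.5), square))  # positive: inside
print(min_signed_distance_to_edges((2.0, 0.5), square))  # negative: outside
```

Because the polygon lies inside every edge half-plane, the negated minimum is a lower bound on the true Euclidean distance to the polygon, so the margin check in this sketch is conservative near corners. A real-time controller would additionally need a smooth or otherwise solver-friendly version of this expression, which is outside the scope of the sketch.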

Influential Works in Robotics Research

CLAM: Continuous Latent Action Models for Robot Learning

Liang et al. (2025) introduce CLAM (Continuous Latent Action Models), a novel approach for robot learning from unlabeled demonstrations. Traditional imitation learning requires demonstrations that include not only the state of the environment but also the precise actions taken by the expert, which are costly to collect. CLAM addresses this limitation by learning continuous latent action representations in an unsupervised way from video demonstrations.

The key innovation in CLAM is the use of continuous rather than discrete latent actions, which better captures the smooth and nuanced nature of physical manipulation. The two-stage learning process first learns a continuous latent action space that captures the dynamics of observed demonstrations, then jointly trains an action decoder that maps from this latent space to actual robot actions using a small amount of labeled data.
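The data flow described above can be sketched on a deliberately tiny example. This is not CLAM itself: the learned latent action model is replaced by a hand-written stand-in (`encode_latent_action`), the dynamics are a hypothetical one-dimensional system, the decoder is a linear least-squares fit, and the two steps run sequentially rather than with any joint training. The sketch only shows how many unlabeled transitions and a few action-labeled ones fit together.

```python
def encode_latent_action(state, next_state):
    """Stand-in for a learned encoder: the continuous latent is the state change."""
    return next_state - state


def fit_linear_decoder(latents, actions):
    """Least-squares fit of action = w * latent + b from a few labeled pairs."""
    n = len(latents)
    mean_z = sum(latents) / n
    mean_a = sum(actions) / n
    var_z = sum((z - mean_z) ** 2 for z in latents)
    cov = sum((z - mean_z) * (a - mean_a) for z, a in zip(latents, actions))
    w = cov / var_z
    b = mean_a - w * mean_z
    return w, b


# Hypothetical toy dynamics: next_state = state + 0.5 * action.
# A small action-labeled set, given as (state, action) pairs.
labeled = [(0.0, 0.4), (1.0, -0.2), (2.0, 1.0)]
labeled_latents = [encode_latent_action(s, s + 0.5 * a) for s, a in labeled]
labeled_actions = [a for _, a in labeled]
w, b = fit_linear_decoder(labeled_latents, labeled_actions)

# Unlabeled "video" transitions, given as (state, next_state) with no actions.
unlabeled = [(0.5, 0.8), (1.5, 1.25)]
decoded_actions = [w * encode_latent_action(s, s2) + b for s, s2 in unlabeled]
print(decoded_actions)
```

In this toy setting the decoder recovers the factor that links state changes to actions from only three labeled pairs, which is the intuition behind grounding a latent action space with a small amount of labeled data.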

The results demonstrate a 2-3x improvement in task success rate over previous state-of-the-art methods across several benchmarks: DMControl for locomotion, MetaWorld for manipulation, and a real WidowX robot arm. This dramatic improvement suggests that the continuous representation of latent actions and the joint training of an action decoder are crucial for learning complex manipulation skills from unlabeled data.

The significance of CLAM extends beyond its immediate performance improvements. By reducing the need for action-labeled expert demonstrations, it addresses a fundamental scalability issue in robot learning. This could accelerate the development and deployment of robots capable of performing a wide range of manipulation tasks by making it faster and more cost-effective to teach them new skills.

SYMDEX: Symmetric Reinforcement Learning for Bimanual Manipulation

Li et al. (2025) present SYMDEX, a reinforcement learning framework that leverages morphological symmetry to enable ambidextrous bimanual manipulation. The key innovation is the decomposition of complex bimanual tasks into per-hand subtasks and the use of equivariant neural networks to exploit bilateral symmetry, allowing experience from one arm to benefit learning for the opposite arm.

The approach involves three main components: a decomposition module that breaks down bimanual tasks into per-hand subtasks, equivariant neural networks that ensure policies learned for one hand can be applied to the opposite hand through appropriate transformations, and a distillation process that combines subtask policies into a global ambidextrous policy independent of hand-task assignment.
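The equivariance property at the heart of this design can be illustrated with a short sketch. It does not reproduce SYMDEX's equivariant networks; instead it uses a generic symmetrization trick, averaging a policy with its mirrored counterpart, and it assumes a simplified mirror operation that only swaps the left-hand and right-hand halves of a vector (a real robot would also need coordinate reflections). The names `mirror`, `raw_policy`, and `symmetrized_policy` are hypothetical.

```python
import math


def mirror(vec):
    """Swap the left-hand and right-hand halves of a flat vector."""
    half = len(vec) // 2
    return vec[half:] + vec[:half]


def raw_policy(obs):
    """Arbitrary, non-symmetric stand-in for a learned policy (4 obs -> 2 actions)."""
    left = math.tanh(0.9 * obs[0] - 0.3 * obs[1] + 0.2 * obs[2])
    right = math.tanh(0.1 * obs[0] + 0.7 * obs[3] - 0.5)
    return [left, right]


def symmetrized_policy(obs):
    """Average the policy with its mirrored version so that mirroring the
    observation mirrors the action."""
    direct = raw_policy(obs)
    via_mirror = mirror(raw_policy(mirror(obs)))
    return [0.5 * (a + b) for a, b in zip(direct, via_mirror)]


obs = [0.4, -1.0, 0.3, 0.8]  # [left-hand state (2), right-hand state (2)]
print(symmetrized_policy(mirror(obs)))
print(mirror(symmetrized_policy(obs)))  # same values as the line above
```

With a policy that satisfies this property, experience gathered with the hands in one assignment carries over to the swapped assignment without further training, which is the sample-efficiency benefit the paragraph above describes.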

Results from six challenging simulated manipulation tasks and two real-world deployments demonstrate SYMDEX's effectiveness, particularly on complex tasks where the left and right hands perform different roles. The approach also shows scalability to more complex robotic systems, such as four-arm manipulation, where symmetry-aware policies enable effective multi-arm collaboration.

The significance of SYMDEX lies in its potential to advance robot manipulation capabilities toward more human-like dexterity and flexibility. By enabling ambidextrous manipulation, robots could become more adaptable to different task configurations and environments. The approach also demonstrates how structural properties of robots can be explicitly incorporated as inductive biases in learning algorithms to improve sample efficiency and generalization.

X-Driver: Explainable Autonomous Driving with Vision-Language Models

Liu et al. (2025) introduce X-Driver, an explainable autonomous driving system that leverages large vision-language models to create a more capable and transparent driving agent. The key innovation is the use of chain-of-thought reasoning within a multi-modal large language model framework, enabling the system to not only make driving decisions but also provide natural language explanations for those decisions.

X-Driver employs a three-stage process: perception, where the system analyzes the visual scene; reasoning, where it interprets the scene and plans appropriate actions; and explanation, where it generates natural language descriptions of its reasoning process. Unlike traditional modular pipelines for autonomous driving, X-Driver uses a unified framework where the vision-language model directly processes visual inputs and generates both driving actions and explanations.

In closed-loop simulations, X-Driver demonstrates superior performance compared to benchmark models, achieving higher success rates and fewer infractions across various driving scenarios. The system also generates detailed and accurate explanations for its driving decisions, providing insights into its reasoning process that could help users understand and trust its behavior.

The significance of X-Driver extends beyond its immediate performance improvements. By demonstrating the effectiveness of large vision-language models for autonomous driving, it opens up new possibilities for creating more capable and adaptable driving systems. The explainability aspect is particularly important, as it addresses a key concern in the deployment of autonomous vehicles: the need for transparency and accountability in decision-making.

Critical Assessment and Future Directions

Current Progress and Limitations

The seventeen papers analyzed in this synthesis demonstrate significant progress in robotics research, particularly in the integration of foundation models with traditional robotics approaches, the advancement of bimanual and dexterous manipulation, the personalization and adaptability of autonomous systems, the development of domain-specific robotics applications, and the emphasis on explainability and interpretability. These advancements are expanding the capabilities of robots and making them more suitable for deployment in complex, real-world environments.

However, several limitations and challenges remain. First, many of the approaches presented in these papers rely on significant computational resources, which may limit their applicability in resource-constrained settings or for real-time applications. Second, while simulation environments are becoming more sophisticated, the reality gap—the discrepancy between simulated and real-world conditions—continues to pose challenges for transferring learned behaviors from simulation to reality. Third, many of the demonstrated capabilities, while impressive in controlled environments, may not yet be robust enough for long-term deployment in unstructured, dynamic environments.

Additionally, there are ethical and societal considerations that merit attention. As robots become more capable and autonomous, questions of safety, privacy, accountability, and economic impact become increasingly important. The papers in this collection primarily focus on technical capabilities rather than these broader implications, suggesting a need for more interdisciplinary research that considers the societal context in which robots will operate.

Promising Directions for Future Research

Several promising directions for future research emerge from this analysis. First, the integration of foundation models with robotics systems is likely to continue and expand, potentially leading to more capable and adaptable robots that can leverage pre-trained knowledge while maintaining the safety and reliability guarantees required for physical systems. Research on more efficient architectures and training methods could make these approaches more accessible for resource-constrained applications.

Second, the development of more sophisticated simulation environments and domain adaptation techniques could help address the reality gap, enabling more effective transfer of learned behaviors from simulation to reality. This could accelerate the development and deployment of robotic systems by reducing the need for costly and time-consuming real-world data collection and testing.

Third, the emphasis on explainability and interpretability is likely to grow, particularly as robots are deployed in more sensitive and high-stakes environments. Research on methods for generating accurate and understandable explanations of robot behavior could help build trust and facilitate more effective human-robot collaboration.

Fourth, the personalization and adaptability of robotic systems will continue to be important areas of research, with a focus on enabling robots to learn from and adapt to individual preferences and changing environmental conditions. This could lead to more user-friendly and effective robotic systems that can be deployed in a wider range of contexts.

Finally, the development of domain-specific robotics applications, particularly in areas like agriculture, construction, healthcare, and domestic assistance, represents a promising direction for future research. By focusing on specific domains and tasks, researchers can address practical challenges while also advancing the broader field of robotics.

Conclusion

The seventeen papers analyzed in this synthesis represent significant advancements in robotics research, demonstrating progress in autonomous driving, manipulation capabilities, human-robot interaction, and specialized applications. The integration of foundation models with traditional robotics approaches, the advancement of bimanual and dexterous manipulation, the personalization and adaptability of autonomous systems, the development of domain-specific robotics applications, and the emphasis on explainability and interpretability are emerging as key themes in contemporary robotics research.

While challenges remain in areas such as computational efficiency, the reality gap, and robustness in unstructured environments, the methodological approaches and findings presented in these papers point to promising directions for future research. As robots become increasingly integrated into daily life, continued progress in these areas will be essential for creating systems that are capable, reliable, and able to collaborate effectively with humans in shared environments.

The field of robotics is at an exciting juncture, with advances in artificial intelligence, sensor technology, and control systems enabling new capabilities and applications. By building on the work presented in these papers and addressing the remaining challenges, researchers can continue to push the boundaries of what is possible in robotics, creating systems that have the potential to transform various aspects of society and human experience.

References

Atsuta, K. et al. (2025). LVLM-MPC Collaboration Framework for Task-Scalable and Safety-Aware Autonomous Driving. arXiv:2505.12345

Jiang, S. et al. (2025). An Integrated Real-Time Motion-Contact Planning and Tracking Framework for Robust In-Hand Manipulation. arXiv:2505.67890

Kobayashi, H. (2025). CubeDAgger: Interactive Imitation Learning with Dynamic Stability Constraints. arXiv:2505.23456

Kojima, T. et al. (2025). Real-Time Model Predictive Control with Convex-Polygon-Aware Collision Avoidance. arXiv:2505.34567

Li, Y. et al. (2025). SYMDEX: Morphologically Symmetric Reinforcement Learning for Ambidextrous Bimanual Manipulation. arXiv:2505.45678

Liang, J. et al. (2025). CLAM: Continuous Latent Action Models for Robot Learning from Unlabeled Demonstrations. arXiv:2505.56789

Liu, X. et al. (2025). X-Driver: Explainable Autonomous Driving with Vision-Language Models. arXiv:2505.78901

Song, Z. et al. (2025). The City that Never Settles: A Simulation-Based LiDAR Dataset for Long-Term Place Recognition Under Extreme Structural Changes. arXiv:2505.89012

Surmann, M. et al. (2025). Multi-Objective Reinforcement Learning for Personalized Autonomous Driving Experiences. arXiv:2505.90123

Thayananthan, A. et al. (2025). CottonSim: A Simulation Environment for Autonomous Cotton Picking. arXiv:2505.01234
