Will You Spot the Leaks? A Data Science Challenge

When models fly too high: A perilous journey through data leakage

The post Will You Spot the Leaks? A Data Science Challenge appeared first on Towards Data Science.

Not another explanation

You’ve probably heard of data leakage, and you might know both flavours well: Target Variable and Train-Test Split. But will you spot the holes in my faulty logic, or the oversights in my optimistic code? Let’s find out.

I’ve seen many articles on Data Leakage, and I thought they were all quite insightful. However, I noticed they tended to focus on the theory, and I found them somewhat lacking in examples that zero in on the lines of code or precise decisions that lead to an overly optimistic model.

My goal in this article is not a theoretical one; it is to truly put your Data Science skills to the test, to see if you can spot all the decisions I make that lead to data leakage in a real-world example.

Solutions at the end

An Optional Review

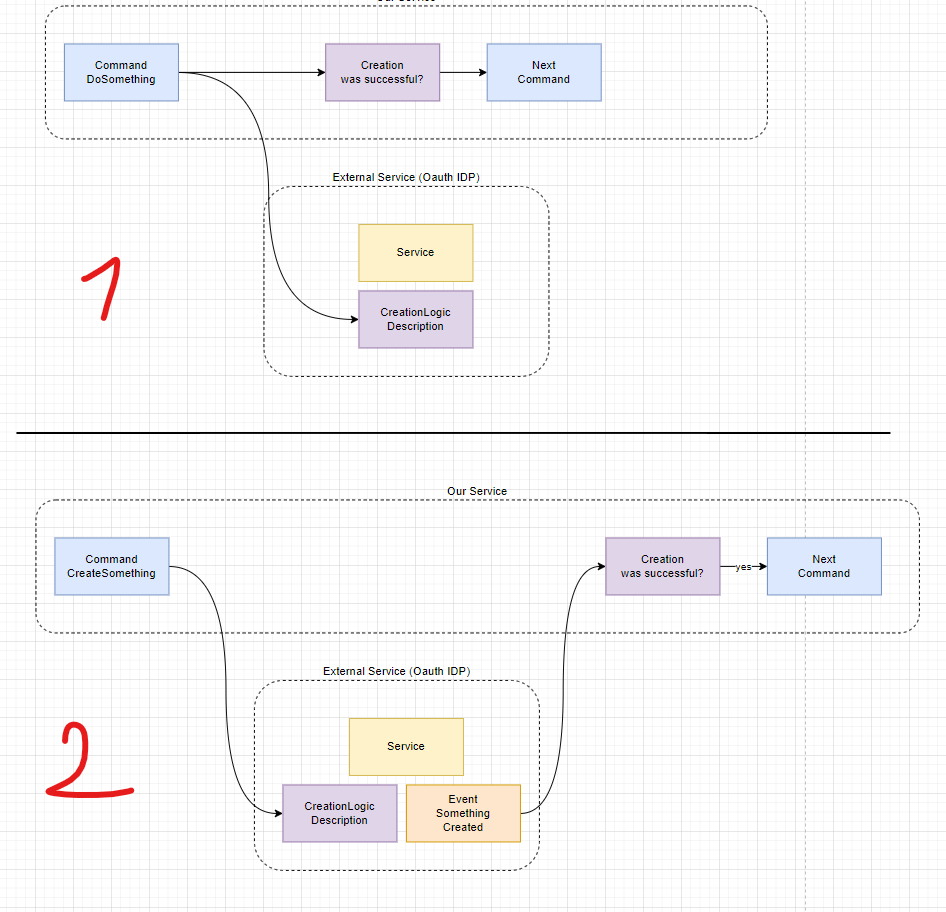

1. Target (Label) Leakage

When features contain information about what you’re trying to predict.

- Direct Leakage: Features computed directly from the target → Example: Using “days overdue” to predict loan default → Fix: Remove feature.

- Indirect Leakage: Features that serve as proxies for the target → Example: Using “insurance payout amount” to predict hospital readmission → Fix: Remove feature.

- Post-Event Aggregates: Using data from after the prediction point → Example: Including “total calls in first 30 days” for a 7-day churn model → Fix: Calculate the aggregate using only data available at prediction time.
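As a rough illustration of the post-event aggregate fix, here is a minimal sketch on a made-up call log. The table, its column names, and the 7-day prediction point are assumptions chosen for the example, not data from a real project.

```python
import pandas as pd

# Hypothetical call log: one row per customer call (all values are made up).
calls = pd.DataFrame({
    "customer_id": [1, 1, 1, 2, 2],
    "signup_date": pd.to_datetime(["2024-01-01"] * 3 + ["2024-01-10"] * 2),
    "call_date": pd.to_datetime(
        ["2024-01-03", "2024-01-06", "2024-01-20", "2024-01-12", "2024-02-01"]
    ),
})

PREDICTION_DAY = 7  # assumed: the churn model predicts 7 days after signup

# Keep only calls that happened on or before the prediction point.
days_since_signup = (calls["call_date"] - calls["signup_date"]).dt.days
visible = calls[days_since_signup <= PREDICTION_DAY]

# Leak-free aggregate: calls in the first 7 days, not the first 30.
calls_first_7_days = (
    visible.groupby("customer_id").size().rename("calls_first_7_days")
)
```

The calls on day 19 and day 22 are dropped because the model could not have known about them when making its prediction.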

2. Train-Test (Split) Contamination

When test set information leaks into your training process.

- Data Analysis Leakage: Analyzing full dataset before splitting → Example: Examining correlations or covariance matrices of entire dataset → Fix: Perform exploratory analysis only on training data

- Preprocessing Leakage: Fitting transformations before splitting data → Examples: Computing covariance matrices, scaling, normalization on full dataset → Fix: Split first, then fit preprocessing on train only

- Temporal Leakage: Ignoring time order in time-dependent data → Fix: Maintain chronological order in splits.

- Duplicate Leakage: Same/similar records in both train and test → Fix: Ensure variants of an entity stay entirely in one split

- Cross-Validation Leakage: Information sharing between CV folds → Fix: Keep all transformations inside each CV loop

- Entity (Identifier) Leakage: When a high‑cardinality ID appears in both train and test, the model “learns” the ID instead of generalizable patterns → Fix: Drop the ID columns or use a group-aware split (see Q3).
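To make the preprocessing and cross-validation items concrete, here is a minimal sketch using scikit-learn on synthetic data. The data and the choice of LogisticRegression are placeholders for illustration; the point is only where the scaler gets fitted.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data; a real project would load its own features.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
y = (X[:, 0] + rng.normal(scale=0.5, size=200) > 0).astype(int)

# The scaler lives inside the pipeline, so each CV fold fits it on
# that fold's training portion only and never sees the held-out part.
model = Pipeline([
    ("scale", StandardScaler()),
    ("clf", LogisticRegression()),
])

scores = cross_val_score(model, X, y, cv=5)
```

Calling `StandardScaler().fit_transform(X)` on the full array before `cross_val_score` would instead let every fold's held-out rows influence the scaling statistics.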

Let the Games Begin

In total there are 17 points. The rules of the game are simple: at the end of each section, pick your answers before moving ahead. Scoring works as follows.

- +1 pt. for identifying a column that leads to Data Leakage.

- +1 pt. for identifying a problematic preprocessing step.

- +1 pt. for identifying when no data leakage has taken place.

Along the way, you will see a points marker. It tells you how many points are available in the section above it.

Problems in the Columns

Let’s say we are hired by Hexadecimal Airlines to create a Machine Learning model that identifies planes most likely to have an accident on their trip. In other words, a supervised classification problem with the target variable Outcome in df_flight_outcome.

This is what we know about our data: Maintenance checks and reports are made first thing in the morning, prior to any departures. Our black-box data is recorded continuously for each plane and each flight. This monitors vital flight data such as Altitude, Warnings, Alerts, and Acceleration. Conversations in the cockpit are even recorded to help investigations in the event of a crash. At the end of every flight a report is generated, then an update is made to df_flight_outcome.

Question 1: Based on this information, what columns can we immediately remove from consideration?

A Convenient Categorical

Now, suppose we review the original .csv files we received from Hexadecimal Airlines and realize they went through all the work of splitting the data into 2 files (no_accidents.csv and previous_accidents.csv), separating planes with an accident history from planes with none. Believing this to be useful information, we add it to our data frame as a categorical column.

Question 2: Has data leakage taken place?

Needles in the Hay

Now let’s say we join our data on date and Tail# to get the resulting data_frame, which we will use to train our model. In total, we have 12,345 entries over 10 years of observation, with 558 unique tail numbers and 6 types of maintenance checks. This data has no missing entries and has been joined together correctly using SQL, so no temporal leakage takes place.

- Tail Number is a unique identifier for the plane.

- Flight Number is a unique identifier for the flight.

- Last Maintenance Day is always in the past.

- Flight hours since last maintenance are calculated prior to departure.

- Cycle count is the number of takeoffs and landings completed, used to track airframe stress.

- N1 fan speed is the rotational speed of the engine’s front fan, shown as a percentage of maximum RPM.

- EGT temperature stands for Exhaust Gas Temperature and measures engine combustion heat output.

Question 3: Could any of these features be a source of data leakage?

Question 4: Are there missing preprocessing steps that could lead to data leakage?

Hint: If there are missing preprocessing steps or problematic columns, I do not fix them in the next section, i.e., the mistakes carry through.

Analysis and Pipelines

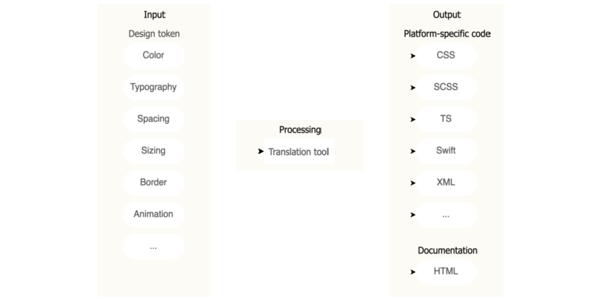

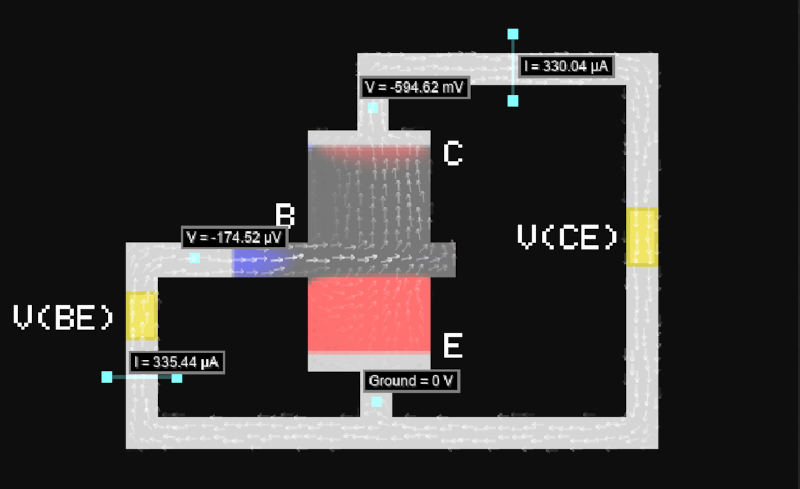

Now we focus our analysis on the numerical columns in df_maintenance. Our data shows strong correlation within the pairs (Cycle, Flight hours) and (N1, EGT), so we make a note to use Principal Component Analysis (PCA) to reduce dimensionality.

We split our data into training and testing sets, use OneHotEncoder on categorical data, apply StandardScaler, then use PCA to reduce the dimensionality of our data.

# Errors are carried through from the above section
import pandas as pd
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler, OneHotEncoder
from sklearn.decomposition import PCA
from sklearn.compose import ColumnTransformer

n = 10_234

# Train-Test Split
X_train, y_train = df.iloc[:n].drop(columns=['Outcome']), df.iloc[:n]['Outcome']
X_test, y_test = df.iloc[n:].drop(columns=['Outcome']), df.iloc[n:]['Outcome']

# Define preprocessing steps
preprocessor = ColumnTransformer([
    ('cat', OneHotEncoder(handle_unknown='ignore'), ['Maintenance_Type', 'Tail#']),
    ('num', StandardScaler(), ['Flight_Hours_Since_Maintenance', 'Cycle_Count', 'N1_Fan_Speed', 'EGT_Temperature'])
])

# Full pipeline with PCA
pipeline = Pipeline([
    ('preprocessor', preprocessor),
    ('pca', PCA(n_components=3))
])

# Fit and transform data
X_train_transformed = pipeline.fit_transform(X_train)
X_test_transformed = pipeline.transform(X_test)

Question 5: Has data leakage taken place?

Solutions

Answer 1: Remove all 4 columns from df_flight_outcome and all 8 columns from df_black_box, as this information is only available after landing, not at takeoff when predictions would be made. Including this post-flight data would create temporal leakage. (12 pts.)

Simply plugging data into a model is not enough; we need to know how this data is being generated.
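One way to make "how the data is generated" explicit is to record when each column first becomes known and filter on that. The sketch below is a hypothetical helper: the column names and stage labels are illustrative assumptions, not the airline's actual schema.

```python
# Hypothetical availability map: when each column's value is first known.
# Column names and stage labels are illustrative assumptions.
AVAILABLE_AT = {
    "Maintenance_Type": "pre_departure",
    "Flight_Hours_Since_Maintenance": "pre_departure",
    "Cycle_Count": "pre_departure",
    "Altitude": "in_flight",
    "Warnings": "in_flight",
    "Acceleration": "in_flight",
    "Flight_Report": "post_flight",
}


def usable_features(columns, prediction_stage="pre_departure"):
    """Return the columns known at prediction time; reject undocumented ones."""
    unknown = [c for c in columns if c not in AVAILABLE_AT]
    if unknown:
        raise ValueError(f"No availability info for: {unknown}")
    return [c for c in columns if AVAILABLE_AT[c] == prediction_stage]


features = usable_features(list(AVAILABLE_AT))
```

Raising an error for undocumented columns is a deliberate choice here: a column nobody can place on the timeline is treated as suspect rather than silently allowed in.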

Answer 2: Adding the file names as a column is a source of data leakage: the column tells the model whether a plane has had an accident, which could essentially give away the answer. (1 pt.)

As a rule of thumb, you should always be incredibly critical about including file names or file paths.
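A quick sanity check for a suspicious categorical column is to look at the target rate within each category. This is a minimal sketch on toy data; the `source_file` column name and the outcome values are made up for illustration.

```python
import pandas as pd

# Toy data: 'source_file' is a hypothetical column derived from the file name.
df = pd.DataFrame({
    "source_file": ["no_accidents.csv"] * 4 + ["previous_accidents.csv"] * 4,
    "Outcome": [0, 0, 0, 0, 1, 0, 1, 1],
})

# Accident rate within each file: values near 0 or 1 mean the file name
# alone almost determines the target, which is a red flag for leakage.
rate_by_file = df.groupby("source_file")["Outcome"].mean()
```

A lopsided rate does not prove leakage by itself, but it is a strong prompt to ask how and when the column was created.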

Answer 3: Although all listed fields are available before departure, the high‐cardinality identifiers (Tail#, Flight#) cause entity (ID) leakage. The model simply memorizes “Plane X never crashes” rather than learning genuine maintenance signals. To prevent this leakage, you should either drop those ID columns entirely or use a group‑aware split so no single plane appears in both train and test sets. (2 pts.)

Corrected code for Q3 and Q4

import pandas as pd
from sklearn.model_selection import GroupShuffleSplit

# Parse dates, drop the per-flight identifier, and sort chronologically
df['Date'] = pd.to_datetime(df['Date'])
df = df.drop(columns='Flight#')
df = df.sort_values('Date').reset_index(drop=True)

# Group-aware split so no Tail# appears in both train and test.
# Note: GroupShuffleSplit assigns planes at random, so this split alone
# does not place the test flights after the training flights in time.
groups = df['Tail#']
gss = GroupShuffleSplit(n_splits=1, test_size=0.25, random_state=42)
train_idx, test_idx = next(gss.split(df, groups=groups))
train_df = df.iloc[train_idx].reset_index(drop=True)
test_df = df.iloc[test_idx].reset_index(drop=True)

Answer 4: If we look carefully, we see that the dates are not in order, and we never sorted the data chronologically. If time‐ordered records are shuffled or left unsorted before splitting, “future” flights end up in your training set, letting the model learn patterns it wouldn’t have access to when actually predicting. That information leak inflates your performance metrics and fails to simulate real‐world forecasting. (1 pt.)
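Answer 4's point about time order can be sketched with a cutoff-date split. This is one possible approach rather than the only one: the toy rows and the cutoff date are assumptions. A shuffled group split keeps planes apart but picks them at random, so the sketch also shows an optional filter that keeps the split both chronological and group-aware.

```python
import pandas as pd

# Toy flight log; column names follow the article, values are made up.
df = pd.DataFrame({
    "Date": pd.to_datetime(
        ["2020-03-01", "2020-01-15", "2021-06-01", "2021-07-10", "2021-08-05"]
    ),
    "Tail#": ["A1", "B2", "A1", "C3", "D4"],
    "Outcome": [0, 0, 1, 0, 0],
})

cutoff = pd.Timestamp("2021-01-01")  # assumed boundary between past and future

# Everything before the cutoff trains the model; everything after tests it.
df = df.sort_values("Date").reset_index(drop=True)
train_df = df[df["Date"] < cutoff]
test_df = df[df["Date"] >= cutoff]

# Optionally keep only test planes the model has never seen in training,
# so the split is both chronological and group-aware.
seen_tails = set(train_df["Tail#"])
test_unseen_df = test_df[~test_df["Tail#"].isin(seen_tails)]
```

Whether to drop previously seen planes from the test set depends on how the model will be used; if it will score the same fleet in production, keeping them may be the more realistic evaluation.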

Answer 5: Data Leakage has taken place because we looked at the covariance matrix for df_maintenance which included both train and test data. (1 pt.)

Always do data analysis on the training data only. Pretend the testing data does not exist; put it completely behind glass until it’s time to test your model.
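In code, that habit amounts to splitting first and computing exploratory statistics on the training rows alone. Here is a minimal sketch with synthetic values; the split point and the generated numbers are assumptions for illustration.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for numeric maintenance columns (values are made up).
rng = np.random.default_rng(0)
hours = rng.uniform(0, 500, size=100)
df = pd.DataFrame({
    "Flight_Hours_Since_Maintenance": hours,
    "Cycle_Count": hours / 2 + rng.normal(scale=5, size=100),
    "N1_Fan_Speed": rng.uniform(60, 100, size=100),
})

n_train = 80  # assumed split point; rows are taken to be in chronological order
train_df, test_df = df.iloc[:n_train], df.iloc[n_train:]

# Exploratory statistics come from the training rows only.
train_corr = train_df.corr()
```

Any decision driven by `train_corr`, such as choosing to apply PCA, is then based only on information the model is allowed to see.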

Conclusion

The core principle sounds simple — never use information unavailable at prediction time — yet the application proves remarkably elusive. The most dangerous leaks slip through undetected until deployment, turning promising models into costly failures. True prevention requires not just technical safeguards but a commitment to experimental integrity. By approaching model development with rigorous skepticism, we transform data leakage from an invisible threat to a manageable challenge.

Key Takeaway: To spot data leakage, a theoretical understanding is not enough; you must practice, and critically evaluate every line of code and every processing decision.

All images by the author unless otherwise stated.

