What is Apache Flink? Exploring Its Open Source Business Model, Funding, and Community


Abstract

This post provides an in-depth look at Apache Flink—a robust stream processing framework used for big data analytics. We discuss its technical features, open source funding models, community governance, and licensing under the Apache 2.0 License. In addition, we compare Flink’s traditional funding with emerging tokenization methods, examine its ecosystem, and explore practical use cases, challenges, and future trends. Readers will gain insights into how community contributions, corporate sponsorships, and innovative approaches like blockchain-enabled open source funding converge to sustain a platform that is both powerful and democratized.

Introduction

Apache Flink has emerged as a key player in the real-time processing of big data. As enterprises increasingly rely on real-time analytics for a competitive edge, understanding how open source projects like Flink sustain their innovation is essential. In this post, we dive into the core aspects of Flink’s technical capabilities, explore its diverse funding streams (from volunteer contributions to corporate sponsorships), and discuss its transparent open source business model. We also look at how licensing freedoms foster innovation and community engagement, making Flink a benchmark for scalable, resilient data processing frameworks.

Background and Context

Apache Flink is a distributed processing engine built for both stream processing and batch processing, treating a batch job as the processing of a bounded stream. A top-level project of the Apache Software Foundation, Flink is designed for low latency, high throughput, and fault tolerance. The project is open to contributions from developers worldwide; its source repository is mirrored on GitHub, where the community collaborates on code, bug fixes, and new features through pull requests.
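
Flink’s unified model, in which batch input is simply a bounded stream, can be illustrated without Flink itself. The plain-Python sketch below is not Flink’s API: `running_word_counts` is a hypothetical operator that keeps per-key state and handles one record at a time, so the same code consumes a finite list and an endless generator.

```python
from collections import defaultdict
from itertools import count, islice
from typing import Dict, Iterable, Iterator, Tuple


def running_word_counts(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Hypothetical operator: emit an updated (word, count) pair for every word, one record at a time."""
    counts: Dict[str, int] = defaultdict(int)  # per-key state kept across records
    for line in lines:
        for word in line.lower().split():
            counts[word] += 1
            yield word, counts[word]


# Bounded input ("batch"): the stream simply ends, and the last update per word is the final count.
batch_result = dict(running_word_counts(["to be or not", "to be"]))
print(batch_result)  # {'to': 2, 'be': 2, 'or': 1, 'not': 1}

# Unbounded input ("stream"): an endless generator; only the first four updates are consumed here.
endless = (f"event {i % 2}" for i in count())
print(list(islice(running_word_counts(endless), 4)))  # [('event', 1), ('0', 1), ('event', 2), ('1', 1)]
```

For bounded input, Flink can also run the same DataStream program in its BATCH execution mode; the sketch only shows why one programming model can cover both cases.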

Historical Highlights

- Origins: Flink grew out of the Stratosphere research project and became an Apache top-level project in 2014. It was designed to overcome limitations of earlier big data frameworks that struggled with real-time data streams.

- Ecosystem Growth: Over the years, Flink’s ecosystem has expanded through connectors to data storage and messaging systems such as Apache Kafka, which has helped drive its adoption across industries like finance, IoT, and telecommunications.

- Community Impact: Open collaboration, thorough documentation, and innovation have been the hallmarks of Flink’s evolution, supporting the classic open source ethos.

Core Concepts and Features

Apache Flink stands out for a design that serves both real-time and batch processing workloads. Its key features and technical concepts include:

Key Technical Attributes

- High Throughput and Low Latency: Flink pipelines records through its operators as they arrive, rather than waiting to accumulate batches, which lets it deliver real-time insights even under heavy data loads.

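- Fault Tolerance: Flink periodically takes checkpoints, consistent distributed snapshots of operator state and input positions. After a failure it restores the latest checkpoint and replays input from there, giving exactly-once consistency for its internal state.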

- Scalability: Flink’s distributed architecture scales out to large clusters and growing data streams, making it well suited to enterprise-level deployments.

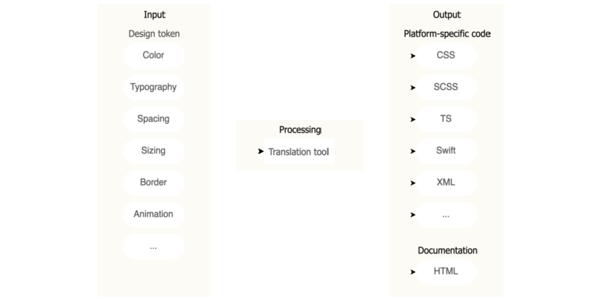

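- Flexible API Ecosystem: Flink offers the DataStream API, the Table API, and SQL, usable from Java, Python (PyFlink), and SQL, so developers can choose the right tools for their projects. The Scala APIs have been deprecated in recent releases.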
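
The state-snapshot mechanism behind Flink’s fault tolerance can be sketched in a single process. In this simplified, hypothetical model (not Flink’s API; `CountingJob` is an illustrative name), a job counts events per key and periodically saves the source offset together with a copy of its state. After a simulated crash, it restarts from the last snapshot and replays the input from the saved offset, so records processed after that snapshot are recounted from a clean state rather than counted twice. Flink itself takes these snapshots (checkpoints) asynchronously across distributed operators using checkpoint barriers, and it relies on a replayable source, such as Kafka, for the replay step.

```python
import copy
from typing import Dict, List, Optional, Tuple

Checkpoint = Tuple[int, Dict[str, int]]  # (next source offset, copy of per-key state)


class CountingJob:
    """Hypothetical keyed-count job with periodic checkpoints (not Flink's API)."""

    def __init__(self, checkpoint_every: int):
        self.checkpoint_every = checkpoint_every
        self.last_checkpoint: Checkpoint = (0, {})  # empty state before the first checkpoint

    def run(self, events: List[str], fail_at: Optional[int] = None) -> Dict[str, int]:
        # Restore: resume from the offset stored in the last checkpoint, with a copy of its state.
        offset, saved_state = self.last_checkpoint
        state = copy.deepcopy(saved_state)
        for i in range(offset, len(events)):
            if i == fail_at:
                raise RuntimeError(f"simulated crash before record {i}")
            key = events[i]
            state[key] = state.get(key, 0) + 1
            # After every N processed records, snapshot the source position and the state together.
            if (i + 1) % self.checkpoint_every == 0:
                self.last_checkpoint = (i + 1, copy.deepcopy(state))
        return state


events = ["a", "b", "a", "c", "a", "b", "c"]

job = CountingJob(checkpoint_every=3)
try:
    job.run(events, fail_at=5)  # updates state for records 0..4, then crashes
except RuntimeError:
    pass
recovered = job.run(events)  # restarts from the checkpoint taken after record 2 and replays 3..6
print(recovered)  # {'a': 3, 'b': 2, 'c': 2}
```

The recovered counts match a failure-free run even though records 3 and 4 were processed twice. Exactly-once results in external systems additionally require transactional or idempotent sinks.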

Open Source Business Model Elements

The business model around Apache Flink is rooted in transparency, collaboration, and open licensing. Key elements include:

| Element | Description |
|---|---|
| Licensing Freedom | Uses the permissive Apache 2.0 License, allowing free modification and redistribution. |
| Community-Driven Development | Continuous contributions from developers worldwide lower development costs and accelerate innovation. |
| Transparent Governance | Open decision-making processes foster trust and collective ownership of the project. |
| Revenue Ecosystem | Companies generate revenue by building services around Flink, such as consulting, managed cloud offerings, and training. |

Beyond these traditional methods, parts of the wider open source world are experimenting with alternative funding models, such as tokenized contributions. For example, articles such as "Arbitrum and Open Source Scaling Solutions" point to growing interest in blending blockchain innovations with open source funding.

Funding Channels

Apache Flink is sustained by a mix of:

- Community Contributions: Volunteer code, bug reports, and documentation efforts.

- Corporate Sponsorships: Tech companies that run Flink in production invest engineering time and other resources in the project.

- Public Grants: Funding from governmental and institutional initiatives.

- Managed Services and Training: Professional support and certification programs that bolster the ecosystem.

Applications and Use Cases

Apache Flink’s versatility means it can be applied in a myriad of real-world scenarios. Here are a few practical examples where its features shine:

Example 1: Real-Time Analytics in Finance

Financial institutions can use Flink for fraud detection and high-frequency trading analytics. Its ability to process large volumes of data in real time enables low-latency anomaly detection, a critical component of preventing fraudulent transactions.
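
A common fraud pattern is a tiny "test" charge followed shortly by a large one on the same account. The sketch below is plain Python, not Flink code, and the thresholds and one-minute window are hypothetical values chosen for illustration. The per-account dictionary plays the role that keyed state (for example a `ValueState` on a stream partitioned with `keyBy` on the account ID) would play in a Flink job.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List

# Hypothetical thresholds, chosen only for illustration.
SMALL_AMOUNT = 1.00
LARGE_AMOUNT = 500.00
WINDOW_SECONDS = 60


@dataclass
class Txn:
    account: str
    amount: float
    ts: int  # event time in seconds


def detect(txns: Iterable[Txn]) -> List[Txn]:
    """Flag a large transaction that directly follows a small one on the same account within the window."""
    last_small_ts: Dict[str, int] = {}  # per-account state: time of the last small transaction
    alerts: List[Txn] = []
    for t in txns:
        prev = last_small_ts.pop(t.account, None)  # any new transaction clears the pending flag
        if prev is not None and t.amount > LARGE_AMOUNT and t.ts - prev <= WINDOW_SECONDS:
            alerts.append(t)
        if t.amount < SMALL_AMOUNT:
            last_small_ts[t.account] = t.ts
    return alerts


stream = [
    Txn("acct-1", 0.50, 0),
    Txn("acct-2", 20.00, 5),
    Txn("acct-1", 900.00, 30),   # small then large within 60 s: alert
    Txn("acct-2", 0.10, 40),
    Txn("acct-2", 700.00, 200),  # large, but 160 s after the small one: no alert
]
print([(a.account, a.amount) for a in detect(stream)])  # [('acct-1', 900.0)]
```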

Example 2: IoT Data Processing

In the rapidly expanding Internet of Things (IoT) space, sensors generate continuous data streams. Flink can aggregate, filter, and analyze this data, enabling predictive maintenance and operational health monitoring in industries such as manufacturing and utilities.
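
Aggregating sensor readings often means computing a statistic per sensor over fixed, non-overlapping time intervals, which Flink calls tumbling windows. This plain-Python sketch (hypothetical names, not Flink’s API) assigns each reading to a window by its event timestamp and averages per sensor and window. It processes a finite list and ignores watermarks and late data, which Flink uses to decide when a window over an unbounded stream is complete.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Reading = Tuple[str, int, float]  # (sensor_id, event_time_seconds, value)


def tumbling_averages(readings: Iterable[Reading], size: int) -> Dict[Tuple[str, int], float]:
    """Average readings per sensor in fixed, non-overlapping windows of `size` seconds.

    Result keys are (sensor_id, window_start), where window_start = ts - ts % size.
    """
    sums: Dict[Tuple[str, int], float] = defaultdict(float)
    counts: Dict[Tuple[str, int], int] = defaultdict(int)
    for sensor, ts, value in readings:
        window_start = ts - ts % size  # each reading belongs to exactly one window
        sums[(sensor, window_start)] += value
        counts[(sensor, window_start)] += 1
    return {k: sums[k] / counts[k] for k in sums}


readings = [
    ("pump-1", 3, 70.0), ("pump-1", 42, 74.0), ("pump-2", 10, 55.0),
    ("pump-1", 65, 90.0), ("pump-2", 61, 57.0),
]
result = tumbling_averages(readings, size=60)
print(result)  # {('pump-1', 0): 72.0, ('pump-2', 0): 55.0, ('pump-1', 60): 90.0, ('pump-2', 60): 57.0}
```

A predictive-maintenance job might then compare each window’s average against a per-sensor threshold.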

Example 3: Telecommunications and Social Media

Telecom companies use Flink to analyze call detail records and network traffic. Similarly, social media platforms can use Flink’s stream processing to analyze user interactions and deliver personalized content recommendations in real time.
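
Per-user interaction streams are often grouped into sessions: bursts of activity separated by a period of inactivity, which Flink supports as session windows. The plain-Python sketch below (hypothetical names and a hypothetical 300-second gap, not Flink’s API) extends a user’s current session while events keep arriving within the gap and opens a new one otherwise. Unlike Flink, it assumes each user’s events arrive in timestamp order.

```python
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, int]  # (user_id, event_time_seconds)


def sessionize(events: Iterable[Event], gap: int) -> Dict[str, List[Tuple[int, int]]]:
    """Group each user's events into sessions separated by more than `gap` seconds of inactivity.

    Returns user_id -> list of (session_start, session_end) pairs.
    """
    sessions: Dict[str, List[Tuple[int, int]]] = {}
    for user, ts in events:
        user_sessions = sessions.setdefault(user, [])
        if user_sessions and ts - user_sessions[-1][1] <= gap:
            start, _ = user_sessions[-1]
            user_sessions[-1] = (start, ts)  # still active: extend the current session
        else:
            user_sessions.append((ts, ts))  # gap exceeded (or first event): open a new session
    return sessions


clicks = [("u1", 0), ("u2", 5), ("u1", 20), ("u1", 400), ("u2", 30), ("u1", 410)]
print(sessionize(clicks, gap=300))  # {'u1': [(0, 20), (400, 410)], 'u2': [(5, 30)]}
```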

Challenges and Limitations

Like any robust technology, Apache Flink faces its share of challenges:

- Technical Complexity: The distributed nature of Flink and the high demands of low latency processing require deep technical expertise. This can be a barrier for smaller organizations without specialized talent.

- Resource Intensity: Running Flink at scale demands significant hardware and infrastructure investments, particularly for fault tolerance and state management.

- Adoption Barriers: Despite its strengths, integrating Flink with existing systems can involve a steep learning curve, especially in organizations accustomed to traditional batch processing frameworks.

- Evolving Funding Models: While traditional funding streams are solid, token-based models remain largely conceptual. Discussions such as "Arbitrum and Community Governance" explore ways to bridge the gap, but these methods are still evolving.

Future Outlook and Innovations

The future of Apache Flink looks promising with continuous innovations on the horizon. Here are some trends and predictions:

- Increased Integration with AI and Machine Learning: Combining stream processing with AI could enable real-time decision-making in more complex scenarios. Flink’s API ecosystem might see enhanced support for integrating with ML libraries.

- Adoption of Blockchain for Open Source Funding: New funding mechanisms, such as those discussed in "Arbitrum and Token Standards", may emerge to provide decentralized financial support for open source projects, which could further democratize developers’ access to resources.

- Enhanced Ecosystem Collaboration: As the community grows, we expect better interoperability with other big data tools and cloud platforms, creating more robust hybrid solutions.

- Scalability Improvements: Future versions of Flink may introduce more efficient state handling and resource management that reduce the overall cost of large-scale deployments.

- Developer Support and Onboarding: Initiatives such as webinars and community-driven training programs can lower the entry barrier for new developers and help sustain Flink’s developer community.

Practical Tips for Developers

Developers looking to adopt Apache Flink should consider the following best practices:

- Engage with the Community: Contribute to forums, mailing lists, and GitHub discussions to stay updated on the latest improvements.

- Continuous Learning: Leverage online tutorials from the official Flink website and attend webinars for deeper insights.

- Experiment with Integration: Explore how Flink can be integrated with other tools in your technology stack to create a seamless, real-time processing pipeline.

- Monitor Resource Utilization: Given its resource-intensive nature, invest in infrastructure monitoring tools to ensure optimal performance.
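
Alongside infrastructure monitoring tools, Flink’s REST API can be polled to check job health. This sketch assumes the `/jobs/overview` endpoint served by the JobManager on its default REST port 8081 and a response containing a `jobs` list whose entries carry `name` and `state` fields; verify the path and field names against the REST API reference for your Flink version. `FLINK_REST`, `fetch_jobs_overview`, and `non_running_jobs` are hypothetical names.

```python
import json
import urllib.request
from typing import Dict, List

# Assumption: a JobManager exposing its REST API on localhost at the default port 8081.
FLINK_REST = "http://localhost:8081"


def fetch_jobs_overview(base_url: str = FLINK_REST) -> Dict:
    """GET /jobs/overview and decode the JSON body (requires a running cluster)."""
    with urllib.request.urlopen(f"{base_url}/jobs/overview", timeout=5) as resp:
        return json.load(resp)


def non_running_jobs(overview: Dict) -> List[str]:
    """Return 'name (state)' for every job whose state is not RUNNING."""
    return [
        f"{job.get('name')} ({job.get('state')})"
        for job in overview.get("jobs", [])
        if job.get("state") != "RUNNING"
    ]


# Offline example using a hand-written payload shaped like the assumed response.
sample = {"jobs": [
    {"jid": "abc", "name": "fraud-detector", "state": "RUNNING"},
    {"jid": "def", "name": "iot-rollups", "state": "FAILED"},
]}
print(non_running_jobs(sample))  # ['iot-rollups (FAILED)']
```

Against a live cluster, `non_running_jobs(fetch_jobs_overview())` could feed an alerting script.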

Additional Insights from the Ecosystem

Beyond traditional funding, open source projects are increasingly exploring alternative economic models. For instance, some initiatives are examining the intersection of blockchain and open source licensing. This evolution is highlighted in discussions such as "Arbitrum and Open Source License Compatibility", where decentralization and tokenization are emerging themes.

Moreover, ethical funding methods are gaining traction in the developer community. Articles such as "Open Source: A Goldmine for Indie Hackers" provide further context on how innovative business models can affect project sustainability, themes that align with Apache Flink’s emphasis on transparency and community trust.

Advantages of Apache Flink

- Real-Time Processing: Delivers immediate analytics for time-critical applications.

- Fault Tolerance: Uses state snapshots and checkpointing to ensure data consistency.

- Scalable Architecture: Scales out across more machines as data volumes and processing demands grow.

- Cross-Language Support: API availability in multiple programming languages enhances developer flexibility.

- Open Governance: Transparent decision-making processes build community trust.
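
Part of what lets Flink scale keyed workloads is how it partitions state: keys are hashed into a fixed number of key groups (set by the job’s maximum parallelism), and each parallel subtask owns a contiguous range of key groups. The sketch below models that assignment under stated simplifications: `zlib.crc32` stands in for the murmur hash Flink applies to the key’s `hashCode()`, and `MAX_PARALLELISM = 128` is an illustrative value. Because a key’s group never changes, rescaling moves whole key groups between subtasks instead of rehashing every key.

```python
import zlib
from collections import Counter

MAX_PARALLELISM = 128  # illustrative number of key groups; fixed for the lifetime of the job's state


def key_group(key: str, max_parallelism: int = MAX_PARALLELISM) -> int:
    # Stand-in hash: Flink uses a murmur hash of the key's hashCode(); crc32 keeps this sketch deterministic.
    return zlib.crc32(key.encode("utf-8")) % max_parallelism


def subtask_for(key: str, parallelism: int, max_parallelism: int = MAX_PARALLELISM) -> int:
    # Each subtask owns a contiguous range of key groups.
    return key_group(key, max_parallelism) * parallelism // max_parallelism


keys = [f"sensor-{i}" for i in range(1000)]
for p in (2, 4):
    # Number of keys handled by each subtask at parallelism p.
    print(p, sorted(Counter(subtask_for(k, p) for k in keys).items()))
```

With these formulas, a key on subtask `s` at parallelism 2 lands on subtask `2s` or `2s + 1` at parallelism 4, because each subtask’s key-group range is split in half.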

Summary

Apache Flink is more than just a stream processing framework—it represents a multifaceted ecosystem built on open source principles. From its technical innovations in real-time data analytics to its robust funding model combining community, corporate, and institutional support, Flink offers a shining example of sustainable, transparent software development. By leveraging the freedoms of the Apache 2.0 License and fostering a collaborative community, Flink continues to drive innovation in the realm of big data.

The exploration of alternative funding methods, such as those involving blockchain tokenization (as discussed in "Arbitrum and Ethereum Interoperability"), further underscores the evolving nature of open source sustainability. As developers, data engineers, and organizations navigate the challenges of modern data processing, Apache Flink stands as an emblem of resilience, scalability, and community-driven success.

In conclusion, whether you are seeking a robust real-time analytics engine, exploring the potential of decentralized funding, or simply interested in the future of open source software, Apache Flink serves as an invaluable resource for innovation. Continued collaboration, transparent governance, and a commitment to technical excellence ensure that it will remain at the forefront of data processing technologies for years to come.

Further Reading and Resources

For those interested in delving deeper into the world of Apache Flink and related open source ecosystems, consider exploring the following links:

- Apache Flink Official Website (https://flink.apache.org) – Comprehensive documentation and updates.

- Apache Software Foundation (https://www.apache.org) – Learn more about the principles that guide open source projects.

- GitHub Repository for Apache Flink (https://github.com/apache/flink) – Check out the code and contribute.

- Arbitrum and Community Governance – Insights on emerging governance models.

- Open Source: A Goldmine for Indie Hackers – An exploration of sustainable funding in the open source space.

This comprehensive guide to Apache Flink offers both technical insights and strategic perspectives, ensuring that whether you are a seasoned developer or a newcomer to the open source arena, you have a valuable resource at your fingertips. Happy coding, and may your journey into real-time data analytics be both innovative and sustainable!
