VideoSnap Vision: Real-Time Object Recognition PWA Architecture


Real-Time Object Recognition in a React PWA with Hugging Face Transformers

Hey folks! I recently built a super fun Progressive Web App (PWA) that does real-time object recognition using a small multimodal LLM from Hugging Face. Picture this: point your webcam at something, and the app instantly tells you what it sees—a dog, a cup, or even your favorite sneaker! It works right in your browser, even offline, and feels just like a native app. Pretty cool, right? Here’s how I pulled it off with React, TensorFlow.js, and a dash of PWA magic. Let’s dive in!

Why Real-Time Video in a PWA?

I'm a big fan of apps that are always ready to go, even if my internet connection isn't. Plus, who wants to rely on a beefy server for live video processing if you can do it on the device? PWAs are fantastic for this: they're installable, cache what they need for offline use, and work across all sorts of devices. For the brains of the operation—the Machine Learning part—I picked a small multimodal LLM from Hugging Face (think a lightweight version of CLIP). These models are champs at recognizing objects in images or video frames and are nimble enough to run smoothly in the browser.
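The post doesn't name the exact model, so here's just a rough illustration of how a CLIP-style model usually turns a video frame into a label: the frame and each candidate label are embedded as vectors, the vectors are compared with cosine similarity, and a softmax turns the similarities into scores. The embeddings below are toy values invented for the example, and `rankLabels` and its `scale` parameter are hypothetical names, not part of any library.

```javascript
// Cosine similarity between two equal-length numeric vectors.
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Numerically stable softmax: subtract the max before exponentiating.
function softmax(scores) {
  const max = Math.max(...scores);
  const exps = scores.map((s) => Math.exp(s - max));
  const sum = exps.reduce((acc, e) => acc + e, 0);
  return exps.map((e) => e / sum);
}

// Rank candidate labels for one image embedding (hypothetical helper).
// `scale` sharpens the softmax; 100 is an assumed value for illustration.
function rankLabels(imageEmbedding, labelEmbeddings, scale = 100) {
  const labels = Object.keys(labelEmbeddings);
  const logits = labels.map(
    (label) => scale * cosineSimilarity(imageEmbedding, labelEmbeddings[label])
  );
  const probs = softmax(logits);
  return labels
    .map((label, i) => ({ label, score: probs[i] }))
    .sort((x, y) => y.score - x.score);
}

// Toy 3-dimensional embeddings, made up for this sketch.
const toyLabels = { dog: [1, 0, 0], cup: [0, 1, 0], sneaker: [0, 0, 1] };
const ranked = rankLabels([0.9, 0.1, 0.2], toyLabels);
console.log(ranked[0].label); // dog
```

In a real app the embeddings would come from the model itself; only the ranking step is shown here.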

Setting Up the React PWA

First things first, I got my React PWA project started using Create React App’s PWA template:

npx create-react-app video-object-pwa --template cra-template-pwa

cd video-object-pwa

npm start

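The template set me up with a service-worker.js for handling offline caching and a manifest.json to give it that authentic app-like feel (like being installable on your home screen!). One thing to know: the template leaves the service worker unregistered by default, so offline caching only kicks in after src/index.js calls serviceWorkerRegistration.register() instead of unregister(), and only in a production build. I popped into the manifest.json to name my app “VideoSnap” and gave it a snazzy icon.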
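To make the manifest edit concrete, here's a minimal sketch of what the fields might look like, written as a JavaScript object so it can be checked and then serialized into public/manifest.json. Only the name "VideoSnap" comes from the post. The icon file names and colors are the template's placeholder defaults, and `missingManifestFields` is a hypothetical helper that checks a few fields browsers commonly look at before offering installation (the exact criteria vary by browser).

```javascript
// Sketch of the manifest contents. Icon paths and colors are placeholders.
const manifest = {
  short_name: 'VideoSnap',
  name: 'VideoSnap',
  icons: [
    { src: 'logo192.png', type: 'image/png', sizes: '192x192' },
    { src: 'logo512.png', type: 'image/png', sizes: '512x512' },
  ],
  start_url: '.',
  display: 'standalone',
  theme_color: '#000000',
  background_color: '#ffffff',
};

// Assumed list of fields worth checking; not an official requirement list.
const EXPECTED_FIELDS = ['name', 'icons', 'start_url', 'display'];

// Returns the expected fields that are absent or empty (hypothetical helper).
function missingManifestFields(m) {
  return EXPECTED_FIELDS.filter((key) => {
    const value = m[key];
    return (
      value === undefined ||
      value === '' ||
      (Array.isArray(value) && value.length === 0)
    );
  });
}

console.log(missingManifestFields(manifest)); // []
console.log(JSON.stringify(manifest, null, 2)); // paste into public/manifest.json
```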

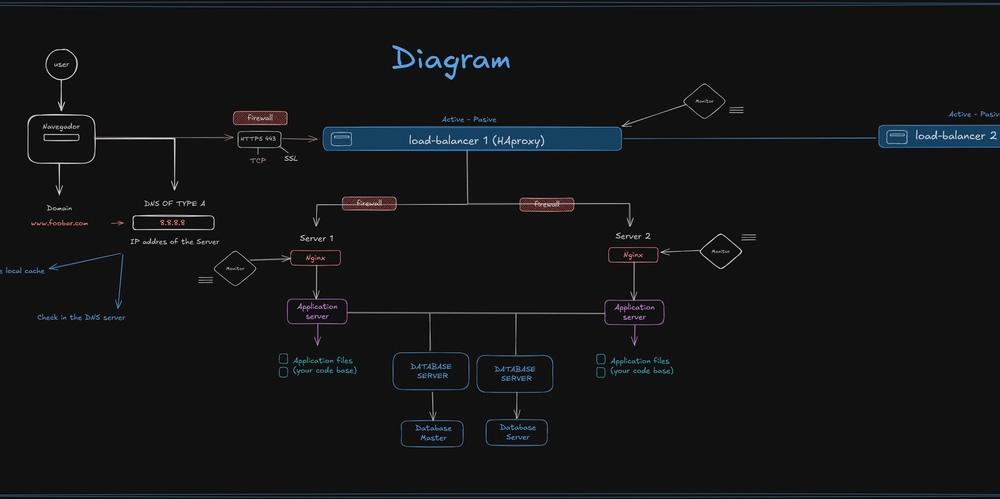

Our App's Blueprint: The Architecture

Before we get into the nitty-gritty of code, let's take a bird's-eye view of how VideoSnap is put together. A picture is worth a thousand words, so here's a diagram (imagine this rendered beautifully with Eraser.io!):

Let's break down what's happening:

- User's Device: Everything happens right here! No servers involved for the core functionality.
- Web Browser: This is our app's home.
- VideoSnap PWA (React App): This is our actual application code.
  - App Shell & UI: The main interface you see and interact with.
  - VideoRecognizer Component: The star of the show, handling webcam input and displaying predictions.
  - PWA Features:
    - Manifest.json: Tells the browser how to treat our app (icon, name, installability).
    - Service Worker: The background hero that caches assets and the ML model, enabling offline use and speeding up subsequent loads. It intercepts network requests and can serve files directly from the...
- Browser Caches:
  - PWAAssetsCache: Stores our app's code (JS, CSS, images).
  - ModelCache: Crucially, this holds the downloaded ML model files. Once downloaded, they're available offline!
- In-Browser ML Stack:
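The service worker and cache items in the list can be sketched as a cache-first fetch handler that sends model files to ModelCache and everything else to PWAAssetsCache. This is a hand-rolled illustration using the browser's Cache API, not the code the CRA template generates (that one builds on Workbox). The `/models/` path prefix and the helper names `pickCacheName` and `cacheFirst` are assumptions made for the example.

```javascript
// Cache names taken from the architecture list.
const ASSET_CACHE = 'PWAAssetsCache';
const MODEL_CACHE = 'ModelCache';

// Assumption: model files are served from a path starting with /models/.
const MODEL_PATH_PREFIX = '/models/';

// Decide which cache a request belongs to (hypothetical helper).
function pickCacheName(requestUrl) {
  // The base URL only matters for relative inputs like '/models/x'.
  const { pathname } = new URL(requestUrl, 'https://example.invalid');
  return pathname.startsWith(MODEL_PATH_PREFIX) ? MODEL_CACHE : ASSET_CACHE;
}

// Cache-first: serve from the cache if present, otherwise fetch and store.
async function cacheFirst(request, cacheStorage, fetchFn) {
  const cache = await cacheStorage.open(pickCacheName(request.url));
  const cached = await cache.match(request);
  if (cached) return cached;

  const response = await fetchFn(request);
  // Only store successful responses; clone because a body can be read once.
  if (response.ok) await cache.put(request, response.clone());
  return response;
}

// Register the handler only when actually running inside a service worker.
if (
  typeof ServiceWorkerGlobalScope !== 'undefined' &&
  self instanceof ServiceWorkerGlobalScope
) {
  self.addEventListener('fetch', (event) => {
    if (event.request.method !== 'GET') return; // the Cache API stores GETs only
    event.respondWith(cacheFirst(event.request, caches, (req) => fetch(req)));
  });
}
```

Keeping the model in its own cache means app code can be refreshed on each release without forcing users to download the (much larger) model files again.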
