The Silent Crisis in AI Implementation: Bridging the Data Science-Production Gap

I was analyzing different AI initiatives across multiple enterprises, and I noticed a troubling pattern:

Organizations have brilliant data scientists building impressive models that never see the light of production.

Let me explain why this matters - and how MLOps fundamentally changes the game.

The $250K Jupyter Notebook Problem

I was recently in a conversation with a VP of Data Science, who shared a painful truth: "We have a collection of sophisticated notebooks that cost us over $250K in talent hours to develop. Only 8% of them ever made it to production."

Surprisingly, this is not an isolated case! Industry research suggests that upwards of 80% of data science projects never make it to production deployment. The disconnect between research-oriented data science and production-grade engineering is costing companies millions while delaying AI-driven transformation.

This is precisely where MLOps steps in.

What Does MLOps Actually Solve?

MLOps isn't just another technical buzzword - it's the structured approach that transforms experimental AI into reliable, scalable systems that create business value.

Think of MLOps as the critical infrastructure connecting data science innovation with production reality:

Model Versioning & Lineage Tracking

Ever tried debugging why a model's performance suddenly declined in production? Without proper versioning that captures not just code but data, parameters, and environment configurations, it's nearly impossible. MLOps brings Git-like traceability to every aspect of the model lifecycle.

Continuous Training & Integration

Models aren't static artifacts - they're living systems that need updating as data patterns shift. In one of my recent programs, we built an automated, closed-loop retraining pipeline that reduced model degradation by 37% through systematic evaluation and controlled deployment of updated models.

Containerized Deployments & Orchestration

The "it works on my machine" problem is exponentially worse with ML systems. Containerization ensures consistent runtime environments from development through testing and into production - drastically reducing the "why does it work in dev but not in prod?" troubleshooting sessions.

Model Monitoring & Drift Detection

A model slowly and silently degrading in production can cost millions before anyone actually notices. Comprehensive monitoring frameworks that detect data drift, concept drift, and performance degradation provide early warnings that prevent costly failures.

Resource & Cost Optimization

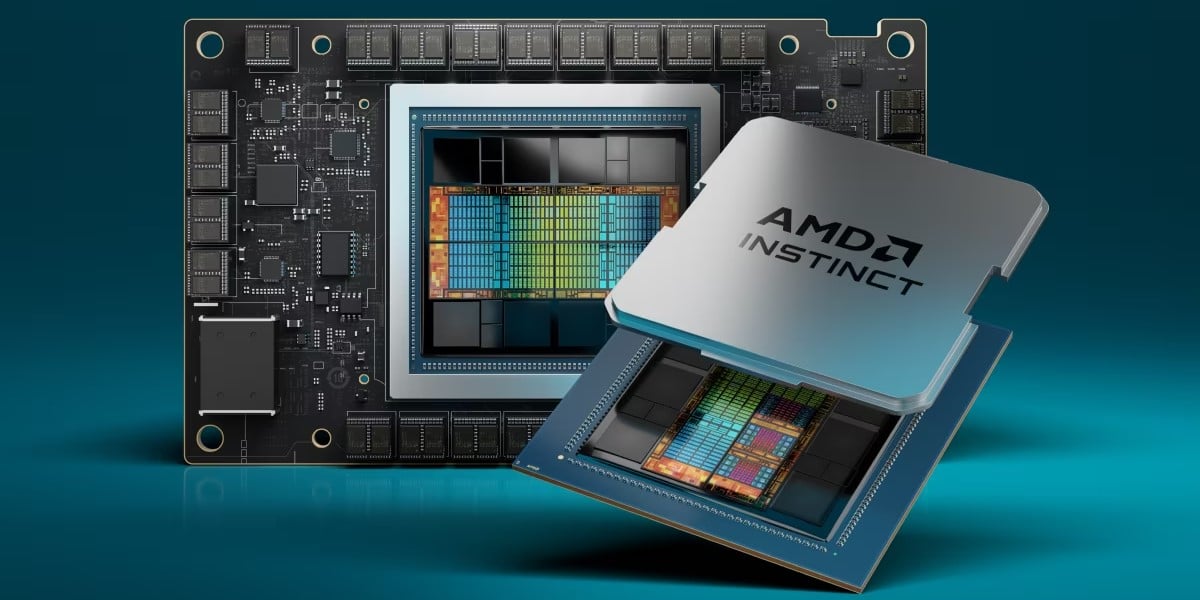

In one success story I can point to, implementing proper resource management for model serving cut infrastructure spend by a factor of four. MLOps isn't just about making models work - it's also about making them economically viable at scale.
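
To make the resource-management point a little more concrete, here is a minimal Python sketch of one common lever: sizing serving replicas to each hour's load instead of provisioning for peak all day. The per-replica throughput, the target utilization, the load profile, and the function names are all illustrative assumptions, not details from the scenario above. Real savings depend heavily on how bursty the traffic is.

```python
import math
from typing import Sequence


def replicas_needed(rps: float, per_replica_rps: float,
                    target_utilization: float = 0.7, min_replicas: int = 1) -> int:
    """Smallest replica count that keeps each replica at or below the target utilization."""
    usable_capacity = per_replica_rps * target_utilization  # leave headroom for spikes
    return max(min_replicas, math.ceil(rps / usable_capacity))


def replica_hours(hourly_rps: Sequence[float], per_replica_rps: float,
                  target_utilization: float = 0.7) -> tuple[int, int]:
    """Replica-hours for static peak provisioning vs. resizing every hour."""
    per_hour = [replicas_needed(r, per_replica_rps, target_utilization) for r in hourly_rps]
    static = max(per_hour) * len(per_hour)  # peak-sized fleet running all the time
    scaled = sum(per_hour)                  # fleet resized to each hour's load
    return static, scaled


# Hypothetical 12-hour load profile in requests per second.
load = [20, 20, 25, 40, 120, 300, 450, 380, 200, 90, 40, 25]
static, scaled = replica_hours(load, per_replica_rps=50, target_utilization=0.75)
print(f"static: {static} replica-hours, autoscaled: {scaled} replica-hours")
```

Target utilization is the main design knob. Lower values buy headroom for sudden traffic spikes, but they require more replicas and therefore more spend.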

The Organizational Challenge

The most significant insight I've gained is that MLOps isn't purely a technical challenge - it's also an organizational one. It requires:

Breaking down silos between data scientists, ML engineers, and operations teams

Creating shared ownership of model performance in production

Building feedback loops that allow production insights to inform research directions

Establishing clear metrics that bridge technical performance and business impact

Moving Forward: The MLOps Maturity Journey

In my experience implementing MLOps practices, I've observed that success comes from progressing through maturity stages one at a time:

Stage 1: Manual Deployment

Moving beyond notebooks to reproducible scripts and documented dependencies.
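
One way a reproducible script can also serve the versioning and lineage tracking described earlier is to record everything a training run depended on. This minimal Python sketch captures a code version, a content hash of the training data, the hyperparameters, and basic environment details, then derives a deterministic model ID from them. The record layout and function names are hypothetical, and so is the assumption that the caller passes in something like a Git commit SHA. The sketch illustrates the idea and does not replace a dedicated tracking tool.

```python
import hashlib
import json
import platform
import sys
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class LineageRecord:
    code_version: str   # hypothetical: e.g. the Git commit SHA the run was launched from
    data_sha256: str    # content hash of the exact training data used
    params: dict = field(default_factory=dict)       # hyperparameters
    environment: dict = field(default_factory=dict)  # runtime details

    def model_id(self) -> str:
        """Deterministic ID: identical code, data, params, and environment give the same ID."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]


def build_record(code_version: str, training_data: bytes, params: dict) -> LineageRecord:
    """Hypothetical helper that assembles a lineage record for one training run."""
    environment = {
        "python": platform.python_version(),
        "platform": sys.platform,
    }
    return LineageRecord(
        code_version=code_version,
        data_sha256=hashlib.sha256(training_data).hexdigest(),
        params=dict(params),
        environment=environment,
    )


record = build_record("abc1234", b"feature,label\n0.4,1\n0.9,0\n",
                      {"learning_rate": 0.01, "epochs": 20})
print(record.model_id())
print(json.dumps(asdict(record), indent=2))
```

Storing the full record next to the model artifact is what makes a production regression traceable. You can diff two records and see whether the code, the data, or the parameters changed.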

Stage 2: Automated Training & Evaluation

Establishing clear testing protocols and automated quality gates.
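
An automated quality gate can be as simple as a list of metric thresholds that a candidate model must clear. It can be paired with a check that the candidate actually beats the model already serving, which is the "controlled deployment" half of the retraining loop described earlier. In this Python sketch, the metric names, thresholds, minimum-gain margin, and function names are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gate:
    metric: str
    threshold: float
    higher_is_better: bool = True


def gate_failures(metrics: dict[str, float], gates: list[Gate]) -> list[str]:
    """Return a human-readable reason for every gate the candidate fails."""
    failures = []
    for gate in gates:
        value = metrics.get(gate.metric)
        if value is None:
            failures.append(f"{gate.metric}: not reported")
        elif gate.higher_is_better and value < gate.threshold:
            failures.append(f"{gate.metric}: {value} < {gate.threshold}")
        elif not gate.higher_is_better and value > gate.threshold:
            failures.append(f"{gate.metric}: {value} > {gate.threshold}")
    return failures


def should_promote(candidate: dict[str, float], champion: dict[str, float],
                   gates: list[Gate], key_metric: str, min_gain: float = 0.005) -> bool:
    """Promote only if all gates pass and key_metric (assumed higher-is-better) clearly improves."""
    if gate_failures(candidate, gates):
        return False
    return candidate[key_metric] >= champion[key_metric] + min_gain


# Hypothetical gates and evaluation results.
gates = [Gate("auc", 0.85), Gate("p95_latency_ms", 120.0, higher_is_better=False)]
champion = {"auc": 0.88, "p95_latency_ms": 95.0}
candidate = {"auc": 0.90, "p95_latency_ms": 101.0}
print(gate_failures(candidate, gates))
print(should_promote(candidate, champion, gates, key_metric="auc"))
```

Returning reasons, not just a boolean, makes a blocked deployment self-explanatory in CI logs.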

Stage 3: Continuous Deployment

Building confidence through progressive rollouts and automated canary testing.
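
Progressive rollouts usually rest on two small pieces. The first is a deterministic way to send a fixed fraction of traffic to the new model. The second is a rule for deciding whether the canary is healthy enough to widen. This Python sketch hashes a stable key such as a user ID into one of 10,000 buckets, so each caller consistently sees the same variant. The bucket count, the error-rate comparison, the tolerance, and the function names are illustrative assumptions, not a standard.

```python
import hashlib

BUCKETS = 10_000  # traffic is split in 0.01% increments


def bucket(key: str) -> int:
    """Map a stable key (e.g. a user ID) to a bucket in [0, BUCKETS)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % BUCKETS


def route(key: str, canary_fraction: float) -> str:
    """Send about `canary_fraction` of keys to the canary, always the same keys."""
    return "canary" if bucket(key) < canary_fraction * BUCKETS else "stable"


def canary_verdict(stable_error_rate: float, canary_error_rate: float,
                   tolerance: float = 0.01) -> str:
    """Roll back if the canary is noticeably worse than stable; otherwise widen the rollout."""
    if canary_error_rate > stable_error_rate + tolerance:
        return "rollback"
    return "widen"


# Hypothetical rollout step at 5% of traffic.
share = sum(route(f"user-{i}", 0.05) == "canary" for i in range(20_000)) / 20_000
print(f"observed canary share: {share:.3f}")
print(canary_verdict(stable_error_rate=0.020, canary_error_rate=0.024))
```

Routing depends only on the hash of the key. Raising the fraction from 5% to 25% therefore keeps every user who was already on the canary there, which keeps comparisons consistent between rollout steps.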

Stage 4: Observability & Automated Intervention

Creating systems that not only detect issues but can respond appropriately.
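
Detection and response can be wired together in a few lines. This Python sketch uses the Population Stability Index (PSI) to compare a feature's live distribution against its training-time reference. PSI is one common drift statistic, chosen here purely for illustration. The sketch triggers an intervention only when several recent checks breach the threshold, so a single noisy batch does not cause a rollback. The 0.2 threshold is a frequently quoted rule of thumb, not a universal constant. The window sizes, the `DriftGuard` class, and the "intervene" action are hypothetical.

```python
import math
import random
from collections import deque
from typing import Sequence


def psi(reference: Sequence[float], current: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index over equal-width bins spanning the reference range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range live values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps avoids log(0) when a bin is empty.
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


class DriftGuard:
    """Hypothetical policy: intervene when `min_breaches` of the last `window` checks drift."""

    def __init__(self, threshold: float = 0.2, window: int = 5, min_breaches: int = 3):
        self.threshold = threshold
        self.min_breaches = min_breaches
        self.recent = deque(maxlen=window)  # True means that check breached the threshold

    def check(self, reference: Sequence[float], current: Sequence[float]) -> str:
        self.recent.append(psi(reference, current) > self.threshold)
        if sum(self.recent) >= self.min_breaches:
            return "intervene"  # e.g. roll back, fall back, or open a retraining job
        return "ok"


# Synthetic data standing in for one feature's values.
rng = random.Random(0)
reference = [rng.uniform(0, 1) for _ in range(1000)]  # training-time distribution
healthy = [rng.uniform(0, 1) for _ in range(1000)]    # live batch, same distribution
shifted = [rng.uniform(0.5, 1) for _ in range(1000)]  # live batch concentrated in the upper half

guard = DriftGuard(threshold=0.2, window=5, min_breaches=3)
for batch in [healthy, shifted, healthy, shifted, shifted]:
    print(guard.check(reference, batch))
```

Requiring several breaches within a window trades a little detection latency for far fewer false alarms. That trade-off matters once an alert can trigger an automatic rollback.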

Stage 5: Full Lifecycle Integration

Closing the loop between business outcomes and model development priorities.

The organizations seeing the greatest ROI from AI investments are those methodically advancing through these maturity stages rather than attempting a massive transformation overnight.

A Question for All

I'm genuinely curious: What has been your biggest MLOps challenge or success story? Whether you're just beginning to move models to production or have built sophisticated MLOps platforms, I'd love to hear what you've learned along the way.

Share your experiences below.

#AIEngineering #MachineLearning #MLOps #MLOpsJourney #MachineLearningOps #DataScience #DataScienceReality #EnterpriseAI #ModelDeployment #ProductionML #AIInProduction #DevOps #TechTalkTuesday
