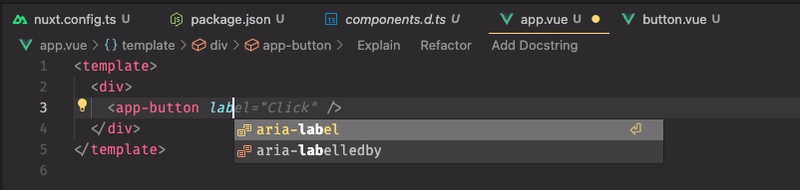

Study Note dlt Fundamentals Course - Lesson 2: dlt Sources and Resources, Create First dlt Pipeline


Overview

In this lesson, you learned about creating a dlt pipeline by grouping resources into a source. You also learned about dlt transformers and how to use them to perform additional steps in the pipeline.

Key Concepts

- dlt Resources: A resource is a logical grouping of data within a data source, typically holding data of similar structure and origin.

- dlt Sources: A source is a logical grouping of resources, e.g., endpoints of a single API.

- dlt Transformers: Special dlt resources that can be fed data from another resource to perform additional steps in the pipeline.

Creating a dlt Pipeline

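A dlt pipeline loads data from resources, which are usually grouped into a source. Here's an example of how to create a pipeline using a list of dictionaries:

```python
import dlt

@dlt.resource
def my_dict_list():
    # Yielding the whole list hands dlt the records as one batch.
    yield [
        {"id": 1, "name": "Pikachu"},
        {"id": 2, "name": "Charizard"},
    ]

# Pipeline and dataset names are illustrative; duckdb is the destination used in the exercises.
pipeline = dlt.pipeline(pipeline_name="pokemon_pipeline", destination="duckdb", dataset_name="pokemon_data")
load_info = pipeline.run(my_dict_list())
print(load_info)
```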

Using dlt Sources

A source is a logical grouping of resources. You can declare a source by decorating a function that returns or yields one or more resources with @dlt.source.

```python
@dlt.source
def my_source():
    # other_resource stands for a second resource defined elsewhere.
    return [
        my_dict_list(),
        other_resource(),
    ]
```

Using dlt Transformers

dlt transformers are special resources that can be fed data from another resource to perform additional steps in the pipeline.

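```python
import dlt
import requests

@dlt.transformer
def get_pokemon_info(data):
    # data holds the records passed in from the parent resource.
    for pokemon in data:
        # The endpoint URL is truncated in these notes; only the id placeholder survives.
        response = requests.get(f"{pokemon['id']}>")
        pokemon['info'] = response.json()
    return data
```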
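To make the data flow concrete, here is a minimal sketch that models a resource feeding a transformer with plain Python generators. It does not use dlt itself: `FAKE_API` is a hypothetical lookup table standing in for the HTTP call, and in a real pipeline dlt does the wiring for you (for example through the `data_from` argument of `@dlt.transformer` or the `|` operator between a resource and a transformer).

```python
# Hypothetical lookup table standing in for the HTTP API used in the notes.
FAKE_API = {
    1: {"type": "electric"},
    2: {"type": "fire"},
}


def my_dict_list():
    """Plays the role of a resource: yields records one at a time."""
    yield {"id": 1, "name": "Pikachu"}
    yield {"id": 2, "name": "Charizard"}


def get_pokemon_info(items):
    """Plays the role of a transformer: consumes one stream, yields an enriched one."""
    for pokemon in items:
        # Copy the record and attach the extra details instead of mutating the input.
        yield {**pokemon, "info": FAKE_API[pokemon["id"]]}


# Feed the "resource" into the "transformer" and collect the results.
enriched = list(get_pokemon_info(my_dict_list()))
print(enriched[0])
```

The point of the sketch is the shape of the flow: the transformer never fetches the base records itself, it only adds a step on top of whatever the parent resource produces.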

Exercise 1: Create a Pipeline for GitHub API - Repos Endpoint

- Explore the GitHub API and understand the endpoint to list public repositories for an organization.

- Build the pipeline using `dlt.pipeline`, `dlt.resource`, and `dlt.source` to extract and load data into a destination.

- Use a `duckdb` connection, `sql_client`, or `pipeline.dataset()` to check the number of columns in the `github_repos` table.

Exercise 2: Create a Pipeline for GitHub API - Stargazers Endpoint

- Create a `dlt.transformer` for the "stargazers" endpoint for the `dlt-hub` organization.

- Use the `github_repos` resource as a main resource for the transformer.

- Use a `duckdb` connection, `sql_client`, or `pipeline.dataset()` to check the number of columns in the `github_stargazer` table.
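As a starting point for both exercises, the sketch below builds the request URLs for the two GitHub REST API routes involved: listing an organization's repositories and listing a repository's stargazers. The helper names, the `dlt-hub/dlt` example repository, and the default page size are assumptions made for illustration; the pipeline itself is still left to build.

```python
from urllib.parse import urlencode

GITHUB_API = "https://api.github.com"


def org_repos_url(org, page=1, per_page=100):
    """URL for one page of an organization's public repositories."""
    query = urlencode({"type": "public", "per_page": per_page, "page": page})
    return f"{GITHUB_API}/orgs/{org}/repos?{query}"


def stargazers_url(repo_full_name, page=1, per_page=100):
    """URL for one page of stargazers; repo_full_name looks like 'owner/repo'."""
    query = urlencode({"per_page": per_page, "page": page})
    return f"{GITHUB_API}/repos/{repo_full_name}/stargazers?{query}"


print(org_repos_url("dlt-hub"))
print(stargazers_url("dlt-hub/dlt"))
```

Each repository record returned by the first route carries a `full_name` field, which is what a stargazers transformer would need from its parent resource.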

Reducing Nesting Level of Generated Tables

You can limit how deep dlt goes when generating nested tables and flattening dicts into columns. By default, the library will descend and generate nested tables for all nested lists, without limit.

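```python
# max_table_nesting is an argument of the @dlt.source decorator.
@dlt.source(max_table_nesting=1)
def my_source():
    return [
        my_dict_list(),
    ]
```

Typical Settings

- `max_table_nesting`: The maximum number of levels dlt descends when generating nested tables. With `0`, no nested tables are generated and all nested data is stored as JSON; with `1`, only the root tables get nested tables, and anything deeper is stored as JSON.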
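The effect of a nesting limit can be illustrated with a simplified, pure-Python model. This is not dlt's normalizer, only a sketch under stated assumptions: the `normalize` function is hypothetical, its `max_table_nesting` argument mirrors the name of dlt's setting, nested dicts are always flattened into `parent__child` columns, and the limit is applied only to nested lists, which either become child tables or are kept as JSON strings.

```python
import json


def normalize(row, table, max_table_nesting, level=0, tables=None):
    """Split one nested record into flat rows grouped by table name."""
    if tables is None:
        tables = {}
    flat = {}

    def walk(obj, prefix):
        for key, value in obj.items():
            column = f"{prefix}__{key}" if prefix else key
            if isinstance(value, dict):
                # Nested dict: flatten into extra columns on the same row.
                walk(value, column)
            elif isinstance(value, list) and level < max_table_nesting:
                # Nested list within the limit: each item becomes a child-table row.
                for item in value:
                    child = item if isinstance(item, dict) else {"value": item}
                    normalize(child, f"{table}__{column}", max_table_nesting, level + 1, tables)
            elif isinstance(value, list):
                # Nested list beyond the limit: keep it as a JSON string.
                flat[column] = json.dumps(value)
            else:
                flat[column] = value

    walk(row, "")
    tables.setdefault(table, []).append(flat)
    return tables


record = {
    "id": 1,
    "name": "Pikachu",
    "stats": {"hp": 35},
    "moves": [{"name": "thunderbolt", "learned_by": ["tm", "level-up"]}],
}

flat_only = normalize(record, "pokemon", max_table_nesting=0)
one_level = normalize(record, "pokemon", max_table_nesting=1)
print(sorted(flat_only))  # ['pokemon']
print(sorted(one_level))  # ['pokemon', 'pokemon__moves']
```

With a limit of 0 the `moves` list stays on the `pokemon` row as JSON; with a limit of 1 it moves into a `pokemon__moves` table, while the deeper `learned_by` list is still kept as JSON.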

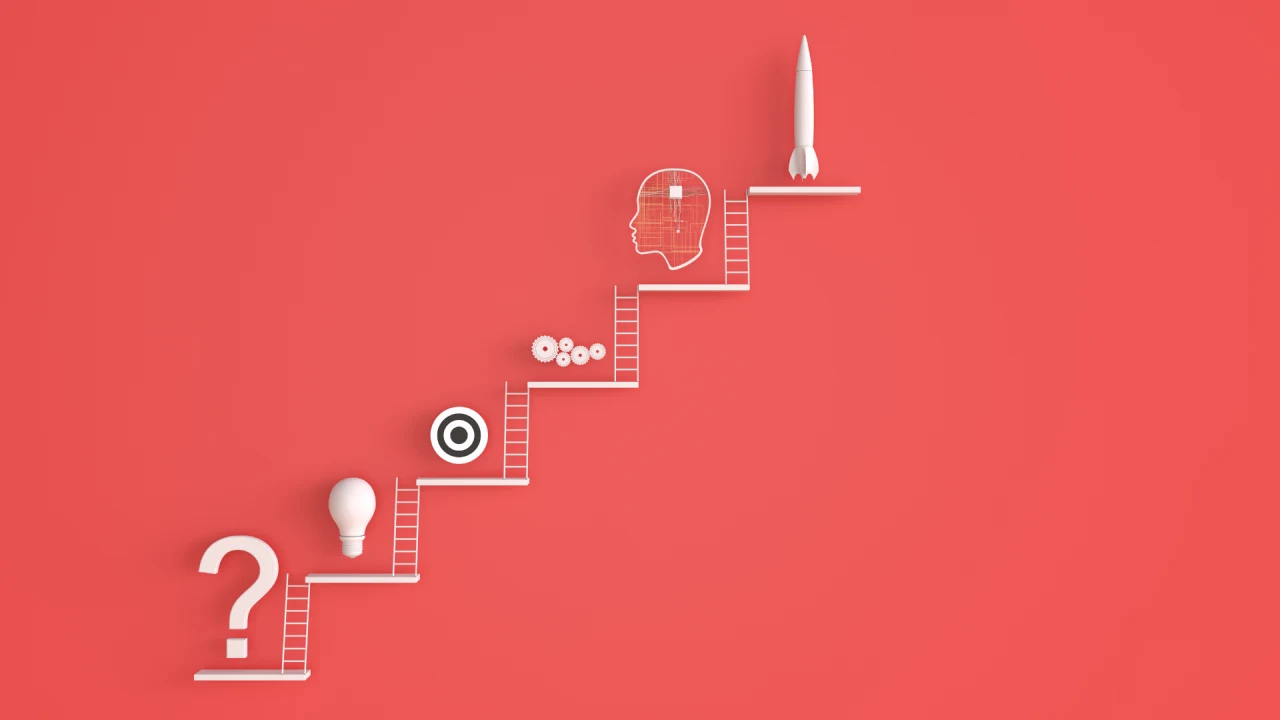

Next Steps

- Proceed to the next lesson to learn more about dlt pipelines and how to use them to extract and load data into a destination.
