Streaming Middleware in Node.js: Transform Large HTTP Responses Without Buffering

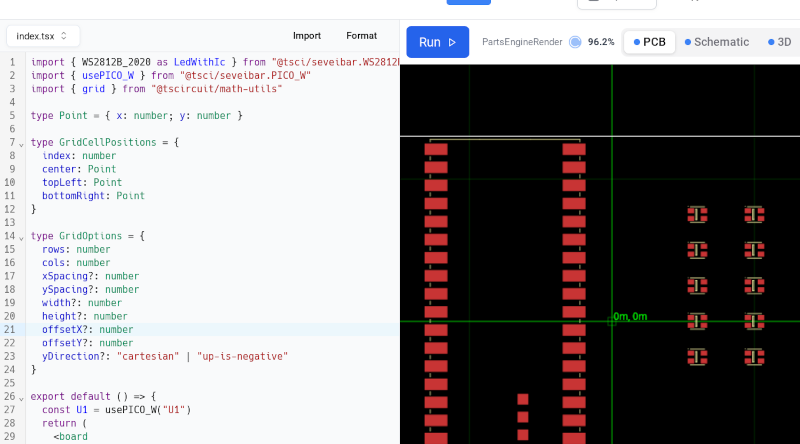


Most Node.js middleware assumes buffered bodies — great for JSON APIs, but terrible for performance when working with large files, proxied responses, or real-time content.

In this article, you’ll learn how to build streaming middleware in Node.js that operates on-the-fly, without ever buffering the full response in memory — ideal for:

- HTML injection without latency

- JSON rewriting in proxies

- Compression/encryption on the fly

- Streaming large logs/files through a filter

Step 1: Understand the Problem with Traditional Middleware

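Common middleware such as body-parser, and many response-rewriting middlewares in Express, assume the request or response body is fully buffered in memory before it can be processed. A typical buffered response rewrite looks like this: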

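    // Buffered rewrite: nothing reaches the client until the whole body has been collected
    app.use((req, res, next) => {
      const chunks = [];
      const originalEnd = res.end;

      // Swallow every write instead of sending it
      res.write = (chunk, encoding) => {
        chunks.push(Buffer.from(chunk, encoding));
        return true;
      };

      res.end = (chunk, encoding) => {
        if (chunk) chunks.push(Buffer.from(chunk, encoding));
        const body = Buffer.concat(chunks).toString();
        // modify body here (only possible once everything is in memory)
        res.setHeader('Content-Length', Buffer.byteLength(body));
        return originalEnd.call(res, body);
      };

      next();
    });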

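This doesn't scale: memory use grows with the size of the body, and the client receives nothing until the last byte has been produced. For large files or real-time proxies, we want to transform data mid-stream, while the body is still arriving.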
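For contrast, here is the streaming shape we are after, sketched with Node's built-in stream module and no HTTP at all: a Transform sees one chunk at a time and hands its output downstream immediately, so memory use depends on chunk size rather than body size. The `upperCase` transform, the `streamThrough` helper and the generated input are illustrative assumptions, not part of any middleware.

```javascript
const { Readable, Transform, Writable, pipeline } = require('stream');

// Illustrative transform: upper-cases each chunk as it passes through
// (the generated input below is ASCII, so per-chunk toString() is safe here)
const upperCase = () =>
  new Transform({
    transform(chunk, encoding, callback) {
      callback(null, chunk.toString().toUpperCase());
    }
  });

// Streams `count` generated lines through the transform and reports the total
// bytes seen plus the largest single chunk that was ever handled at once
function streamThrough(count, done) {
  let total = 0;
  let largestChunk = 0;
  const source = Readable.from(
    (function* () {
      for (let i = 0; i < count; i++) yield Buffer.from(`line ${i}: dog\n`);
    })()
  );
  const sink = new Writable({
    write(chunk, encoding, callback) {
      total += chunk.length;
      largestChunk = Math.max(largestChunk, chunk.length);
      callback(); // the chunk is dropped here; nothing accumulates
    }
  });
  pipeline(source, upperCase(), sink, (err) => done(err, { total, largestChunk }));
}

streamThrough(100000, (err, stats) => {
  if (err) throw err;
  console.log(stats); // total grows with the input; largestChunk stays at one line
});
```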

Step 2: Use on-headers to Hook Into the Streaming Response

We’ll write a middleware that intercepts the response before headers are sent, and replaces res.write and res.end with our own streaming pipeline.
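It helps to know how a "before headers are sent" hook can work at all. Node's ServerResponse calls `writeHead()` internally on the first `write()` or `end()`, even when the handler never calls it, so a wrapper around `res.writeHead` sees every response just before its headers go out. The sketch below illustrates that idea with only the http module; `onHeadersSketch` is a hypothetical name and this is not the on-headers package's actual source.

```javascript
const http = require('http');

// Hypothetical helper: run `listener` once, right before the response headers are sent
function onHeadersSketch(res, listener) {
  const originalWriteHead = res.writeHead;
  let fired = false;
  res.writeHead = function (...args) {
    if (!fired) {
      fired = true;
      listener.call(res); // headers are still mutable at this point
    }
    return originalWriteHead.apply(res, args);
  };
}

// Demo: a handler that never calls writeHead() itself still gets the header stamped
function demo() {
  return new Promise((resolve, reject) => {
    const server = http.createServer((req, res) => {
      onHeadersSketch(res, () => res.setHeader('X-Stamped', 'yes'));
      res.write('hello'); // the implicit writeHead() fires the listener here
      res.end();
    });
    server.listen(0, () => {
      http.get({ port: server.address().port, agent: false }, (res) => {
        res.resume();
        res.on('end', () => server.close(() => resolve(res.headers['x-stamped'])));
      }).on('error', reject);
    });
  });
}

demo().then((value) => console.log(value)); // prints "yes"
```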

Install on-headers:

npm install on-headers

Then create a middleware like this:

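    const onHeaders = require('on-headers');
    const { Transform } = require('stream');
    const { StringDecoder } = require('string_decoder');

    function streamingTransformMiddleware(rewriteFn) {
      return (req, res, next) => {
        const originalWrite = res.write;
        const originalEnd = res.end;
        // Decode incrementally so a multi-byte UTF-8 character split across chunks stays intact
        const decoder = new StringDecoder('utf8');

        const transformStream = new Transform({
          transform(chunk, encoding, callback) {
            callback(null, rewriteFn(decoder.write(chunk)));
          },
          flush(callback) {
            const rest = decoder.end();
            callback(null, rest ? rewriteFn(rest) : undefined);
          }
        });

        // The rewritten body can change length, so drop any precomputed Content-Length
        // (such as the one res.send() sets) just before headers are sent
        onHeaders(res, () => res.removeHeader('Content-Length'));

        // Forward transformed chunks to the real response, respecting backpressure
        transformStream.on('data', (chunk) => {
          if (!originalWrite.call(res, chunk)) {
            transformStream.pause();
            res.once('drain', () => transformStream.resume());
          }
        });
        transformStream.on('drain', () => res.emit('drain')); // lets a source piped into res resume
        transformStream.on('end', () => originalEnd.call(res));
        transformStream.on('error', (err) => res.destroy(err));

        // Patch immediately, not inside onHeaders: headers are flushed by the first
        // write, so patching there would let that first chunk bypass the transform
        res.write = (...args) => transformStream.write(...args);
        res.end = (...args) => {
          transformStream.end(...args);
          return res;
        };

        next();
      };
    }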

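This routes everything the route handler writes through a Transform stream that rewrites the body chunk by chunk, so only the chunk currently being processed is held in memory, never the full response.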
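The transform at the heart of such a middleware can be exercised without any HTTP. The sketch below uses a hypothetical `createRewriteStream` factory to show one detail worth getting right: response chunks are bytes, and calling `chunk.toString()` on each chunk independently corrupts a multi-byte UTF-8 character that straddles a chunk boundary. Node's built-in `string_decoder` holds the incomplete bytes back until the rest arrive.

```javascript
const { Readable, Transform } = require('stream');
const { StringDecoder } = require('string_decoder');

// Hypothetical factory: applies rewriteFn to decoded text, chunk by chunk
function createRewriteStream(rewriteFn) {
  const decoder = new StringDecoder('utf8');
  return new Transform({
    transform(chunk, encoding, callback) {
      // decoder.write() returns only complete characters and keeps trailing partial bytes
      callback(null, rewriteFn(decoder.write(chunk)));
    },
    flush(callback) {
      const rest = decoder.end(); // whatever bytes were still pending
      callback(null, rest ? rewriteFn(rest) : undefined);
    }
  });
}

// Runs a list of Buffers through a stream and collects the output as a string
async function collect(chunks, stream) {
  let out = '';
  for await (const piece of Readable.from(chunks).pipe(stream)) out += piece.toString();
  return out;
}

// "é" is two bytes (c3 a9) in UTF-8; split them across two chunks
const bytes = Buffer.from('café dog', 'utf8');
const chunks = [bytes.subarray(0, 4), bytes.subarray(4)];

collect(chunks, createRewriteStream((s) => s.replace(/dog/g, 'cat'))).then(console.log); // café cat
```

Decoding each chunk on its own would instead turn the first chunk into `caf` followed by the Unicode replacement character.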

Step 3: Use It in Your Express App

Let’s apply a simple example: rewrite every instance of “dog” to “cat” in streamed HTML:

    app.use(streamingTransformMiddleware((chunk) => {
      return chunk.replace(/dog/g, 'cat');
    }));

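Each chunk is now rewritten as it streams to the client, so memory use is bounded by the chunk size and the first bytes go out without waiting for the whole body. One caveat: the rewrite function sees one chunk at a time, so a match that straddles a chunk boundary (for example “do” at the end of one chunk and “g” at the start of the next) is not replaced.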
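Because a per-chunk rewrite only ever sees one chunk, a “dog” split across two chunks slips through untouched. One way around this for a fixed search string, sketched below with a hypothetical `createCarryOverReplacer`, is to hold back the last `search.length - 1` characters of each chunk and prepend them to the next. It handles plain substrings only, not arbitrary regular expressions.

```javascript
const { Readable, Transform } = require('stream');
const { StringDecoder } = require('string_decoder');

// Hypothetical helper: replaces every occurrence of a fixed string, even across chunk boundaries
function createCarryOverReplacer(search, replacement) {
  if (search.length === 0) throw new Error('search must be non-empty');
  const decoder = new StringDecoder('utf8');
  let carry = ''; // tail of the previous chunk that might be the start of a match

  return new Transform({
    transform(chunk, encoding, callback) {
      let text = carry + decoder.write(chunk);
      let out = '';
      let idx;
      // Replace all complete matches in the combined text
      while ((idx = text.indexOf(search)) !== -1) {
        out += text.slice(0, idx) + replacement;
        text = text.slice(idx + search.length);
      }
      // The rest has no full match, so a future match can only start in its last (search.length - 1) chars
      const keep = Math.min(text.length, search.length - 1);
      out += text.slice(0, text.length - keep);
      carry = text.slice(text.length - keep);
      callback(null, out);
    },
    flush(callback) {
      // End of stream: emit whatever was held back, with any final matches replaced
      const rest = carry + decoder.end();
      callback(null, rest.split(search).join(replacement));
    }
  });
}

// Runs a list of Buffers through a stream and collects the output as a string
async function collect(chunks, stream) {
  let out = '';
  for await (const piece of Readable.from(chunks).pipe(stream)) out += piece.toString();
  return out;
}

// "dog" is split across chunk boundaries twice
const chunks = ['the do', 'g and the d', 'og'].map((s) => Buffer.from(s));
collect(chunks, createCarryOverReplacer('dog', 'cat')).then(console.log); // the cat and the cat
```

Holding back a few characters delays them by one chunk, which is negligible for short search strings.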

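Proxied responses can be rewritten the same way: http-proxy pipes the upstream response into `res`, so its chunks pass through the patched `res.write()` and `res.end()`. Make sure the upstream body is not compressed (for example, by requesting `Accept-Encoding: identity`), because the rewrite function expects plain text.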
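As a dependency-free illustration of the proxy case, the sketch below forwards each request to an upstream server with Node's http module and pipes the upstream body through a rewrite Transform into the client response. It does not use http-proxy's API; `startRewritingProxy`, `rewriteStream`, the ephemeral ports and the choice to ask upstream for an uncompressed body are assumptions made for the example.

```javascript
const http = require('http');
const { Transform, pipeline } = require('stream');
const { StringDecoder } = require('string_decoder');

// Rewrites decoded text chunk by chunk (assumes matches do not straddle chunks)
function rewriteStream(rewriteFn) {
  const decoder = new StringDecoder('utf8');
  return new Transform({
    transform(chunk, encoding, callback) {
      callback(null, rewriteFn(decoder.write(chunk)));
    },
    flush(callback) {
      callback(null, rewriteFn(decoder.end()));
    }
  });
}

// Hypothetical proxy: forwards every request to upstreamPort and rewrites the body in flight
function startRewritingProxy(upstreamPort, rewriteFn) {
  return http.createServer((req, res) => {
    const headers = { ...req.headers, 'accept-encoding': 'identity' }; // ask for plain text
    const upstreamReq = http.request(
      { port: upstreamPort, path: req.url, method: req.method, headers, agent: false },
      (upstreamRes) => {
        const outHeaders = { ...upstreamRes.headers };
        // Length changes after rewriting; hop-by-hop headers are not forwarded
        delete outHeaders['content-length'];
        delete outHeaders['transfer-encoding'];
        delete outHeaders['connection'];
        res.writeHead(upstreamRes.statusCode, outHeaders);
        pipeline(upstreamRes, rewriteStream(rewriteFn), res, () => {});
      }
    );
    upstreamReq.on('error', () => {
      if (!res.headersSent) res.statusCode = 502;
      res.end();
    });
    req.pipe(upstreamReq);
  });
}

// Demo: an upstream that streams two chunks, each containing "dog"
function demo() {
  const upstream = http.createServer((req, res) => {
    res.write('<p>my dog</p>');
    res.end('<p>your dog</p>');
  });
  return new Promise((resolve, reject) => {
    upstream.listen(0, () => {
      const proxy = startRewritingProxy(upstream.address().port, (s) => s.replace(/dog/g, 'cat'));
      proxy.listen(0, () => {
        http.get({ port: proxy.address().port, agent: false }, (res) => {
          let body = '';
          res.setEncoding('utf8');
          res.on('data', (d) => (body += d));
          res.on('end', () => {
            proxy.close();
            upstream.close();
            resolve(body);
          });
        }).on('error', reject);
      });
    });
  });
}

demo().then(console.log); // <p>my cat</p><p>your cat</p>
```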

Step 4: Bonus – Add Compression in the Same Stream

Need gzip on top? Just add another transform layer using zlib:

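    const zlib = require('zlib');

    // Inside the middleware: create one gzip stream per response (never share one
    // between requests) and use these handlers instead of the plain 'data'/'end'
    // forwarding. Only do this when req.headers['accept-encoding'] includes gzip.
    const gzip = zlib.createGzip();

    onHeaders(res, () => {
      res.removeHeader('Content-Length');
      res.setHeader('Content-Encoding', 'gzip');
    });

    transformStream
      .pipe(gzip)
      .on('data', (chunk) => originalWrite.call(res, chunk))
      .on('end', () => originalEnd.call(res));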
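Compression should also be negotiated per request: a client that does not send `Accept-Encoding: gzip` must receive the plain body. Below is a minimal sketch using only Node's http and zlib modules. `sendMaybeGzipped` and `getBody` are hypothetical helpers, and the simple pattern check on Accept-Encoding ignores q-values, which a production implementation should parse properly.

```javascript
const http = require('http');
const zlib = require('zlib');
const { Readable, pipeline } = require('stream');

// Hypothetical helper: streams `source` to `res`, gzipped only if the client accepts it
function sendMaybeGzipped(req, res, source) {
  const acceptsGzip = /\bgzip\b/.test(req.headers['accept-encoding'] || ''); // ignores q-values
  res.setHeader('Vary', 'Accept-Encoding'); // caches must key on this request header
  res.setHeader('Content-Type', 'text/plain; charset=utf-8');
  if (acceptsGzip) {
    res.setHeader('Content-Encoding', 'gzip');
    pipeline(source, zlib.createGzip(), res, () => {}); // fresh gzip stream per response
  } else {
    pipeline(source, res, () => {});
  }
}

// Fetches the body, decoding it if the server sent gzip
function getBody(port, acceptEncoding) {
  return new Promise((resolve, reject) => {
    const headers = acceptEncoding ? { 'accept-encoding': acceptEncoding } : {};
    http.get({ port, headers, agent: false }, (res) => {
      const parts = [];
      res.on('data', (d) => parts.push(d));
      res.on('end', () => {
        const raw = Buffer.concat(parts);
        const gzipped = res.headers['content-encoding'] === 'gzip';
        resolve({ gzipped, text: (gzipped ? zlib.gunzipSync(raw) : raw).toString() });
      });
    }).on('error', reject);
  });
}

const server = http.createServer((req, res) => {
  sendMaybeGzipped(req, res, Readable.from(['streamed ', 'body']));
});

const results = new Promise((resolve) => server.listen(0, resolve))
  .then(() => Promise.all([getBody(server.address().port, 'gzip, deflate'), getBody(server.address().port, null)]))
  .finally(() => server.close());

results.then(console.log);
```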

Chaining streams this way allows stacked streaming transforms, such as:

- HTML injection

- Content rewriting

- Minification

- Compression

All in a single pass, fully streamed.
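Stacking amounts to a longer pipeline. The sketch below chains a text rewrite, a simple HTML injection and gzip with `stream.pipeline`, which also forwards errors and cleans up every stage if one fails. The `rewrite` and `injectBanner` stages, the banner text and the in-memory sink are illustrative assumptions; in a middleware the last stage would write to the real response instead.

```javascript
const zlib = require('zlib');
const { Readable, Transform, Writable, pipeline } = require('stream');

// Illustrative stage: rewrite text per chunk (assumes ASCII and no matches straddling chunks)
const rewrite = () =>
  new Transform({
    transform(chunk, encoding, callback) {
      callback(null, chunk.toString().replace(/dog/g, 'cat'));
    }
  });

// Illustrative stage: inject a snippet before the first byte of the body
const injectBanner = (banner) => {
  let sent = false;
  return new Transform({
    transform(chunk, encoding, callback) {
      if (!sent) {
        sent = true;
        this.push(banner);
      }
      callback(null, chunk);
    }
  });
};

// Runs the whole stack and collects the compressed output in memory (demo only)
function runStack(chunks, done) {
  const out = [];
  const sink = new Writable({
    write(chunk, encoding, callback) {
      out.push(chunk);
      callback();
    }
  });
  pipeline(
    Readable.from(chunks),
    rewrite(),
    injectBanner('<!-- rewritten -->'),
    zlib.createGzip(),
    sink,
    (err) => done(err, Buffer.concat(out))
  );
}

runStack(['<p>dog</p>', '<p>hot dog</p>'], (err, gz) => {
  if (err) throw err;
  console.log(zlib.gunzipSync(gz).toString()); // <!-- rewritten --><p>cat</p><p>hot cat</p>
});
```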

✅ Pros:
