Scheduling Transformations in Reactivity

From pure event graphs to safely mutating state

1. Introduction: The Real-World Punch

There’s a saying:

“Every plan is perfect until you get punched in the face.”

In software, that punch often comes the moment your pure logic has to interact with the real world.

In our last post, we built a clean, composable model for events — one based on transformations, ownership, and simplicity. But so far, everything has been pure. We transform values, we log them, we react — but we haven’t changed anything.

That changes now.

Let’s take this innocent-looking example:

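```js
import { createSignal } from "solid-js"

const [state, setState] = createSignal("hello")
const [onEvent, emitEvent] = createEvent()

onEvent(() => console.log(state()))
onEvent(() => setState("world"))
onEvent(() => console.log(state()))

emitEvent()
```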

What should this log?

- `"hello"` and then `"world"`?
- `"world"` twice?
- Or `"hello"` twice?

The answer depends entirely on execution order. And without clear rules, even small graphs like this become hard to reason about.

Push-based systems often run into this:

If you mutate state mid-propagation, every downstream listener sees a different world.

So now we ask: how do we bring consistency and predictability to a reactive event system — even when it mutates?

The answer lies in something we borrowed from signals: phased execution.

2. Mutation Hazards in Push-Based Systems

Push-based reactivity doesn’t have phases. It has flow.

As soon as you emit a value, every subscriber runs immediately — in the order they were defined. That’s fine when you’re just transforming data. But once you introduce mutations, the cracks start to show.

Take this setup:

```js
onEvent(() => console.log(state()))
onEvent(() => setState("world"))
onEvent(() => console.log(state()))
```

Depending on how these handlers were registered, and in what order they run, the logs might print:

- `"hello"`, then `"world"`, which seems logical
- or worse, `"world"` twice, because the state was changed mid-propagation

There’s no real “before and after” in this model. Everything is firing during the same tick, and nothing is scheduled — so side effects collide with each other, and reads become unpredictable.
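To make the hazard concrete, here is a minimal sketch of a naive push-based event in plain JavaScript. `createNaiveEvent` and the getter/setter pair standing in for a signal are hypothetical helpers written for this illustration, not Solid's or any library's API. Every handler runs synchronously in registration order, so the write lands between the two reads.

```javascript
// Hypothetical stand-in for a signal: a plain getter/setter pair, no tracking.
function createSignal(initial) {
  let value = initial
  return [() => value, (next) => { value = next }]
}

// Hypothetical naive push-based event: emit() calls every handler
// immediately, in registration order, with no phases and no queue.
function createNaiveEvent() {
  const handlers = []
  const on = (fn) => { handlers.push(fn) }
  const emit = (value) => {
    for (const fn of handlers) fn(value)
  }
  return [on, emit]
}

const logs = []
const [state, setState] = createSignal("hello")
const [onEvent, emitEvent] = createNaiveEvent()

onEvent(() => logs.push(state()))  // read before the write
onEvent(() => setState("world"))   // write in the middle of propagation
onEvent(() => logs.push(state()))  // read after the write

emitEvent()
console.log(logs) // [ 'hello', 'world' ]
```

Register the `setState` handler first and both reads see `"world"`: the output is decided by wiring order, not by intent.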

Push-based graphs become fragile when reads and writes happen in the same pass.

If we want predictable behavior — if we want to reason about event graphs like pure functions — we need to separate mutation from computation.

Just like signals did.

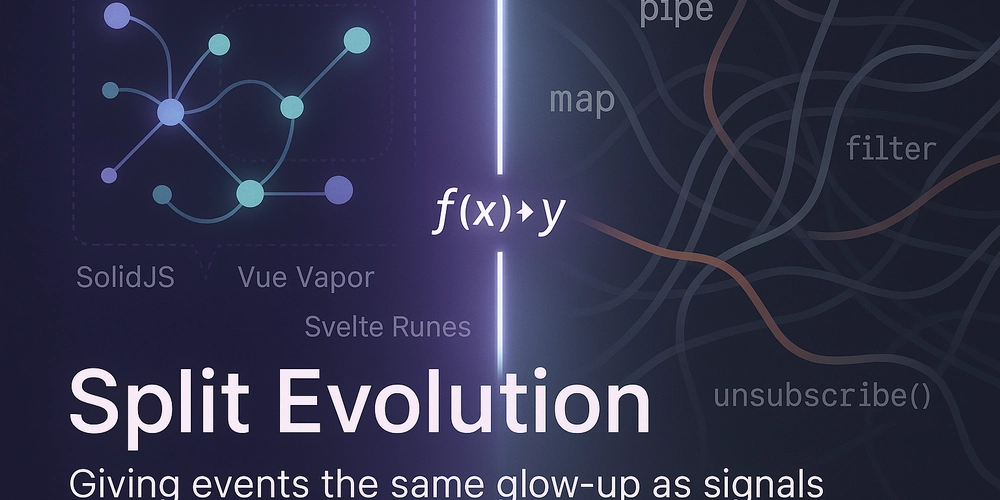

3. Lessons from Signals — Phased Execution

Signals hit the same wall events do: mixing computation and side effects creates unpredictable behavior.

Solid’s answer was phased execution.

In Solid 2.0, reactivity happens in layers, not all at once:

- Pure derivations (memos, derived signals)
  - These run first and synchronously during a synthetic "clock cycle".
  - No side effects. Just computation.
- Side effects (`createEffect`)
  - These are deferred until after the graph stabilizes.
  - Used to read from the DOM, notify external systems, or manage local resources.

“All changes to the DOM or outside world happen after all pure computations are complete.”

Even though some reactive work runs immediately, Solid 2.0 internally queues it and flushes the graph once it’s fully consistent. This guarantees that the entire reactive graph stabilizes in a single clock cycle, and only then do effects run.
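The sketch below models that ordering guarantee in isolation. It is not Solid's scheduler: `createSignal`, `createMemo`, `createEffect`, and `flush` here are hypothetical, heavily simplified stand-ins that rely on creation order instead of dependency tracking. Writes only mark the graph dirty; `flush()` first recomputes every pure derivation and only then runs effects.

```javascript
// Hypothetical, heavily simplified two-phase scheduler (not Solid's).
// Creation order works as a topological order here only because each memo
// reads values that were created before it.
const memos = []   // pure derivations, recomputed in creation order
const effects = [] // side effects, run only after every memo has settled
let dirty = false

function createSignal(initial) {
  let value = initial
  // A write updates the value and marks the graph dirty; nothing reruns yet.
  return [() => value, (next) => { value = next; dirty = true }]
}

function createMemo(fn) {
  let cached = fn()
  memos.push(() => { cached = fn() })
  return () => cached
}

// Unlike Solid's createEffect, this one does not run when it is created.
function createEffect(fn) {
  effects.push(fn)
}

function flush() {
  if (!dirty) return
  dirty = false
  for (const recompute of memos) recompute() // phase 1: pure computation
  for (const effect of effects) effect()     // phase 2: side effects
}

const log = []
const [first, setFirst] = createSignal("Ada")
const [last, setLast] = createSignal("Lovelace")
const fullName = createMemo(() => `${first()} ${last()}`)
createEffect(() => log.push(fullName()))

// Two writes, one flush: the effect never sees "Grace Lovelace".
setFirst("Grace")
setLast("Hopper")
const beforeFlush = fullName() // still "Ada Lovelace": memos have not rerun
flush()
console.log(beforeFlush, "->", log) // Ada Lovelace -> [ 'Grace Hopper' ]
```

Recomputing every memo on every flush is wasteful; a real scheduler tracks dependencies and reruns only what changed. The point is only that the effect never observes a half-updated value.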

It’s a small shift, but a powerful one:

Separate pure logic from side effects, and you get predictability.

That’s what we want for events, too.

4. Bringing Phases to Events

If signals needed phased execution to stay predictable, events need the same — maybe even more.

Here’s the structure we adopt for event execution:

Phase 1: Pure event graph

- All event handlers (transformers) run immediately.

- No state changes, no side effects — just pure mapping.

Phase 2: Mutations

- Instead of calling `setState()` directly inside handlers, you schedule it.
- You use `createMutation(handler, effectFn)`: the handler runs in phase 1, and `effectFn` is deferred to phase 2.

Phase 3+: Signals and UI

- After mutations, the rest of Solid’s reactive system proceeds as usual.

- Signals notify, DOM updates happen, effects fire — all downstream of a clean update.
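One way to sketch phases 1 and 2 in plain JavaScript follows (phase 3 is left to Solid and not modelled). `createEvent`, `createMutation`, and `flushMutations` are hypothetical minimal implementations written for this illustration, not the library's actual code. Handlers and transforms run synchronously in phase 1; `createMutation` only enqueues its effect, and the queue is drained once the outermost `emit` returns. Applied to the introduction's example, both reads see `"hello"`.

```javascript
// Hypothetical signal stand-in: a plain getter/setter pair, no tracking.
function createSignal(initial) {
  let value = initial
  return [() => value, (next) => { value = next }]
}

let queue = [] // phase 2 work, collected while the pure graph runs
let depth = 0  // number of emits currently on the call stack

function flushMutations() {
  const pending = queue
  queue = []
  for (const run of pending) run()
}

// Phase 1: emit() runs every handler synchronously. Each handler's return
// value is forwarded to a derived event, which is how transforms chain.
function createEvent() {
  const handlers = []
  const on = (fn) => {
    const [onDerived, emitDerived] = createEvent()
    handlers.push((value) => emitDerived(fn(value)))
    return onDerived
  }
  const emit = (value) => {
    depth++
    try {
      for (const handler of handlers) handler(value)
    } finally {
      depth--
      if (depth === 0) flushMutations() // phase 2 starts here
    }
  }
  return [on, emit]
}

// Phase 2: effectFn is queued during phase 1 and never run inline.
function createMutation(on, effectFn) {
  on((value) => { queue.push(() => effectFn(value)) })
}

// The introduction's example, with the write moved into a mutation.
const logs = []
const [state, setState] = createSignal("hello")
const [onEvent, emitEvent] = createEvent()

onEvent(() => logs.push(state()))
createMutation(onEvent, () => setState("world"))
onEvent(() => logs.push(state()))

emitEvent()
console.log(logs, state()) // [ 'hello', 'hello' ] world
```

Using a depth counter rather than a boolean means an emit nested inside a handler stays in phase 1 until the outermost emit finishes.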

This lets you build composable flows like this:

```js
const [count, setCount] = createSignal(0)
const [onClick, emitClick] = createEvent()

const onNext = onClick(() => count() + 1)
createMutation(onNext, setCount)
```

You now know:

- `onClick` runs
- `onNext` transforms the value
- `setCount(...)` is queued, not run immediately
- The UI updates after all pure logic completes
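That order can be checked with a trace. This sketch repeats the same hypothetical `createEvent`/`createMutation` scaffolding (illustrative only, not the library's code) and adds a pure handler registered after the mutation, which still reads the old `count()`.

```javascript
// Hypothetical scaffolding, as in the phase sketch: not the library's code.
function createSignal(initial) {
  let value = initial
  return [() => value, (next) => { value = next }]
}

let queue = [] // mutations waiting for phase 2
let depth = 0  // emits currently on the stack

function createEvent() {
  const handlers = []
  const on = (fn) => {
    const [onDerived, emitDerived] = createEvent()
    handlers.push((value) => emitDerived(fn(value)))
    return onDerived
  }
  const emit = (value) => {
    depth++
    try {
      for (const handler of handlers) handler(value)
    } finally {
      depth--
      if (depth === 0) {
        const pending = queue
        queue = []
        for (const run of pending) run() // phase 2
      }
    }
  }
  return [on, emit]
}

function createMutation(on, effectFn) {
  on((value) => { queue.push(() => effectFn(value)) })
}

const trace = []
const [count, setCount] = createSignal(0)
const [onClick, emitClick] = createEvent()

const onNext = onClick(() => {
  trace.push("onClick ran")
  return count() + 1
})
createMutation(onNext, (next) => {
  trace.push(`setCount(${next}) applied`)
  setCount(next)
})
// Registered after the mutation, yet it still sees the old value.
onNext((next) => trace.push(`pure: next=${next}, count()=${count()}`))

emitClick()
// trace order: onClick ran, then the pure handler (count() still 0), then setCount(1)
console.log(trace)
```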

5. Blocking Unsafe Patterns

For phased execution to work, we need clear boundaries — but we don’t want to over-restrict expressive patterns either.

State mutations (`setState`) must never happen in phase 1.

If setState() happens during the pure event phase, downstream handlers may see a changed world — and that breaks everything.

To enforce this, any setState() inside a pure event handler should either:

- Throw a dev-time error, or

- Be automatically scheduled as a mutation

```js
onClick(() => setCount(count() + 1)) // ❌ not allowed directly
```

Instead, use:

```js
createMutation(onClick, () => setCount(count() + 1))
```
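The two enforcement options listed above can be sketched with a hypothetical `guardSetter` wrapper and a hypothetical `runEvent` driver that marks phase 1; neither is part of Solid or the event library. In `"throw"` mode a write during phase 1 raises an error; in `"defer"` mode it is queued and applied after the pure handlers finish.

```javascript
let inPurePhase = false // true while phase 1 handlers are running
let queue = []          // writes deferred to phase 2

// Hypothetical guard: wraps a setter so a call during phase 1 either throws
// (mode "throw") or is deferred until the pure handlers finish (mode "defer").
function guardSetter(setter, mode) {
  return (next) => {
    if (!inPurePhase) return setter(next)
    if (mode === "throw") {
      throw new Error("setState during the pure event phase; use createMutation")
    }
    queue.push(() => setter(next))
  }
}

// Hypothetical driver: runs handlers as phase 1, then applies deferred writes.
function runEvent(handlers) {
  inPurePhase = true
  try {
    for (const handler of handlers) handler()
  } catch (err) {
    queue = [] // drop writes queued by an event that failed
    throw err
  } finally {
    inPurePhase = false
  }
  const deferred = queue
  queue = []
  for (const run of deferred) run()
}

let count = 0
const read = () => count
const write = (next) => { count = next }
const strictSet = guardSetter(write, "throw")
const lenientSet = guardSetter(write, "defer")

let error = null
try {
  runEvent([() => strictSet(read() + 1)])
} catch (err) {
  error = err.message
}

const seen = []
runEvent([
  () => lenientSet(read() + 1), // queued, not applied yet
  () => seen.push(read()),      // still observes 0
])
console.log(error !== null, seen, read()) // true [ 0 ] 1
```

A throwing guard points straight at the offending call site, while silent deferral keeps code working but hides where the write came from; which default suits a given codebase is a judgment call.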

What about emit()? Emitting from inside a handler is pure — it just expands the graph.

Still, it should be used thoughtfully. Overusing nested emits can make graphs harder to trace.

Mutation breaks the phase model.

Emission just grows the graph.
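Here is a sketch of why a nested emit stays pure, again with hypothetical depth-counting scaffolding (not the library's code): the inner `emitAudit` runs its handlers immediately as part of phase 1, while the mutation it triggers waits until the outer `emitSave` completes. `onSave` and `onAudit` are made-up event names.

```javascript
// Hypothetical scaffolding: not the library's code.
function createSignal(initial) {
  let value = initial
  return [() => value, (next) => { value = next }]
}

let queue = []
let depth = 0 // a nested emit raises this, so it cannot trigger a flush

function createEvent() {
  const handlers = []
  const on = (fn) => {
    const [onDerived, emitDerived] = createEvent()
    handlers.push((value) => emitDerived(fn(value)))
    return onDerived
  }
  const emit = (value) => {
    depth++
    try {
      for (const handler of handlers) handler(value)
    } finally {
      depth--
      if (depth === 0) {
        const pending = queue
        queue = []
        for (const run of pending) run()
      }
    }
  }
  return [on, emit]
}

function createMutation(on, effectFn) {
  on((value) => { queue.push(() => effectFn(value)) })
}

const trace = []
const [count, setCount] = createSignal(0)
const [onSave, emitSave] = createEvent()
const [onAudit, emitAudit] = createEvent()

createMutation(onAudit, () => {
  trace.push("mutation")
  setCount(count() + 1)
})
onAudit((msg) => trace.push(`audit: ${msg}`))

onSave(() => {
  trace.push("save")
  emitAudit("saved") // nested emit: grows the graph, still phase 1
  trace.push(`after nested emit, count()=${count()}`)
})

emitSave()
// trace order: save, audit: saved, after nested emit (count() still 0), mutation
console.log(trace)
```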

6. createMutation in Practice

This is your gateway to phase 2 — safe, scheduled mutation.

```js
const onNext = onClick(() => count() + 1)
createMutation(onNext, setCount)
```

Want to perform multiple updates?

```js
createMutation(onSubmit, () => {
  setLoading(true)
  setStatus("submitted")
})
```

You can also inject logging, analytics, or cleanups — all scoped, all deferred.
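A sketch of several writes scoped to one mutation, plus a second mutation for analytics, using the same hypothetical scaffolding. In this sketch mutations run in registration order during phase 2, and a pure handler registered afterwards still observes the pre-mutation state. The `{ id: 42 }` payload and the `analytics` array are made up for the example.

```javascript
// Hypothetical scaffolding: not the library's code.
function createSignal(initial) {
  let value = initial
  return [() => value, (next) => { value = next }]
}

let queue = []
let depth = 0

function createEvent() {
  const handlers = []
  const on = (fn) => {
    const [onDerived, emitDerived] = createEvent()
    handlers.push((value) => emitDerived(fn(value)))
    return onDerived
  }
  const emit = (value) => {
    depth++
    try {
      for (const handler of handlers) handler(value)
    } finally {
      depth--
      if (depth === 0) {
        const pending = queue
        queue = []
        for (const run of pending) run() // mutations run in queue order
      }
    }
  }
  return [on, emit]
}

function createMutation(on, effectFn) {
  on((value) => { queue.push(() => effectFn(value)) })
}

const [loading, setLoading] = createSignal(false)
const [status, setStatus] = createSignal("idle")
const analytics = []
const [onSubmit, emitSubmit] = createEvent()

// Several writes in one mutation: all deferred to phase 2 together.
createMutation(onSubmit, () => {
  setLoading(true)
  setStatus("submitted")
})
// A second mutation for analytics, queued after the first.
createMutation(onSubmit, (form) => analytics.push(`submit:${form.id}`))

// A pure handler registered last still observes the pre-mutation state.
const seen = []
onSubmit(() => seen.push([loading(), status()]))

emitSubmit({ id: 42 })
console.log(seen, loading(), status(), analytics)
// [ [ false, 'idle' ] ] true submitted [ 'submit:42' ]
```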

7. Sync Parity with Signals

Solid 2.0 uses auto-batching — effects run only after the graph stabilizes.

To match this behavior, we flush all queued mutations automatically after every emit().

```js
emitIncrement() // queues the mutation
// mutation is flushed right after emit completes
```
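Flushing after every `emit()` matters as soon as the next emit reads state. In this sketch (the same hypothetical scaffolding as before, not the library's code), the second `emitIncrement()` computes from the value the first one committed, so the count reaches 2 rather than stalling at 1.

```javascript
// Hypothetical scaffolding: not the library's code.
function createSignal(initial) {
  let value = initial
  return [() => value, (next) => { value = next }]
}

let queue = []
let depth = 0

function createEvent() {
  const handlers = []
  const on = (fn) => {
    const [onDerived, emitDerived] = createEvent()
    handlers.push((value) => emitDerived(fn(value)))
    return onDerived
  }
  const emit = (value) => {
    depth++
    try {
      for (const handler of handlers) handler(value)
    } finally {
      depth--
      // Auto-flush: the outermost emit drains the queue before returning.
      if (depth === 0) {
        const pending = queue
        queue = []
        for (const run of pending) run()
      }
    }
  }
  return [on, emit]
}

function createMutation(on, effectFn) {
  on((value) => { queue.push(() => effectFn(value)) })
}

const [count, setCount] = createSignal(0)
const [onIncrement, emitIncrement] = createEvent()
const onNext = onIncrement(() => count() + 1)
createMutation(onNext, setCount)

emitIncrement()
const afterFirst = count()  // already 1: the queue flushed as emit returned
emitIncrement()
const afterSecond = count() // 2: the second emit read the committed value
console.log(afterFirst, afterSecond) // 1 2
```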

Need to group multiple emits?

```js
batchEvents(() => {
  emitAdd()
  emitSubtract()
})
```

The queue flushes after the batch — keeping mutation and UI updates consistent with signal behavior.

| Mechanism | Signals (2.0) | Events |
|---|---|---|
| Default | Auto-batched | Auto-flushed |
| Manual control | `flushSync()` | `batchEvents()` |
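A sketch of `batchEvents` under the same assumptions: a hypothetical implementation that holds the depth counter open for the duration of the callback, so nothing flushes until the batch ends. `emitAdd`/`emitSubtract` take a made-up numeric payload here, and the `flushes` counter exists only to show that the queue drains once. A pure handler inside the batch still reads the pre-batch value, which is why these mutations read `count()` at flush time; if the new value were computed in phase 1 instead, both emits would start from 0 and the last write would win.

```javascript
// Hypothetical scaffolding: not the library's code.
function createSignal(initial) {
  let value = initial
  return [() => value, (next) => { value = next }]
}

let queue = []
let depth = 0
let flushes = 0 // counts how many times the queue actually drained

function enter() { depth++ }
function exit() {
  depth--
  if (depth > 0 || queue.length === 0) return
  flushes++
  const pending = queue
  queue = []
  for (const run of pending) run()
}

function createEvent() {
  const handlers = []
  const on = (fn) => {
    const [onDerived, emitDerived] = createEvent()
    handlers.push((value) => emitDerived(fn(value)))
    return onDerived
  }
  const emit = (value) => {
    enter()
    try {
      for (const handler of handlers) handler(value)
    } finally {
      exit()
    }
  }
  return [on, emit]
}

function createMutation(on, effectFn) {
  on((value) => { queue.push(() => effectFn(value)) })
}

// Hypothetical batchEvents: keeps depth above zero until fn returns.
function batchEvents(fn) {
  enter()
  try {
    fn()
  } finally {
    exit()
  }
}

const [count, setCount] = createSignal(0)
const [onAdd, emitAdd] = createEvent()
const [onSubtract, emitSubtract] = createEvent()
createMutation(onAdd, (n) => setCount(count() + n))
createMutation(onSubtract, (n) => setCount(count() - n))

const seenInBatch = []
onSubtract(() => seenInBatch.push(count()))

batchEvents(() => {
  emitAdd(5)
  emitSubtract(2)
})
console.log(count(), flushes, seenInBatch) // 3 1 [ 0 ]
```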

8. Final Example — Predictable Flow

```js
const [onIncrement, emitIncrement] = createEvent()
const [count, setCount] = createSignal(0)

const onNext = onIncrement(() => count() + 1)
createMutation(onNext, setCount)
```

- `emitIncrement()` fires.
- `onIncrement` computes `count() + 1`.
- `setCount(...)` is queued.
- The mutation is flushed after the event cycle.

Want logging?

```js
const onLog = onNext((next) => {
  console.log("Next count:", next)
  return next
})

createMutation(onLog, setCount)
```
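The logging variant can be run end to end with the same hypothetical scaffolding (not the library's code). `onLog` is a pass-through transform, so logging happens in phase 1 and `setCount` receives exactly the value that was logged. Here the mutation is wired only through `onLog`, so `setCount` runs once per emit.

```javascript
// Hypothetical scaffolding: not the library's code.
function createSignal(initial) {
  let value = initial
  return [() => value, (next) => { value = next }]
}

let queue = []
let depth = 0

function createEvent() {
  const handlers = []
  const on = (fn) => {
    const [onDerived, emitDerived] = createEvent()
    handlers.push((value) => emitDerived(fn(value)))
    return onDerived
  }
  const emit = (value) => {
    depth++
    try {
      for (const handler of handlers) handler(value)
    } finally {
      depth--
      if (depth === 0) {
        const pending = queue
        queue = []
        for (const run of pending) run()
      }
    }
  }
  return [on, emit]
}

function createMutation(on, effectFn) {
  on((value) => { queue.push(() => effectFn(value)) })
}

const [count, setCount] = createSignal(0)
const [onIncrement, emitIncrement] = createEvent()
const logs = []

const onNext = onIncrement(() => count() + 1)
// Pure, pass-through transform: records the value in phase 1 and forwards it.
const onLog = onNext((next) => {
  logs.push(`Next count: ${next}`)
  return next
})
createMutation(onLog, setCount)

emitIncrement()
emitIncrement()
console.log(logs, count()) // [ 'Next count: 1', 'Next count: 2' ] 2
```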

Want to batch updates?

```js
batchEvents(() => {
  emitSave()
  emitClear()
})
```

Pure. Predictable. Phased.

Next time: async, errors, retries, and full integration with suspense.
