Running DeepSeek-R1 with Ollama using Only Docker: A Complete Guide

Introduction

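DeepSeek-R1 is an open-source reasoning LLM (Large Language Model) that you can run locally with Ollama inside Docker. The deepseek-r1:8b tag used throughout this guide is one of the smaller distilled variants, not the full 671B-parameter model, which is what makes it practical on a laptop. This guide walks you through setting up DeepSeek-R1 on an ordinary laptop with nothing but Docker. If you have an NVIDIA GPU, an optional section covers GPU acceleration.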

By the end of this guide, you will be able to:

- Run Ollama in Docker with just a normal laptop.

- Pull and run DeepSeek-R1 using only CPU (no need for GPU).

- Enable GPU acceleration if your system has an NVIDIA GPU.

- Run the entire setup with a single command for ease of execution.

- Optionally use a Web UI instead of the CLI.

Prerequisites (CPU Execution - Recommended for Most Users)

This guide is structured to prioritize CPU usage, so that a typical laptop with Docker installed can run a distilled DeepSeek-R1 model. Expect noticeably slower responses on a CPU than on a GPU.

- Only Docker is required (Install Docker).

- No special hardware is needed; a typical laptop will work.

- 16GB+ RAM recommended (for smooth performance).

Step 1: Pull and Run Ollama in Docker (CPU Only)

Ollama provides a convenient runtime for models like DeepSeek-R1. We will first run Ollama inside a Docker container.

docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama

This will:

- Start Ollama in a Docker container.

- Expose it on port 11434.

- Persist downloaded models using a named volume (ollama:/root/.ollama).

To verify the container is running:

docker ps
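docker ps only shows that the container is up; the Ollama server inside it may need a moment before it accepts requests on port 11434. Below is a minimal sketch of a polling helper, assuming curl is installed on the host and that Ollama's `/api/version` endpoint answers once the server is ready. The function name `wait_for_ollama` and the default of 30 attempts are arbitrary choices for illustration.

```shell
# Hypothetical helper: poll the Ollama HTTP API until it answers or give up.
# Assumes curl is installed on the host and Ollama is published on port 11434.
wait_for_ollama() {
  local url="${1:-http://localhost:11434}"
  local attempts="${2:-30}"
  local i=0
  while [ "$i" -lt "$attempts" ]; do
    # -s silences progress output; -f makes curl return non-zero on HTTP error responses
    if curl -sf "$url/api/version" >/dev/null; then
      echo "Ollama is ready at $url"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "Ollama did not respond at $url after $attempts attempts" >&2
  return 1
}
```

For example, `wait_for_ollama && docker exec -it ollama ollama pull deepseek-r1:8b` only starts the download once the API responds.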

Step 2: Pull and Run DeepSeek-R1 Model (CPU Only)

Now that Ollama is running, we can pull and execute DeepSeek-R1.

Pull DeepSeek-R1 Model

docker exec -it ollama ollama pull deepseek-r1:8b

Run DeepSeek-R1 (CPU Mode)

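docker exec -it ollama ollama run deepseek-r1:8b

There is no explicit CPU mode: the container was started without --gpus, so it has no GPU access and Ollama runs the model on the CPU. If the model has not been pulled yet, ollama run downloads it first.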
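Because Step 1 publishes port 11434, the same model can also be called over Ollama's HTTP API instead of the interactive ollama run prompt. A minimal sketch, assuming curl on the host; the helper names `ollama_payload` and `ollama_generate` and the `OLLAMA_URL` variable are hypothetical, and the payload builder does no JSON escaping, so the prompt must not contain double quotes, backslashes, or newlines.

```shell
# Hypothetical helper: build a JSON request body for Ollama's /api/generate endpoint.
# No JSON escaping is done: keep the prompt free of ", \ and newlines.
ollama_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}' "$1" "$2"
}

# Hypothetical helper: send one non-streaming generation request to Ollama.
# OLLAMA_URL is an illustrative override; it defaults to the port published in Step 1.
# With "stream":false the server replies with a single JSON object.
ollama_generate() {
  curl -s "${OLLAMA_URL:-http://localhost:11434}/api/generate" \
    -d "$(ollama_payload "$1" "$2")"
}
```

For example, `ollama_generate deepseek-r1:8b "Why is the sky blue?"` prints the JSON reply, whose response field holds the generated text. On a CPU-only setup the call can take a while to return.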

Step 3: Running Everything in One Command (CPU Only)

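docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama && \
docker exec -it ollama ollama pull deepseek-r1:8b && \
docker exec -it ollama ollama run deepseek-r1:8b

This assumes no container named ollama exists yet; if you already completed Step 1, run only the last two commands.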

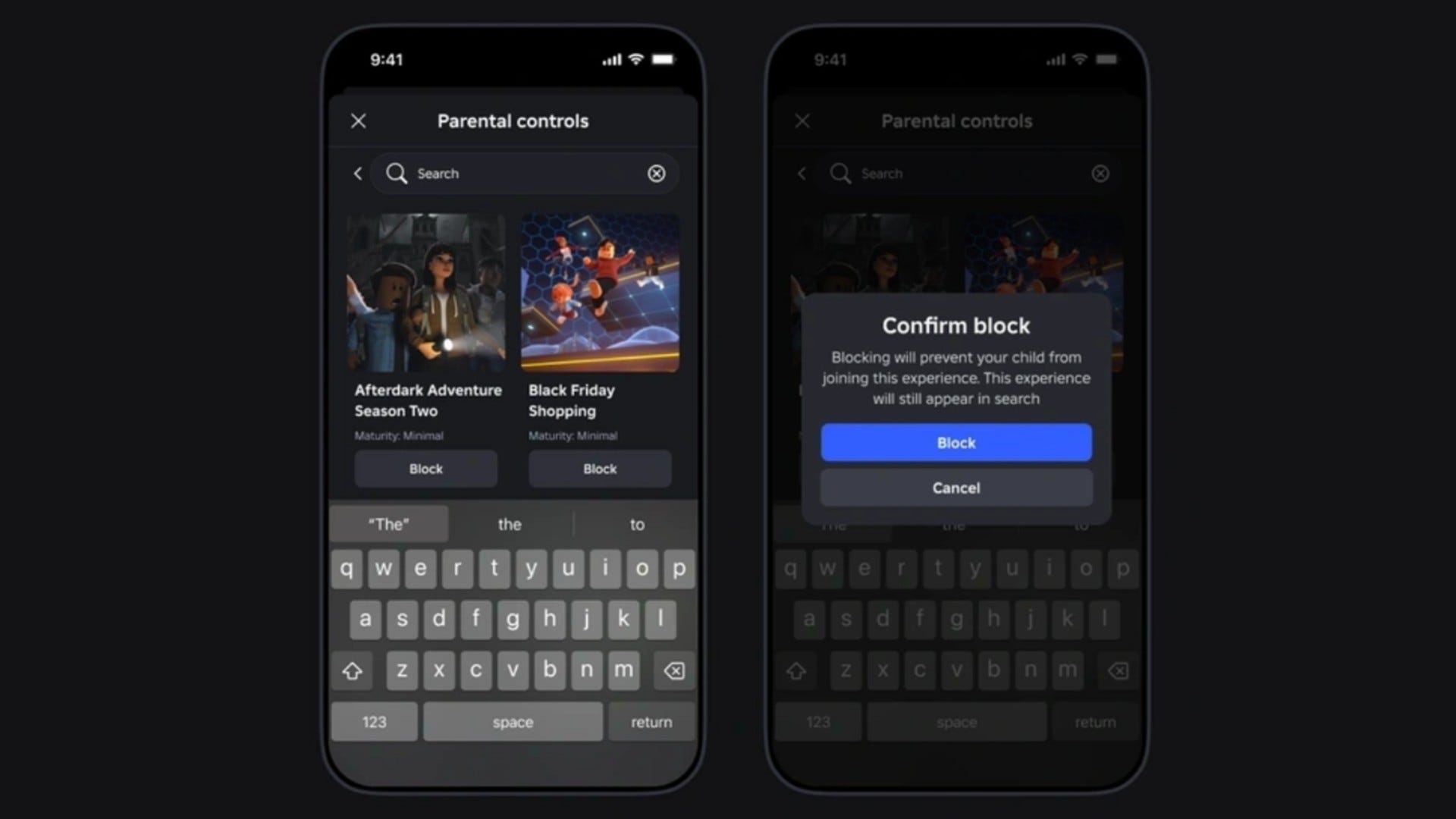
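Chaining docker run -d directly into docker exec can race the server start-up: the container may already be running before Ollama accepts connections. Below is a sketch of a more defensive one-command version, assuming a POSIX shell. The function name `start_deepseek_cpu` and the 30-second limit are illustrative choices. It reuses an existing ollama container when there is one and uses `ollama list` inside the container as the readiness probe, since that command needs a live server.

```shell
# Hypothetical helper: start Ollama (CPU only), wait for it, then pull and run the model.
start_deepseek_cpu() {
  local model="${1:-deepseek-r1:8b}"
  local tries=0
  # Reuse an existing container named "ollama"; create it only if starting fails.
  if ! docker start ollama >/dev/null 2>&1; then
    docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama \
      || return 1
  fi
  # Wait up to ~30 s: "ollama list" only succeeds once the server is listening.
  until docker exec ollama ollama list >/dev/null 2>&1; do
    tries=$((tries + 1))
    if [ "$tries" -ge 30 ]; then
      echo "Ollama did not become ready" >&2
      return 1
    fi
    sleep 1
  done
  docker exec ollama ollama pull "$model" && docker exec -it ollama ollama run "$model"
}
```

Calling `start_deepseek_cpu` with no argument uses deepseek-r1:8b; pass another tag to try a different model size.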

Optional: Running DeepSeek-R1 with Web UI

If you prefer a graphical interface instead of using the command line, you can set up a Web UI for Ollama and DeepSeek-R1.

Step 1: Run Open WebUI with Ollama

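docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -e OLLAMA_BASE_URL=http://host.docker.internal:11434 -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

OLLAMA_BASE_URL tells Open WebUI where the Ollama API lives (OLLAMA_API_BASE_URL is an older, deprecated name). The --add-host flag makes host.docker.internal resolve on Linux, where Docker does not define it by default; Docker Desktop on macOS and Windows already provides it.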

This will:

- Start Open WebUI, which provides a web-based chat interface for DeepSeek-R1.

- Expose it on http://localhost:3000.

- Connect it to the running Ollama container.

Now, open your browser and navigate to http://localhost:3000 to chat with DeepSeek-R1 using an easy-to-use UI.

Optional: Running DeepSeek-R1 with GPU Acceleration

If you have an NVIDIA GPU, you can enable GPU acceleration for improved performance.

Prerequisites (GPU Execution)

- NVIDIA GPU (with CUDA support).

- NVIDIA Drivers installed (Check GPU Compatibility).

- Docker with NVIDIA Container Toolkit installed.
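Before starting Ollama with --gpus=all, it can help to confirm that Docker can see the GPU at all. The NVIDIA Container Toolkit documentation uses a throwaway container running nvidia-smi as its sample workload; the sketch below wraps a similar check, assuming the ubuntu image can be pulled. The function name `check_docker_gpu` is hypothetical.

```shell
# Hypothetical helper: verify that Docker can expose the NVIDIA GPU to containers.
# Assumes the NVIDIA driver and NVIDIA Container Toolkit are installed on the host.
check_docker_gpu() {
  # --rm deletes the test container afterwards; nvidia-smi fails if no GPU is visible
  if docker run --rm --gpus all ubuntu nvidia-smi >/dev/null 2>&1; then
    echo "GPU is visible to Docker containers"
  else
    echo "Docker cannot access the GPU; check the driver and NVIDIA Container Toolkit" >&2
    return 1
  fi
}
```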

Step 1: Run Ollama in Docker (With GPU Support)

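If the CPU-only container from the earlier steps still exists, remove it first with docker rm -f ollama; the downloaded models stay in the ollama volume.

docker run -d --gpus=all -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama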

Step 2: Run DeepSeek-R1 with GPU

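docker exec -it ollama ollama run deepseek-r1:8b

Ollama has no --gpu flag. It detects the GPU automatically when the container was started with --gpus=all and offloads as many model layers to it as fit in GPU memory.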

Step 3: Running Everything in One Command (GPU Enabled)

docker run -d --gpus=all -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama && \
docker exec -it ollama ollama pull deepseek-r1:8b && \
docker exec -it ollama ollama run deepseek-r1:8b

This assumes no container named ollama exists yet (see Step 5 to remove one).

Step 4: Verify GPU Utilization

To confirm that DeepSeek-R1 is using your GPU, run the NVIDIA System Management Interface (nvidia-smi) inside the container:

docker exec -it ollama nvidia-smi

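While the model is loaded, the GPU's Memory-Usage value should rise noticeably. The Processes table may stay empty when nvidia-smi runs inside a container, because of PID namespace isolation; running nvidia-smi on the host lists the processes.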
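Ollama's own ollama ps command also reports, per loaded model, how the model is split between CPU and GPU in its PROCESSOR column. Below is a sketch that extracts that value, assuming the model was loaded recently (for example by the ollama run step) and that the column uses forms like `100% GPU`, `100% CPU`, or `48%/52% CPU/GPU`. The exact output layout can differ between Ollama versions, and the helper name `model_processor` is hypothetical.

```shell
# Hypothetical helper: print the PROCESSOR value that "ollama ps" reports for a model.
# Assumes values such as "100% GPU", "100% CPU" or "48%/52% CPU/GPU".
model_processor() {
  docker exec ollama ollama ps \
    | grep -F "$1" \
    | grep -Eo '[0-9]+%(/[0-9]+%)? (CPU/GPU|GPU|CPU)' \
    | head -n 1
}
```

Running `model_processor deepseek-r1:8b` should print a GPU share when acceleration is active; a value of 100% CPU means the container cannot use the GPU.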

Step 5: Stop and Remove Ollama Docker Container

If you ever need to stop and remove the container, use:

docker stop ollama && docker rm ollama

This will:

- Stop the running Ollama container.

- Remove it from the system (but the model files will persist in the Docker volume).
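Step 5 removes only the Ollama container. If you also started Open WebUI, or want to reclaim the disk space used by downloaded models, a broader cleanup is possible. Below is a sketch in which the `--purge` option of the hypothetical `cleanup_deepseek` function additionally deletes the ollama and open-webui named volumes; this permanently removes downloaded models and Web UI data.

```shell
# Hypothetical helper: remove the containers from this guide, optionally with their volumes.
#   cleanup_deepseek           -> remove containers, keep volumes (models survive)
#   cleanup_deepseek --purge   -> also delete the "ollama" and "open-webui" volumes
cleanup_deepseek() {
  # "docker rm -f" stops and removes in one step; errors for missing containers are ignored.
  docker rm -f ollama open-webui >/dev/null 2>&1
  if [ "${1:-}" = "--purge" ]; then
    docker volume rm ollama open-webui
  fi
}
```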

Conclusion

In this guide, we covered how to:

✅ Set up Ollama in Docker.

✅ Pull and run DeepSeek-R1 using just a normal laptop (CPU only).

✅ Enable GPU acceleration if needed.

✅ Use a Web UI for a better experience.

✅ Execute everything in a single command.

✅ Verify GPU utilization (if applicable).

By following these steps, you can easily deploy DeepSeek-R1 in a Dockerized environment with minimal setup.
