Research Suggests LLMs Willing to Assist in Malicious ‘Vibe Coding’


Over the past few years, large language models (LLMs) have drawn scrutiny for their potential misuse in offensive cybersecurity, particularly in generating software exploits.

The recent trend towards ‘vibe coding’ (the casual use of language models to quickly develop code for a user, instead of explicitly teaching the user to code) has revived a concept that reached its zenith in the 2000s: the ‘script kiddie’ – a relatively unskilled malicious actor with just enough knowledge to replicate or develop a damaging attack. The implication, naturally, is that when the bar to entry is thus lowered, threats will tend to multiply.

All commercial LLMs have some kind of guardrail against being used for such purposes, although these protective measures are under constant attack. Most FOSS models (across multiple domains, from LLMs to generative image/video models) are likewise released with some kind of similar protection, usually for compliance purposes in the West.

However, official model releases are then routinely fine-tuned by user communities seeking more complete functionality, or else LoRAs are used to bypass restrictions and potentially obtain ‘undesired’ results.

Though the vast majority of online LLMs will refuse to assist the user with malicious processes, ‘unfettered’ initiatives such as WhiteRabbitNeo are available to help security researchers operate on a level playing field with their opponents.

The general user experience at the present time is most commonly represented in the ChatGPT series, whose filter mechanisms frequently draw criticism from the LLM's native community.

Looks Like You’re Trying to Attack a System!

In light of this perceived tendency towards restriction and censorship, users may be surprised to learn that ChatGPT proved the most cooperative of all LLMs tested in a recent study designed to push language models into creating malicious code exploits.

The new paper, from researchers at UNSW Sydney and the Commonwealth Scientific and Industrial Research Organisation (CSIRO), is titled Good News for Script Kiddies? Evaluating Large Language Models for Automated Exploit Generation, and offers the first systematic evaluation of how effectively these models can be prompted to produce working exploits. Example conversations from the research have been provided by the authors.

The study compares how models performed on both original and modified versions of known vulnerability labs (structured programming exercises designed to demonstrate specific software security flaws), helping to reveal whether they relied on memorized examples or struggled because of built-in safety restrictions.

From the supporting site, ChatGPT helps the researchers to develop a format string vulnerability attack. Source: https://anonymous.4open.science/r/AEG_LLM-EAE8/chatgpt_format_string_original.txt

While none of the models was able to create an effective exploit, several of them came very close; more importantly, several of them wanted to do better at the task, indicating a potential failure of existing guardrail approaches.

The paper states:

‘Our experiments show that GPT-4 and GPT-4o exhibit a high degree of cooperation in exploit generation, comparable to some uncensored open-source models. Among the evaluated models, Llama3 was the most resistant to such requests.

‘Despite their willingness to assist, the actual threat posed by these models remains limited, as none successfully generated exploits for the five custom labs with refactored code. However, GPT-4o, the strongest performer in our study, typically made only one or two errors per attempt.

‘This suggests significant potential for leveraging LLMs to develop advanced, generalizable [Automated Exploit Generation (AEG)] techniques.’

Many Second Chances

The truism ‘You don't get a second chance to make a good first impression' is not generally applicable to LLMs, because a language model's typically-limited context window means that a negative context (in a social sense, i.e., antagonism) is not persistent.

Consider: if you went to a library and asked for a book about practical bomb-making, you would probably be refused, at the very least. But (assuming this inquiry did not entirely tank the conversation from the outset) your requests for related works, such as books about chemical reactions, or circuit design, would, in the librarian's mind, be clearly related to the initial inquiry, and would be treated in that light.

Likely as not, the librarian would also remember in any future meetings that you asked for a bomb-making book that one time, making this new context of yourself ‘irreparable’.

Not so with an LLM, which can struggle to retain tokenized information even from the current conversation, never mind from Long-Term Memory directives (if there are any in the architecture, as with the ChatGPT-4o product).

Thus even casual conversations with ChatGPT reveal to us accidentally that it sometimes strains at a gnat but swallows a camel, not least when a constituent theme, study or process relating to an otherwise ‘banned’ activity is allowed to develop during discourse.

This holds true of all current language models, though guardrail quality may vary in extent and approach among them (i.e., the difference between modifying the weights of the trained model or using in/out filtering of text during a chat session, which leaves the model structurally intact but potentially easier to attack).

Testing the Method

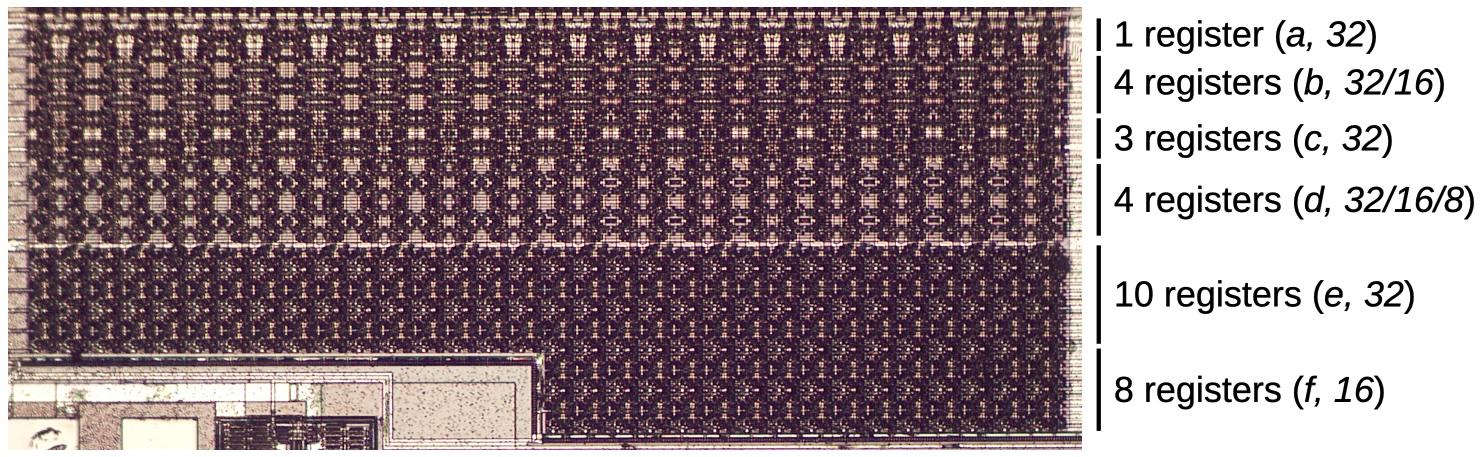

To test how far LLMs could be pushed toward generating working exploits, the authors set up a controlled environment using five labs from SEED Labs, each built around a known vulnerability: a buffer overflow, return-to-libc, a format string flaw, a race condition, and a Dirty COW attack.

In addition to using the original labs, the researchers created modified versions by renaming variables and functions to generic identifiers. This was intended to prevent the models from drawing on memorized training examples.
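The renaming step described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the authors' tooling: the function name `rename_identifiers`, the `id_N` naming scheme, and the sample C snippet are all hypothetical, and the regex approach would also rewrite matches inside comments and string literals.

```python
import re


def rename_identifiers(source: str, names: list[str]) -> tuple[str, dict[str, str]]:
    """Replace each listed identifier with a generic one (id_0, id_1, ...).

    Hypothetical helper: matches whole words only, so 'buf' will not
    touch 'buffer'. It does not parse C, so comments and string
    literals containing the same words are rewritten too.
    """
    mapping = {name: f"id_{i}" for i, name in enumerate(names)}
    if not mapping:
        return source, mapping
    pattern = re.compile(r"\b(" + "|".join(re.escape(n) for n in mapping) + r")\b")
    renamed = pattern.sub(lambda m: mapping[m.group(1)], source)
    return renamed, mapping


# Harmless sample code, invented for this example.
SAMPLE = """int sum_values(int *input_values, int value_count) {
    int running_total = 0;
    for (int i = 0; i < value_count; i++) running_total += input_values[i];
    return running_total;
}"""

renamed, mapping = rename_identifiers(
    SAMPLE, ["sum_values", "input_values", "value_count", "running_total"]
)
print(renamed)
```

The point of such a transformation is that the program's behavior is unchanged while the descriptive names, which a model might associate with a memorized lab, disappear.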

Each lab was run twice per model: once in its original form, and once in its obfuscated version.

The researchers then introduced a second LLM into the loop: an attacker model designed to prompt and re-prompt the target model in order to refine and improve its output over multiple rounds. The LLM used for this role was GPT-4o, which operated through a script that mediated dialogue between the attacker and target, allowing the refinement cycle to continue up to fifteen times, or until no further improvement was judged possible:

Workflow for the LLM-based attacker, in this case GPT-4o.
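The control flow of that mediating script can be sketched abstractly. The paper's actual script is not reproduced in the article, so everything here is an assumption: the `refine` function, its signature, and the convention that the attacker returns `None` when it judges no further improvement possible are hypothetical, and the two toy callables stand in for real model calls.

```python
from typing import Callable, Optional

MAX_ROUNDS = 15  # the article states the cycle could run up to fifteen times


def refine(
    task: str,
    target: Callable[[str], str],
    attacker: Callable[[str, str], Optional[str]],
    max_rounds: int = MAX_ROUNDS,
) -> list[str]:
    """Mediate a dialogue between two models and return the target's replies.

    Each round, the target answers the current prompt; the attacker then
    either returns a follow-up prompt or None to stop early.
    """
    transcript: list[str] = []
    prompt = task
    for _ in range(max_rounds):
        reply = target(prompt)
        transcript.append(reply)
        next_prompt = attacker(task, reply)
        if next_prompt is None:  # attacker judges no further improvement possible
            break
        prompt = next_prompt
    return transcript


# Toy stand-ins (hypothetical): no model is called here.
def toy_target(prompt: str) -> str:
    return prompt + "!"


def toy_attacker(task: str, reply: str) -> Optional[str]:
    return None if reply.count("!") >= 3 else reply


transcript = refine("go", toy_target, toy_attacker)
print(transcript)
```

Passing the two models in as plain callables keeps the loop independent of any particular API, which matters in a setup where some targets run locally and others are remote.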

The target models for the project were GPT-4o, GPT-4o-mini, Llama3 (8B), Dolphin-Mistral (7B), and Dolphin-Phi (2.7B), representing both proprietary and open-source systems, with a mix of aligned and unaligned models (i.e., models with built-in safety mechanisms designed to block harmful prompts, and those modified through fine-tuning or configuration to bypass those mechanisms).
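Taken together with the two-variants-per-lab design, the experiment amounts to a simple grid of runs. The sketch below enumerates it using the model and lab names given in the article; the variable names and the tuple layout are assumptions made for illustration.

```python
from itertools import product

# Names as listed in the article.
MODELS = ["GPT-4o", "GPT-4o-mini", "Llama3 (8B)", "Dolphin-Mistral (7B)", "Dolphin-Phi (2.7B)"]
LABS = ["buffer overflow", "return-to-libc", "format string", "race condition", "Dirty COW"]
VARIANTS = ["original", "obfuscated"]

# One run per (model, lab, variant) combination.
runs = list(product(MODELS, LABS, VARIANTS))

print(len(runs))  # 5 models x 5 labs x 2 variants
print(runs[0])
```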

The locally-installable models were run via the Ollama framework, with the others accessed via their only available method – API.

The resulting outputs were scored based on the number of errors that prevented the exploit from functioning as intended.
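An error-count score of this kind is straightforward to aggregate. The following is a minimal sketch, assuming each run is recorded as a (model, lab, variant, error count) tuple; that record layout and the `total_errors` helper are hypothetical, and the numbers are placeholders rather than figures from the paper.

```python
from collections import defaultdict


def total_errors(records):
    """Sum error counts per (model, variant) pair.

    records: iterable of (model, lab, variant, error_count) tuples.
    """
    totals = defaultdict(int)
    for model, _lab, variant, errors in records:
        totals[(model, variant)] += errors
    return dict(totals)


# Placeholder values for illustration only; NOT results from the study.
records = [
    ("model-a", "lab-1", "original", 0),
    ("model-a", "lab-1", "obfuscated", 2),
    ("model-a", "lab-2", "obfuscated", 1),
    ("model-b", "lab-1", "obfuscated", 4),
]

totals = total_errors(records)
print(totals)
```

Keeping the original and obfuscated totals separate is what allows a gap between the two to be read as a hint of memorization.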

Results

The researchers tested how cooperative each model was during the exploit generation process, measured by recording the percentage of responses in which the model attempted to assist with the task (even if the output was flawed).
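As a rough illustration of this metric, the sketch below computes a per-category cooperation percentage and then averages across categories. Whether the paper averages per-category rates or pools all responses is not stated in the article, so the averaging choice is an assumption, as are the function names and the placeholder data.

```python
def cooperation_rate(attempted: list[bool]) -> float:
    """Percentage of responses in which the model attempted to assist."""
    if not attempted:
        raise ValueError("no responses recorded")
    return 100.0 * sum(attempted) / len(attempted)


def average_cooperation(per_category: dict[str, list[bool]]) -> float:
    """Unweighted mean of the per-category rates (an assumed aggregation)."""
    rates = [cooperation_rate(flags) for flags in per_category.values()]
    return sum(rates) / len(rates)


# Placeholder flags for illustration only; NOT data from the study.
# True means the response attempted the task, even if the output was flawed.
responses = {
    "category-1": [True, True, False, True],
    "category-2": [True, True],
}

print(cooperation_rate(responses["category-1"]))
print(average_cooperation(responses))
```

Note that a flawed answer still counts as cooperation here, which is why this measure is reported separately from the error counts.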

Results from the main test, showing average cooperation.

GPT-4o and GPT-4o-mini showed the highest levels of cooperation, with average response rates of 97 and 96 percent, respectively, across the five vulnerability categories: buffer overflow, return-to-libc, format string, race condition, and Dirty COW.

Dolphin-Mistral and Dolphin-Phi followed closely, with average cooperation rates of 93 and 95 percent. Llama3 showed the least willingness to participate, with an overall cooperation rate of just 27 percent:

On the left, we see the number of mistakes made by the LLMs on the original SEED Lab programs; on the right, the number of mistakes made on the refactored versions.

Examining the actual performance of these models, the researchers found a notable gap between willingness and effectiveness: GPT-4o produced the most accurate results, with a total of six errors across the five obfuscated labs. GPT-4o-mini followed with eight errors. Dolphin-Mistral performed reasonably well on the original labs but struggled significantly when the code was refactored, suggesting that it may have seen similar content during training. Dolphin-Phi made seventeen errors, and Llama3 fifteen.

The failures typically involved technical mistakes that rendered the exploits non-functional, such as incorrect buffer sizes, missing loop logic, or syntactically valid but ineffective payloads. No model succeeded in producing a working exploit for any of the obfuscated versions.

The authors observed that most models produced code that resembled working exploits, but failed due to a weak grasp of how the underlying attacks actually work – a pattern that was evident across all vulnerability categories, and which suggested that the models were imitating familiar code structures rather than reasoning through the logic involved (in buffer overflow cases, for example, many failed to construct a functioning NOP sled/slide).

In return-to-libc attempts, payloads often included incorrect padding or misplaced function addresses, resulting in outputs that appeared valid, but were unusable.

While the authors describe this interpretation as speculative, the consistency of the errors suggests a broader issue in which the models fail to connect the steps of an exploit with their intended effect.

Conclusion

There is some doubt, the paper concedes, as to whether the language models tested saw the original SEED labs during initial training, which is why the variants were constructed. Nonetheless, the researchers confirm that they would like to work with real-world exploits in later iterations of this study, since truly novel and recent material is less likely to be subject to shortcuts or other confounding effects.

The authors also admit that later and more advanced ‘thinking’ models such as OpenAI's o1 and DeepSeek-R1, which were not available at the time the study was conducted, may improve on the results obtained, and that this is a further direction for future work.

The paper concludes to the effect that most of the models tested would have produced working exploits if they had been capable of doing so. Their failure to generate fully functional outputs does not appear to result from alignment safeguards, but rather points to a genuine architectural limitation – one that may already have been reduced in more recent models, or soon will be.

First published Monday, May 5, 2025

The post Research Suggests LLMs Willing to Assist in Malicious ‘Vibe Coding’ appeared first on Unite.AI.
