Qwen3: The Next Evolution in AI Language Models


May 3, 2025 · 8 min read

Qwen3 has officially launched, bringing an impressive new suite of language models that push the boundaries of AI capabilities. The newly released Qwen3 family represents a significant leap forward in both thinking capacity and response speed, offering unprecedented flexibility for developers and users alike.

The New Qwen3 Lineup

The Qwen team has unveiled an extensive collection of models to suit various needs and computational resources:

MoE (Mixture of Experts) Models:

- Qwen3-235B-A22B: The flagship model, with 235 billion total parameters, of which 22 billion are activated per token

- Qwen3-30B-A3B: A smaller but powerful MoE model with 30 billion total parameters, of which 3 billion are activated per token

Dense Models:

- Qwen3-32B

- Qwen3-14B

- Qwen3-8B

- Qwen3-4B

- Qwen3-1.7B

- Qwen3-0.6B

All eight models are released as open weights under the Apache 2.0 license, making them available for both commercial and research use.
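To see why the "total vs. activated" distinction matters, here is a back-of-the-envelope sketch. It assumes BF16 weights (2 bytes per parameter) and the common rule of thumb of roughly 2 FLOPs per active parameter per generated token; it ignores the KV cache, activations, and runtime overhead, so the numbers are rough estimates, not measured figures.

```python
# Rough rule-of-thumb estimates (assumptions, not official figures):
# - weight memory ~= total parameters x bytes per parameter (2 bytes for BF16)
# - forward-pass compute ~= 2 FLOPs per *active* parameter per token
# KV cache, activations, and framework overhead are ignored.

MODELS = {
    # name: (total params in billions, active params in billions)
    "Qwen3-235B-A22B": (235, 22),
    "Qwen3-30B-A3B": (30, 3),
    "Qwen3-32B": (32, 32),  # dense model: every parameter is active
}

def weight_memory_gb(total_b: float, bytes_per_param: int = 2) -> float:
    """Approximate memory needed to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return total_b * bytes_per_param

def gflops_per_token(active_b: float) -> float:
    """Approximate forward-pass compute per generated token, in GFLOPs."""
    return 2 * active_b

for name, (total_b, active_b) in MODELS.items():
    print(f"{name}: ~{weight_memory_gb(total_b):.0f} GB of BF16 weights, "
          f"~{gflops_per_token(active_b):.0f} GFLOPs/token, "
          f"{active_b / total_b:.0%} of parameters active")
```

The takeaway: an MoE model must keep all of its experts in memory, but each token only pays for the active ones. Under these assumptions, Qwen3-30B-A3B needs about as much weight memory as the dense Qwen3-32B while using roughly a tenth of the compute per token.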

Revolutionary Dual-Mode Thinking

What sets Qwen3 apart is its innovative hybrid thinking capability:

- Thinking Mode: When tackling complex problems, Qwen3 can engage in detailed step-by-step reasoning before providing an answer

- Non-Thinking Mode: For simpler queries, the model answers quickly, skipping the computational overhead of extended reasoning

This hybrid design lets users decide how much reasoning the model performs for each task, trading answer depth against response speed and compute cost. Because the mode is chosen per request or per conversation turn, simple queries do not have to pay for reasoning they do not need.
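In thinking mode, Qwen3 emits its reasoning inside <think>...</think> tags before the final answer. The helper below is a minimal sketch for separating the two parts of a decoded completion; the name split_thinking is hypothetical (not part of any Qwen library), and it assumes the answer follows the last closing </think> tag.

```python
def split_thinking(output: str) -> tuple[str, str]:
    """Split a decoded Qwen3 completion into (reasoning, final_answer).

    Assumes the reasoning is wrapped in <think>...</think> and precedes the answer.
    If no closing tag is present (e.g. non-thinking mode), the whole output is
    returned as the answer and the reasoning is empty.
    """
    marker = "</think>"
    idx = output.rfind(marker)  # the answer starts after the last closing tag
    if idx == -1:
        return "", output.strip()
    reasoning = output[:idx].replace("<think>", "", 1).strip()
    answer = output[idx + len(marker):].strip()
    return reasoning, answer


reasoning, answer = split_thinking("<think>\nStep 1: 2+2=4\n</think>\n\nThe answer is 4.")
print(reasoning)
print(answer)
```

Returning an empty reasoning string rather than raising keeps the same code path usable for both modes.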

Global Language Support

Qwen3 dramatically expands its linguistic capabilities with support for 119 languages and dialects across multiple language families:

- Indo-European (English, French, German, Russian, etc.)

- Sino-Tibetan (Chinese, Burmese)

- Afro-Asiatic (Arabic varieties, Hebrew)

- Austronesian (Indonesian, Malay, Tagalog)

- And many more language families

This extensive language support makes Qwen3 truly global in its application potential.

Technical Advancements

The performance gains in Qwen3 come from several key improvements:

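- Expanded Training Data: Approximately 36 trillion tokens, roughly double the 18 trillion used for Qwen2.5

- Three-Stage Pre-training: An initial stage of 30+ trillion tokens at a 4K context length, followed by additional training on knowledge-intensive data (STEM, coding, and reasoning), and finally long-context training that extends the context length to 32K tokens (the larger models support contexts of up to 128K tokens)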

- Four-Stage Post-Training: Long chain-of-thought cold start, reasoning-based reinforcement learning, thinking mode fusion, and general RL

According to the Qwen team's benchmarks, these advancements allow smaller Qwen3 models to match or even exceed larger Qwen2.5 models. For example, Qwen3-4B rivals Qwen2.5-72B-Instruct on many tasks.

Deployment Options

Developers can access Qwen3 models through multiple platforms:

- Hugging Face

- ModelScope

- Kaggle

For deployment, the team recommends:

- SGLang and vLLM for API endpoints

- Ollama, LMStudio, MLX, llama.cpp, and KTransformers for local usage
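Both vLLM and SGLang can serve a model behind an OpenAI-compatible HTTP API. The sketch below talks to such an endpoint using only the Python standard library. The server address, the default model name, and the server's support for per-request chat_template_kwargs are assumptions to check against the version you run; the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: a local vLLM or SGLang server exposes an OpenAI-compatible API here
# (port 8000 is vLLM's default; adjust for your deployment).
BASE_URL = "http://localhost:8000/v1"

def build_chat_request(prompt: str, enable_thinking: bool = True,
                       model: str = "Qwen/Qwen3-30B-A3B") -> dict:
    """Build a /chat/completions payload for a Qwen3 model."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Forwarded to the chat template; assumes the server accepts
        # per-request chat_template_kwargs.
        "chat_template_kwargs": {"enable_thinking": enable_thinking},
    }

def send_chat_request(payload: dict, base_url: str = BASE_URL) -> str:
    """POST the payload and return the assistant's reply text."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a server running, send_chat_request(build_chat_request("Summarize mixture-of-experts in one sentence.", enable_thinking=False)) would request a quick, non-thinking answer. Keeping payload construction separate from the network call makes the request easy to inspect or reuse with other HTTP clients.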

Development Examples

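Using Qwen3 is straightforward with standard interfaces. For example, to generate a response in thinking mode:

```python
from modelscope import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen3-30B-A3B"

# Load the tokenizer and model; device_map="auto" spreads weights across available devices
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)

messages = [{"role": "user", "content": "Give me a short introduction to large language models."}]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
    enable_thinking=True  # Enable thinking mode (set to False for non-thinking mode)
)

# Tokenize the prompt, generate, and decode only the newly generated tokens
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)
generated_ids = model.generate(**model_inputs, max_new_tokens=32768)
output_ids = generated_ids[0][len(model_inputs.input_ids[0]):]
print(tokenizer.decode(output_ids, skip_special_tokens=True))
```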

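Qwen3 also supports switching modes from turn to turn: when enable_thinking=True, adding /think or /no_think to a user prompt or system message tells the model whether to reason on that turn, and in multi-turn conversations the model follows the most recent directive.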
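A minimal sketch of this soft switch: the hypothetical helper below appends /think or /no_think to the latest user message without mutating the caller's list. The resulting messages would then be rendered with apply_chat_template(..., enable_thinking=True), since the directives only take effect in that setting.

```python
def with_mode_directive(messages: list[dict], think: bool) -> list[dict]:
    """Return a copy of messages with /think or /no_think appended to the latest user turn."""
    directive = "/think" if think else "/no_think"
    updated = [dict(m) for m in messages]  # shallow-copy each message dict
    for m in reversed(updated):
        if m["role"] == "user":
            m["content"] = f"{m['content']} {directive}"
            break
    else:
        # Loop finished without finding a user message to tag
        raise ValueError("no user message to tag")
    return updated


history = [{"role": "user", "content": "How many r's are in 'strawberry'?"}]
print(with_mode_directive(history, think=False))
```

Tagging only the latest user turn mirrors the documented behavior that the most recent directive wins.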

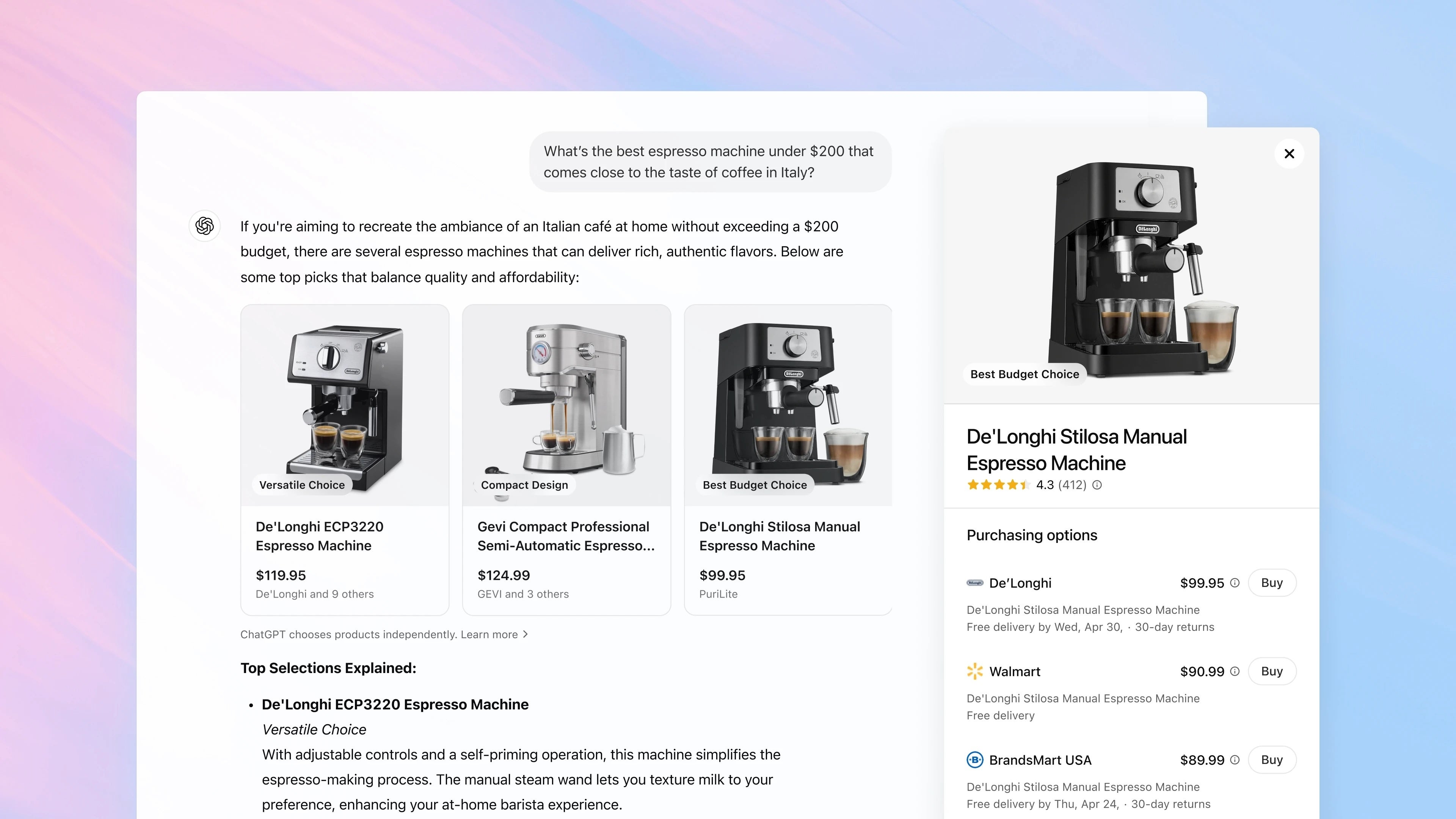

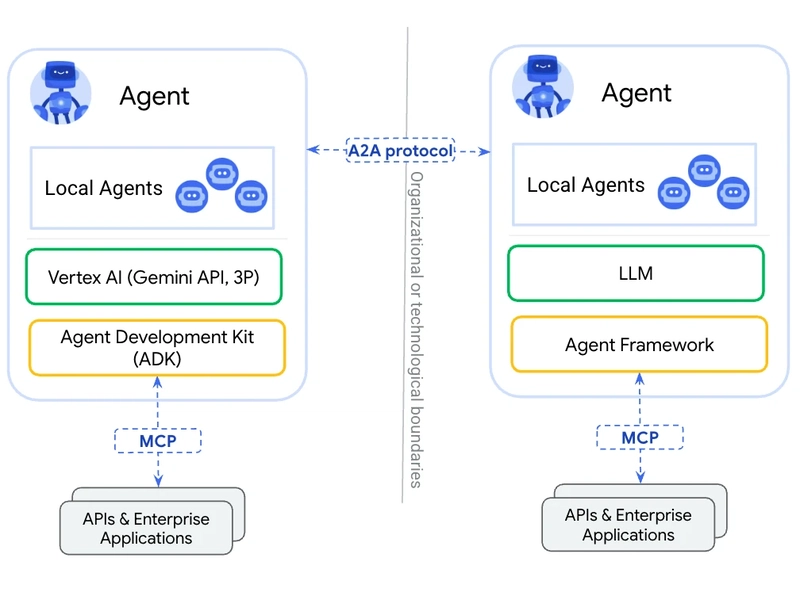

Agentic Capabilities

Qwen3 excels at tool calling, and the Qwen team recommends the Qwen-Agent framework, which wraps the tool-calling templates and parsers so that external tools and APIs are simple to integrate. This enables complex workflows in which the model calls external systems to solve problems.
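Qwen-Agent handles the parsing for you, but the underlying loop is easy to sketch. The example below is a hand-rolled illustration, not Qwen-Agent's API: it assumes the model emits each tool call as JSON wrapped in <tool_call>...</tool_call> tags (the Hermes-style format used by Qwen chat templates), and the add tool and run_tool_calls function are hypothetical.

```python
import json
import re

# Assumption: tool calls look like <tool_call>{"name": ..., "arguments": {...}}</tool_call>
TOOL_CALL_RE = re.compile(r"<tool_call>\s*(\{.*?\})\s*</tool_call>", re.DOTALL)

def add(a: float, b: float) -> float:
    """A hypothetical example tool."""
    return a + b

TOOLS = {"add": add}  # registry mapping tool names to Python callables

def run_tool_calls(model_output: str) -> list[dict]:
    """Execute every tool call found in the model output and collect the results."""
    results = []
    for raw in TOOL_CALL_RE.findall(model_output):
        call = json.loads(raw)
        args = call.get("arguments", {})
        if isinstance(args, str):  # some models emit arguments as a JSON string
            args = json.loads(args)
        result = TOOLS[call["name"]](**args)
        results.append({"name": call["name"], "result": result})
    return results


output = '<tool_call>\n{"name": "add", "arguments": {"a": 2, "b": 3}}\n</tool_call>'
print(run_tool_calls(output))
```

In a full agent loop, each result would be appended to the conversation as a tool message and the model called again until it produces a final answer.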

Looking Ahead

The Qwen3 release marks a significant step toward more advanced AI systems. The team continues to work on scaling up model size, extending context length, broadening modality support, and advancing reinforcement learning techniques.

As AI transitions from model-centric to agent-centric paradigms, Qwen3 positions itself at the forefront of this evolution.

Ready to experience Qwen3 for yourself? The open weights are available on Hugging Face, ModelScope, and Kaggle. Whether you're a researcher, developer, or business user, Qwen3 offers the performance and flexibility to transform how you interact with AI.
