Node.js Memory Apocalypse: Why Your App Dies on Big Files (And How to Stop It Forever)

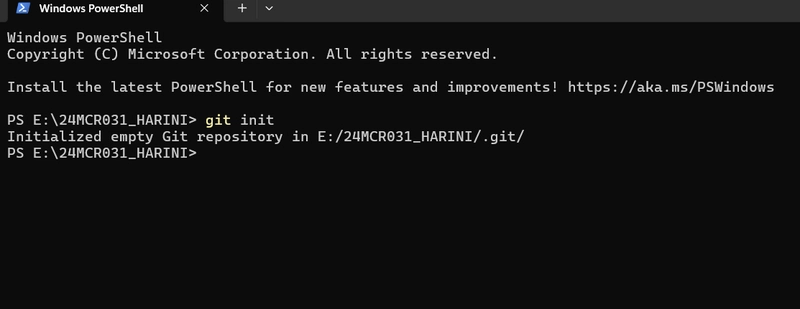

Your Node.js script works perfectly with test data. Then you feed it a real 10GB log file. Suddenly: crash. No warnings, just ENOMEM or a "JavaScript heap out of memory" abort. Here's why even seasoned developers make this mistake, and how to avoid it for good.

The Root of All Evil: fs.readFile

fs.readFile is the equivalent of emptying a dump truck into your living room. It loads every single byte into RAM before you can touch it. Observe:

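    const fs = require('fs');

    // Processing a 3GB database dump? Enjoy 3GB+ of RAM usage,
    // if Node's buffer and string size limits let the read happen at all
    fs.readFile('./mega-database.sql', 'utf8', (err, data) => {
      if (err) throw err; // surface read failures instead of parsing undefined
      parseSQL(data); // Hope you have 3GB to spare
    });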

The same pattern shows up everywhere:

- CLI tools crash while processing large CSVs

- Data pipelines implode on video files

- Background services die silently at 3AM

This isn’t “bad code”; it’s simply how fs.readFile operates: the whole file has to fit in memory before your callback runs. And it’s why your production system fails catastrophically.
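To make the "every single byte" point concrete, here is a minimal sketch that writes a small throwaway file and compares its size on disk with the size of the Buffer that comes back. The temp file name and the 4 MiB size are arbitrary choices for the demo, and it uses fs.readFileSync for brevity on the assumption that it buffers the file the same way fs.readFile does.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical demo file: 4 MiB of filler bytes in the OS temp directory.
const demoPath = path.join(os.tmpdir(), `readfile-demo-${process.pid}.bin`);
fs.writeFileSync(demoPath, Buffer.alloc(4 * 1024 * 1024, 'x'));

const fileSize = fs.statSync(demoPath).size;

// The read returns a single Buffer that holds the entire file.
const data = fs.readFileSync(demoPath);

console.log(`file on disk:     ${fileSize} bytes`);
console.log(`buffer in memory: ${data.length} bytes`);

// Clean up the throwaway file.
fs.unlinkSync(demoPath);
```

The two numbers match, so the same call on a multi-gigabyte file would need a buffer of that size before any of your own code runs.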

Streams: The Memory Ninja Technique

Streams process data like a conveyor belt: small chunks enter, get processed, and are then released for garbage collection. No RAM explosions:

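    const fs = require('fs');

    // Process 100GB file with ~50MB memory
    const stream = fs.createReadStream('./giant-dataset.csv');

    stream.on('data', (chunk) => {
      analyzeChunk(chunk); // Work with 64KB pieces by default (tunable via highWaterMark)
    });

    stream.on('end', () => {
      console.log('Processed entire file without going nuclear');
    });

    stream.on('error', (err) => {
      console.error('Read failed:', err.message); // e.g. missing file or permissions
    });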
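If the per-chunk work is asynchronous, one option is to consume the stream with `for await`, which pulls the next chunk only after the loop body has finished. The sketch below is an illustration under that assumption rather than the article's own method; `scanFile` is a hypothetical helper that counts bytes and newline characters as a stand-in for real analysis.

```javascript
const fs = require('fs');

// Hypothetical helper: walks a file chunk by chunk and tallies bytes and lines.
async function scanFile(filePath) {
  let bytes = 0;
  let lines = 0;

  // Readable streams are async iterables; each iteration yields one Buffer chunk.
  for await (const chunk of fs.createReadStream(filePath)) {
    bytes += chunk.length;
    for (const byte of chunk) {
      if (byte === 10) lines += 1; // 10 is the byte value of '\n'
    }
  }

  return { bytes, lines };
}
```

A call such as `scanFile('./giant-dataset.csv').then(console.log)` would report the totals while only one chunk is being worked on at a time. Read errors surface as a rejected promise, so a `.catch` handler covers the role of the 'error' listener.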

Real-World Massacre: File Processing

The Suicide Approach (Common Mistake)

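    // Data import script that crashes on big files
    function importUsers() {
      fs.readFile('./users.json', (err, data) => {
        if (err) throw err;
        // The whole file is buffered and parsed before the first insert runs
        JSON.parse(data).forEach((user) => insertIntoDatabase(user));
      });
    }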
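A streaming counterpart to this import could look like the sketch below. It rests on an assumption the original script does not make: the users are stored as newline-delimited JSON (one object per line), because JSON.parse cannot work incrementally on one large array. The name `importUsersStreaming` is hypothetical, and `insertIntoDatabase` is passed in as a parameter that may be synchronous or return a promise.

```javascript
const fs = require('fs');
const readline = require('readline');

// Assumption: the input file holds one JSON object per line (NDJSON).
async function importUsersStreaming(filePath, insertIntoDatabase) {
  const lines = readline.createInterface({
    input: fs.createReadStream(filePath),
    crlfDelay: Infinity, // treat \r\n as a single line break
  });

  let imported = 0;
  for await (const line of lines) {
    if (line.trim() === '') continue; // skip blank lines
    await insertIntoDatabase(JSON.parse(line)); // parse and insert one record at a time
    imported += 1;
  }
  return imported;
}
```

It might be called as `importUsersStreaming('./users.ndjson', insertIntoDatabase)`, where the file name is again only an example. readline may buffer a few lines ahead of the loop, but memory use is tied to line length rather than file size. For a file that really is a single JSON array, a dedicated streaming JSON parser would be needed instead.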
