Meta's smart glasses will soon provide detailed information regarding visual stimuli

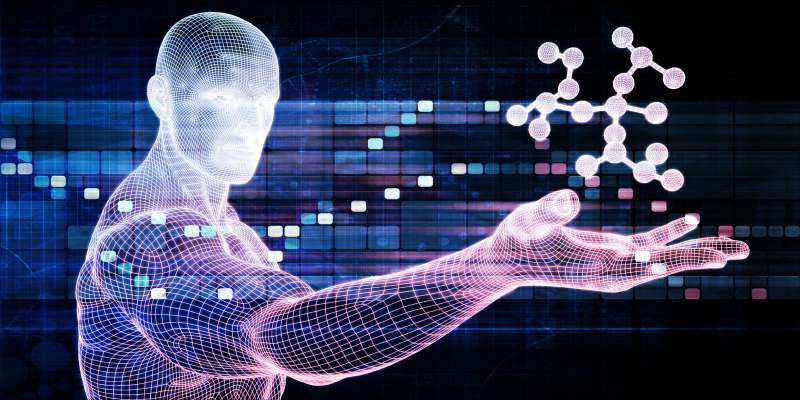


The Ray-Ban Meta glasses are getting an upgrade to better help the blind and low vision community. The AI assistant will now provide "detailed responses" regarding what's in front of users. Meta says it'll kick in "when people ask about their environment." To get started, users just have to opt in via the Device Settings section in the Meta AI app.

The company shared a video of the tool in action in which a blind user asked Meta AI to describe a grassy area in a park. The assistant quickly hopped into action and correctly pointed out a path, trees and a body of water in the distance. It was also shown describing the contents of a kitchen.

I could see this being a fun add-on even for those without any visual impairment. In any event, it begins rolling out to all users in the US and Canada in the coming weeks. Meta plans on expanding to additional markets in the near future.

It's Global Accessibility Awareness Day (GAAD), so that's not the only accessibility-minded tool that Meta announced today. There's the nifty Call a Volunteer, a tool that automatically connects blind or low vision people to a "network of sighted volunteers in real-time" to help complete everyday tasks. The volunteers come from the Be My Eyes foundation and the platform launches later this month in 18 countries.

The company recently announced a more refined system for live captions in all of its extended reality products, like the Quest line of VR headsets. This converts spoken words into text in real time, so users can "read content as it's being delivered." The feature is already available for Quest headsets and within Meta Horizon Worlds.

This article originally appeared on Engadget at https://www.engadget.com/ai/metas-smart-glasses-will-soon-provide-detailed-information-regarding-visual-stimuli-153046605.html?src=rss
