How Walled Gardens in Public Safety Are Exposing America’s Data Privacy Crisis


The Expanding Frontier of AI and the Data It Demands

Artificial intelligence is rapidly changing how we live, work and govern. In public health and public services, AI tools promise more efficiency and faster decision-making. But beneath the surface of this transformation is a growing imbalance: our ability to collect data has outpaced our ability to govern it responsibly.

This is more than a technical challenge; it is a privacy crisis. From predictive policing software to surveillance tools and automated license plate readers, data about individuals is being amassed, analyzed and acted upon at unprecedented speed. And yet, most citizens have no idea who owns their data, how it's used or whether it's being safeguarded.

I’ve seen this up close. As a former FBI Cyber Special Agent and now the CEO of a leading public safety tech company, I’ve worked across both the government and private sector. One thing is clear: if we don’t fix the way we handle data privacy now, AI will only make existing problems worse. And one of the biggest problems? Walled gardens.

What Are Walled Gardens, and Why Are They Dangerous in Public Safety?

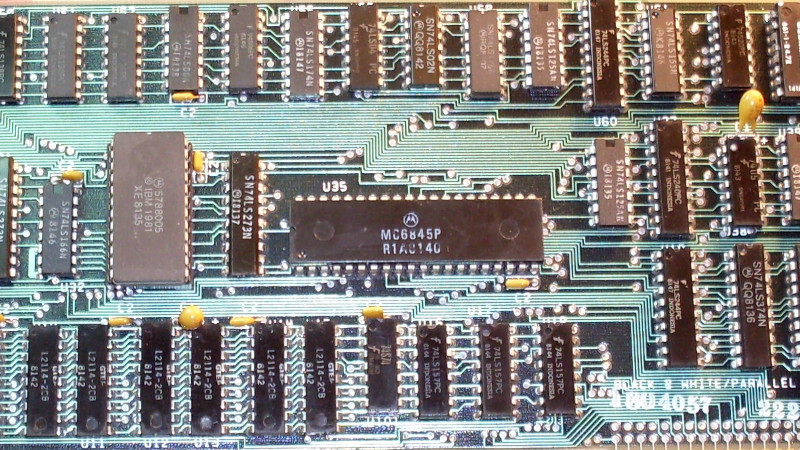

Walled gardens are closed systems in which one company controls the access, flow and usage of data. They're common in advertising and social media (think of platforms like Facebook, Google and Amazon), but increasingly they're showing up in public safety too.

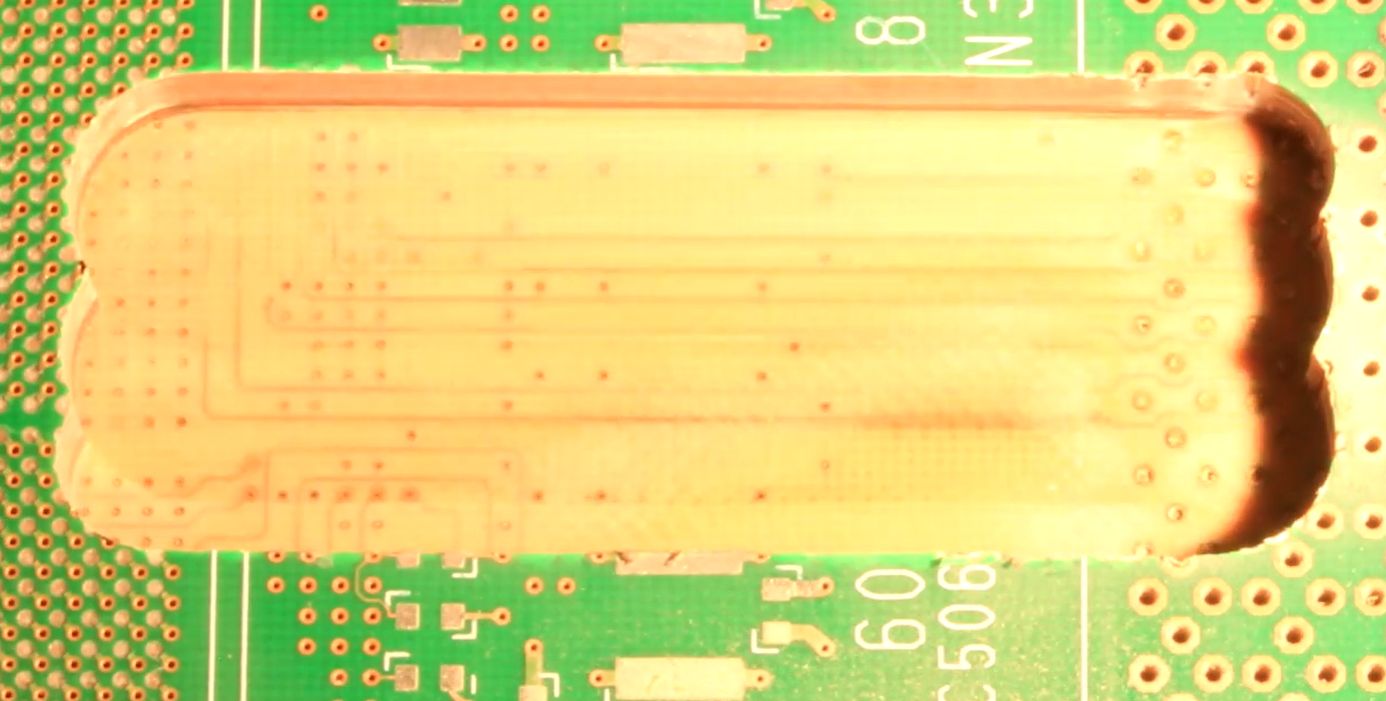

Public safety companies play a key role in modern policing infrastructure. However, the proprietary nature of some of these systems means they aren't always designed to interact fluidly with tools from other vendors.

These walled gardens may offer powerful functionality like cloud-based bodycam footage or automated license plate readers, but they also create a monopoly over how data is stored, accessed and analyzed. Law enforcement agencies often find themselves locked into long-term contracts with proprietary systems that don’t talk to each other. The result? Fragmentation, siloed insights and an inability to effectively respond in the community when it matters most.

The Public Doesn’t Know, and That’s a Problem

Most people don’t realize just how much of their personal information is flowing into these systems. In many cities, your location, vehicle, online activity and even emotional state can be inferred and tracked through a patchwork of AI-driven tools. These tools can be marketed as crime-fighting upgrades, but in the absence of transparency and regulation, they can easily be misused.

And the problem isn't just that the data exists; it's that it exists in walled ecosystems controlled by private companies with minimal oversight. License plate readers, for example, are now deployed in thousands of communities across the U.S., collecting data and feeding it into their vendors' proprietary networks. Police departments often don't even own the hardware; they rent it, which means the data pipeline, analysis and alerts are dictated by a vendor rather than by public consensus.

Why This Should Raise Red Flags

AI needs data to function. But when data is locked inside walled gardens, it can’t be cross-referenced, validated or challenged. This means decisions about who is pulled over, where resources go or who is flagged as a threat are being made based on partial, sometimes inaccurate information.

The risk? Poor decisions, potential civil liberties violations and a growing gap between police departments and the communities they serve. Transparency erodes. Trust evaporates. And innovation is stifled, because new tools can’t enter the market unless they conform to the constraints of these walled systems.

Consider a scenario in which a license plate recognition system incorrectly flags a vehicle as stolen based on outdated or shared data. Without the ability to verify that information across platforms or audit how the decision was made, officers may act on a false positive. We've already seen incidents where flawed technology led to wrongful arrests or escalated confrontations. These outcomes aren't hypothetical; they're happening in communities across the country.

What Law Enforcement Actually Needs

Instead of locking data away, we need open ecosystems that support secure, standardized and interoperable data sharing. That doesn’t mean sacrificing privacy. On the contrary, it’s the only way to ensure privacy protections are enforced.

Some platforms are working toward this. For example, FirstTwo offers real-time situational awareness tools that emphasize responsible integration of publicly available data. Others, like ForceMetrics, focus on combining disparate datasets such as 911 calls, behavioral health records and prior incident history to give officers better context in the field. Crucially, these systems are built with public safety needs and community respect as a priority, not an afterthought.

Building a Privacy-First Infrastructure

A privacy-first approach means more than redacting sensitive information. It means limiting access to data unless there is a clear, lawful need. It means documenting how decisions are made and enabling third-party audits. It means partnering with community stakeholders and civil rights groups to shape policy and implementation. Together, these steps strengthen both security and public legitimacy.

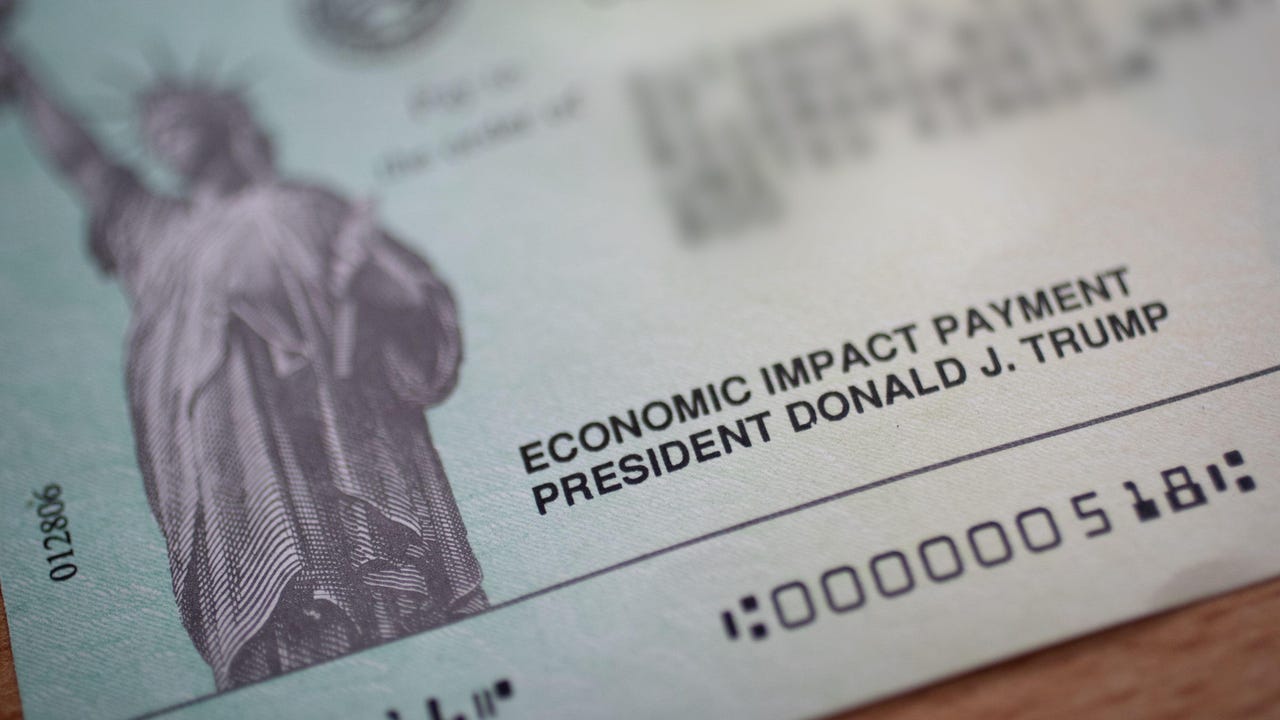

Despite the technological advances, we’re still operating in a legal vacuum. The U.S. lacks comprehensive federal data privacy legislation, leaving agencies and vendors to make up the rules as they go. Europe has GDPR, which offers a roadmap for consent-based data usage and accountability. The U.S., by contrast, has a fragmented patchwork of state-level policies that don’t adequately address the complexities of AI in public systems.

That needs to change. We need clear, enforceable standards around how law enforcement and public safety organizations collect, store and share data. And we need to include community stakeholders in the conversation. Consent, transparency and accountability must be baked into every level of the system, from procurement to implementation to daily use.

The Bottom Line: Without Interoperability, Privacy Suffers

In public safety, lives are on the line. The idea that one vendor could control access to mission-critical data and restrict how and when it’s used is not just inefficient. It’s unethical.

We need to move beyond the myth that innovation and privacy are at odds. Responsible AI means more equitable, effective and accountable systems. It means rejecting vendor lock-in, prioritizing interoperability and demanding open standards. Because in a democracy, no single company should control the data that decides who gets help, who gets stopped or who gets left behind.

The post How Walled Gardens in Public Safety Are Exposing America’s Data Privacy Crisis appeared first on Unite.AI.